9 Aspects That Might Surprise You

Building links to English content has been covered a million times. But what do we know about link building in non-English markets? Much of that knowledge tends to stay local.

There could be significant differences from what you’re used to. Success in one market doesn’t guarantee the same in another. Moreover, you’ll often run into link opportunities that violate Google’s guidelines, enticing you to overlook ethical link building practices to deliver results.

In this article, we’ll go through nine aspects of international link building with the help of four SEO and link building experts who collectively share insights from more than 20 global markets:

- Anna Podruczna – Link building team lead at eVisions International, with insights across Europe

- Andrew Prasatya – Head of content marketing at RevoU, with insights from Southeast Asia focusing on Indonesia

- Aditya Mishra – SEO and link building consultant, with insights from India

- Sebastian Galanternik – SEO manager at Crehana, with insights from South America focusing on Argentina

Huge thanks to everyone involved.

And we can start with the most controversial tactics right away…

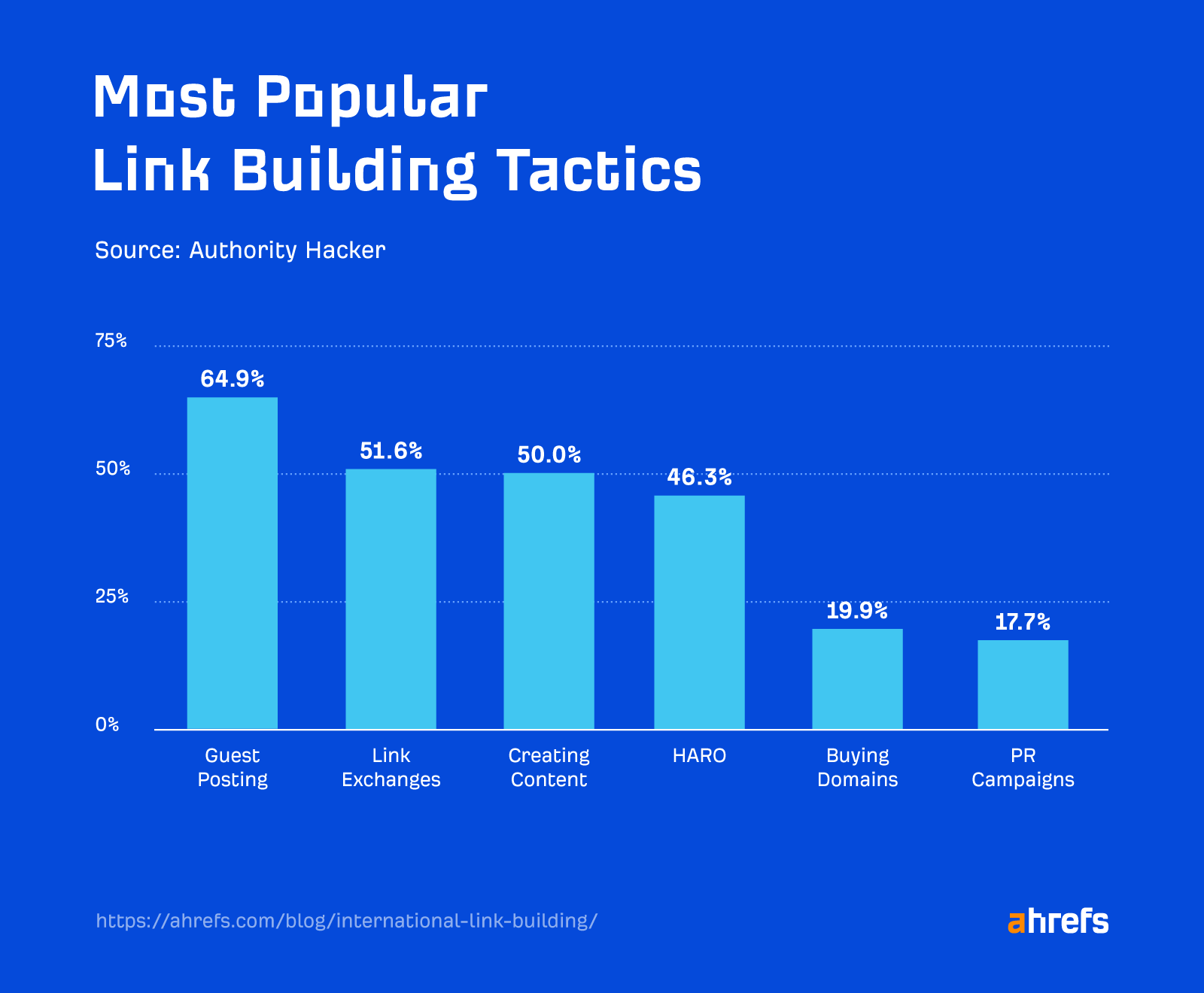

Let’s be honest—link buying is in the arsenal of many link builders. Aira’s annual survey reports that 31% of SEOs buy links. This number can jump to a whopping 74% for link builders, as shown in a recent survey by Authority Hacker.

That’s a huge number for English websites, but I’m convinced that this number is even higher for non-English content. Let me explain.

The fewer link prospecting opportunities there are, the less creative you can be when building links. You’ll waste resources trying to create fantastic link bait or launch ambitious PR campaigns in most niches in many smaller markets. Link buying often becomes an attractive (yet risky) tactic when your options get limited.

We gathered multiple insights on the topic of link buying across different markets:

Most bought links aren’t tagged as “sponsored” or “nofollow”

A compulsory reminder first:

Google considers buying or selling links for ranking purposes a link spam technique. In other words, the only acceptable form of link buying in the eyes of Google is when the link is clearly disclosed with a “nofollow” or “sponsored” link attribute.

But that’s different from what most link builders do. All contributors share this experience, and some practices go against Google’s guidelines even further.

For example, Anna shared that if you’re negotiating link buying in Poland, you sometimes have to pay more for a “followed” link (i.e., without link attributes). I’ve heard similar stories from others, but it’s unclear how widespread the practice is.

That said, it makes sense, as passing link equity is one of the main reasons we build links. On the other hand, it’s quite a paradox that you have to pay extra to get a link you could get punished for by Google.

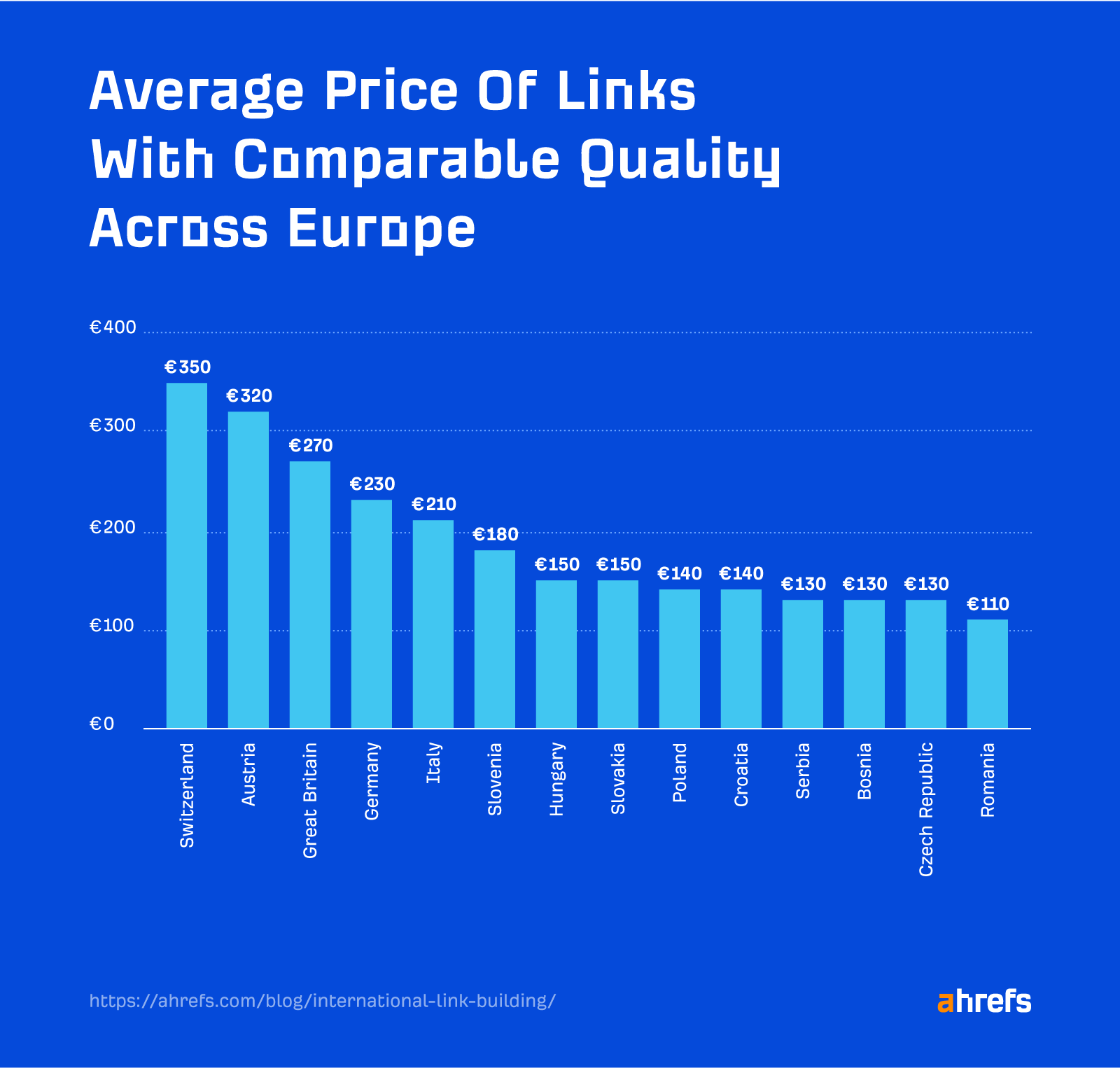

The average price per link is over €100 across all monitored countries in Europe

eVisions International, the agency where Anna works, is focused on expansion across many European markets. It keeps track of all the link offers and deals, so we were able to extract the average price per link in comparable niches for each country where it has a solid sample size (100+ links).

What surprised me is that there isn’t a single country with an average price below €100, considering the large economic disparity across European countries. For comparison, Authority Hacker reports an average price of $83, and our survey from a few years back resulted in a much higher $361 average cost.

Sidenote.

Different methodologies could play a huge role in the cost disparity. Neither of the cited surveys above segmented link cost by country. Mark Webster from Authority Hacker said he didn’t collect country/market data but that most of his respondents likely operate in English-speaking markets. Our study was focused on links in English only.

Regarding specific countries, Austria in second place stood out the most to me, as it’s not very expensive. Anna explained that it’s due mainly to the limited number of suitable Austrian websites you can build links from. So websites from Germany will likely be cheaper if you only care about links from German-language content.

Reselling links can be a common business practice

Aditya said that reselling links is popular in India. You have networks of link sellers with access to different websites for potential link placements. The same links can cost you more or less based on the reseller.

This overlaps with the everlasting use of PBNs, which are still popular in India.

Needless to say, websites involved in such unsophisticated link schemes can easily be the first target of link spam updates and SpamBrain.

Negotiating prices can make or break the deal

Some cultures thrive on negotiating prices in all aspects of their lives. Others are on the completely opposite side with one definitive fixed price.

Some of this is also reflected in the process of buying links. According to Anna, negotiations are expected in some regions, such as Poland and the Balkans. Still, more often than not, the price is the price, and there is little flexibility. You can make or break the deal with the same response in different countries.

Guest posting is one of the most popular link building tactics worldwide.

What makes this different in smaller markets is the popularity of paid guest posting opportunities. Aditya said that running an outreach campaign with “paid post” in the subject line in India can easily achieve a 20%+ conversion rate, including from established high-authority websites.

I’m also regularly seeing such opportunities in Czech/Slovak SEO groups, and other contributors reported that it’s common in their markets, too.

As expected, these paid article insertions aren’t usually tagged as sponsored, allowing link equity to pass (which is against Google’s guidelines).

Free guest posting then falls into two categories:

- You must show that you’re credible and authoritative enough in the niche to get the opportunity (well, that’s best practice anywhere in the world).

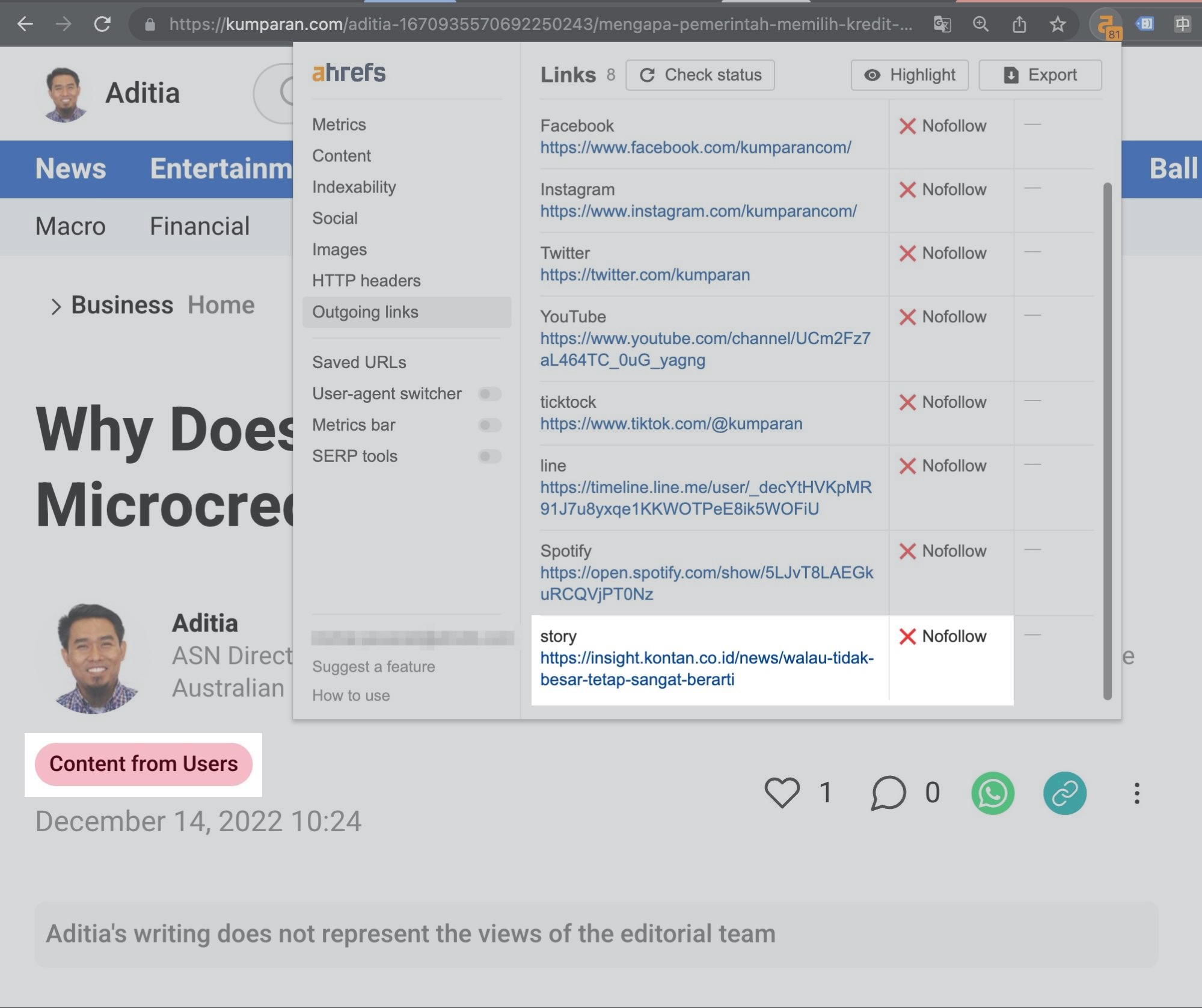

- You post content on websites that allow UGC. Andrew mentioned this is popular in Indonesia, especially on bigger media websites where it’s otherwise difficult to land coverage. The links are usually nofollowed, but the exposure and potential SEO benefits (think E-A-T, “nofollow” being a hint, etc.) are worth it.

Continuing on the topic of link and guest post buying, you may also come across “media packages.” It’s something large websites offer to close bigger deals and properly process selling their website space to other publishers.

Anna shared that this is common practice across most European countries. Aditya also reported a similar experience with India and explained that most of these packages come with strict terms and conditions, such as:

- The link will be live for one year only.

- The post will be clearly tagged as sponsored.

- The link will be “nofollow.”

- The article won’t be displayed on the homepage or the blog category page.

- Payment must be made in advance.

- Turnaround time will be more than a month.

As you can see, this is now perfectly “white hat.” Most links and articles you’d get this way would be tagged in line with Google’s guidelines.

Your success in link building largely depends on your communication and networking skills. Getting links and coverage gets much easier if you’ve built relationships with publishers and journalists in your niche and beyond.

This is often overlooked in the world of English content. Where do you even begin when there are so many websites, bloggers, and journalists? This is where the limited number of link prospects in smaller markets becomes an advantage.

Let’s take a look at a few implications.

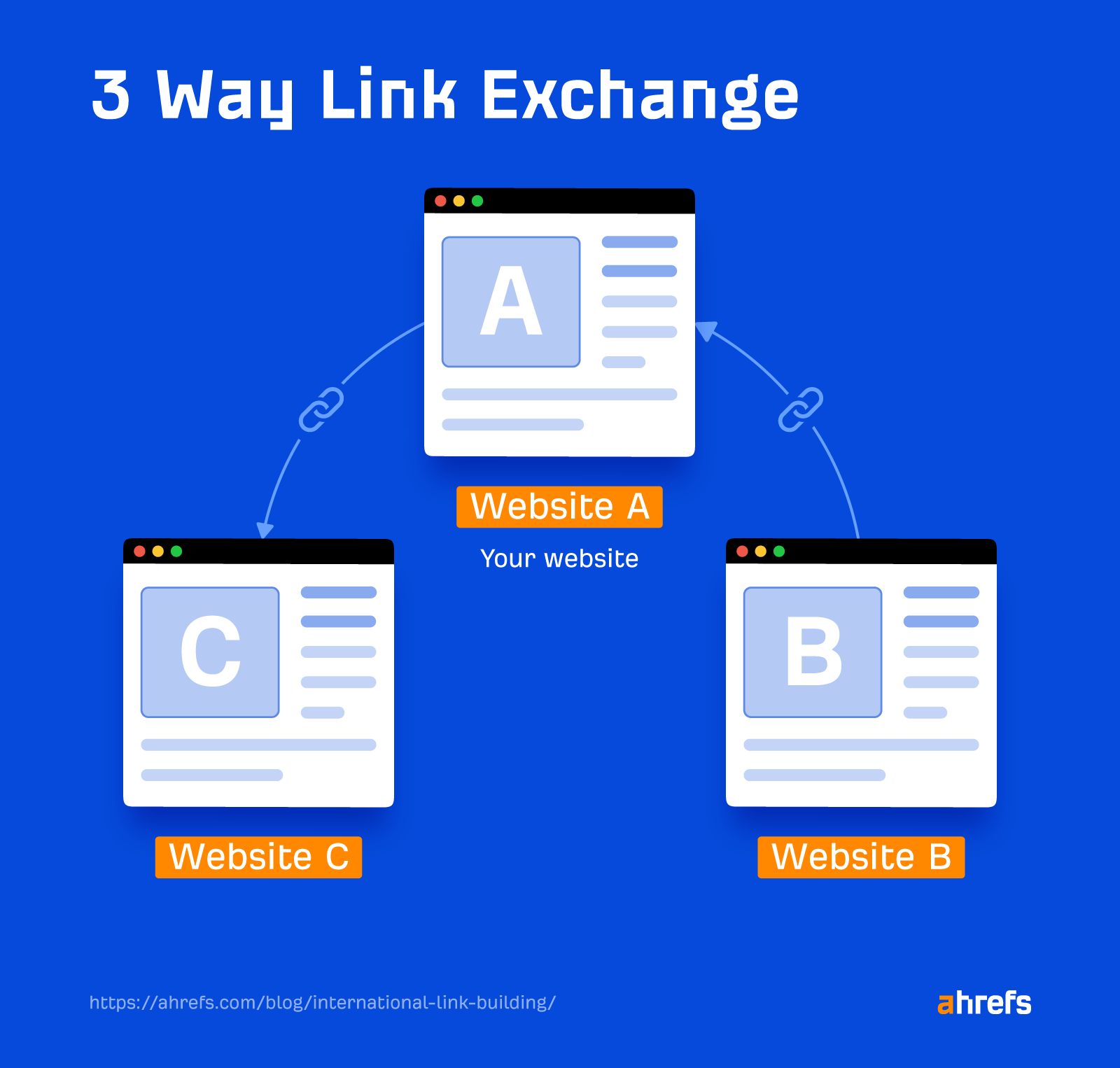

You can easily engage in more sophisticated link exchanges

Reciprocal linking is an outdated tactic that’s easy to spot for both link builders and search engines. But link exchanges are far from dead, and they can be highly effective if done well.

Three-way link exchanges (also known as ABC link exchanges) are thriving in many places across the world.

For example, Sebastian shared that many website owners in Argentina have multiple websites and that this type of link exchange is common practice. Aditya claimed that webmasters in India often have a second website for this purpose as well.

But there are still some markets where this is relatively unheard of. Anna listed the example of Balkan countries.

Keep in mind that this tactic is considered a link scheme and violates Google’s guidelines.

Building personal relationships is your best bet to land top-tier links

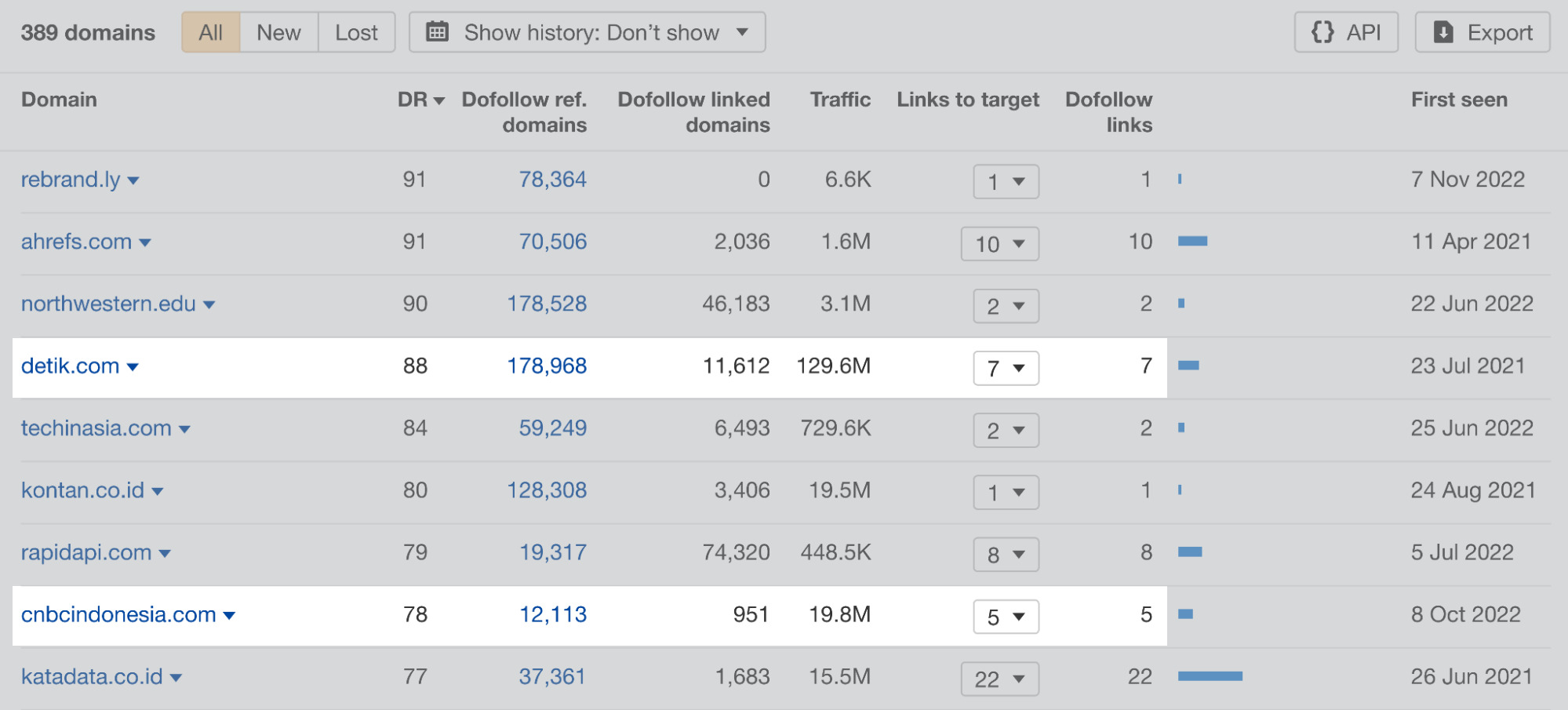

Andrew has experienced massive success by building relationships with journalists and editors in Indonesia. He claimed that knowing senior people from top-tier media websites helped him pitch stories that got the required attention to land the coverage and links.

You can attend industry events these people go to, but Andrew suggested that doing media visits is the most straightforward way to get the results.

Hiring local link building experts is highly recommended

Even though Anna achieved link building success while venturing into new markets, she recommended hiring local experts with valuable connections. This value proposition alone likely beats any skill you have as a link builder. She even tested this in the Balkans and had significantly lower success rates than the locals.

We’ve likely passed the period of some big media houses exclusively using the “nofollow” attribute for all external links. I believe the increasing use of “nofollow” for the sake of being on the safe side pushed Google to expand on the link attributes with “sponsored” and “ugc” and proclaim them all as hints rather than directives.

However, avoiding the risk of passing link equity where you shouldn’t is on a whole different level in some countries, namely Germany and Austria, in our sample.

Many German websites were hit by a link penalty in 2014, which profoundly affected the local (and Austrian) market. Anna reported that many websites in these two markets still link out only with “nofollow” links to this day.
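If you want to check how a prospect's pages tag their outbound links, a minimal Python sketch like the one below may help. It uses only the standard library's `html.parser` and looks for the three link attributes discussed in this article ("nofollow," "sponsored," and "ugc"). The class name, function name, and sample HTML are hypothetical and serve only as illustration. It is a rough audit aid under those assumptions, not a statement of how Google evaluates links.

```python
from html.parser import HTMLParser


class LinkRelAuditor(HTMLParser):
    """Collects every <a href> and the link attributes found in its rel value."""

    # The three rel values Google documents for qualifying outbound links.
    QUALIFIERS = {"nofollow", "sponsored", "ugc"}

    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, sorted list of matching rel tokens)

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # rel is a space-separated token list; compare case-insensitively.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        found = sorted(self.QUALIFIERS.intersection(rel_tokens))
        self.links.append((href, found))


def audit_links(html):
    """Return (href, qualifiers) pairs; an empty list means a "followed" link."""
    parser = LinkRelAuditor()
    parser.feed(html)
    return parser.links


# Hypothetical sample markup, not taken from any real website.
sample_html = """
<p>
  <a href="https://example.com/a">editorial</a>
  <a href="https://example.com/b" rel="nofollow">cautious</a>
  <a href="https://example.com/c" rel="sponsored noopener">paid</a>
  <a href="https://example.com/d" rel="UGC nofollow">comment</a>
</p>
"""

for href, qualifiers in audit_links(sample_html):
    label = ", ".join(qualifiers) if qualifiers else "followed"
    print(f"{href}: {label}")
```

Running this over a handful of articles from one publisher gives a quick sense of whether it links out with "nofollow" across the board, as Anna described for Germany and Austria, or only on specific placements.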

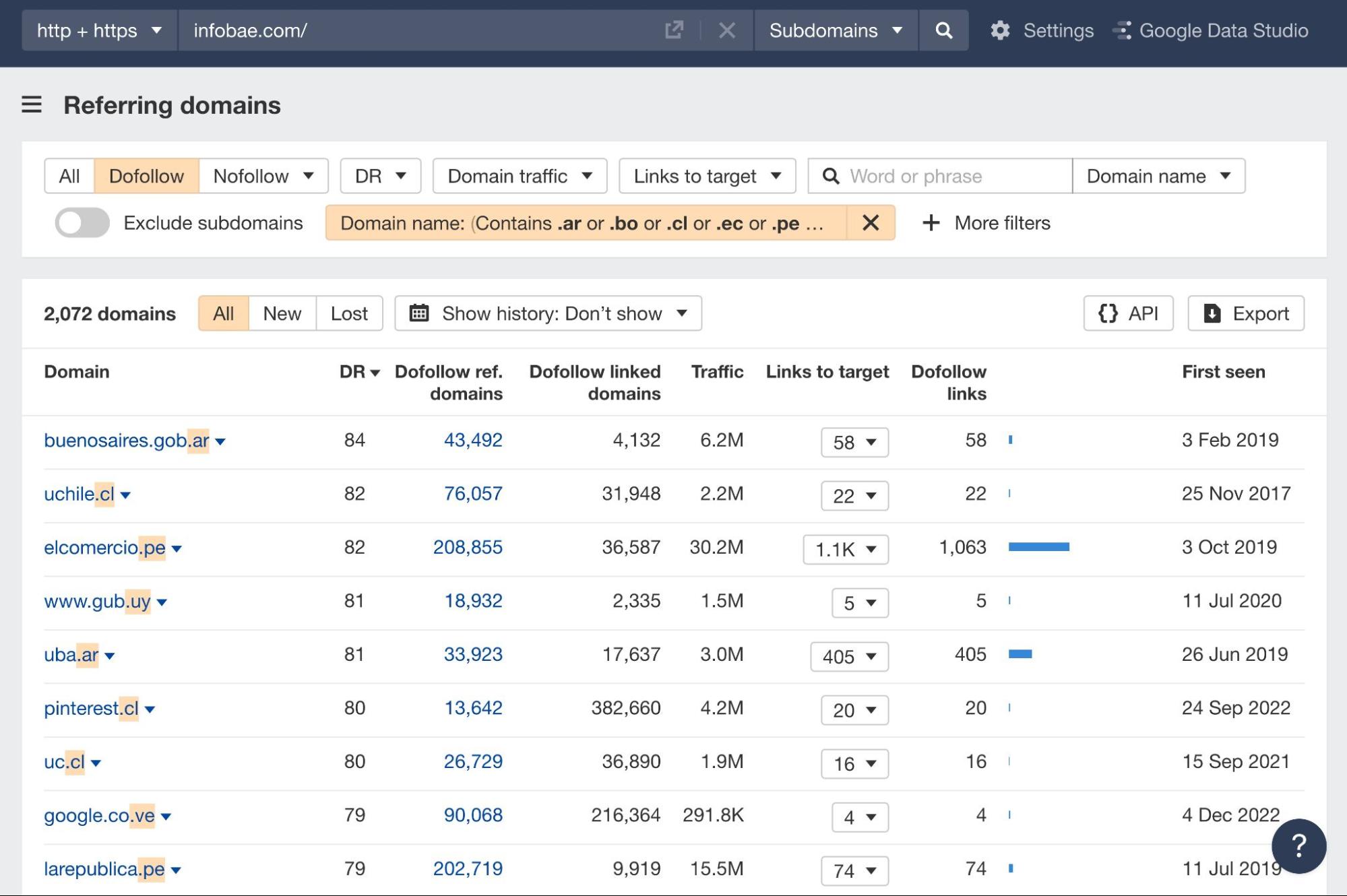

Your TLD choice can impact how your target audience perceives your website and brand. But Sebastian mentioned that this also affects how well your outreach is received.

In South America, having a gTLD instead of a ccTLD is important when building links beyond your home country. Many large Spanish-language sites in Argentina are on a .com domain, making their link building efforts easier.

For example, a Peruvian website is more likely to link to content on example.com than on example.ar. Argentinian links may still be more important in some cases, but the gTLD opens up many more opportunities.

If you add to that the benefits of PageRank consolidation and easier management and scalability, you have a pretty strong argument for using gTLDs for international SEO.

Doing localized outreach should have a higher success rate than sending people emails in foreign languages. That much is obvious. English should only be used when:

- It’s a common language in that country. Aditya mostly writes outreach emails to Indian websites in English because there are many local languages that aren’t mutually intelligible.

- You’re making a deal with media houses or agencies.

- You know that the other side is OK with using English.

But using the correct language is just a start for proper outreach localization. You should also adjust to local communication styles and some cultural specifics. This mainly translates to how formal the email is.

In Europe, Slavic cultures tend to use the most formal communication, but even that has its differences. Anna highlighted the Balkans as an area where you have to be formal. In contrast, you can get away with being a bit more casual in the Czech Republic or Slovakia.

This can get even more complicated in countries like Japan or South Korea, where you have complex honorific systems. A sentence said to a friend could be entirely different from the one you’d say to an older journalist you weren’t yet acquainted with—even if it had the same meaning.

On the other hand, there are also a lot of countries where informal and casual conversations win. This generally applies to countries where Romance languages are spoken. Anna shared her experience of having friendly and casual conversations, especially with Italian webmasters and editors.

Recommendation

Even if you know a language well, you should familiarize yourself with local terms to achieve the desired effect. Different spellings and choices of words make even English distinctive across different English-speaking countries. And it can get even more complicated than that with other languages (like Spanish, Portuguese, or Chinese).

Being on the same page as the outreach recipient is another key to success. Try to offer a “40+ DR 3W exchange” to the average Joe on the internet, and they’ll likely label it spam immediately.

Based on my conversations with contributors, there are some advanced markets where many webmasters are well-versed in link building, such as Poland, the Czech Republic, and Slovakia.

Many other markets seem to fall into the “somewhat knowledgeable” bracket. We can name Italy, India, and Argentina here. You should still be fine using SEO terms here and there from the start.

And lastly, there’s the “lack of link building knowledge” bracket, where Anna generally puts the Balkans and Germany. Here, you shouldn’t assume that the person receiving your outreach email has any solid knowledge of SEO.

Almost every guide about outreach is focused on writing emails. There’s nothing wrong with this; it’s many people’s preferred communication style. But that doesn’t apply everywhere in the world.

Andrew shared that while email is still good for first-time introductions in Indonesia, it’s much more effective to use apps and platforms like WhatsApp later on.

Andrew added that in other Southeast Asian countries like Thailand or Vietnam, email doesn’t really work at all. He recommended using social media like LinkedIn or, even better, Facebook Messenger to pitch your content.

I’ve had a similar experience in the Chinese market. Most people I got in touch with preferred to communicate over WeChat. That often involved exchanging voice messages, which brings me to the last point.

Calling someone as a part of your outreach process could get you far ahead of the competition. Anna said that leaving your phone contact so the other side can get in touch with you on a more personal level works well in Germany. She also reported success doing all aspects of her outreach over the phone in Poland. I know Czech link builders who also got links this way.

Final thoughts

As you can see, the path to link building success and what success even means locally can vastly differ from country to country.

Naturally, there are also a lot of similarities to the best link building practices you already know.

Great link bait still makes a fantastic asset that can land you links in top-tier media and websites. But getting the buy-in for carrying this out can be difficult in many niches, as the number of link prospects tends to be quite limited. Another tactic that seems to work well everywhere is ego baiting.

On the other hand, there are still a lot of people using outdated bad tactics. These include mass outreach to buy links with specific anchor texts, spamming UGC links, doing unsophisticated link exchanges, etc.

Got any questions or insights to share? Let me know on Twitter.
