A Visit to Where the Cloud Touches the Ground – WordPress.com News

Hi there! I’m Zander Rose and I’ve recently started at Automattic to work on long-term data preservation and the evolution of our 100-Year Plan. Previously, I directed The Long Now Foundation and worked on long-term archival projects like The Rosetta Project, and I have advised or partnered with organizations such as The Internet Archive, Archmission Foundation, GitHub Archive, Permanent, and Stanford Digital Repository. More broadly, I see the content of the Internet, and the open web in particular, as an irreplaceable cultural resource that should be able to last into the deep future, and my main task is to make sure that happens.

I recently took a trip to one of Automattic’s data centers to get a peek at what “the cloud” really looks like. As I was telling my family about what I was doing, it was interesting to note their perception of “the cloud” as a completely ephemeral thing. In reality, the cloud has a massive physical and energy presence, even if most people don’t see it on a day-to-day basis.

A trip to the cloud

Given the millions of sites hosted by Automattic, figuring out how all that data is currently served and stored was one of the first things I wanted to understand. I believe that the preservation of as many of these websites as possible will someday be seen as a massive historic and cultural benefit. For this reason, I was thankful to be included in a recent meetup for WordPress.com’s Explorers engineering team, which included a tour of one of Automattic’s data centers.

The tour began with a taco lunch where we met amazing Automatticians and data center hosts Barry and Eugene, from our world-class systems and operations team. These guys are data center ninjas and are deeply knowledgeable, humble, and clearly exactly who you would want caring about your data.

The data center we visited was built out in 2013 and was the first one in which Automattic owned and operated its servers and equipment, rather than farming that work out. Building out our own infrastructure gives us full control over every bit of data that comes in and out, and it reduces costs given the large amount of data stored and served. Automattic now has a worldwide network of 27 data centers that provide both proximity and redundancy of content to the users and the company itself.

The physical building we visited is run by a contracted provider, and after passing through many layers of security both inside and outside, we began the tour with the facility manager showing us the physical infrastructure. This building has multiple customers paying for server space, with Automattic being just one of them. The provider keeps technical staff on site who can help with maintenance or updates to the equipment, but, in general, the preference is for Automattic’s staff to be the only ones who touch the equipment, both for cost and security purposes.

The four primary things any data center provider needs to guarantee are uninterruptible power, cooling, data connectivity, and physical security/fire protection. The customer, such as Automattic, sets up racks of servers in the building and is responsible for that equipment, including how it ties into the power, cooling, and internet. This report is thus organized in that order.

Power

On our drive in, we saw the large power substation positioned right on campus (which includes many data center buildings, not just Automattic’s). Barry pointed out that this means not only that a massive amount of power is available to the campus, but also that the campus gets electrical feeds from both the east and west power grids, making for redundant power even at the utility level coming into the buildings.

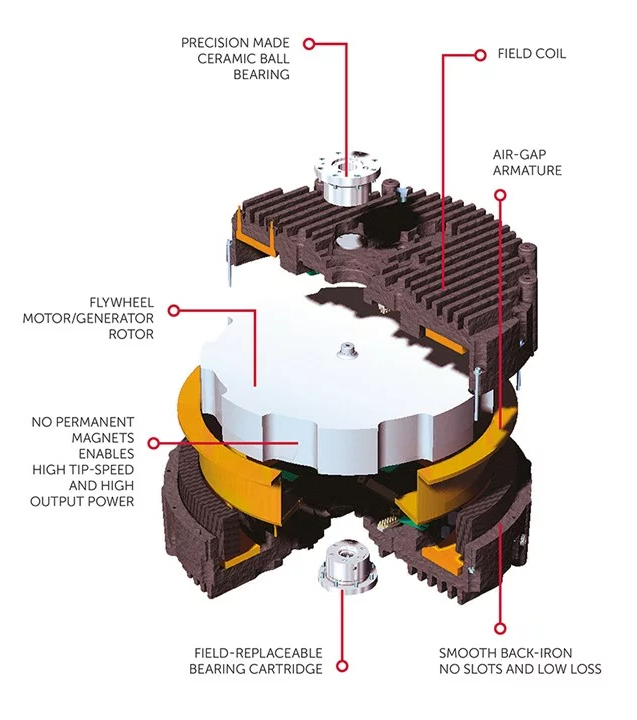

One of the more unusual things about this facility is that instead of battery-based instant backup power, it uses flywheel storage by Active Power. This is basically a series of refrigerator-sized boxes with 600-pound flywheels spinning at 10,000 RPM in a vacuum chamber on precision ceramic bearings. The flywheel acts as a motor most of the time, getting fed power from the network to keep it spinning. Then if the power fails, it switches to generator mode, pulling energy out of the flywheel to keep the power on for the 5 to 30 seconds it takes for the giant diesel generators outside to kick in.

Those generators are the size of semi-truck trailers and supply four megawatts each, fueled by 4,500-gallon diesel tanks. That may sound like a lot, but that basically gives them 48 hours of run time before needing more fuel. In the midst of a large disaster, there could be issues with road access and fuel shortages limiting the ability to refuel the generators, but in cases like that, our network of multiple data centers with redundant capabilities will still keep the data flowing.
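To put those fuel numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes one 4,500-gallon tank per generator and a steady load over the full 48 hours, neither of which was confirmed on the tour, and the function names are my own for illustration.

```python
# Figures quoted on the tour.
TANK_GALLONS = 4_500
RUNTIME_HOURS = 48


def average_burn_rate(tank_gallons: float, runtime_hours: float) -> float:
    """Average fuel use in gallons per hour implied by a tank size and run time."""
    return tank_gallons / runtime_hours


def hours_remaining(gallons_left: float, burn_rate: float) -> float:
    """Run time left at a constant burn rate (an assumption; real load varies)."""
    return gallons_left / burn_rate


burn_rate = average_burn_rate(TANK_GALLONS, RUNTIME_HOURS)
print(f"Implied average burn rate: {burn_rate:.2f} gal/h")  # 93.75 gal/h
half_tank = hours_remaining(TANK_GALLONS / 2, burn_rate)
print(f"Run time left on a half-full tank: {half_tank:.0f} h")  # 24 h
```

At roughly 94 gallons an hour, a single tank empties fast, which is why refueling access matters so much in a prolonged outage.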

Cooling

Depending on outside ambient temperatures, cooling is typically around 30% of the power consumption of a data center. The air chilling is done through a series of cooling units supplied by a system of saline water tanks out by the generators.

Barry and Eugene pointed out that without cooling, the equipment will very quickly (in less than an hour) try to lower its power consumption in response to the heat, causing a loss of performance. Barry also said that when machines start dropping performance radically, they are more difficult to manage than if they had simply shut off. But if the cooling comes back soon enough, throttling allows for faster recovery than if the hardware were fully shut off.

Handling the cooling in a data center is a complicated task, but this is one of the core responsibilities of the facility, which they handle very well and with a fair amount of redundancy.

Data connectivity

Data centers can vary in terms of how they connect to the internet. This center allows for multiple providers to come into a main point of entry for the building.

Automattic brings in at least two providers to create redundancy, so every piece of equipment should be able to get power and internet from two or more sources at all times. This connectivity comes into Automattic’s equipment over fiber via overhead raceways that are separate from the power and cooling in the floor. From there it goes into two routers, each connected to all the cabinets in that row.

Server area

As mentioned earlier, this data center is shared among several tenants. This means that each one sets up its own last line of physical security. Some lease an entire data hall to themselves, or use a cage around their equipment; some take it even further by obscuring the equipment so you cannot see it, as well as extending the cage through the subfloor another three feet down so that no one could get in by crawling through that space.

Automattic’s machines took up the central portion of the data hall we were in, with some room to grow. We started this portion of the tour in the “office” that Automattic also rents, both to store spare parts and equipment and to provide a quiet place to work. On this tour it became apparent that working in the actual server rooms is far from ideal. With all the fans and cooling, the rooms are both loud and cold, so in general you want to do as much work outside of there as possible.

What was also interesting about this space is that it showed all the generations of equipment and hard drives that have to be kept up simultaneously. It is not practical to assume that a given generation of hard drives or even connection cables will be available for more than a few years. In general, the plan is to keep all hardware using identical memory, drives, and cables, but that is not always possible. As we saw in the server racks, there is equipment from 2013 still running, but it will likely have to be completely swapped out in the near future.

Barry also pointed out that different drive tech is used for different types of data. Images are stored on spinning hard drives (which are the cheapest by size, but have moving parts and so need replacing more often), and the longer-lasting solid state disk (SSD) and Non-Volatile Memory Express (NVMe) technology are used for other roles like caching and databases, where speed and performance are most important.

Barry explained that data at Automattic is stored in multiple places in the same data center, and redundantly again at several other data centers. Even with that much redundancy, a further copy is stored on an outside backup. Each one of the centers Automattic uses has a method of separation, so it is difficult for a single bug to propagate between different facilities. In the last decade, there’s only been one instance where the outside backup had to come into play, and it was for six images. Still, Barry noted that there can never be too many backups.
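To see why both the number of copies and the separation between facilities matter, here is a minimal sketch in Python. It is a toy model, not how Automattic measures risk: the failure probabilities are made-up numbers for illustration, the function name is my own, and it assumes copies fail independently unless a single shared event (such as a bug that propagates everywhere) takes out all of them at once.

```python
def loss_probability(per_copy_failure: float, copies: int,
                     common_mode: float = 0.0) -> float:
    """Chance that every copy of a piece of data is lost.

    per_copy_failure: chance that any one copy is lost (hypothetical).
    common_mode: chance that one shared event destroys all copies at once.
    Outside of that shared event, copies are assumed to fail independently.
    """
    independent_loss = per_copy_failure ** copies
    return common_mode + (1.0 - common_mode) * independent_loss


# Hypothetical numbers, for illustration only.
P_COPY = 0.01

for copies in (1, 2, 4):
    print(f"{copies} copies: {loss_probability(P_COPY, copies):.2e}")

# A small shared failure mode dominates, no matter how many copies exist.
print(f"4 copies, shared bug: {loss_probability(P_COPY, 4, common_mode=0.001):.2e}")
```

In this model, adding copies shrinks the risk quickly, but only as long as nothing can damage every copy at the same time. That is the case for keeping facilities separated and holding one backup entirely outside the system.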

An infrastructure for the future

And with that, we concluded the tour and I would soon head off to the airport to fly home. The last question Barry asked me was if I thought this would all be around in 100 years. My answer was that something like it most certainly will, but that it would look radically different, and may be situated in parts of the world with more sustainable cooling and energy, as more of the world gets large bandwidth connections.

As I thought about the project of getting all this data to last into the deep future, I was very impressed by what Automattic has built, and believe that as long as business continues as normal, the data is incredibly safe. However, on the chance that things do change, I think developing partnerships with organizations like The Internet Archive, Permanent.org, and perhaps national libraries or large universities will be critically important to help make sure the content of the open web survives well into the future. We could also look at some of the long-term storage systems that store data without the need for power, as well as systems that cannot be changed in the future (as we wonder if AI and censorship may alter what we know to be “facts”). For this, we could look at stable optical systems like Piql, Project Silica, and Stampertech. It breaks my heart to think the world would have created all this, only for it to be lost. I think we owe it to the future to make sure as much of it as possible has a path to survive.
