A Complete Guide To the Google Penguin Algorithm Update

Ten years have passed since Google introduced the Penguin algorithm and took a stronger stance on manipulative link-building practices.

The algorithm has since been updated several times and now runs in real time as part of Google’s core algorithm. As a result, penalties have become less common, but they still exist in both partial and site-wide forms.

For the most part, Google claims to ignore a lot of poor-quality links online, but is still alert and monitoring for unnatural patterns such as link schemes, PBNs, link exchanges, and unnatural outbound linking patterns.

The Introduction Of Penguin

In 2012, Google officially launched the “webspam algorithm update,” which specifically targeted link spam and manipulative link-building practices.

The webspam algorithm later became known (officially) as the Penguin algorithm update via a tweet from Matt Cutts, who was then head of the Google webspam team.

While Google officially named the algorithm Penguin, there is no official word on where this name came from.

The Panda algorithm name came from one of the key engineers involved with it, and it’s more than likely that Penguin originated from a similar source.

One of my favorite Penguin naming theories is that it pays homage to The Penguin, from DC’s Batman.

Minor weather report: We pushed 1st Penguin algo data refresh an hour ago. Affects <0.1% of English searches. Context: http://t.co/ztJiMGMi

— Matt Cutts (@mattcutts) May 26, 2012

Prior to the Penguin algorithm, link volume played a larger part in determining a webpage’s scoring when crawled, indexed, and analyzed by Google.

This meant when it came to ranking websites by these scores for search results pages, some low-quality websites and pieces of content appeared in more prominent positions in the organic search results than they should have.

Why Google Penguin Was Needed

Google’s war on low-quality content started with the Panda algorithm, and Penguin was an addition to the arsenal for fighting that war.

Penguin was Google’s response to the increasing practice of manipulating search results (and rankings) through black hat link building techniques.

Cutts, speaking at the SMX Advanced 2012 conference, said:

We look at it as something designed to tackle low-quality content. It started out with Panda, and then we noticed that there was still a lot of spam and Penguin was designed to tackle that.

The algorithm’s objective was to gain greater control over, and reduce the effectiveness of, a number of black hat spamming techniques.

By better understanding and processing the types of links websites and webmasters were earning, Penguin worked toward ensuring that natural, authoritative, and relevant links rewarded the websites they pointed to, while manipulative and spammy links were downgraded.

Penguin only deals with a site’s incoming links. It looks at the links pointing to the site in question and does not look at the outgoing links from that site at all.

Initial Launch & Impact

When Penguin first launched in April 2012, it affected more than 3% of search results, according to Google’s own estimations.

Penguin 2.0, the fourth update to the algorithm (including the initial launch), was released in May 2013 and affected roughly 2.3% of all queries.

On launch, Penguin was said to have targeted two manipulative practices in particular: link schemes and keyword stuffing.

Link schemes are the umbrella term for manipulative link building practices, such as exchanges, paying for links, and other unnatural link practices outlined in Google’s link scheme documentation.

Penguin’s initial launch also took aim at keyword stuffing, which has since become associated with the Panda algorithm (which is thought of as more of a content and site quality algorithm).

Key Google Penguin Updates & Refreshes

There have been a number of updates and refreshes to the Penguin algorithm since it was launched in 2012, and possibly a number of other tweaks that have gone down in history as unknown algorithm updates.

Google Penguin 1.1: May 26, 2012

This wasn’t a change to the algorithm itself, but the first refresh of the data within it.

In this instance, websites that had initially been affected by the launch and had been proactive in clearing up their link profiles saw some recovery, while others who hadn’t been caught by Penguin the first time round saw an impact.

Google Penguin 1.2: October 5, 2012

This was another data refresh. It affected queries in the English language, as well as international queries.

Weather report: Penguin data refresh coming today. 0.3% of English queries noticeably affected. Details: http://t.co/Esbi2ilX

— Scary Matt Cutts (@mattcutts) October 5, 2012

Google Penguin 2.0: May 22, 2013

This was a more technically advanced version of the Penguin algorithm and changed how the algorithm impacted search results.

Penguin 2.0 impacted around 2.3% of English queries, as well as other languages proportionately.

This was also the first Penguin update to look deeper than the website’s homepage and top-level category pages for evidence of link spam being directed to the website.

Google Penguin 2.1: October 4, 2013

The only refresh to Penguin 2.0 (2.1) came on October 4 of the same year. It affected about 1% of queries.

While there was no official explanation from Google, data suggests that the 2.1 data refresh also went further in how deeply Penguin looked into a website, with further analysis of whether spammy links were present.

Google Penguin 3.0: October 17, 2014

While this was billed as a major update, it was, in fact, another data refresh. It allowed those impacted by previous updates to emerge and recover, while many others who had continued to use spammy link practices and had escaped the previous updates saw an impact.

Googler Pierre Far confirmed this in a post on his Google+ profile, adding that the update would take a “few weeks” to roll out fully.

Far also stated that this update affected less than 1% of English search queries.

Google Penguin 4.0: September 23, 2016

Almost two years after the 3.0 refresh, the final Penguin algorithm update was launched.

The biggest change with this iteration was that Penguin became a part of the core algorithm.

When an algorithm becomes a part of the core, it doesn’t mean that the algorithm’s functionality has changed or may change dramatically again.

It means that Google’s perception of the algorithm has changed, not the algorithm itself.

Now running concurrently with the core, Penguin evaluates websites and links in real time. This means that you can see (reasonably) instant impacts of your link building or remediation work.

The new Penguin also no longer handed out link-based penalties, but rather devalued the links themselves. This is in contrast to previous Penguin iterations, where sites with bad links were punished.

That being said, studies and personal experience suggest that algorithmic penalties relating to backlinks still do exist.

Data released by SEO professionals (e.g., Michael Cottam), as well as algorithmic downgrades being lifted through disavow files after Penguin 4.0, reinforce this belief.

Google Penguin Algorithmic Downgrades

Soon after the Penguin algorithm was introduced, webmasters and brands who had used manipulative link building techniques or filled their backlink profiles with copious amounts of low-quality links began to see decreases in their organic traffic and rankings.

Not all Penguin downgrades were site-wide – some were partial and only affected certain keyword groups that had been heavily spammed and over-optimized, such as key products and in some cases even brands.

A website impacted by a Penguin penalty, which took 17 months to lift.

The impact of Penguin can also pass between domains, so changing domains and redirecting the old one to the new can cause more problems in the long run.

Experiments and research show that using a 301 or 302 redirect won’t remove the effect of Penguin, and in the Google Webmasters Forum, John Mueller confirmed that using a meta refresh from one domain to a new domain could also cause complications.

In general, we recommend not using meta-refresh type redirects, as this can cause confusion with users (and search engine crawlers, who might mistake that for an attempted redirect).

Google Penguin Recovery

The disavow tool has been an asset to SEO practitioners, and this hasn’t changed even now that Penguin exists as part of the core algorithm.

As you would expect, there have been studies and theories published claiming that disavowing links doesn’t, in fact, do anything to help with link-based algorithmic downgrades and manual actions, but this theory has been shot down publicly by Google representatives.

@joshbachynski disavows can help for Penguin.

— Matt Cutts (@mattcutts) June 28, 2013

That being said, Google recommends that the disavow tool should only be used as a last resort when dealing with link spam, as disavowing a link is a lot easier (and a quicker process in terms of its effect) than submitting reconsideration requests for good links.

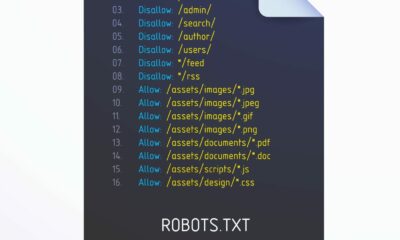

What To Include In A Disavow File

A disavow file is a file you submit to Google that tells them to ignore all the links included in the file so that they will not have any impact on your site.

The result is that the negative links will no longer cause negative ranking issues with your site, such as with Penguin.

But, it does also mean that if you erroneously included high-quality links in your disavow file, those links will no longer help your ranking.

You do not need to include any notes in your disavow file unless they are strictly for your reference. It is fine just to include the links and nothing else.

Google does not read any of the notations you have made in your disavow file, as they process it automatically without a human ever reading it.

Some find it useful to add internal notations, such as the date a group of URLs was added to the disavow file or comments about their attempts to reach the webmaster about getting a link removed.
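As a rough illustration of what such a file can look like, here is a minimal sketch that assembles one. It assumes the plain-text layout Google documents for the disavow tool (one entry per line, a `domain:` prefix for whole domains, and `#` for comment lines). The function name `build_disavow` and the example domains are hypothetical.

```python
def build_disavow(domains, urls, note=None):
    """Return disavow file text: an optional '#' note, then domain and URL entries."""
    lines = []
    if note:
        # Lines starting with '#' are comments kept only for your own reference.
        lines.append(f"# {note}")
    # Domain-level entries use the 'domain:' prefix.
    lines.extend(f"domain:{d}" for d in sorted(set(domains)))
    # Individual links are listed as full URLs, one per line.
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


text = build_disavow(
    domains=["spammy-directory.example"],
    urls=["https://news.example/paid-post.html"],
    note="Added 2017-09-01: owner did not respond to removal request",
)
print(text)
```

The note line is optional; dropping it leaves a file containing only the links, which is all that gets processed.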

Once you have uploaded your disavow file, Google will send you a confirmation.

But while Google will process it immediately, it will not immediately discount those links. So, you will not instantly recover from submitting the disavow alone.

Google still needs to go out and crawl those individual links you included in the disavow file, but the disavow file itself will not prompt Google to crawl those pages specifically.

Also, there is no way to determine which links have been discounted and which ones have not been, as Google will still include both in your linking report in Google Search Console.

If you have previously submitted a disavow file to Google, they will replace that file with your new one, not add to it.

So, it is important to make sure that if you have previously disavowed links, you still include those links in your new disavow file.

You can always download a copy of the current disavow file in Google Search Console.
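Because a new upload replaces the old file, one way to avoid losing earlier entries is to merge the downloaded copy with your new entries before uploading. The sketch below is one possible approach, not an official workflow; `merge_disavow` is a hypothetical helper that works on the file's text and drops exact duplicates.

```python
def merge_disavow(previous_text, new_entries):
    """Combine a previously submitted file with new entries, dropping exact duplicates."""
    merged = []
    seen = set()
    for line in list(previous_text.splitlines()) + list(new_entries):
        entry = line.strip()
        if not entry or entry in seen:
            # Skip blank lines and anything already carried over.
            continue
        seen.add(entry)
        merged.append(entry)
    return "\n".join(merged) + "\n"


previous = "# batch 1\ndomain:spam.example\n"
merged = merge_disavow(previous, ["domain:spam.example", "domain:links.example"])
print(merged)
```

Entries from the earlier file come first and keep their order, so existing notes stay next to the links they describe.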

Disavowing Individual Links vs. Domains

It is recommended that you choose to disavow links on a domain level instead of disavowing the individual links.

There will be some cases where you will want to disavow specific links individually, such as on a major site that has a mix of quality and paid links.

But for the majority of links, you can do a domain-based disavow.

Google only needs to crawl one page on that site for that link to be discounted on your site.

Doing domain-based disavows also means that you do not have to worry about those links being indexed as www or non-www, as the domain-based disavow will take this into account.
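If your audit produced a list of individual spammy URLs, collapsing them into domain-level entries can be sketched as follows. This is a simplification under stated assumptions: `to_domain_entries` is a hypothetical helper, it only strips a leading `www.`, and it treats any other subdomain as its own host.

```python
from urllib.parse import urlsplit


def to_domain_entries(urls):
    """Collapse individual link URLs into unique 'domain:' disavow entries."""
    domains = set()
    for url in urls:
        host = urlsplit(url).hostname  # lowercased host, or None if the URL has none
        if not host:
            continue
        if host.startswith("www."):
            # Treat www and non-www as the same domain.
            host = host[4:]
        domains.add(host)
    return [f"domain:{d}" for d in sorted(domains)]


entries = to_domain_entries([
    "http://www.spam.example/page-1",
    "https://spam.example/page-2",
    "https://Links.example/widgets",
])
print(entries)
```

Links from a major site with a mix of good and paid links should be kept out of this step and listed individually instead.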

Finding Your Backlinks

If you suspect your site has been negatively impacted by Penguin, you need to do a link audit and remove or disavow the low-quality or spammy links.

Google Search Console includes a list of backlinks for site owners, but be aware that it also includes links that are already nofollowed.

If the link is nofollowed, it will not have any impact on your site. But keep in mind that the site could remove that nofollow in the future without warning.

There are also many third-party tools that will show links to your site, but because some websites block those third-party bots from crawling their site, they will not be able to show you every link pointing to your site.

And while some of the sites blocking these bots are high-quality, well-known sites that don’t want to waste bandwidth on those bots, blocking is also used by some spammy sites to hide their low-quality links from being reported.

Monitoring backlinks is also an essential task, as sometimes the industry we work in isn’t entirely honest and negative SEO attacks can happen. That’s when a competitor buys spammy links and points them to your site.

Many use “negative SEO” as an excuse when their site gets caught by Google for low-quality links.

However, Google has said they are pretty good about recognizing this when it happens, so it is not something most website owners need to worry about.

This also means that proactively using the disavow feature without a clear sign of an algorithmic penalty or a notification of a manual action is a good idea.

Interestingly, however, a poll conducted by SEJ in September 2017 found that 38% of SEOs never disavow backlinks.

Going through a backlink profile, and scrutinizing each linking domain as to whether it’s a link you want or not, is not a light task.

Link Removal Outreach

Google recommends that you first reach out to the websites and webmasters where the bad links originate and request their removal before you start disavowing them.

Some site owners demand a fee to remove a link.

Google recommends never paying for link removals. Just include those links in your disavow file instead and move on to the next link removal.

While outreach is an effective way to recover from a link-based penalty, it isn’t always necessary.

The Penguin algorithm also takes into account the link profile as a whole, and the volume of high-quality, natural links versus the number of spammy links.

In the case of a partial penalty (impacting over-optimized keywords), the algorithm may still affect you, but the essentials of backlink maintenance and monitoring should keep you covered.

Some webmasters even go as far as including “terms” within the terms and conditions of their website and actively reaching out to websites they don’t feel should be linking to them:

Website terms and conditions regarding linking to the website in question.

Assessing Link Quality

Many have trouble when assessing link quality.

Don’t assume that a link is high-quality just because it comes from a .edu site.

Plenty of students sell links from their personal websites on those .edu domains, and these links are extremely spammy and should be disavowed.

Likewise, there are plenty of hacked sites within .edu domains that have low-quality links.

Do not make judgments strictly based on the type of domain. Just as you can’t make automatic assumptions about .edu domains, the same applies to all TLDs and ccTLDs.

Google has confirmed that just being on a specific TLD does not help or hurt the search rankings. But you do need to make individual assessments.

There is a long-running joke about how there’s never been a quality page on a .info domain because so many spammers were using them, but in fact, there are some great quality links coming from that TLD, which shows why individual assessment of links is so important.

Beware Of Links From Presumed High-Quality Sites

Don’t look at the list of links and automatically consider links from specific websites as being a great quality link, unless you know that very specific link is high quality.

Just because you have a link from a major website such as Huffington Post or the BBC does not make that an automatic high-quality link in the eyes of Google – if anything, you should question it more.

Many of those sites are also selling links, albeit some disguised as advertising or done by a rogue contributor selling links within their articles.

That links from these high-quality sites can actually be low-quality has been confirmed by many SEOs who have received link manual actions in which Google’s examples included links from these sites. And yes, they could likely be contributing to a Penguin issue.

As advertorial content increases, we are going to see more and more links like these get flagged as low-quality.

Always investigate links, especially if you are considering not removing any of them simply based on the site the link is from.

Promotional Links

As with advertorials, you need to think about any links that sites may have pointed to you that could be considered promotional links.

Paid links do not always mean money is exchanged for the links.

Examples of promotional links that are technically paid links in Google’s eyes are any links given in exchange for a free product for review or a discount on products.

While these types of links were fine years ago, they now need to be nofollowed.

You will still get the value of the link, but instead of it helping rankings, it would be through brand awareness and traffic.

You may have links out there from a promotional campaign done years ago that are now negatively impacting a site.

For all these reasons, it is vitally important to individually assess every link. You want to remove the poor-quality links because they are affecting how Penguin treats your site or could cause a future manual action.

But, you do not want to remove the good links, because those are the links that are helping your rankings in the search results.

Promotional links that are not nofollowed can also trigger the manual action for outgoing links on the site that placed those links.

No Penguin Recovery In Sight?

Sometimes after webmasters have gone to great lengths to clean up their link profiles, they still don’t see an increase in traffic or rankings.

There are a number of possible reasons behind this, including:

- The initial traffic and ranking boost seen prior to the algorithmic penalty was unjustified (and likely short-term) and came from the bad backlinks.

- When links have been removed, no efforts have been made to gain new backlinks of greater value.

- Not all the negative backlinks have been disavowed, or not a high enough proportion of the negative backlinks has been removed.

- The issue wasn’t link-based to begin with.

When you recover from Penguin, don’t expect your rankings to go back to where they used to be before Penguin, nor for the return to be immediate.

Far too many site owners are under the impression that they will immediately begin ranking at the top for their top search queries once Penguin is lifted.

First, some of the links that you disavowed were likely contributing to an artificially high ranking, so you cannot expect those rankings to be as high as they were before.

Second, because many site owners have trouble assessing the quality of the links, some high-quality links inevitably get disavowed in the process, links that were contributing to the higher rankings.

Add to the mix the fact Google is constantly changing its ranking algorithm, so factors that benefited you previously might not have as big of an impact now, and vice versa.

Google Penguin Myths & Misconceptions

One of the great things about the SEO industry and those involved in it is that it’s a very active and vibrant community, and there are always new theories and experiment findings being published online daily.

Naturally, this has led to a number of myths and misconceptions being born about Google’s algorithms. Penguin is no different.

Here are a few myths and misconceptions about the Penguin algorithm we’ve seen over the years.

Myth: Penguin Is A Penalty

One of the biggest myths about the Penguin algorithm is that it is a penalty (or what Google refers to as a manual action).

Penguin is strictly algorithmic in nature. It cannot be lifted by Google manually.

Despite the fact that an algorithmic change and a penalty can both cause a big downturn in website rankings, there are some pretty drastic differences between them.

A penalty (or manual action) happens when a member of Google’s webspam team has responded to a flag, investigated, and felt the need to enforce a penalty on the domain.

You will receive a notification through Google Search Console relating to this manual action.

When you get hit by a manual action, not only do you need to review your backlinks and submit a disavow for the spammy ones that go against Google’s guidelines, but you also need to submit a reconsideration request to the Google webspam team.

If successful, the penalty will be revoked; and if unsuccessful, it’s back to reviewing the backlink profile.

A Penguin downgrade happens without any involvement of a Google team member. It’s all done algorithmically.

Previously, you would have to wait for a refresh or algorithm update, but now, Penguin runs in real-time so recoveries can happen a lot faster (if enough remediation work has been done).

Myth: Google Will Notify You If Penguin Hits Your Site

Another myth about the Google Penguin algorithm is that you will be notified when it has been applied.

Unfortunately, this isn’t true. Search Console won’t notify you that your rankings have taken a dip because of the application of Penguin.

Again, this shows the difference between an algorithm and a penalty – you would be notified if you were hit by a penalty.

However, the process of recovering from Penguin is remarkably similar to that of recovering from a penalty.

Myth: Disavowing Bad Links Is The Only Way To Reverse A Penguin Hit

While this tactic will remove a lot of the low-quality links, it is extremely time-consuming and a potential waste of resources.

Google Penguin looks at the percentage of good quality links compared to those of a spammy nature.

So, rather than focusing on manually removing those low-quality links, it may be worth focusing on increasing the number of quality links your website has.

This will have a better impact on the percentage Penguin takes into account.
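The actual weighting Penguin uses is not public, so the following is only a back-of-the-envelope illustration of the percentage argument above. The function `quality_share` and all link counts are made up for the example.

```python
def quality_share(good_links, spammy_links):
    """Fraction of a link profile that is good quality (illustrative only)."""
    total = good_links + spammy_links
    return good_links / total if total else 0.0


# Hypothetical profile: 200 good links and 800 spammy ones.
baseline = quality_share(200, 800)
after_removal = quality_share(200, 400)  # half of the spammy links removed
after_earning = quality_share(600, 800)  # 400 new good links earned instead

print(f"{baseline:.0%} {after_removal:.0%} {after_earning:.0%}")
```

With these made-up numbers, the share of good links moves from 20% to about 33% after removals, and to about 43% after earning new links. Different starting numbers can easily reverse that ordering, so treat it as a way to reason about your own profile rather than a rule.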

Myth: You Can’t Recover From Penguin

Yes, you can recover from Penguin.

It is possible, but it will require some experience in dealing with the fickle nature of Google algorithms.

The best way to shake off the negative effects of Penguin is to forget all of the existing links pointing to your website, and begin to gain original, editorially given links.

The more of these quality links you gain, the easier it will be to release your website from the grip of Penguin.

Featured Image: Paulo Bobita/Search Engine Journal
