SEO

20 Essential Technical SEO Tools For Agencies

Technical SEO tools are plentiful.

However, tools are just part of the equation.

Tools are useless without an experienced technical SEO professional to guide the strategy and ensure successful results.

But in the hands of an experienced professional, tools can do a great deal. From scaling a site’s SEO to supporting content creation, they make it possible to get better results with less manual effort.

Tools can increase efficiency in areas such as:

- Identifying on-site issues, such as crawling and indexing problems.

- Diagnosing page speed issues.

- Identifying missing or duplicate text and other elements.

- Finding redirect issues.

- And many others.

Your SEO tool arsenal, and how you use it, can mean the difference between great success and failure.

And indeed, there is no shortage of technical SEO tools for agencies.

Here are a few of the best you should consider.

1. Screaming Frog

Screaming Frog is the crawler to have.

To create a thorough website audit, it is crucial to first crawl the website with this tool.

Be aware that crawl settings matter: depending on how the crawl is configured, it can introduce false positives or errors into an audit that you would otherwise not know about.

Screaming Frog can help you identify the basics like:

- Missing page titles.

- Missing meta descriptions.

- Missing meta keywords.

- Large images.

- Errored response codes.

- Errors in URLs.

- Errors in canonicals.
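To show what checks like these involve, here is a minimal Python sketch that inspects a single HTML page for a missing title, a missing meta description, and missing or duplicate canonical tags. It is only an illustration built on Python’s standard-library `html.parser`; the class and function names are hypothetical, and it does not reflect how Screaming Frog works internally or what its exports look like.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Hypothetical helper: collect the head elements that basic crawl audits report on."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.in_title = False
        self.meta_description = None
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.meta_description = a.get("content") or ""
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonicals.append(a.get("href"))

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_page(html):
    """Return a list of issues for one page (hypothetical report format)."""
    parser = HeadAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing page title")
    if not (parser.meta_description or "").strip():
        issues.append("missing meta description")
    if not parser.canonicals:
        issues.append("missing canonical tag")
    elif len(parser.canonicals) > 1:
        issues.append("multiple canonical tags")
    return issues
```

For example, `audit_page("<title>Home</title>")` returns `["missing meta description", "missing canonical tag"]`. A crawler runs checks like these across every URL it discovers, which is why it scales so much better than spot-checking pages by hand.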

More advanced tasks Screaming Frog can help with include:

- Identifying issues with pagination.

- Diagnosing international SEO implementation issues.

- Taking a deep dive into a website’s architecture.

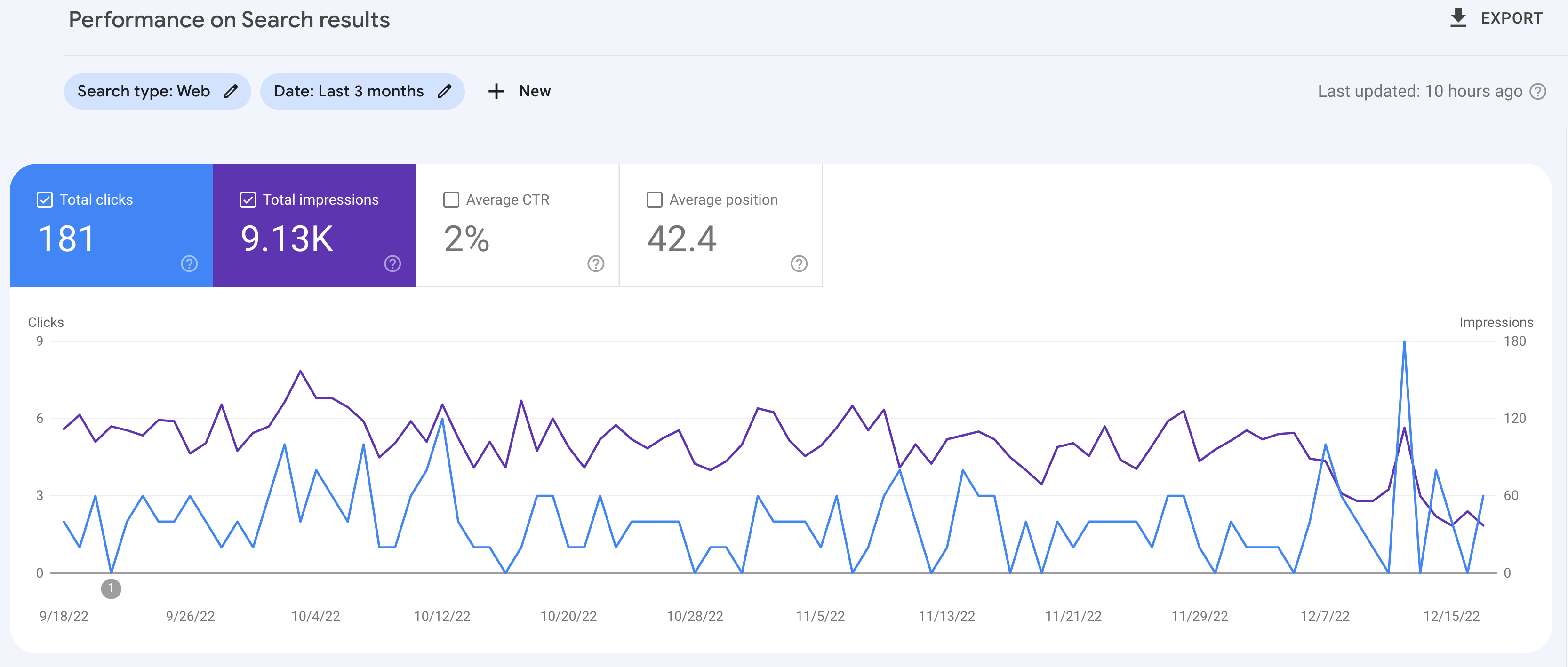

2. Google Search Console

Screenshot from Google Search Console, December 2022

The primary tool of any SEO pro should be the Google Search Console (GSC).

This critical tool has recently been overhauled to replace many old features while adding more data, features, and reports.

What makes this tool great for agencies? Setting up a reporting process.

For agencies that do SEO, good reporting is critical. If you have not already set up a reporting process, it is highly recommended that you do so.

This process can save you when a website changeover wipes out a GSC account. If that happens, you can still go back to all of your historical GSC data, because you have been saving it month after month.

Agencies can also use the Search Console API to pull GSC data into other tools and reports.
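Because Search Console’s performance reports only keep about 16 months of data, a regular export is what makes that kind of recovery possible. Below is a minimal Python sketch of the archiving half of such a process. It assumes you already fetch rows through the Search Console API’s Search Analytics query method with the `query` dimension, where each returned row has `keys`, `clicks`, `impressions`, `ctr`, and `position`. The function name, file path, and CSV layout are hypothetical choices, not a prescribed format.

```python
import csv
import os


def archive_rows(rows, month, path):
    """Hypothetical helper: append one month of Search Analytics rows to a CSV archive.

    `rows` is assumed to be the "rows" list of a Search Analytics query response,
    fetched with google-api-python-client roughly like this:
        service.searchanalytics().query(siteUrl=site, body=request).execute().get("rows", [])
    """
    is_new = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:  # write the header only once, when the archive is first created
            writer.writerow(["month", "query", "clicks", "impressions", "ctr", "position"])
        for row in rows:
            writer.writerow([month, row["keys"][0], row["clicks"],
                             row["impressions"], row["ctr"], row["position"]])
    return len(rows)
```

Running this once a month with a new `month` label (for example, `"2022-12"`) builds up a history you control, independent of what later happens to the GSC property itself.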

3. Google Analytics

Where would we be without a solid analytics platform to analyze organic search performance?

Although it is free, Google Analytics provides a wealth of information that can help you identify things like penalties, traffic issues, and anything else that may come your way.

As with Google Search Console, once Google Analytics is set up correctly, it’s ideal to have a monthly reporting process in place.

This process will help you save data for those situations where something unexpected happens to the client’s Google Analytics access.

At the very least, you won’t lose all of your clients’ data.

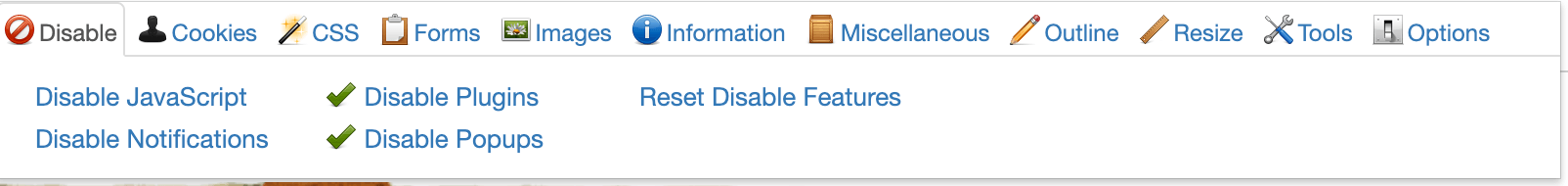

4. Web Developer Toolbar

Screenshot from Web Developer Toolbar extension, December 2022

The Web Developer toolbar extension is available for Google Chrome.

It is an official port of the Firefox web developer extension.

One of the primary uses for this extension is identifying issues with code, specifically JavaScript implementations with menus and the user interface.

Turning off JavaScript and CSS makes it possible to identify where these issues are occurring in the browser.

Your auditing is not limited to JavaScript and CSS issues, either.

You can also see alt text, find broken images, and view meta tag information and response headers.

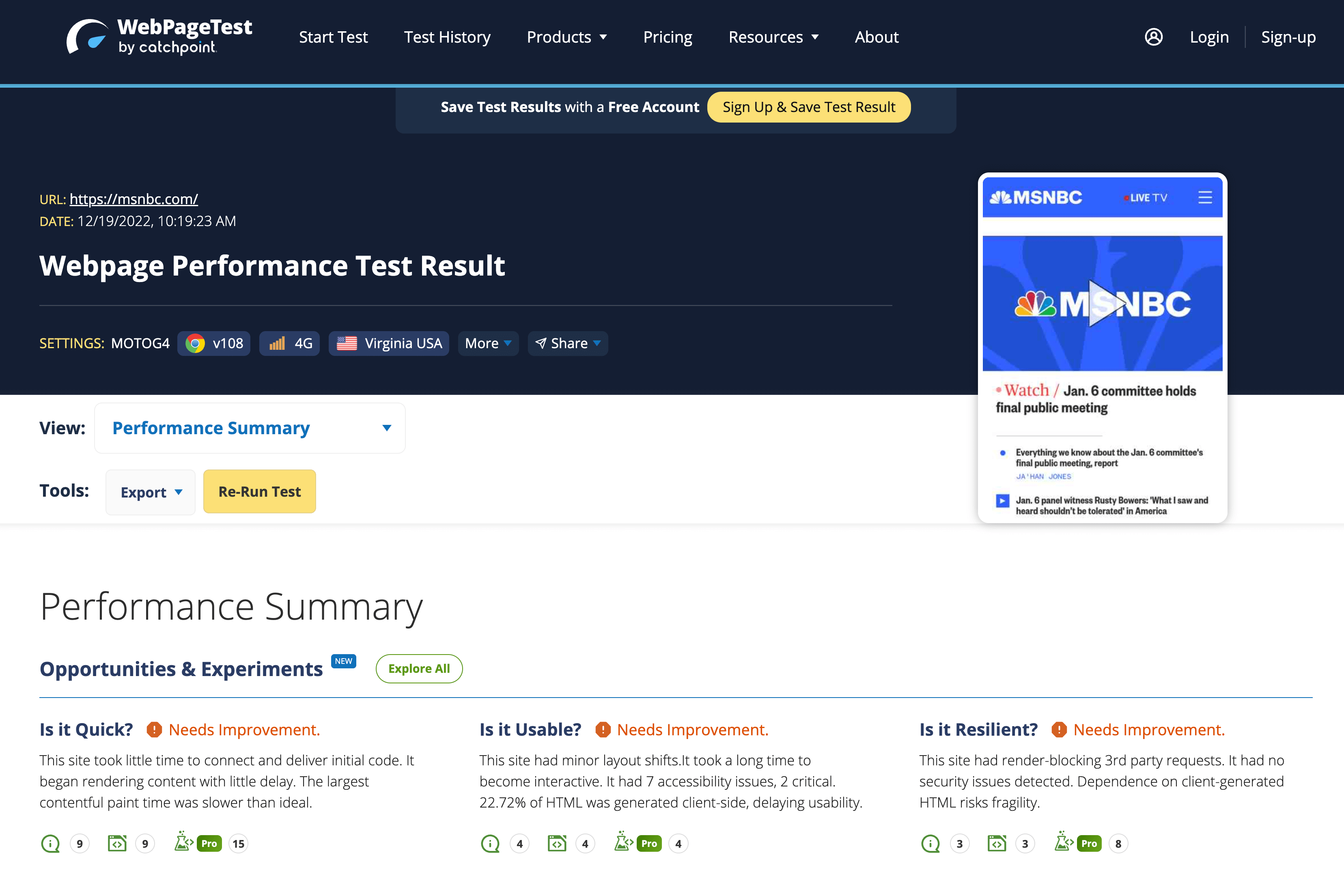

5. WebPageTest

Screenshot of WebPageTest, December 2022

Page speed has been a hot topic in recent years, and there are many useful tools for auditing it.

To that end, WebPageTest is one of those essential SEO tools for your agency.

Useful things you can do with WebPageTest include:

- Running waterfall speed tests.

- Running competitor speed tests.

- Creating competitor speed videos.

- Measuring how long it takes a site to load fully.

- Measuring time to first byte.

- Measuring start render time.

- Counting document object model (DOM) elements.

This is useful for determining how a site’s technical elements interact to create the final result or display time.

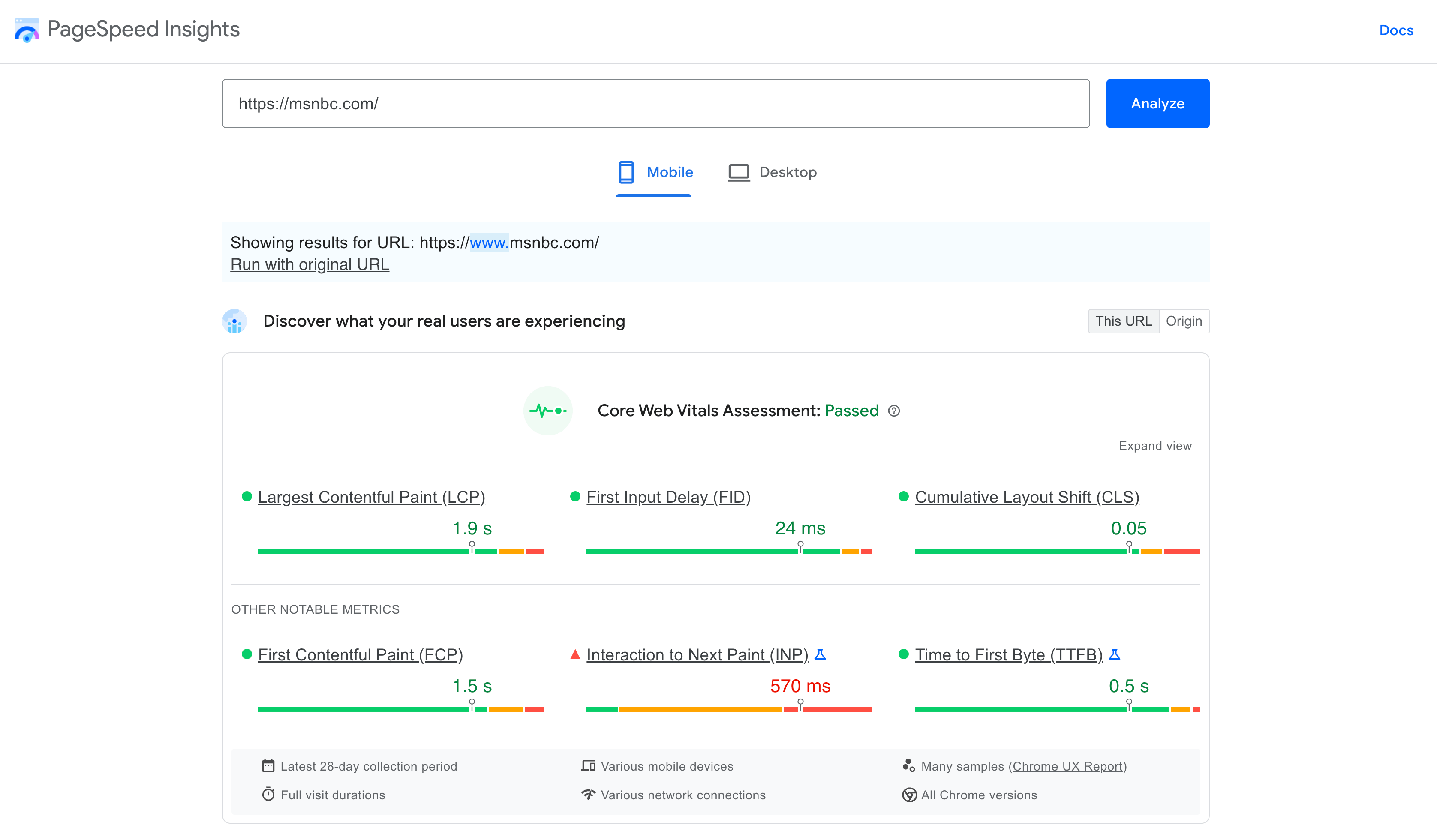

6. Google PageSpeed Insights

Screenshot of Google PageSpeed Insights, December 2022

Through a combination of speed metrics for both desktop and mobile, Google’s PageSpeed Insights is critical for agencies that want to get their website page speed ducks in a row.

It should not be used as the be-all, end-all of page metrics testing, but it is a good starting point.

Here’s why: PageSpeed Insights does not always report exact page speed. Its lab results come from a Lighthouse run with simulated network and CPU throttling, so they are approximations.

You may get one result from PageSpeed Insights and a different result from other tools.

Remember that PageSpeed Insights provides only part of the picture. Use multiple tools in your analysis to get a complete view of your website’s performance.
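One way to make that comparison routine is to pull PageSpeed Insights results programmatically and keep the lab score and the real-user field data side by side. The sketch below assumes the PageSpeed Insights API v5 `runPagespeed` endpoint and these response fields: `lighthouseResult.categories.performance.score` (a 0 to 1 lab score) and `loadingExperience.metrics.LARGEST_CONTENTFUL_PAINT_MS.percentile` (Chrome UX Report field data, present only when Google has enough real-user data). It only builds the request URL and parses a response you have already fetched. The function names are hypothetical.

```python
from urllib.parse import urlencode

# Assumed PageSpeed Insights API v5 endpoint.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Hypothetical helper: build a PSI v5 request URL ("mobile" or "desktop")."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def summarize(response):
    """Hypothetical helper: extract the lab score (0-100) and field LCP (ms) from a PSI response."""
    lab = (response.get("lighthouseResult", {}).get("categories", {})
           .get("performance", {}).get("score"))
    field_lcp = (response.get("loadingExperience", {}).get("metrics", {})
                 .get("LARGEST_CONTENTFUL_PAINT_MS", {}).get("percentile"))
    return {
        "lab_score": None if lab is None else round(lab * 100),
        "field_lcp_ms": field_lcp,  # None when there is not enough real-user data
    }
```

Storing both numbers for each page you test, next to figures from tools like WebPageTest or GTmetrix, makes it easier to see when a lab result diverges from what real users experience.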

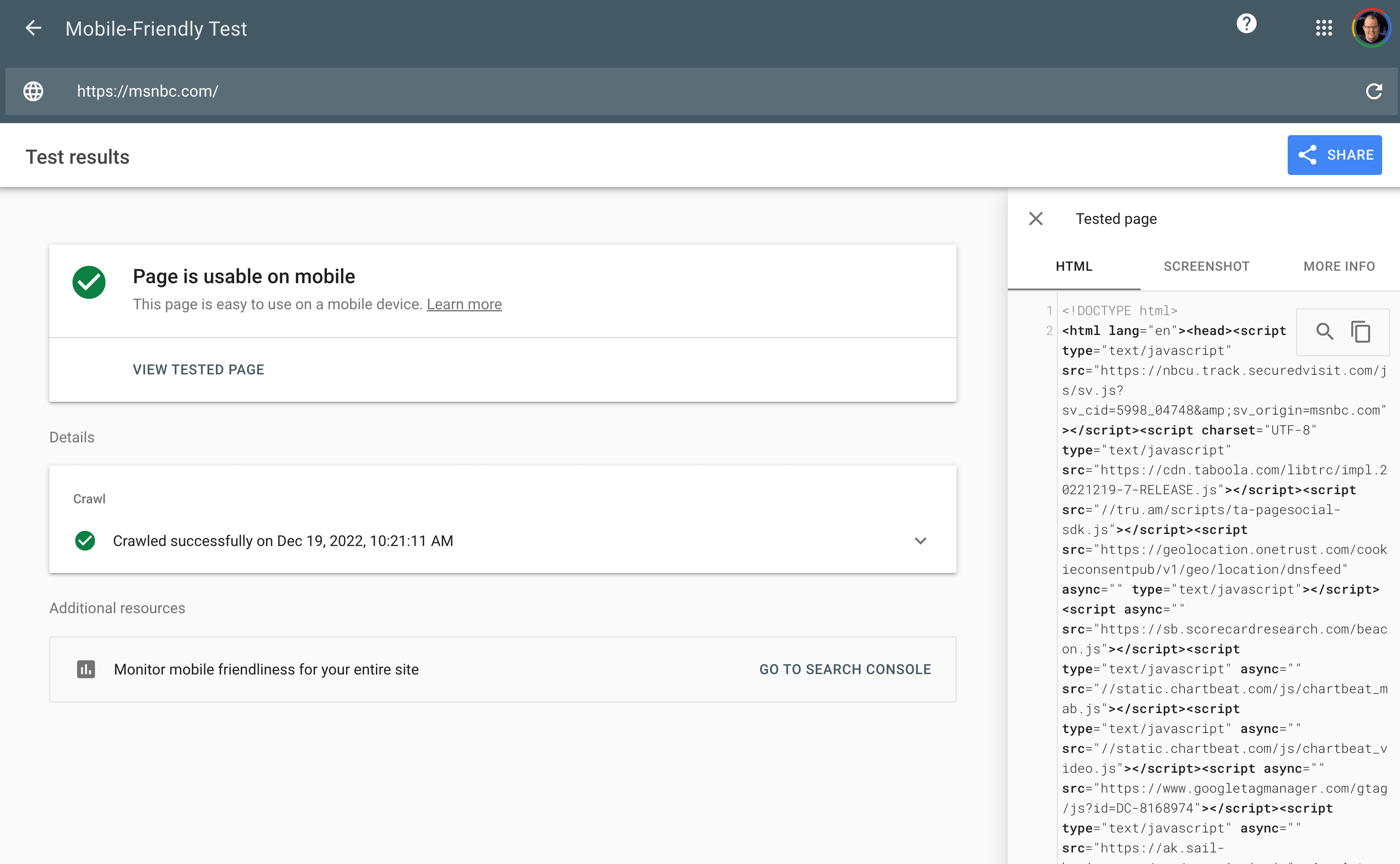

7. Google Mobile-Friendly Testing Tool

Screenshot of Google Mobile-Friendly Testing tool, December 2022

Determining a website’s mobile technical aspects is also critical for any website audit.

When putting a website through its paces, Google’s Mobile-Friendly testing tool can give you insights into a website’s mobile implementation.

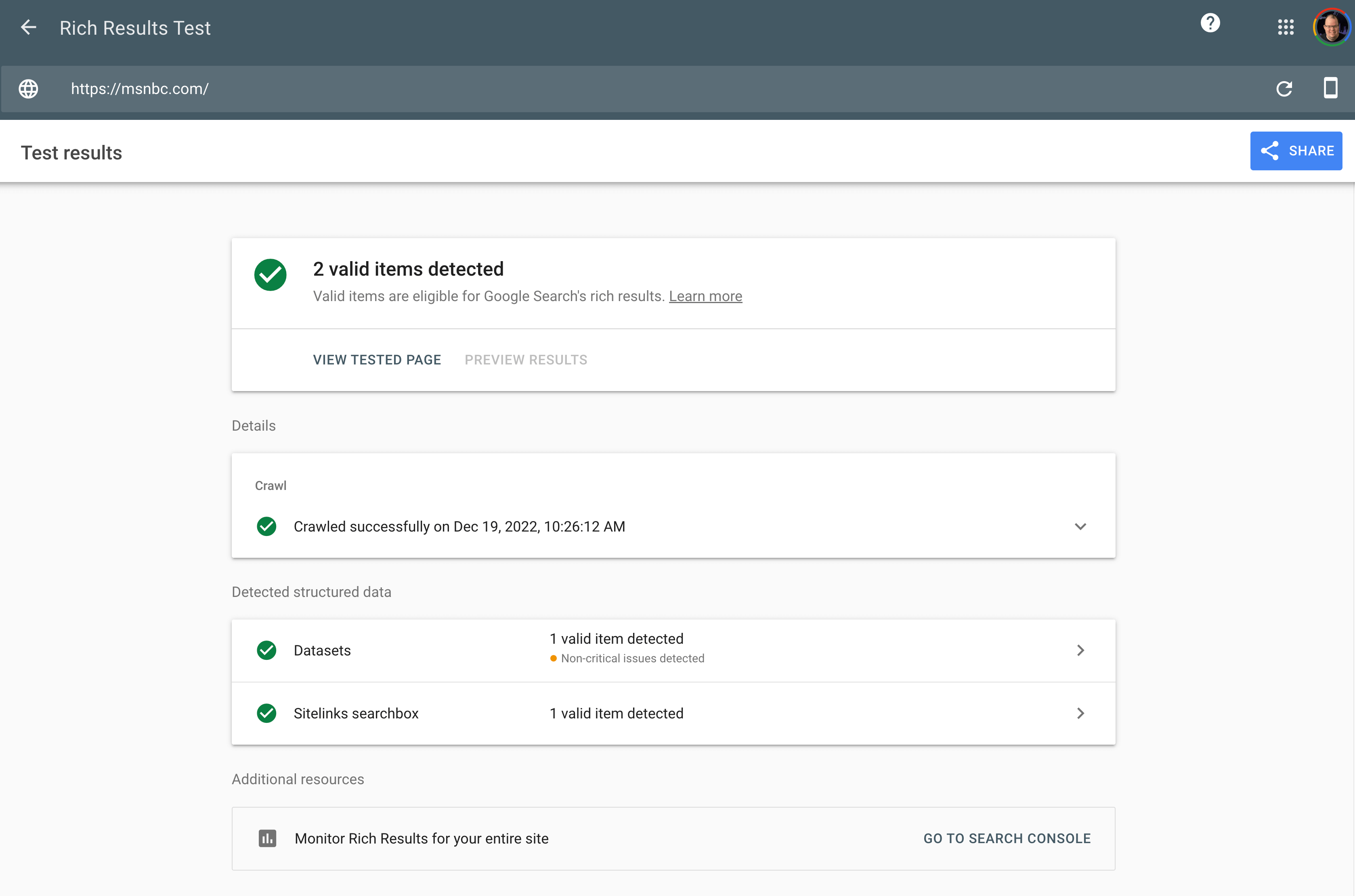

8. Google’s Rich Results Testing Tool

The Google Structured Data Testing Tool has been deprecated and replaced by the Google Rich Results Testing Tool.

This tool performs one function, and performs it well: it tests your Schema.org structured data markup against the structured data types that Google supports for rich results.

This is a fantastic way to identify issues with your Schema coding before the code is implemented.
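Because the Rich Results Test accepts a code snippet as well as a live URL, you can draft markup and test it before it ships. As an illustration, here is a small Python sketch that generates schema.org `Article` markup as JSON-LD, ready to paste into the tool. The function name and property values are placeholders. Whether Google shows a rich result for a given piece of markup depends on Google’s own structured data requirements, not just on schema.org validity.

```python
import json


def article_jsonld(headline, date_published, author_name):
    """Hypothetical helper: return a JSON-LD <script> block describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601, e.g. "2024-01-15"
        "author": {"@type": "Person", "name": author_name},
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    body = body.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"
```

The output is plain text, so you can paste it into the tool’s code tab, fix any reported errors, and only then hand it to developers.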

9. GTmetrix Page Speed Report

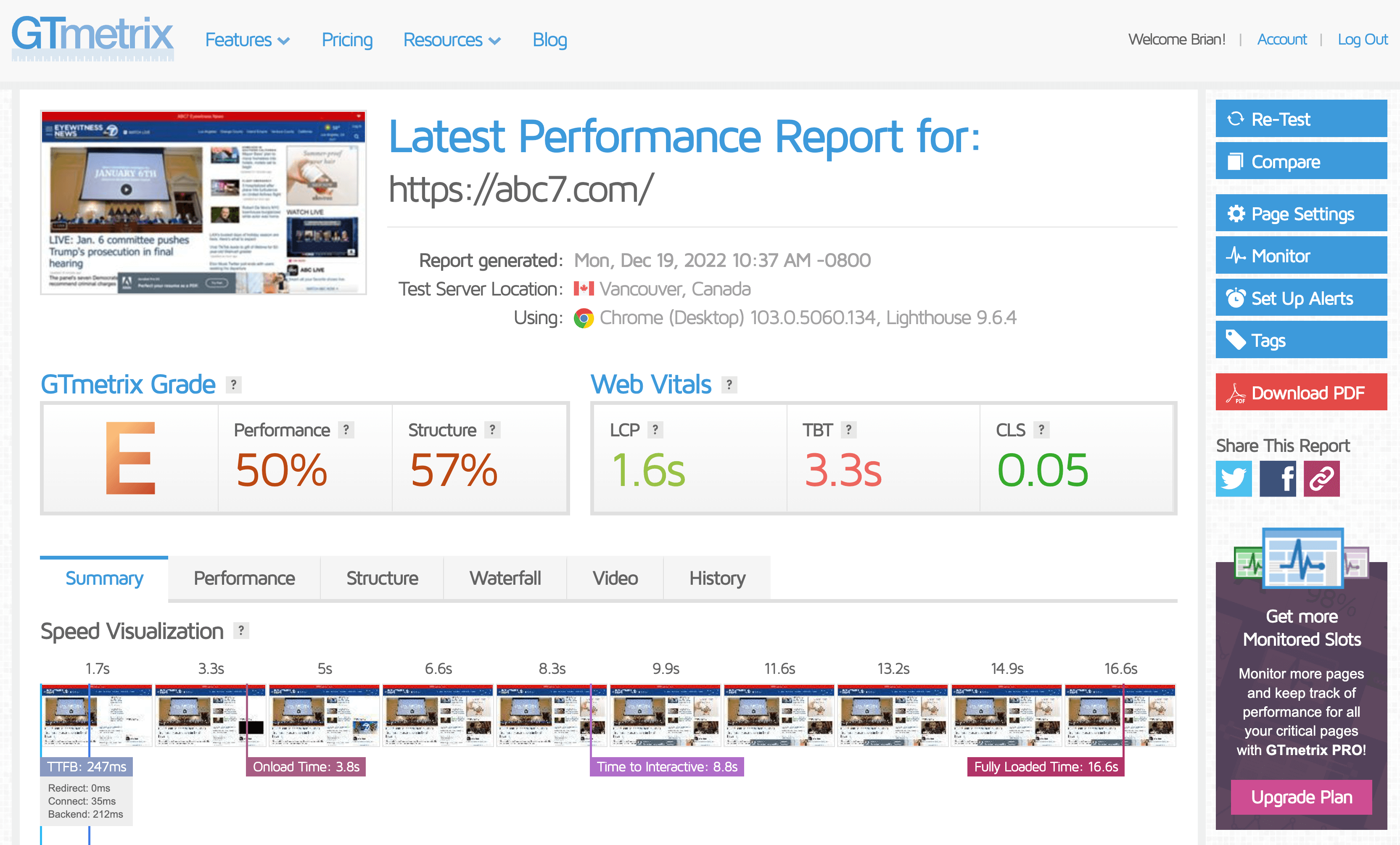

Screenshot of GTmetrix, December 2022

GTmetrix is a page speed report card that provides a different perspective on page speed.

By diving deep into page requests, the CSS and JavaScript files that need to load, and other website elements, you can clean up many of the elements that contribute to slow load times.

10. W3C Validator

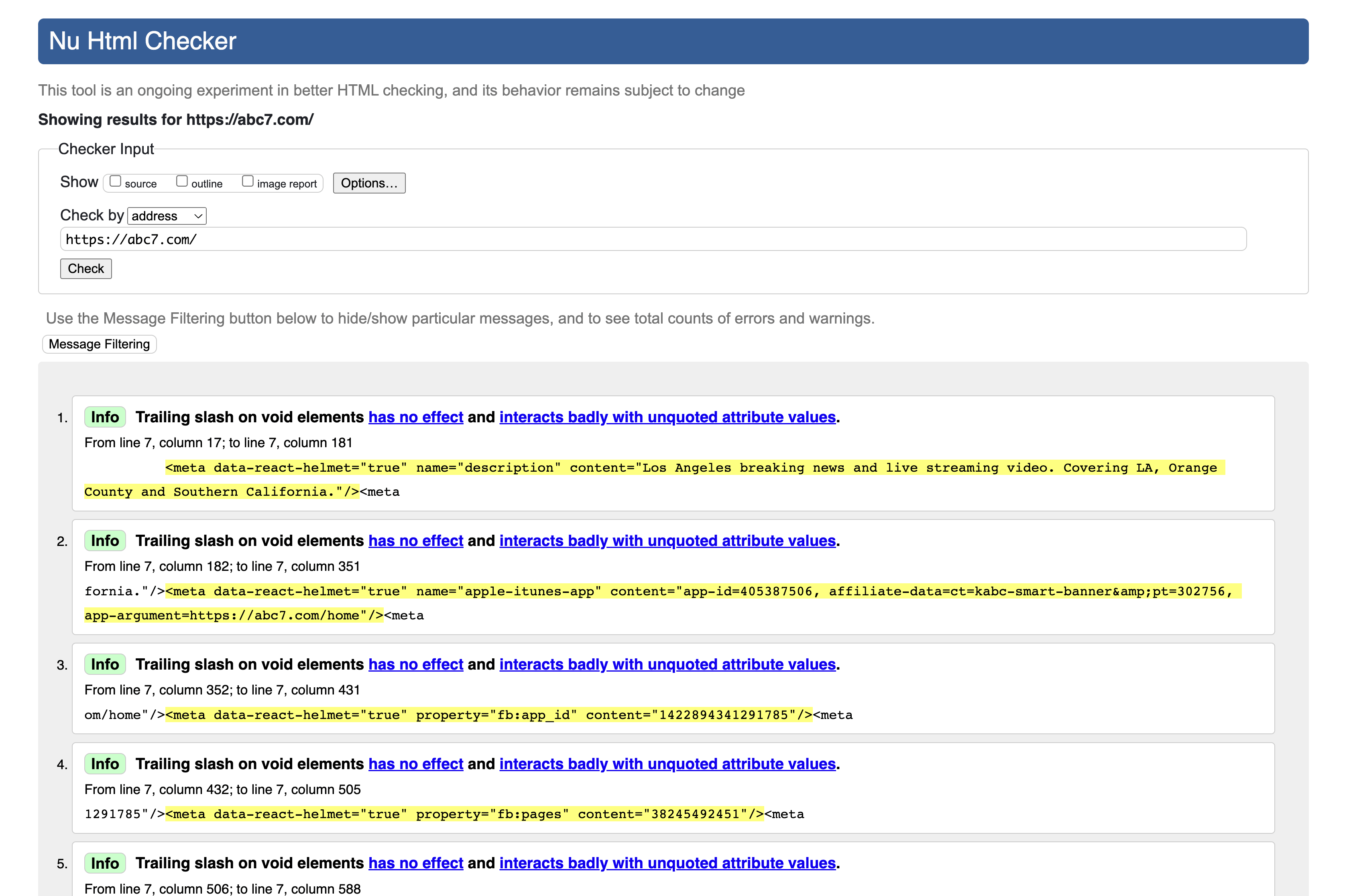

Screenshot of W3C Validator, December 2022

You may not normally think of a code validator like W3C Validator as an SEO tool, but it is important just the same.

Be careful! If you don’t know what you are doing, it is easy to misinterpret the results and actually make things worse.

For example, say you are validating code from a site that was developed in XHTML, but the code was ported over to WordPress.

Copying and pasting the entire code into WordPress during development does not change its document type automatically. If, during testing, you run across pages with thousands of errors throughout the document, that is likely why.

A website that was developed in this fashion is more likely to need a complete overhaul with new code, especially if the original source code is no longer available.
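A quick way to confirm that scenario before digging into validator output is to check which document type declarations a page actually contains. The Python sketch below lists every `<!DOCTYPE ...>` it finds and classifies each one. A page that shows an HTML5 doctype followed by an XHTML one, for instance, suggests a full legacy document was pasted inside a WordPress template. The function names and categories are hypothetical, and this is a rough text match, not a real HTML parser.

```python
import re

DOCTYPE_RE = re.compile(r"<!DOCTYPE\s+([^>]*)>", re.IGNORECASE)


def classify_doctype(declaration):
    """Hypothetical helper: map the text inside one <!DOCTYPE ...> to a rough category."""
    d = " ".join(declaration.lower().split())
    if d == "html":
        return "html5"
    if "xhtml" in d:
        return "xhtml"
    if "html 4" in d:
        return "html4"
    return "other"


def doctypes_in(html):
    """Return the category of every doctype declaration in the page, in document order."""
    return [classify_doctype(m) for m in DOCTYPE_RE.findall(html)]
```

A result with more than one entry, or an XHTML doctype on a site you expected to be HTML5, is a sign to interpret the validator’s error count with care.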

11. Semrush

Screenshot of Semrush, January 2022

Semrush’s greatest claim to fame is accurate data for keyword research and other technical research.

But what makes Semrush so valuable is its competitor analysis data.

You may not normally think of Semrush as a technical analysis tool.

However, if you go deep enough into a competitor analysis, the rankings data and market analysis data can reveal surprising information.

You can use these insights to better tailor your SEO strategy and gain an edge over your competitors.

12. Ahrefs

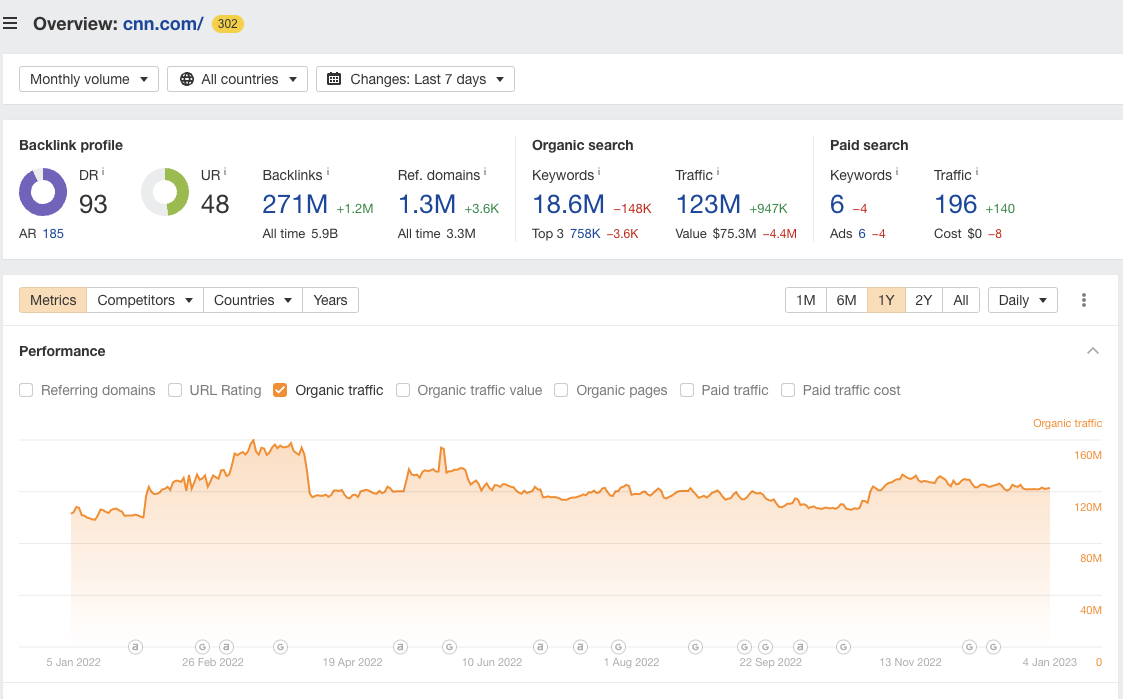

Screenshot of Ahrefs, January 2022

Many consider Ahrefs a critical component of modern technical link analysis.

By identifying certain patterns in a website’s link profile, you can figure out what a site is doing for its linking strategy.

Using its word cloud feature, you can identify anchor text issues that may be affecting a site.

Also, you can identify the types of links linking back to the site – whether it’s a blog network, a high-risk link profile with many forum and Web 2.0 links, or other major issues.

Other abilities include identifying when a site’s backlinks started going missing, its linking patterns, and much more.
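Behind a word cloud is a simple frequency count, and the same numbers are easy to reproduce from a backlink export when you want them in a report. This Python sketch assumes you have already pulled the anchor text column from an export (for example, an Ahrefs backlinks CSV) into a list of strings. It counts whole anchors rather than individual words, normalizes case and spacing, and reports each anchor’s share of all links, so an unusually high share of exact-match commercial anchors stands out. The function name and output shape are hypothetical.

```python
from collections import Counter


def anchor_distribution(anchors, top=5):
    """Hypothetical helper: (anchor, count, share) for the most common normalized anchors."""
    # Lowercase and collapse whitespace so "Best  SEO Tools" and "best seo tools" match.
    normalized = [" ".join(a.lower().split()) or "(empty)" for a in anchors]
    counts = Counter(normalized)
    total = len(normalized)
    return [(text, n, round(n / total, 3)) for text, n in counts.most_common(top)]
```

For example, if half of a site’s links use the same commercial phrase, the first entry will show a share of `0.5`, which is the kind of pattern worth a closer look.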

13. Majestic

Majestic is a long-standing tool in the SEO industry with unique linking insights.

As with Ahrefs, you can identify things like linking patterns by downloading reports of the site’s full link profile.

It is also possible to find things like bad neighborhoods and other domains a website owner owns.

Using this bad neighborhood report, you can diagnose linking problems that stem from the site’s associations with other websites.

Like most tools, Majestic has its own metrics for technical link attributes, such as Trust Flow and Citation Flow, along with other linking elements that contribute to trust, relevance, and authority.

It is also possible through its own link graphs to identify any issues occurring with the link profile over time.

Majestic is an exceptional tool in your link diagnostic process.

14. Moz Bar

It is hard to think of something like the MozBar, which lends itself to a little bit of whimsicality, as a serious technical SEO tool. But it offers many metrics that are useful for detailed analysis.

These include Moz’s Domain Authority and Page Authority, Google cache status, other code such as social Open Graph markup, and handy features like an at-a-glance view of the page’s meta tags right in the browser.

Without diving deep into a crawl, you can also see other advanced elements like rel="canonical" tags, page load time, Schema Markup, and even the page’s HTTP status.

This is useful for an initial survey of the site before diving deeper into a proper audit, and it can be a good idea to include the findings from this data in an actual audit.

15. Barracuda Panguin

Screenshot of Barracuda Panguin Tool, December 2022

If you are investigating a site for a penalty, the Barracuda Panguin tool should be a part of your workflow.

It works by connecting to the Google Analytics account of the site you are investigating and overlaying the dates of known Google algorithm updates on your GA traffic data.

Using this overlay, it is possible to easily identify situations where potential penalties occur.

Now, it is important to note that there isn’t an exact science to this, and that correlation isn’t always causation.

It’s important to investigate all avenues of where data is potentially showing something happening, in order to rule out any potential penalty.

Tools like this help you narrow down roughly when events in your data occurred, which is a useful starting point for an investigation.
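The idea behind an overlay like this can be sketched in a few lines of Python: for each algorithm update date, compare average daily organic sessions in the week before and the week after, and flag large drops for a closer look. The update names and dates you feed it should come from a published update history; the function name, the seven-day window, and the 20 percent threshold are hypothetical defaults. As noted above, a flag is a lead to investigate, not proof of a penalty.

```python
from datetime import timedelta
from statistics import mean


def flag_update_drops(sessions, updates, window=7, threshold=0.2):
    """Hypothetical helper: flag updates followed by a drop of more than `threshold`.

    sessions: dict of datetime.date -> organic sessions that day (e.g. exported from GA)
    updates:  dict of update name -> datetime.date the update started
    """
    flagged = []
    for name, start in updates.items():
        before = [sessions[start - timedelta(days=d)] for d in range(1, window + 1)
                  if start - timedelta(days=d) in sessions]
        after = [sessions[start + timedelta(days=d)] for d in range(window)
                 if start + timedelta(days=d) in sessions]
        if not before or not after or mean(before) == 0:
            continue  # not enough data on one side to compare
        change = (mean(after) - mean(before)) / mean(before)
        if change < -threshold:
            flagged.append((name, round(change, 3)))
    return flagged
```

A result such as `[("core update", -0.4)]` would mean average daily sessions in the week after that update were 40 percent lower than in the week before. That is exactly the kind of correlation you would then try to explain or rule out.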

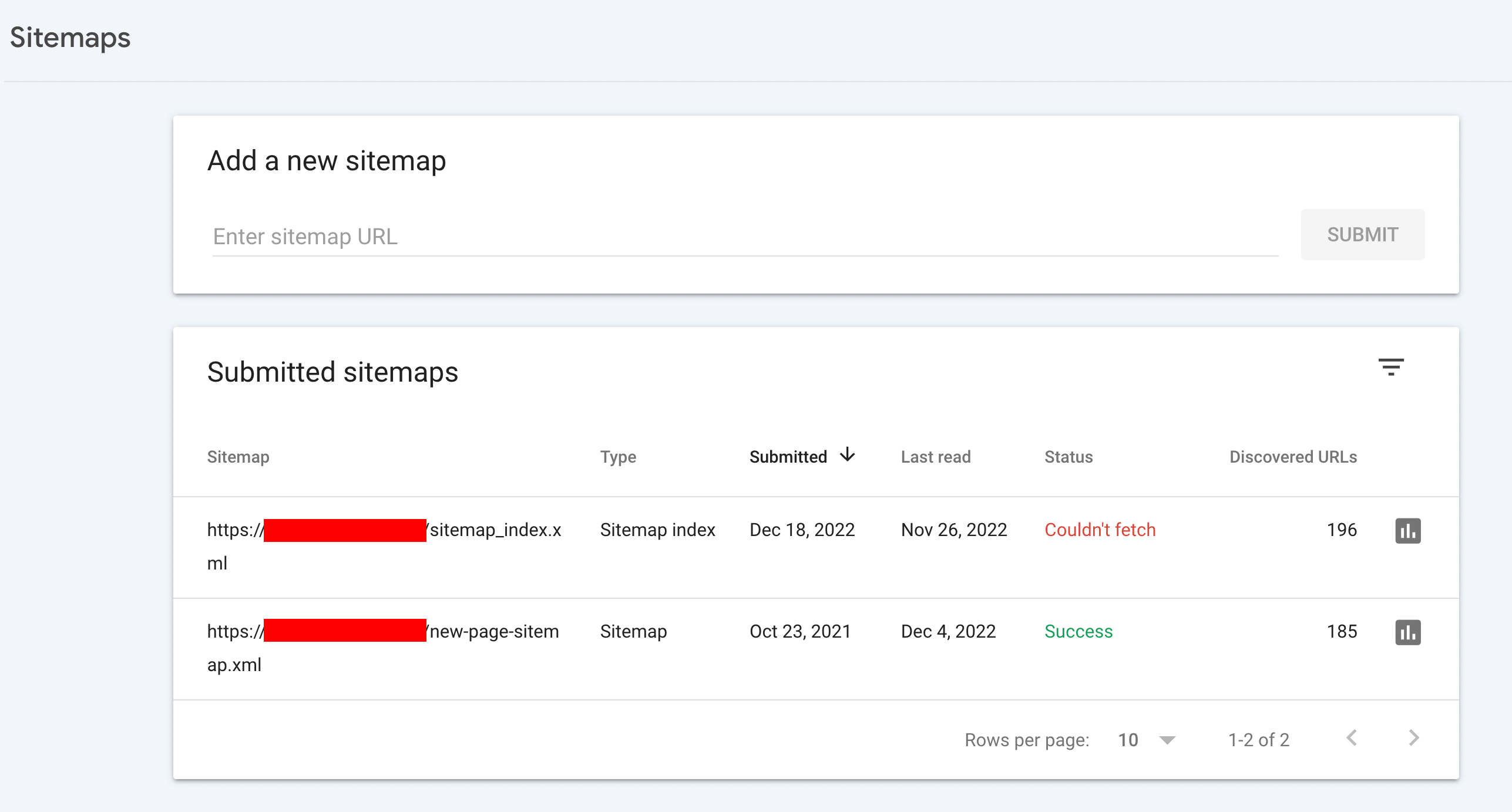

16. Google Search Console XML Sitemap Report

Screenshot of Google Search Console XML Sitemap, December 2022

The Google Search Console XML Sitemap Report is one of those technical SEO tools that should be an important part of any agency’s reporting workflow.

Diagnosing sitemap issues is a critical part of any SEO audit, and this report can help you maintain the all-important 1:1 ratio between the URLs on the site and the URLs in the sitemap, so the sitemap is updated whenever URLs are added to the site.

For those who don’t know, it is considered an SEO best practice to ensure the following:

- The sitemap should contain only 200 OK URLs. No 4xx or 5xx URLs should appear in it.

- There should be a 1:1 ratio between the exact URLs in the sitemap and the URLs on the site. In other words, the sitemap should not contain any orphaned pages that do not show up in the Screaming Frog crawl.

- Any parameter-laden URLs should be removed from the sitemap if they are not considered primary pages. Certain parameters can cause XML sitemap validation issues, so make sure these parameters are not included in sitemap URLs.
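Those three checks are easy to automate once you have the sitemap and a crawl export. The Python sketch below assumes the sitemap is a standard sitemaps.org `urlset` file and that the crawl has been reduced to a dictionary mapping each URL to its HTTP status code (for example, built from a Screaming Frog internal URLs export). That dictionary format, the function name, and the report keys are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def check_sitemap(sitemap_xml, crawl_status):
    """Hypothetical helper: compare sitemap URLs with crawl results (URL -> HTTP status)."""
    root = ET.fromstring(sitemap_xml)
    in_sitemap = {(loc.text or "").strip() for loc in root.findall("sm:url/sm:loc", NS)} - {""}
    crawled_ok = {url for url, status in crawl_status.items() if status == 200}
    return {
        # Only 200 OK URLs belong in the sitemap.
        "non_200": sorted(u for u in in_sitemap if crawl_status.get(u, 200) != 200),
        # 1:1 ratio: sitemap URLs the crawl never found (possible orphans) ...
        "not_in_crawl": sorted(in_sitemap - set(crawl_status)),
        # ... and 200 OK pages the crawl found that the sitemap is missing.
        "missing_from_sitemap": sorted(crawled_ok - in_sitemap),
        # Parameter-laden URLs to review.
        "with_parameters": sorted(u for u in in_sitemap if urlparse(u).query),
    }
```

Each list in the report maps to one of the best practices above, which makes it straightforward to turn the output into audit findings.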

17. BrightLocal

If you are operating a website for a local business, your SEO strategy should involve local SEO for a significant portion of its link acquisition efforts.

This is where BrightLocal comes in.

It is normally not thought of as a technical SEO tool, but its application can help you uncover technical issues with the site’s local SEO profile.

For example, you can audit the site’s local SEO citations with this tool. Then, you can move forward with identifying and submitting your site to the appropriate citations.

It works somewhat like Yext, in that it has a pre-populated list of potential citation sources.

One of BrightLocal’s essential features lets you audit, clean, and build citations on the most common citation sites (and on less common ones, too).

BrightLocal also audits your Google Business Profile presence and offers in-depth local SEO audits.

If your agency is heavy into local SEO, this is one of those tools that is a no-brainer, from a workflow perspective.

18. Whitespark

Whitespark is more in-depth when compared to BrightLocal.

Its local citation finder lets you dive deeper into your site’s local SEO by showing where your site is listed across the competitive landscape.

It also lets you identify all of your competitors’ local SEO citations.

In addition, its auditing capabilities include rank tracking, with detailed reporting on distinct Google local positions such as the local pack and local finder, as well as detailed organic rankings reports from both Google and Bing.

19. Botify

Botify is one of the most complete technical SEO tools available.

Its claim to fame includes the ability to reconcile search intent and technical SEO with its in-depth keyword analysis tool. You can tie elements such as crawl budget and other technical SEO factors to searcher intent.

Not only that, but Botify’s detailed technical analysis also helps you identify the technical SEO factors that are contributing to rankings.

In its detailed reporting, you can also use the tool to detect changes in how people are searching, regardless of the industry that you are focused on.

Botify’s most powerful feature is its in-depth reporting, which ties data to insights you can actually act on.

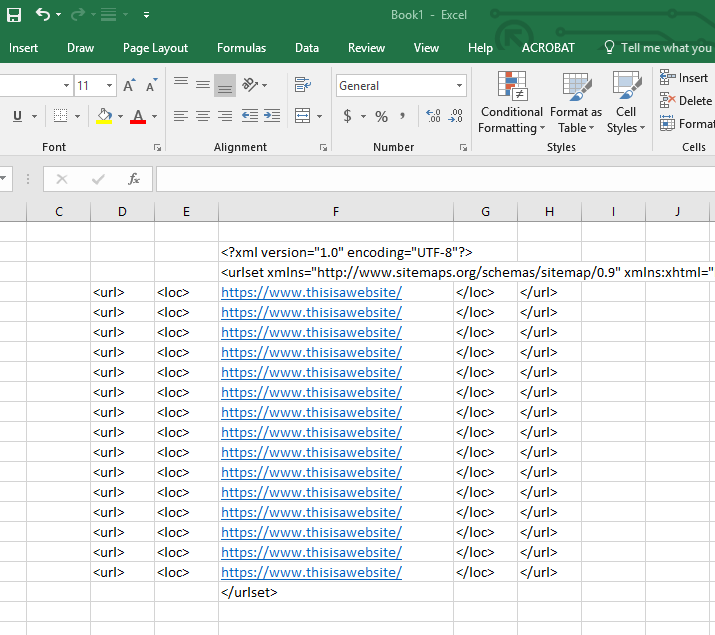

20. Excel

Screenshot by author, December 2022

Many SEO pros aren’t aware that Excel can be considered a technical SEO tool.

Surprising, right?

Well, there are a number of Excel super tricks you can use to perform technical SEO audits. Tasks that would otherwise take a long time manually can be accomplished much faster.

Let’s look at a few of these “super tricks.”

Super Trick #1: VLOOKUP

With VLOOKUP, you can pull matching data from other sheets into your primary sheet, based on a value the sheets have in common.

This function allows you to do things like perform a link analysis using data gathered from different tools.

If you have gathered linking data from GSC’s “who links to you the most” report, other data from Ahrefs, and still more from Moz, you know how difficult it is to reconcile all of that information by hand.

What if you wanted to determine which internal links are valuable in accordance with a site’s inbound linking strategy?

Using this VLOOKUP video, you can combine data from GSC’s report with data from Ahrefs’ report to get the entire picture of what’s happening here.
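If you ever need the same merge outside a spreadsheet, an exact-match VLOOKUP (range lookup set to `FALSE`) behaves like a keyed left join. Each row in the primary table picks up columns from the first matching row in the lookup table, and rows without a match get an error value (`#N/A` in Excel). Here is a minimal Python sketch of that behavior. The function name and column names are hypothetical stand-ins for a GSC linking-sites export and an Ahrefs export. Unlike Excel, which matches text case-insensitively, this sketch compares keys exactly.

```python
def vlookup_join(primary, lookup, key, columns, missing=None):
    """Hypothetical helper: left-join `columns` from `lookup` onto `primary` rows by `key`."""
    index = {}
    for row in lookup:
        index.setdefault(row[key], row)  # keep the first match, as VLOOKUP does
    merged = []
    for row in primary:
        match = index.get(row[key])
        # Unmatched keys get `missing`, where Excel would show #N/A.
        extra = {col: (match[col] if match is not None else missing) for col in columns}
        merged.append({**row, **extra})
    return merged
```

For example, joining `[{"domain": "a.com", "links": 120}]` with `[{"domain": "a.com", "dr": 71}]` on `"domain"` for the `["dr"]` column yields `[{"domain": "a.com", "links": 120, "dr": 71}]`.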

Super Trick #2: Easy XML Sitemaps

Coding XML Sitemaps manually is a pain, isn’t it?

Not anymore.

With an efficient process, it is possible to code a sitemap in Excel in a matter of minutes, if you work smart.

See the video I created showing this process.
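One common spreadsheet approach is to wrap each URL in sitemap markup with a formula and then stack the rows. For comparison, here is the same idea as a short Python sketch. It escapes each URL for XML, because a raw `&` makes the file invalid XML, which is a common reason hand-built sitemaps fail to validate. It can also add an optional `lastmod` date. The function name is hypothetical, and the sketch does not split large sitemaps, which the sitemaps.org protocol caps at 50,000 URLs per file.

```python
from xml.sax.saxutils import escape


def build_sitemap(urls, lastmod=None):
    """Hypothetical helper: return a sitemaps.org urlset for a list of absolute URLs."""
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">']
    for url in urls:
        entry = f"  <url><loc>{escape(url)}</loc>"  # escapes &, <, and >
        if lastmod:
            entry += f"<lastmod>{lastmod}</lastmod>"  # W3C date, e.g. 2024-01-15
        lines.append(entry + "</url>")
    lines.append("</urlset>")
    return "\n".join(lines)
```

For example, `build_sitemap(["https://example.com/r&d/"])` produces a `<loc>` of `https://example.com/r&amp;d/`, which validators accept, whereas the unescaped `&` would not be.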

Super Trick #3: Conditional Formatting

Using conditional formatting, it is possible to reconcile long lists of information in Excel.

This is useful in many SEO situations where lists of information are compared daily.
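A typical use is comparing two lists, such as crawled URLs against sitemap URLs, and highlighting the entries that appear in only one of them. Conditional formatting does this visually. The same reconciliation in Python comes down to a few set lookups. This is a minimal sketch with a hypothetical function name; it keeps the original order and drops duplicates within each list.

```python
def reconcile(list_a, list_b):
    """Hypothetical helper: split two lists into shared items and items found in only one."""
    set_a, set_b = set(list_a), set(list_b)
    unique_a = list(dict.fromkeys(list_a))  # de-duplicate while keeping order
    unique_b = list(dict.fromkeys(list_b))
    return {
        "in_both": [x for x in unique_a if x in set_b],
        "only_in_a": [x for x in unique_a if x not in set_b],
        "only_in_b": [x for x in unique_b if x not in set_a],
    }
```

The `only_in_a` and `only_in_b` lists correspond to the cells you would otherwise highlight in each column.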

Although Tools Create Efficiencies, They Do Not Replace Manual Work

For any SEO agency that wants a competitive edge, SEO tools run the gamut from crawling to auditing, data gathering, analysis, and much more.

You don’t want to leave your results up to chance.

The right tools can add a dimension to your analysis that standard analysis alone might not provide.

They can also help you create output that will delight your clients and keep them coming back for years.

Which of these tools will you use to wow your customers?

Featured Image: Paulo Bobita/Search Engine Journal


Executive Director Of WordPress Resigns

Josepha Haden Chomphosy, Executive Director of the WordPress Project, officially announced her resignation, ending a nine-year tenure. This comes just two weeks after Matt Mullenweg launched a controversial campaign against a managed WordPress host, which responded by filing a federal lawsuit against him and Automattic.

She posted an upbeat notice on her personal blog, reaffirming her belief in the open source community as a positive economic force, as well as in the importance of strong opinions that are “loosely held.”

She wrote:

“This week marks my last as the Executive Director of the WordPress project. My time with WordPress has transformed me, both as a leader and an advocate. There’s still more to do in our shared quest to secure a self-sustaining future of the open source project that we all love, and my belief in our global community of contributors remains unchanged.

…I still believe that open source is an idea that can transform generations. I believe in the power of a good-hearted group of people. I believe in the importance of strong opinions, loosely held. And I believe the world will always need the more equitable opportunities that well-maintained open source can provide: access to knowledge and learning, easy-to-join peer and business networks, the amplification of unheard voices, and a chance to tap into economic opportunity for those who weren’t born into it.”

Turmoil At WordPress

The resignation comes against the backdrop of a conflict between WordPress co-founder Matt Mullenweg and the managed WordPress web host WP Engine. The conflict has brought unprecedented turmoil to the WordPress community, including a federal lawsuit filed by WP Engine accusing Mullenweg of attempted extortion.

Resignation News Was Leaked

The news of the resignation was leaked on October 2nd by the founder of the WordPress news site WP Tavern (now owned by Matt Mullenweg), who tweeted that he had spoken with Josepha that evening and could confirm that she had left Automattic.

He posted:

“I spoke with Josepha tonight. I can confirm that she’s no longer at Automattic.

She’s working on a statement for the community. She’s in good spirits despite the turmoil.”

Screenshot Of Deleted Tweet

Josepha tweeted the following response the next day:

“Ok, this is not how I expected that news to come to y’all. I apologize that this is the first many of you heard of it. Please don’t speculate about anything.”

Rocky Period For WordPress

While her resignation was something of an open secret, it’s still significant given recent events at WordPress, including the resignation of 8.4% of Automattic employees who accepted a generous severance package offered to all employees who no longer wished to work there.

Read the official announcement:

Featured Image by Shutterstock/Wirestock Creators


8% Of Automattic Employees Choose To Resign

WordPress co-founder and Automattic CEO Matt Mullenweg announced today that he had offered Automattic employees the chance to resign with severance pay, and that 8.4 percent of them accepted. Mullenweg offered $30,000 or six months of salary, whichever was higher, and a total of 159 people took the offer.

Reactions Of Automattic Employees

Given the recent controversies created by Mullenweg, one might be tempted to view the walkout as a vote of no confidence in Mullenweg. But that would be a mistake: some of the employees announcing their resignations either praised Mullenweg or simply announced that they were leaving, while many others tweeted how happy they were to stay at Automattic.

One former employee tweeted that he was sad about recent developments but also praised Mullenweg and Automattic as an employer.

He shared:

“Today was my last day at Automattic. I spent the last 2 years building large scale ML and generative AI infra and products, and a lot of time on robotics at night and on weekends.

I’m going to spend the next month taking a break, getting married, and visiting family in Australia.

I have some really fun ideas of things to build that I’ve been storing up for a while. Now I get to build them. Get in touch if you’d like to build AI products together.”

Another former employee, Naoko Takano, a 14-year Automattic employee, organizer of WordCamp conferences in Asia, full-time WordPress contributor, and Open Source Project Manager at Automattic, announced on X (formerly Twitter) that it was her last day at Automattic, with no additional comment.

She tweeted:

“Today was my last day at Automattic.

I’m actively exploring new career opportunities. If you know of any positions that align with my skills and experience!”

Naoko’s role at WordPress was to work with the global WordPress community to improve contributor experiences through the Five for the Future and Mentorship programs. Five for the Future is an important WordPress program that encourages organizations to donate 5% of their resources back into WordPress. It is also one of the issues Mullenweg raised against WP Engine, asserting that the company didn’t donate enough back to the community.

Mullenweg himself had mixed feelings about seeing those employees go, writing in a blog post:

“It was an emotional roller coaster of a week. The day you hire someone you aren’t expecting them to resign or be fired, you’re hoping for a long and mutually beneficial relationship. Every resignation stings a bit.

However now, I feel much lighter. I’m grateful and thankful for all the people who took the offer, and even more excited to work with those who turned down $126M to stay. As the kids say, LFG!”

Read the entire announcement on Mullenweg’s blog:

Featured Image by Shutterstock/sdx15


YouTube Extends Shorts To 3 Minutes, Adds New Features

YouTube expands Shorts to 3 minutes, adds templates, AI tools, and the option to show fewer Shorts on the homepage.

- YouTube Shorts will allow 3-minute videos.

- New features include templates, enhanced remixing, and AI-generated video backgrounds.

- YouTube is adding a Shorts trends page and comment previews.
