
Baidu Ranking Factors for 2024: A Comprehensive Data Study

As China’s largest search engine and a global AI and Internet technology leader, Baidu is a powerhouse of innovation. The ERNIE language model, surpassing Google’s BERT in Chinese language processing, positions Baidu at the cutting edge of technological advancement.

In our comprehensive Baidu SEO Ranking Factors Correlation Study*, we analyzed the SERPs for 10,000 Chinese keywords, delving into the top 20 rankings to uncover the factors influencing Baidu’s search engine algorithms.

Search Engine Insights

This study is a goldmine for SEO practitioners globally, not just those targeting the Chinese market. Baidu’s unique approach to search engine technology offers invaluable insights, especially in an era where a deep understanding of algorithms and how search engines work is crucial for SEO success.

Similar to how the SEO community has extensively studied the leaked Yandex papers, understanding Baidu’s SERP construction is equally critical.

Baidu Services in Baidu SERPs

In understanding Baidu’s influence in SEO, it’s important to recognize its array of proprietary services that often dominate the search results. For example, services like Baidu Maps are integral for local searches, similar to the role of Google Maps in other regions.

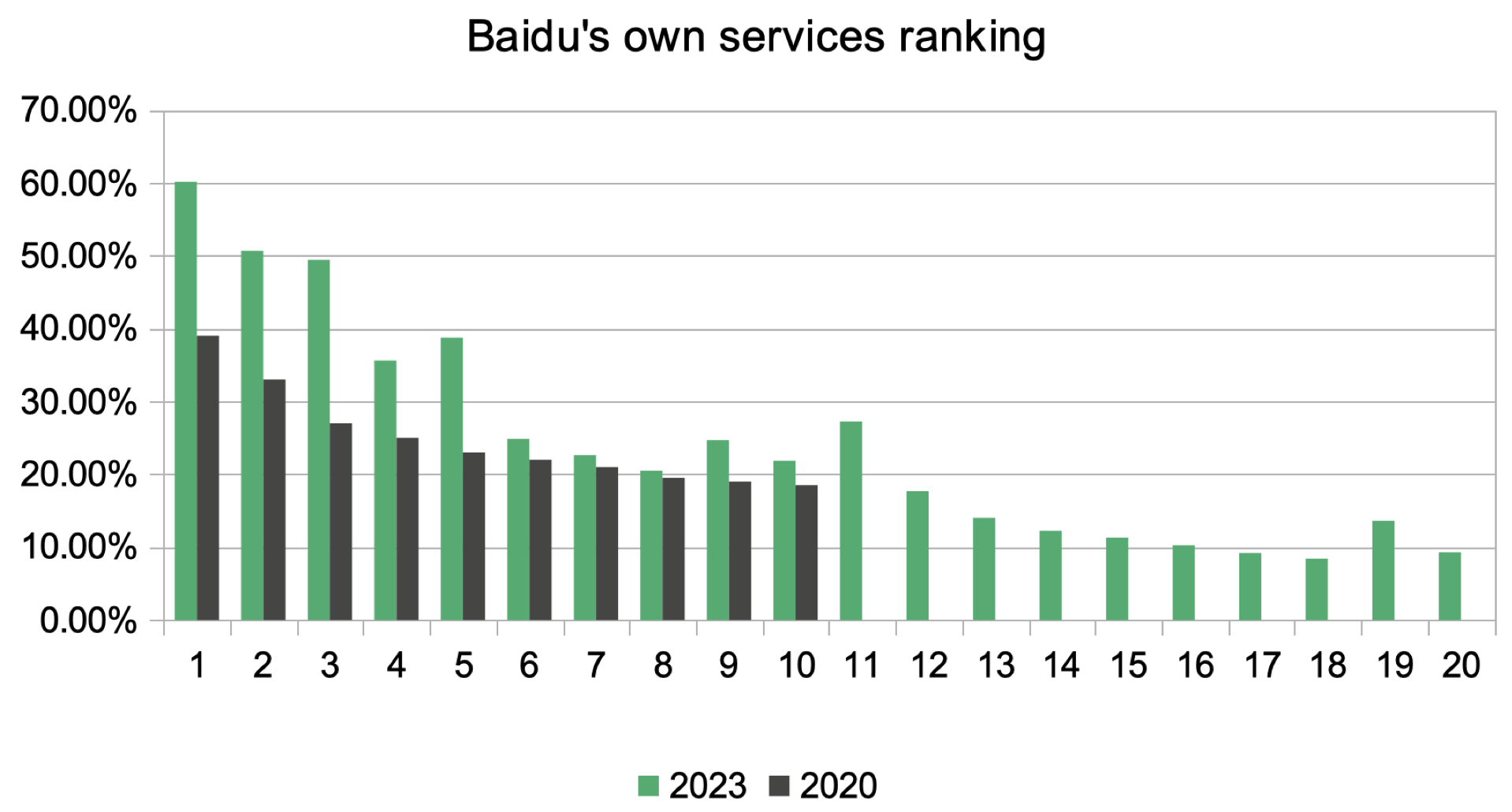

A notable 34.9% of the top 10 search results are dominated by Baidu’s own services, marking a significant increase from 24.7%, as reported in Searchmetrics’ Baidu Ranking Factors Study in 2020**.

| Metric | 2020 | 2023 |
| --- | --- | --- |
| Percentage of Baidu’s own results in top 10 | 24.70% | 34.91% |
| Percentage of Baidu’s own results in top 20 | NA | 24.91% |
| Percentage of Baidu’s own results on position #1 | 39.00% | 60.13% |

This dominance extends to 60.13% of first-place positions, up from 39%.
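As a rough illustration of how such a share could be measured, the sketch below counts results whose host sits under `baidu.com`. This is an assumption about the classification rule, not the study's documented method, and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def is_baidu_owned(url: str) -> bool:
    """True when the URL's host is baidu.com or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == "baidu.com" or host.endswith(".baidu.com")


def baidu_share(result_urls: list[str]) -> float:
    """Percentage of results in the list that are served from Baidu hosts."""
    if not result_urls:
        return 0.0
    owned = sum(is_baidu_owned(u) for u in result_urls)
    return 100.0 * owned / len(result_urls)


# Hypothetical top-5 results for one keyword (not data from the study).
serp = [
    "https://baike.baidu.com/item/example",
    "https://www.example.com/page",
    "https://zhidao.baidu.com/question/1.html",
    "https://www.example.cn/",
    "https://notbaidu.com/",
]
print(f"{baidu_share(serp):.1f}% Baidu-owned")
```

Matching on the `.baidu.com` suffix (with the leading dot) avoids counting look-alike hosts such as `notbaidu.com`.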

This data isn’t just informative; it’s a clear directive for SEO experts to recalibrate their strategies in China’s unique digital space.

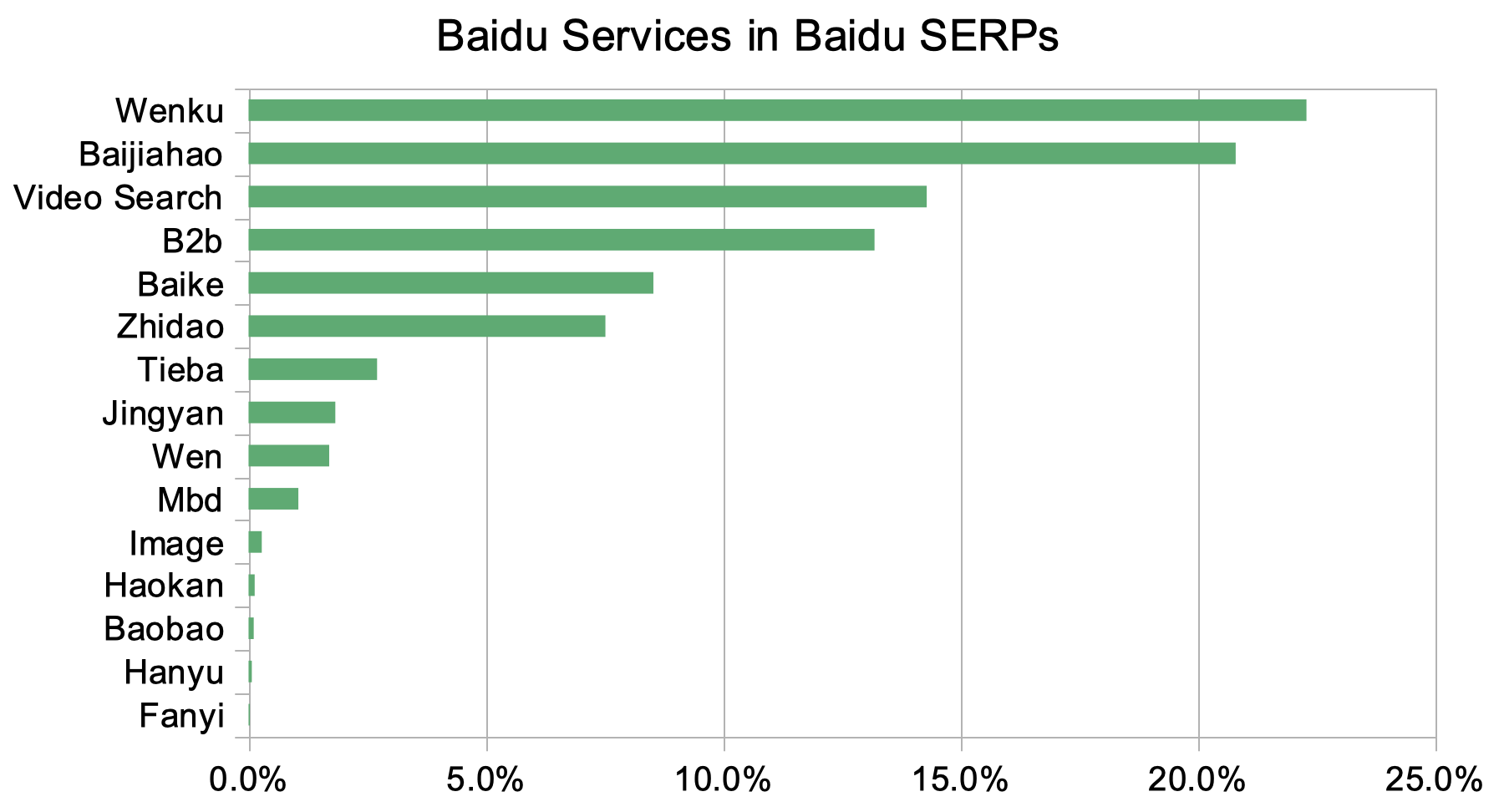

Baidu’s prioritization of its platforms, from Baike to Wenku, signifies more than a preference – it’s a strategic move to retain users within its ecosystem.

Image by author, December 2023


Baidu Baike, their version of Wikipedia, stands out for its heavily moderated content, ensuring quality but also presenting a challenge for content creators.

The Q&A platform Baidu Zhidao, akin to Quora, and Baidu Wenku, a comprehensive file-sharing service, also frequently appear in search results, reflecting Baidu’s unique algorithm preferences.

These platforms, especially Wenku, tend to have a more prominent presence in Baidu’s SERPs compared to similar platforms in Google’s ecosystem, underscoring the tailored approach Baidu takes in meeting its users’ search needs.

China SEO experts like Stephanie Qian (of The Egg Company) and Veronique Duong (of Rankwell) highlight the potential of leveraging these high-authority domains for enhanced visibility.

This isn’t just a shift in Baidu’s SERPs; it’s a new playbook for Baidu’s SEO success in 2024.

The Unique SEO Landscape In China

Navigating China’s SEO landscape involves understanding unique factors beyond typical SEO strategies. Central to this is China’s rigorous internet regulation, the Great Firewall of China, which aims to shield its populace from content considered harmful.

This leads to slower load times for sites hosted outside China due to content scanning and potential blocking. Furthermore, websites on servers flagged for illegal content risk being completely inaccessible in mainland China.

Baidu, the dominant search engine in China, primarily serves the mainland’s Mandarin-speaking audience, favoring content in Simplified Chinese. This contrasts with the Traditional Chinese used in Taiwan and Hong Kong.

Although Baidu indexes global content, its algorithm shows a clear preference for Simplified Chinese, a crucial consideration for SEO targeting this region.

Regarding market share, our study counters the narrative of Bing overtaking Baidu.

In the Chinese market, Baidu remains the primary source of organic traffic, contributing around 70%, while Bing-China accounts for about 20%, based on analytics data from our B2B clients in China.

This contradicts reports based on StatCounter data. StatCounter is used by only 0.01% of top-ranking pages on Baidu and, as per BuiltWith, by only 946 websites.

In-Depth Analysis Of 2024 Baidu Ranking Factors

Domain And URL Structures

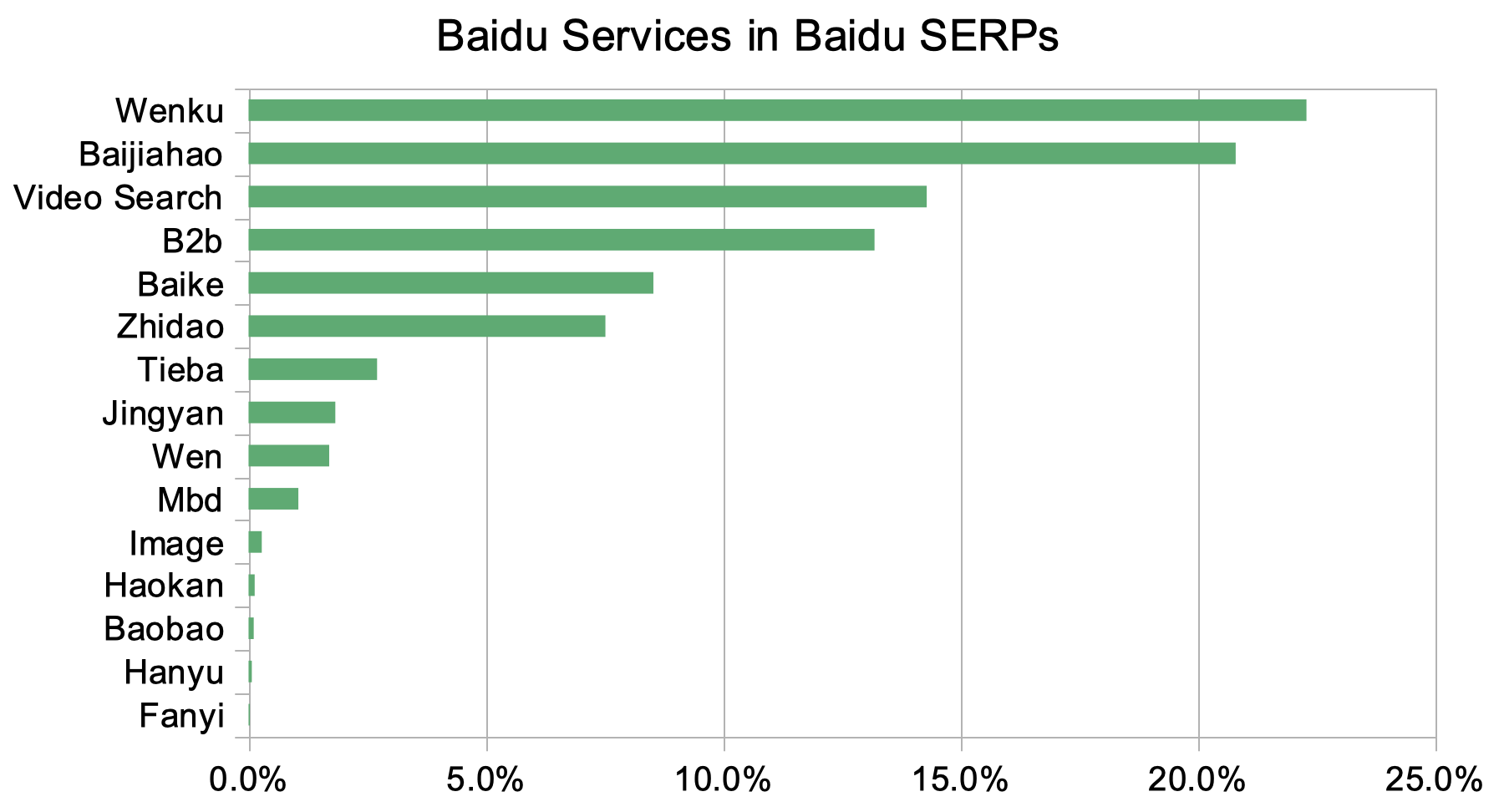

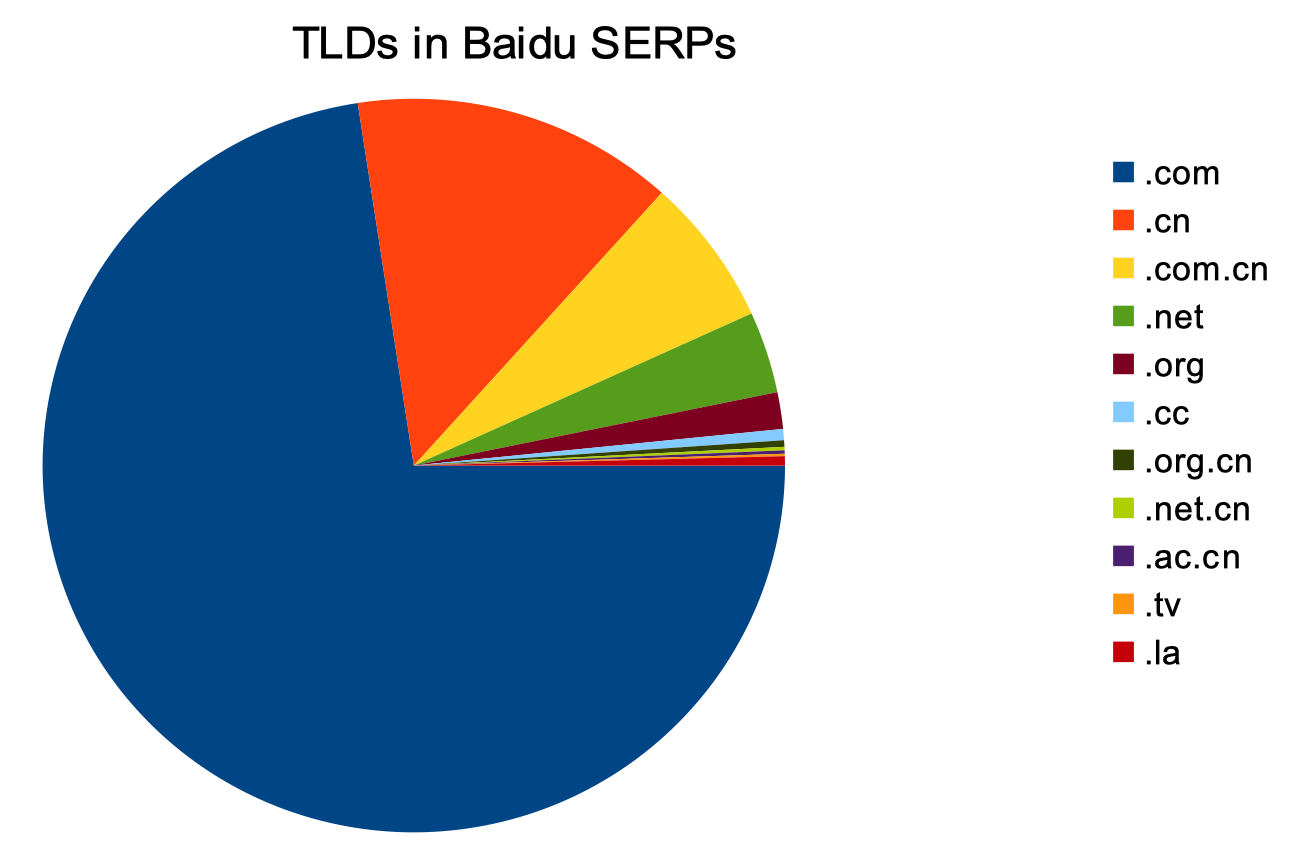

The findings paint a clear picture: Baidu’s ranking algorithm shows a distinct preference for certain TLDs and URL structures, with a notable lean towards Chinese TLDs and simplified, linguistically uniform URLs.

For global clients targeting the Chinese market, adapting to these preferences is key.

TLDs: The Rise Of Chinese Top-Level-Domains

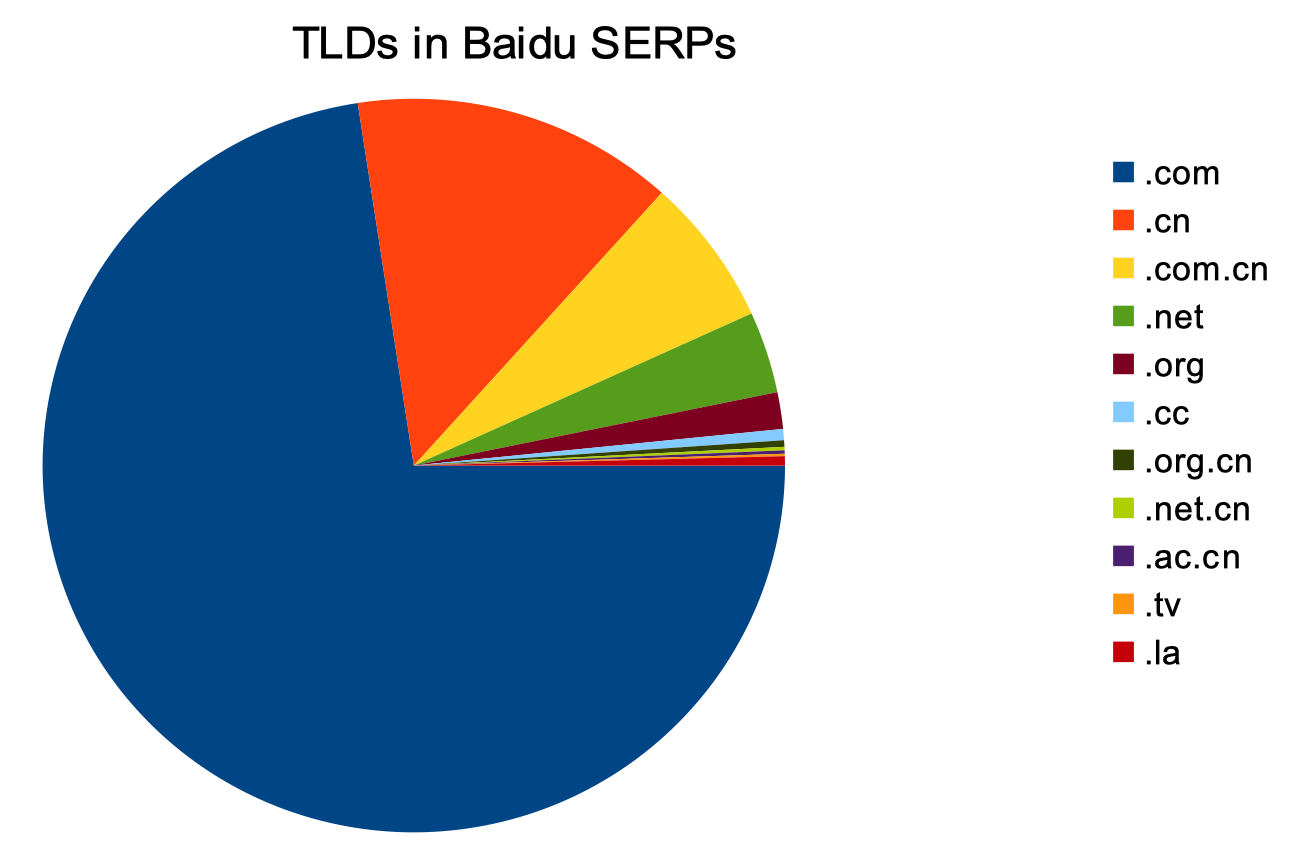

The distribution of Top-Level Domains (TLDs) among Baidu’s top-ranking results shows a clear preference:

Image from author, December 2023


- .com domains lead with 72.59%.

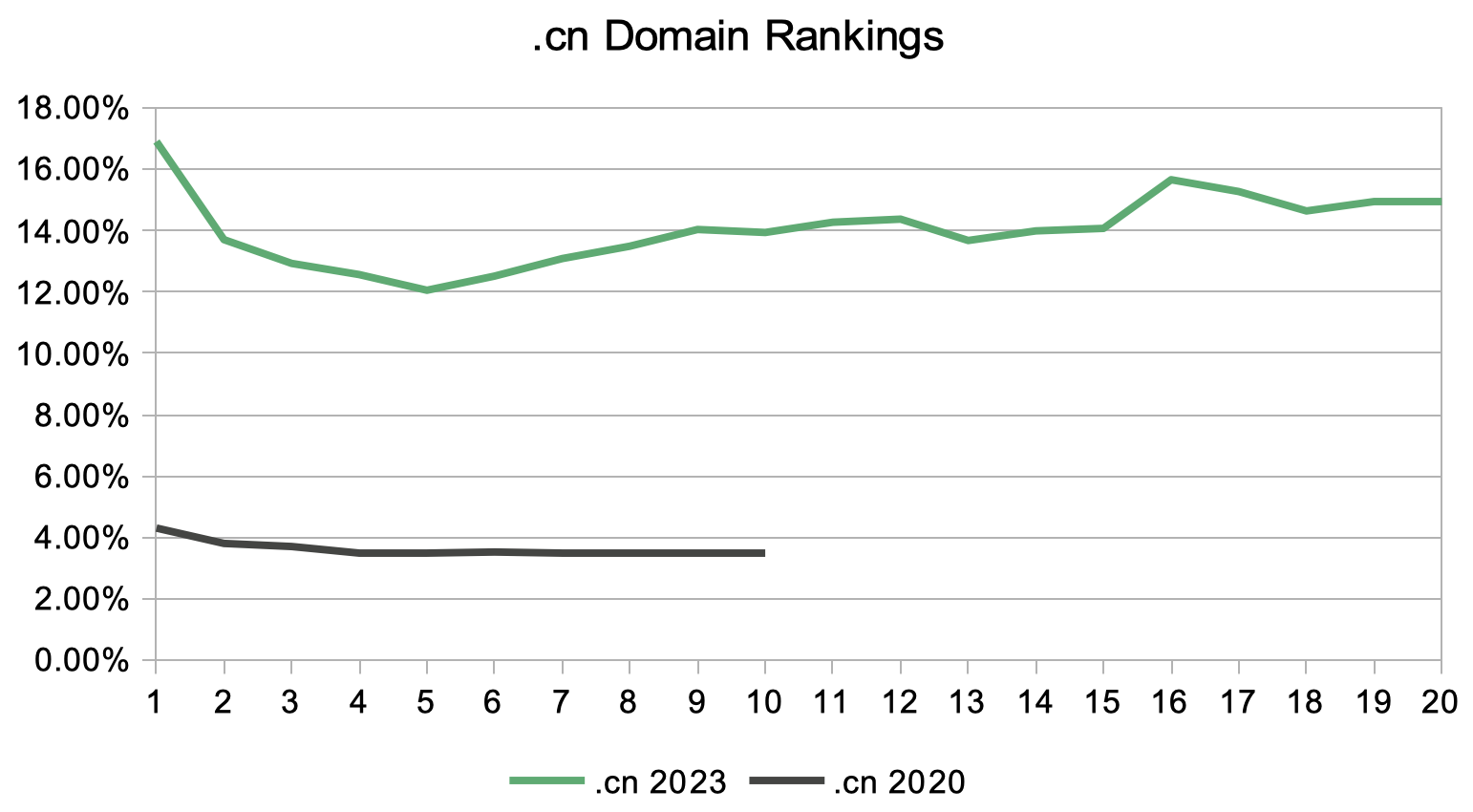

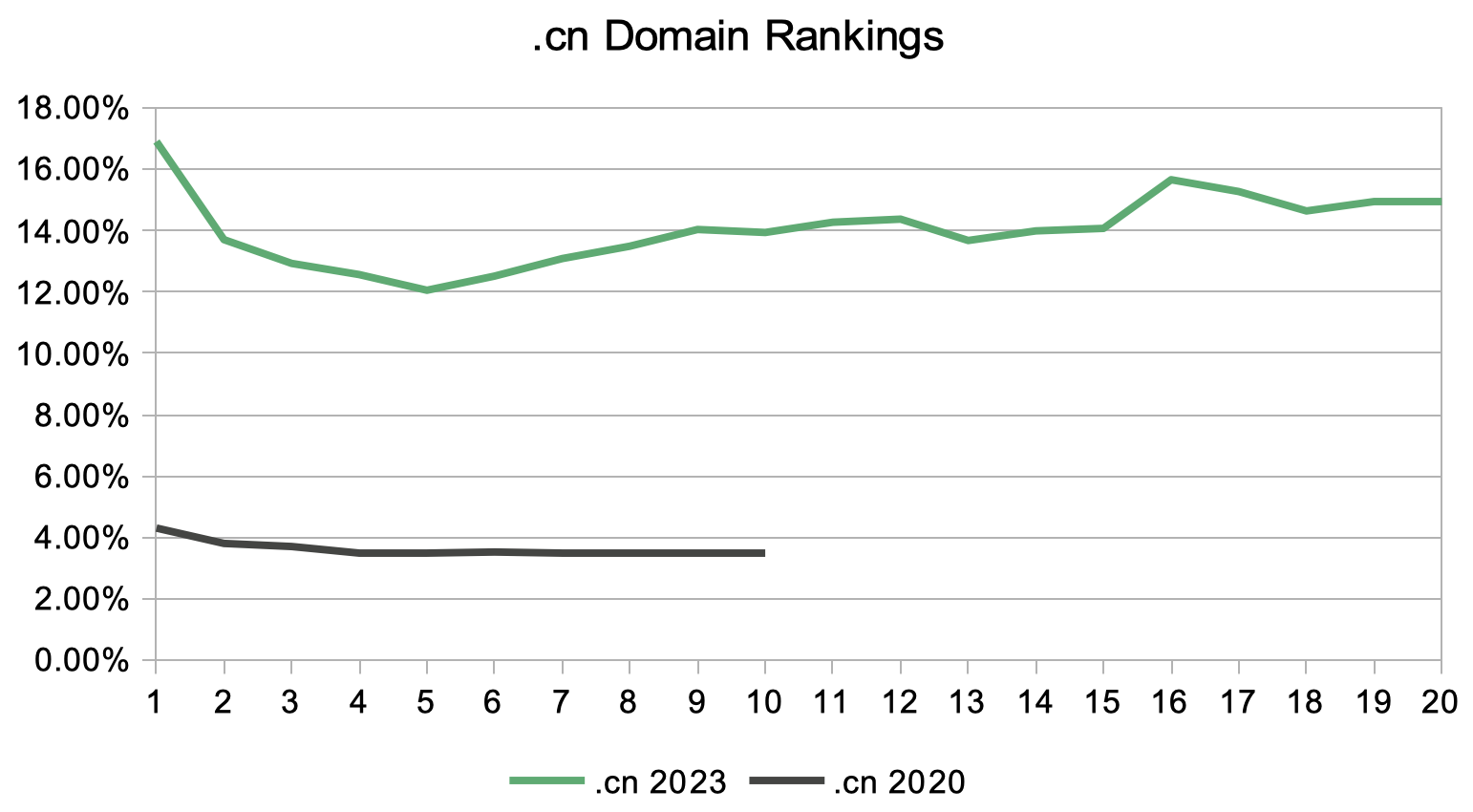

- .cn domains have seen a significant rise, from 3.8% in 2020 (via Searchmetrics) to 14.06% in 2023.

- .com.cn follows with an increase from 5.5% in 2020 to 6.55%.

This upward trend for Chinese TLDs, notably .cn, suggests their growing importance as a potential ranking factor for 2024.
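A TLD distribution like the one above can be tallied with a few lines of code. The following is a minimal sketch that only distinguishes the three suffixes discussed here; the bucket names and the `"other"` fallback are assumptions for illustration.

```python
from collections import Counter
from urllib.parse import urlparse

# Checked in order, longest first, so ".com.cn" is not counted as ".cn".
SUFFIXES = (".com.cn", ".com", ".cn")


def tld_bucket(url: str) -> str:
    """Return the matching suffix for the URL's host, or "other"."""
    host = (urlparse(url).hostname or "").lower()
    for suffix in SUFFIXES:
        if host.endswith(suffix):
            return suffix
    return "other"


def tld_distribution(urls: list[str]) -> dict[str, float]:
    """Percentage of URLs per TLD bucket, rounded to two decimals."""
    counts = Counter(tld_bucket(u) for u in urls)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {tld: round(100.0 * n / total, 2) for tld, n in counts.items()}


# Hypothetical ranking URLs (not data from the study).
sample = [
    "https://a.example.com/",
    "https://b.example.com.cn/x",
    "https://c.example.cn/",
    "https://d.example.org/",
]
print(tld_distribution(sample))
```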

Image from author, December 2023


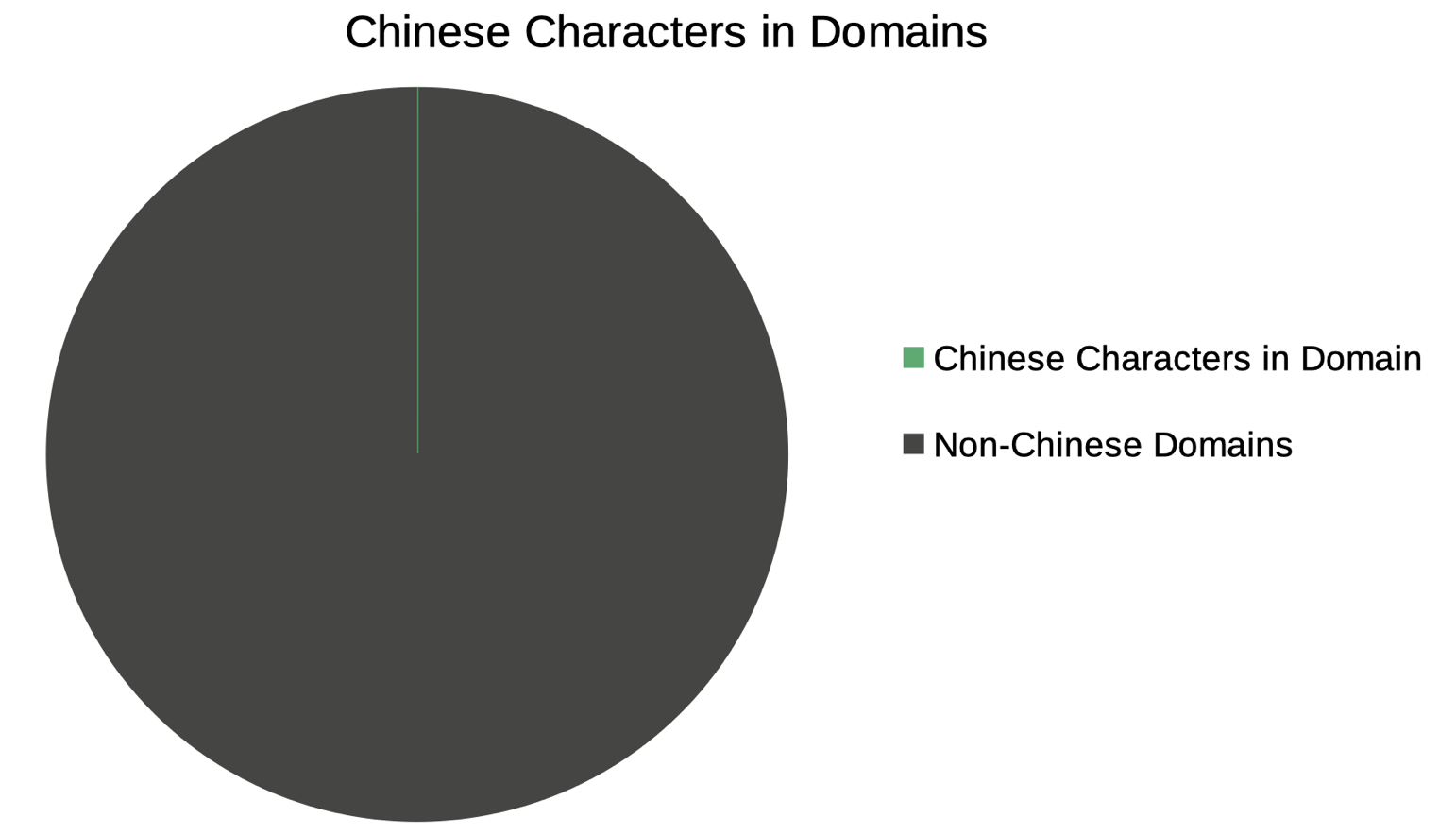

Subdomains and URL Structures

A majority of ranking pages, 58.42%, are found on a ‘www’ subdomain.

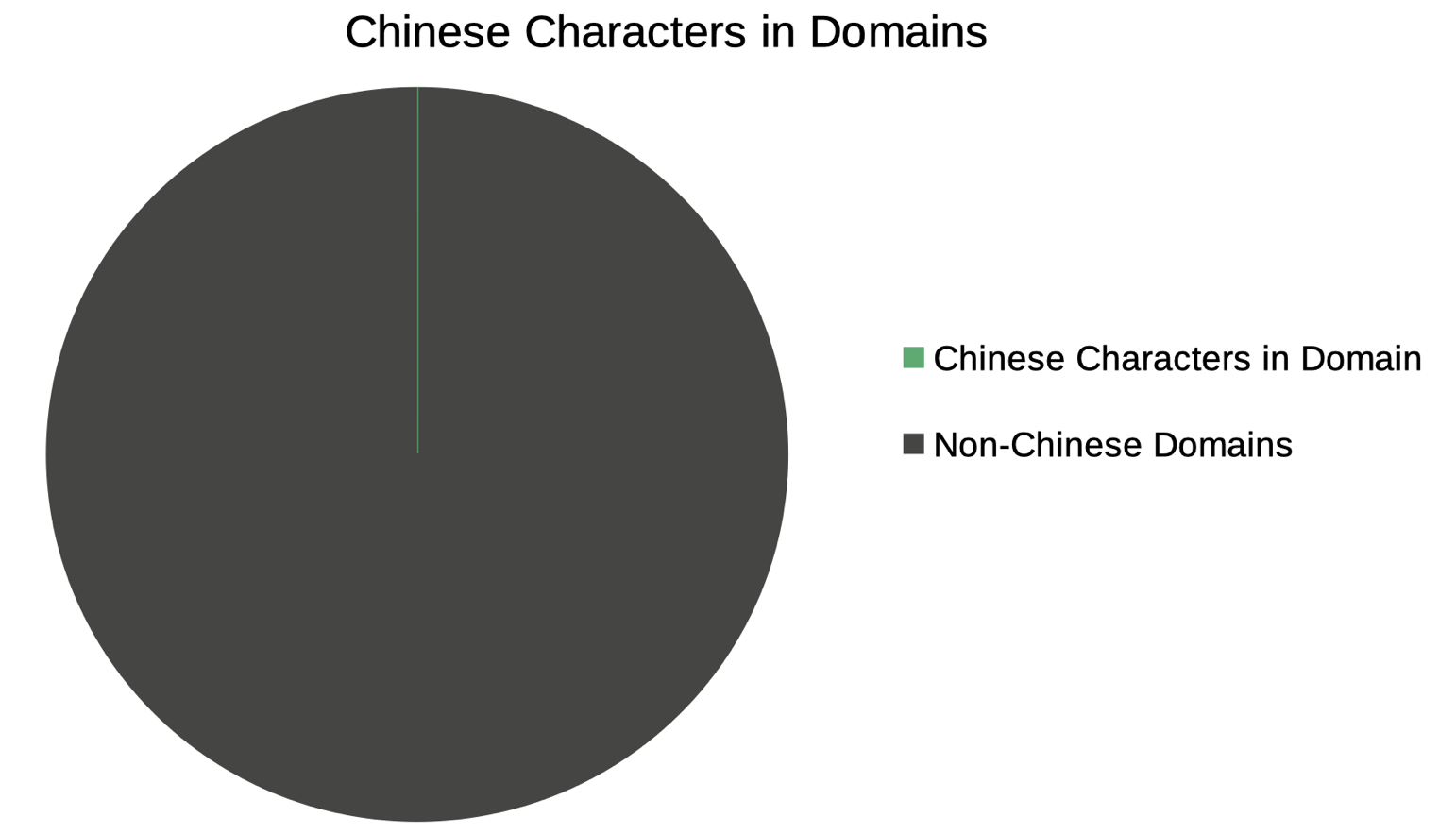

Interestingly, URLs with Chinese characters are rare, constituting only 0.8% of ranking URLs and even fewer in domain names, at just 0.0035%.

Image from author, December 2023


Stephanie Qian from The Egg Company comments,

“Baidu’s official stance discourages the use of Chinese characters in URLs, dispelling myths about their potential ranking benefits.”

URL Length and Language Indicators

Contrary to the belief that shorter URLs rank better on Baidu, our study found the average URL length of well-ranking pages to be 48.25 characters, with 2.3 folders/directories.

This finding suggests that the internal linking structure might play a more crucial role than URL length or proximity to the root domain.

Further, only 2.3% of top-ranking pages use Chinese language indicators in their URLs (for example, a ‘cn.’ subdomain or a ‘/cn/’ folder), supporting the narrative that Baidu favors mono-lingual Chinese websites.

This insight is particularly relevant for multi-lingual international websites aiming to optimize for Baidu.
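The URL metrics discussed in this section (length, folder depth, Chinese characters, and language indicators) can be extracted per URL roughly as follows. This is a sketch under assumptions: the study does not state how folders were counted, and the character range covers only the basic CJK Unified Ideographs block.

```python
import re
from urllib.parse import unquote, urlparse

# Basic CJK Unified Ideographs block; an approximation of "Chinese characters".
CJK = re.compile(r"[\u4e00-\u9fff]")
# The '/cn/' folder indicator mentioned in the text.
CN_FOLDER = re.compile(r"^/cn(/|$)", re.IGNORECASE)


def url_features(url: str) -> dict:
    """Collect simple structural features for one URL."""
    parts = urlparse(url)
    host = (parts.hostname or "").lower()
    path = unquote(parts.path)  # decode percent-encoded characters
    # Folders are the non-empty path segments before the final segment.
    folders = [seg for seg in path.split("/")[:-1] if seg]
    return {
        "length": len(url),
        "folders": len(folders),
        "has_chinese": bool(CJK.search(unquote(url))),
        "language_indicator": host.startswith("cn.") or bool(CN_FOLDER.match(path)),
    }


print(url_features("https://cn.example.com/cn/products/item.html"))
```

Averaging `length` and `folders` over all ranking URLs would give figures comparable to the averages quoted above.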

Onpage Best Practices For Chinese SEO

For Baidu SEO in 2024, it’s not just about including keywords but strategically placing them within well-structured, relevant content. This approach aligns with modern SEO practices where user experience and content relevance reign supreme.

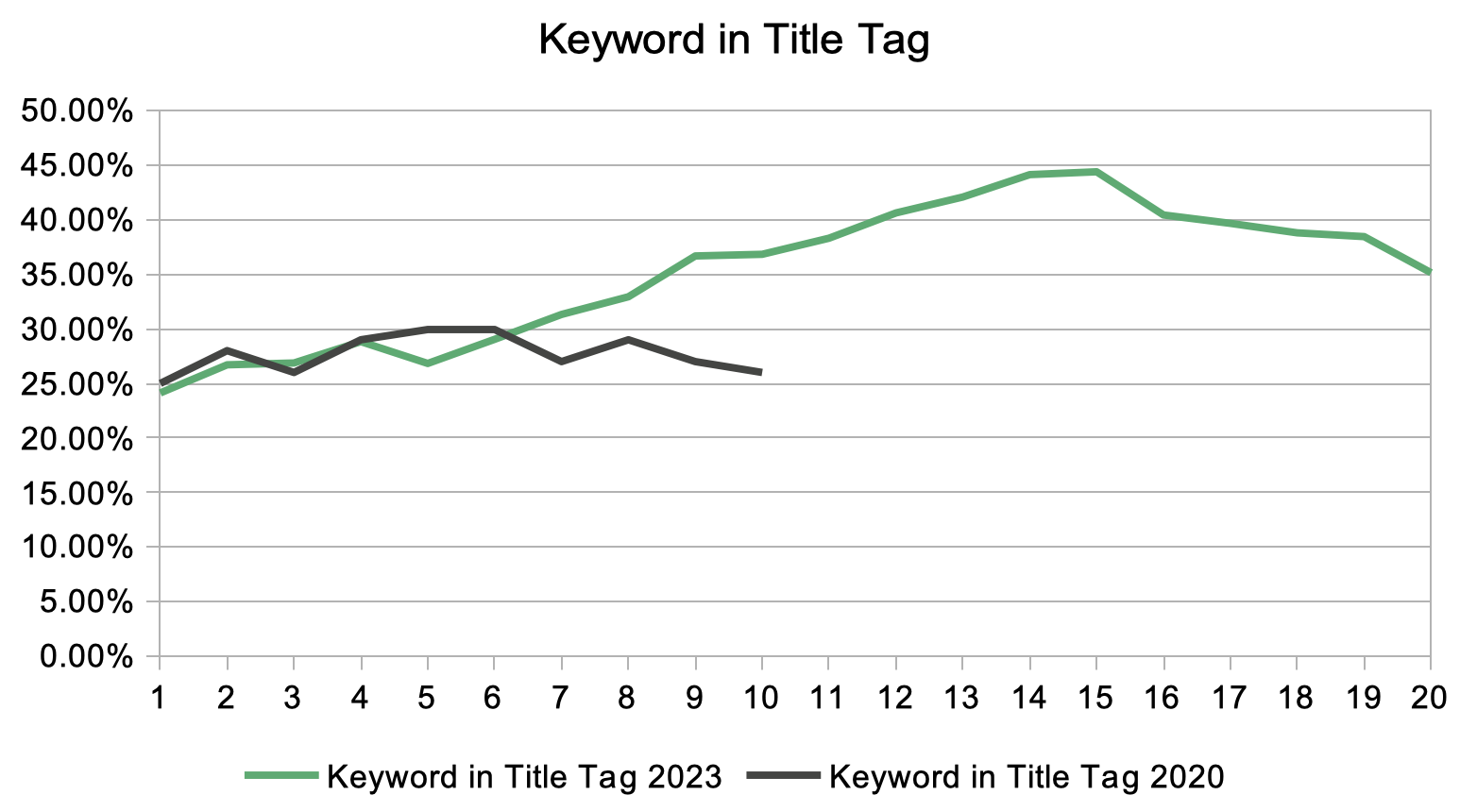

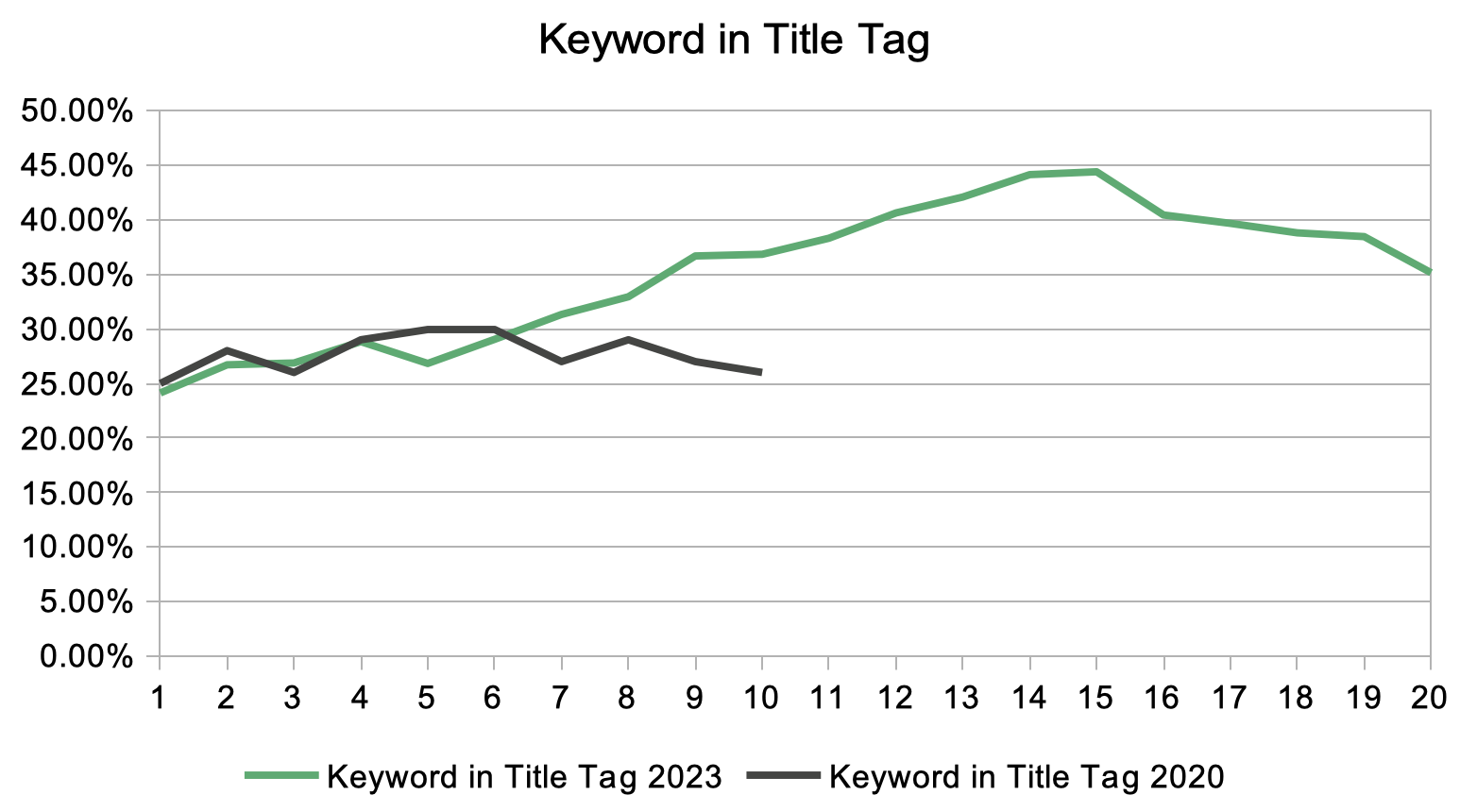

Title Tags And Meta-Descriptions

The average length of title tags on top-ranking pages is 25 Chinese characters, while meta-descriptions average 86 characters. These lengths ensure visibility in Baidu’s SERPs without being truncated.

Interestingly, 36% of top-ranking pages use exact match keywords in the title tags, a figure that rises to 54.4% for more competitive short-head keywords.

Image from author, December 2023


| Exact match keyword in title tag | Whole keyword set | Shorthead keywords | Midtail keywords | Longtail keywords |
| --- | --- | --- | --- | --- |
| Correlation score | -0.1 | -0.17 | -0.14 | -0.02 |
| Percentage | 36% | 54.4% | 41.7% | 18.6% |

For meta-descriptions, 22.2% of top-ranking pages include the exact match keyword, increasing to 34.4% for short-head keywords.

The positioning of the keyword also matters: it’s typically at the front of the title tag but around the 10th position in meta-descriptions.

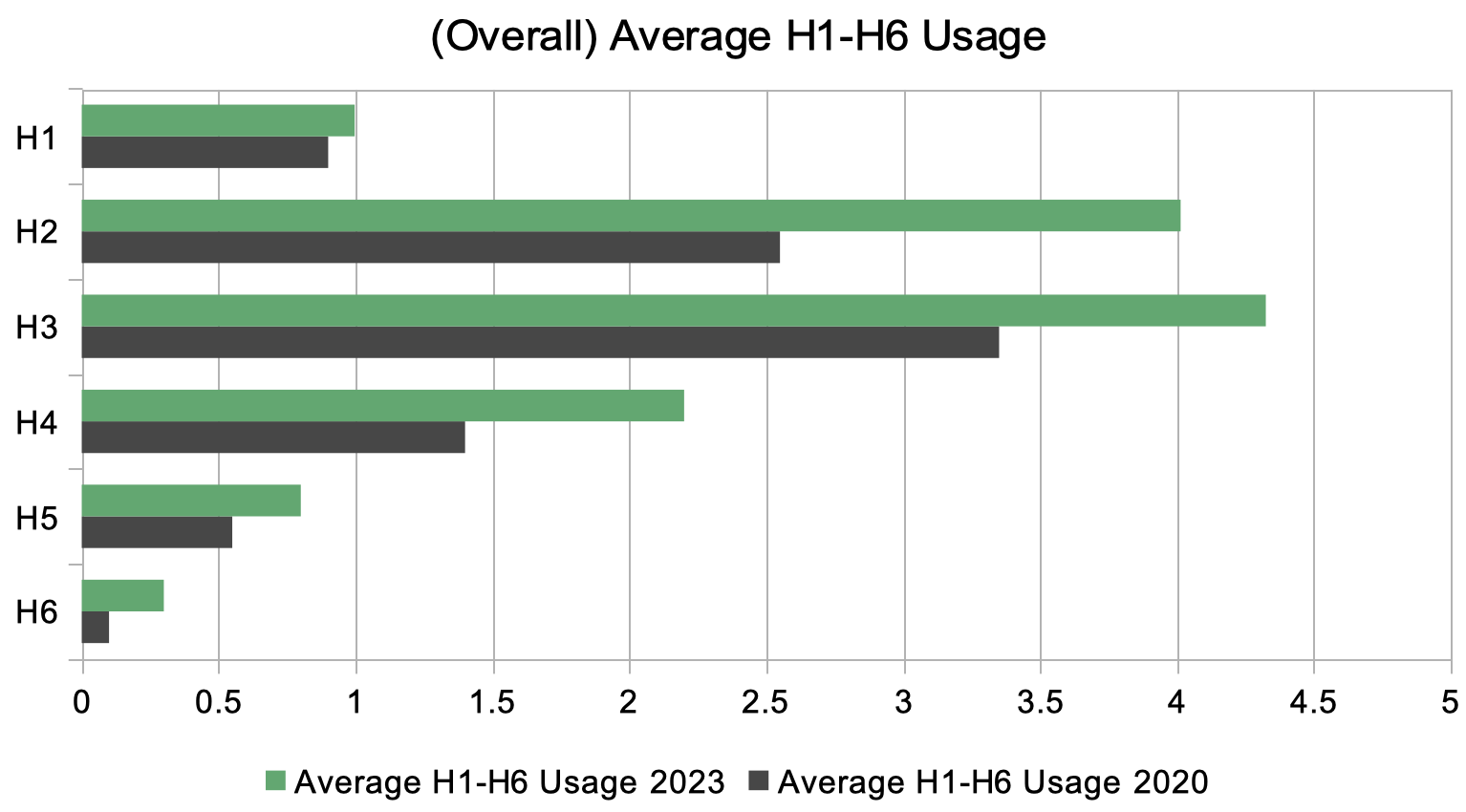

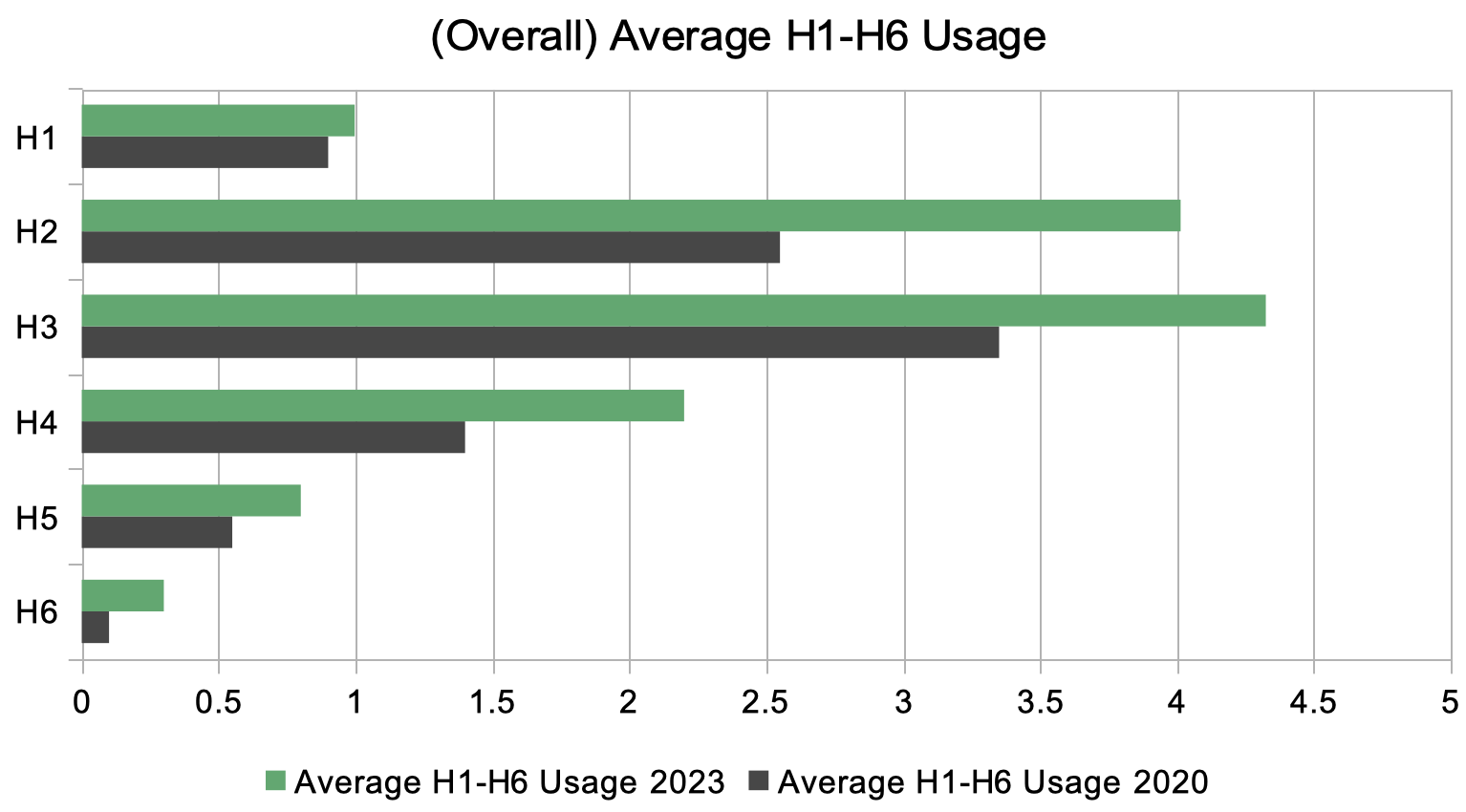

Headings: Hierarchy And Keyword Placement

Headings play a vital role in Baidu SEO:

- 71.2% of top-ranking pages correctly use one H1 tag.

- Nearly half (47.8%) use hierarchical headline structures effectively.

- 21.1% incorporate the exact match keyword in H1, usually around the 4th or 5th position.

- H2 and H3 tags are used by 44% and 46% of top-ranking pages, respectively, averaging around nine headlines each.

- Less commonly used H4 headlines appear in 22.4% of top-ranking pages, while H5 and H6 are used by less than 10%.
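The heading checks listed above can be approximated with Python's standard `html.parser`. In this sketch, "hierarchical" is taken to mean that the first heading is an H1 and no level is skipped when going deeper; that definition is an assumption, not the study's stated criterion.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_report(html: str) -> dict:
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    # Assumed rule: start at H1 and never jump more than one level deeper.
    hierarchical = (
        bool(levels)
        and levels[0] == 1
        and all(b - a <= 1 for a, b in zip(levels, levels[1:]))
    )
    return {
        "single_h1": levels.count(1) == 1,
        "hierarchical": hierarchical,
        "counts": {f"h{n}": levels.count(n) for n in range(1, 7)},
    }


print(heading_report("<h1>A</h1><h2>B</h2><h3>C</h3><h2>D</h2>"))
```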

Image from author, December 2023


Content And Keyword Density

Content length is a significant factor, with top-ranking pages averaging 4929 characters, although the median is 3147 characters.

About 85% of the content is in Chinese Characters, a vital benchmark for international companies localizing content.

Exact match keywords are used in the content of 49% of top-ranking pages, with the likelihood increasing for more competitive keywords (57% for mid-tail and 66% for short-head keywords).

However, keyword density is less than 1% on average, indicating a move away from over-optimized, spammy content.

The first appearance of the keyword is often within the first 18% of the content.
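Keyword density and first-appearance position can be computed in several ways. The sketch below uses a character-based definition (characters covered by the keyword divided by total characters), which suits Chinese text without word segmentation; this definition is an assumption, as the study's exact formula is not given.

```python
def keyword_stats(content: str, keyword: str) -> dict:
    """Character-based keyword density and relative first position."""
    if not content or not keyword:
        return {"occurrences": 0, "density_pct": 0.0, "first_position_pct": None}
    occurrences = content.count(keyword)  # non-overlapping exact matches
    density = 100.0 * occurrences * len(keyword) / len(content)
    first = content.find(keyword)
    first_pct = 100.0 * first / len(content) if first >= 0 else None
    return {
        "occurrences": occurrences,
        "density_pct": density,
        "first_position_pct": first_pct,
    }


# Synthetic 100-character content with one keyword starting at index 10.
content = "a" * 10 + "kw" + "b" * 88
print(keyword_stats(content, "kw"))
```

A `first_position_pct` below 18 would place a page within the range reported for top-ranking pages.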

The Role Of Images

Images are crucial. More than 94% of top-ranking pages feature images, averaging 27.5 per page; 55.4% use alt-tags, and 12.8% include the keyword in at least one alt-tag.

Internal Links

Interestingly, using the keyword in the anchor text of outbound links does not appear to dampen ranking potential, as 20.3% of top-ranking pages do so.

Backlinks: A Key Factor In Baidu’s SEO Rankings

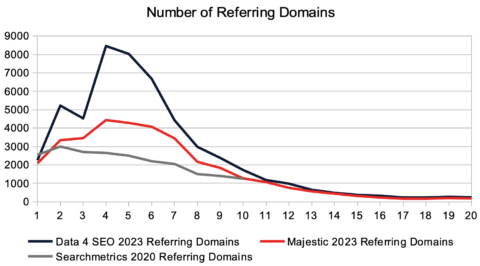

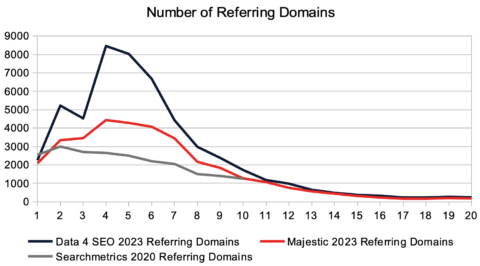

In addition to on-page SEO elements, backlinks play a crucial role in determining rankings on Baidu.

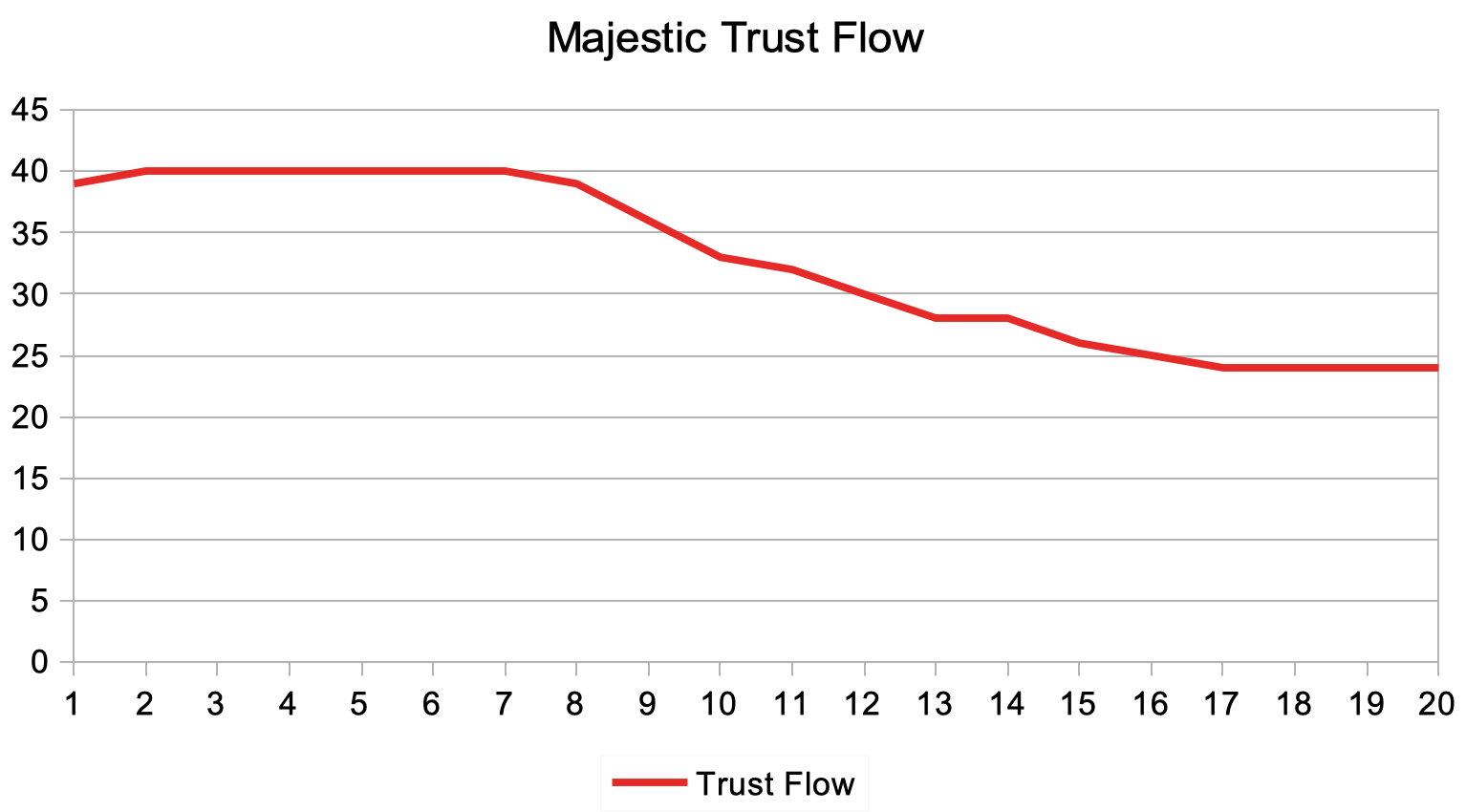

Our analysis, backed by data from DataForSEO and Majestic, reveals a strong positive correlation between the number of referring domains and improved rankings.
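For readers who want to reproduce this kind of analysis, a rank correlation between SERP position and a metric such as referring domains can be computed as sketched below. Spearman's coefficient is assumed here because it is common in ranking-factor studies; the source does not name the statistic it used, and the sample numbers are invented.

```python
from statistics import mean


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no rank information
    return cov / (var_x * var_y) ** 0.5


# Hypothetical SERP positions and referring-domain counts.
positions = [1, 2, 3, 4, 5]
referring_domains = [900, 400, 450, 120, 30]
print(round(spearman(positions, referring_domains), 2))
```

Because position 1 is the best rank, a negative coefficient against the position number means the metric is higher for better-ranking pages; flipping the sign expresses the same relationship as a positive correlation with better rankings.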

Quantity And Quality Of Referring Domains

The quantity of referring domains significantly impacts Baidu rankings. Websites with a higher number of referring domains generally achieve better positions.

Interestingly, data shows that even sites with fewer referring domains can rank well. The 50 lowest-ranked domains had an average of only 1.1 linking domains according to DataForSEO, and 1.3 as per Majestic’s data.

This indicates that while the number of backlinks is important, there are opportunities for sites with fewer links to still perform well on Baidu.

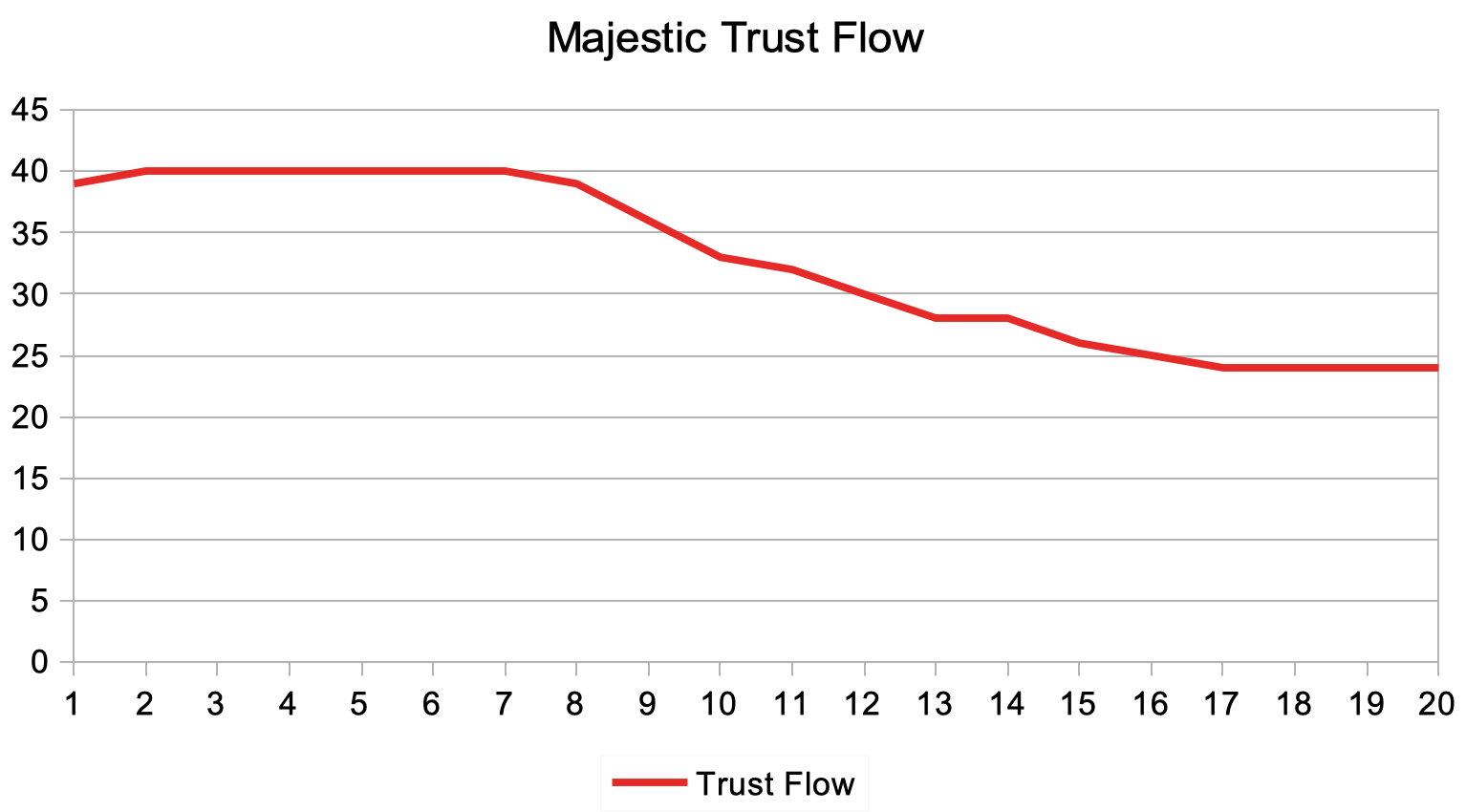

The Impact Of Link Quality

Link quality is equally crucial.

There’s a strong correlation between high-quality links (as measured by Majestic’s Trust Flow/Citation Flow and DataForSEO Rank) and better rankings on Baidu.

Sites with higher-quality links tend to rank more favorably.

Screenshot from Majestic’s Trust Flow/Citation Flow and DataForSEO Rank, December 2023


Additionally, top-ranking sites typically have a lower DataForSEO Backlinks Spam Score, underlining the importance of not just the quantity but the quality and trustworthiness of backlinks.

These insights highlight that a well-rounded backlink profile, combining a healthy number of links with high quality, is essential for achieving and maintaining high rankings on Baidu.

It’s a balance of garnering enough attention to be seen as authoritative yet ensuring that attention comes from reputable, high-quality sources.

This approach aligns with broader SEO best practices, emphasizing the importance of building a natural and reputable backlink profile for sustained SEO success.

Emerging Trends And Practical SEO Strategies For Baidu

As SEO strategies evolve, understanding the impact of specific elements like tags, security protocols, and social media integrations is crucial, especially for Baidu.

The analysis sheds light on these advanced aspects.

Tag Usage And Structure

- List Usage: A significant 86.5% of top-ranking pages employ <ul> lists, averaging 10.8 lists per page with 7.9 points each. Interestingly, 12.9% incorporate the target keyword within these lists.

- Tables: 18.2% of top-ranking pages use <table> tags, but a mere 3.1% include the target keyword within these tables, suggesting tables are less about keyword placement and more about structured data presentation.

- Emphasizing Tags: 9.7% of top-ranking pages use emphasizing tags like <strong>, <em>, and <i>, indicating a selective approach to their usage.

Technical SEO And Security

- HTTPS: Now an official ranking factor for Baidu, the adoption of HTTPS has risen from 55% in 2020 (Searchmetrics’ study) to 69.6% among top-ranking pages.

- Mobile Optimization: A significant trend is the decline in referencing separate mobile pages, from 35% in 2020 to just 10.3% today, reflecting a shift towards responsive design.

- Google Tag Manager: Usage among top-ranking pages has decreased from 8% in 2020 to only 2.5%, possibly reflecting localization preferences in tools and technologies.

Hreflang And International SEO

- Hreflang Usage: Just 1.5% of top-ranking pages utilize Hreflang, with experts like Dan Taylor and Owain Lloyd-Williams noting that Baidu does not support this tag. Simon Lesser’s observation highlights the dominance of domestic Chinese-only sites on Baidu.

Emerging Trends In Code And Markup

- HTML5 Adoption: From less than 30% in 2020, HTML5 usage among top-ranking pages has jumped to 53.2%.

- Schema.org: Despite Baidu’s official non-support, 11% of top-ranking pages implement Schema.org structured data, with expert Owain Lloyd-Williams suggesting its potential benefits, while Adam Di Frisco advises caution due to Baidu’s current stance.
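Markup signals such as the HTML5 doctype, Hreflang annotations, and Schema.org usage can be detected from a page's source. The following is a minimal sketch using the standard `html.parser`; the detection rules (including treating any JSON-LD script as a Schema.org hint) are assumptions and may differ from how the study classified pages.

```python
from html.parser import HTMLParser


class MarkupSignals(HTMLParser):
    """Flags a few markup features while parsing a page."""

    def __init__(self):
        super().__init__()
        self.html5_doctype = False
        self.hreflang = False
        self.schema_org = False

    def handle_decl(self, decl):
        # "<!DOCTYPE html>" is passed in as "DOCTYPE html".
        if decl.strip().lower() == "doctype html":
            self.html5_doctype = True

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and attr.get("hreflang"):
            self.hreflang = True
        # Microdata that points at a schema.org type.
        if "schema.org" in (attr.get("itemtype") or ""):
            self.schema_org = True
        # Assumption: JSON-LD blocks are treated as Schema.org markup.
        if tag == "script" and (attr.get("type") or "").lower() == "application/ld+json":
            self.schema_org = True


def detect_markup(html: str) -> dict:
    parser = MarkupSignals()
    parser.feed(html)
    return {
        "html5_doctype": parser.html5_doctype,
        "hreflang": parser.hreflang,
        "schema_org": parser.schema_org,
    }


page = (
    '<!DOCTYPE html><html><head>'
    '<link rel="alternate" hreflang="zh-cn" href="https://example.com/cn/">'
    '</head><body><div itemscope itemtype="https://schema.org/Article"></div>'
    '</body></html>'
)
print(detect_markup(page))
```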

Social Media Integration

- Chinese Social Media: 60% of top-ranking pages include Chinese social media integrations, indicating its significance in Baidu’s SEO.

- Western Social Media: In contrast, only 2% integrate Western platforms like Facebook or YouTube, reflecting Baidu’s regional focus.

These findings underscore the evolving complexities of Baidu SEO. While some global best practices apply, others require adaptation for this unique market.

The strategic use of tags, embracing new technologies like HTML5, and localizing social media integrations emerge as pivotal elements for achieving top rankings on Baidu.

Beyond The Study: Other Influential Factors In Baidu SEO

In Baidu SEO, certain key ranking factors, while not directly measurable, are critical.

Experienced Baidu SEO professionals recognize the importance of user signals, like click-through rates in the SERPs, as influential for rankings. This aligns with insights from Google’s antitrust trial documents, suggesting a similar approach by Baidu.

Equally important is Baidu’s advancement in AI, especially with Baidu ERNIE, surpassing Google’s BERT in understanding Chinese language nuances.

This suggests that Baidu uses advanced NLP in its content analysis algorithms, making techniques like WDF-IDF, tailored for Chinese, vital for creating high-quality content that resonates with both users and Baidu’s AI-driven analysis.

Debunking 4 Common Baidu SEO Myths

Let’s debunk some of the prevalent Baidu SEO myths with insights from our recent study.

Myth 1: Necessity Of A .cn Domain

The common belief is that without a .cn domain, success on Baidu is unattainable.

However, our study shows that .com domains actually dominate Baidu’s search results. While there is a growing trend of Chinese TLDs in top SERPs, the idea that a .cn domain is essential is more myth than reality.

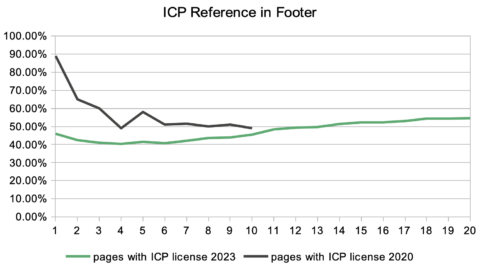

Myth 2: ICP License As A Ranking Requirement

Another myth is that an ICP (Internet Content Provider) license is mandatory for ranking on Baidu.

Contrary to this belief, less than half (48%) of the top-ranking pages have an ICP reference. This is corroborated by our experience with client websites without licenses still achieving rankings.

Myth 3: Only Mainland China-Hosted Websites Rank

The misconception that only websites hosted in Mainland China can rank on Baidu is widespread. In reality, any website accessible in China can rank.

However, it’s worth noting that websites hosted outside of China may experience slower loading speeds, which could impact rankings.

Myth 4: Meta Keywords As A Ranking Factor

Many believe that meta keywords are still a relevant ranking factor for Baidu.

Despite this belief, Baidu’s official stance, as noted by spokesperson Lee, is that meta keywords are no longer considered in their ranking algorithm.

These insights hopefully help clear the air around Baidu SEO. It is important to adapt to factual strategies rather than following outdated myths.

Conclusion: Navigating The Future Of Baidu SEO

As we demystify the landscape of Baidu SEO for 2024, it’s evident that success hinges on a blend of embracing new trends and dismissing outdated myths.

From recognizing the dominance of .com domains, to the rise of .cn and .com.cn TLDs, to understanding the non-essential (but recommended) nature of ICP licenses and the reduced emphasis on meta keywords, SEO strategies must evolve with these insights.

The rise of AI, the significance of user signals, and the nuanced approach to content and backlinks underscore the need for sophisticated, data-driven strategies.

As Baidu continues to refine its algorithms, SEO professionals must adapt, ensuring their tactics not only align with current best practices but are also poised to leverage future advancements.

This journey through Baidu’s SEO terrain equips practitioners with the knowledge and tools to navigate the complexities of ranking on China’s leading search engine, setting the stage for success in the dynamic world of digital marketing.

*We invite you to read the full Baidu SEO Ranking Factors Study we created for you and draw your own conclusions.

**You can also read and compare to the Searchmetrics’ Baidu Ranking Factors Study from 2020.


Featured Image: myboys.me/Shutterstock
