SEO

How To Get Google To Index Your Site Quickly

If there is one thing in the world of SEO that every SEO professional wants to see, it’s the ability for Google to crawl and index their site quickly.

Indexing is important. It fulfills many initial steps to a successful SEO strategy, including making sure your pages appear on Google search results.

But, that’s only part of the story.

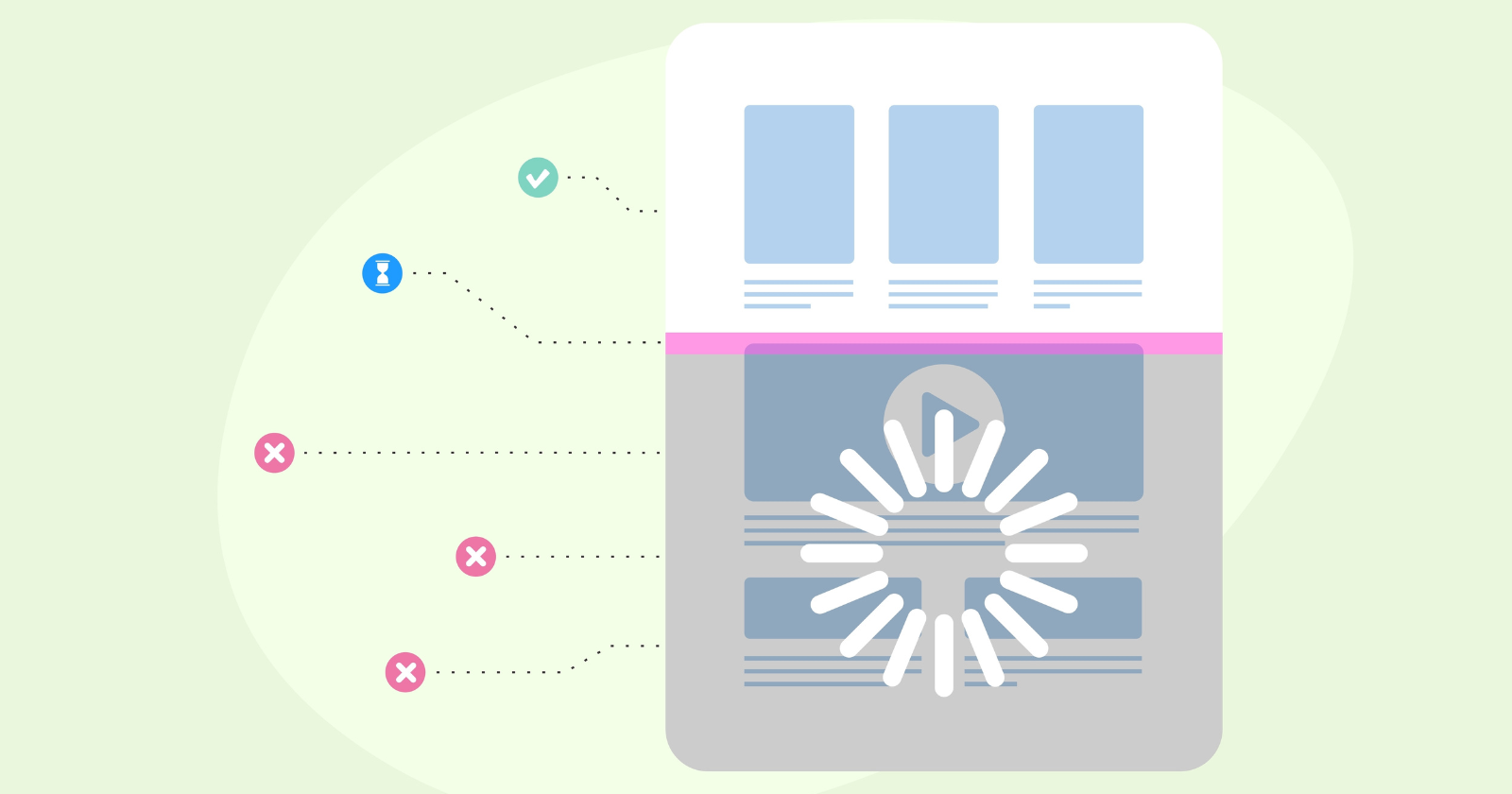

Indexing is but one step in a full series of steps that are required for an effective SEO strategy.

The entire process can be boiled down to three main steps:

- Crawling.

- Indexing.

- Ranking.

Although it can be boiled down that far, these are not necessarily the only steps that Google uses. The actual process is much more complicated.

If you’re confused, let’s look at a few definitions of these terms first.

Why definitions?

They are important because if you don’t know what these terms mean, you might run the risk of using them interchangeably – which is the wrong approach to take, especially when you are communicating what you do to clients and stakeholders.

What Is Crawling, Indexing, And Ranking, Anyway?

Quite simply, they are the steps in Google’s process for discovering web pages across the World Wide Web and showing them, in order of relevance, in its search results.

Every page discovered by Google goes through the same process, which includes crawling, indexing, and ranking.

First, Google crawls your page to see if it’s worth including in its index.

The step after crawling is known as indexing.

Assuming that your page passes the first evaluations, this is the step in which Google adds your web page to its index: a categorized database of all the pages it has crawled so far.

Ranking is the last step in the process.

This is where Google decides which pages to show for your query, and in what order. While it might take some seconds to read the above, Google performs this process – in the majority of cases – in a fraction of a second.
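To make the three stages concrete, here is a deliberately tiny Python sketch on a made-up, in-memory “web”. Everything in it is an assumption for illustration (the page dictionary, breadth-first link following, and scoring by simple word counts). It shows the division of labor between crawling, indexing, and ranking, not how Google’s systems actually work.

```python
from collections import defaultdict

# Hypothetical in-memory "web": URL -> (page text, outgoing internal links).
WEB = {
    "/": ("welcome to our seo guide", ["/crawling", "/indexing"]),
    "/crawling": ("crawling discovers pages by following links", ["/indexing"]),
    "/indexing": ("indexing stores crawled pages so they can be searched", ["/ranking"]),
    "/ranking": ("ranking orders indexed pages for a query", []),
    "/orphan": ("nobody links to this page", []),
}


def crawl(start):
    """Crawling: discover pages by following links, breadth-first, from `start`."""
    seen, queue, order = {start}, [start], []
    while queue:
        url = queue.pop(0)
        order.append(url)
        for link in WEB[url][1]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


def build_index(urls):
    """Indexing: map each word to the crawled pages that contain it, with counts."""
    index = defaultdict(dict)
    for url in urls:
        for word in WEB[url][0].split():
            index[word][url] = index[word].get(url, 0) + 1
    return index


def rank(index, query):
    """Ranking: order indexed pages by how often they contain the query words."""
    scores = defaultdict(int)
    for word in query.split():
        for url, count in index.get(word, {}).items():
            scores[url] += count
    return sorted(scores, key=lambda url: (-scores[url], url))


crawled = crawl("/")
print(crawled)  # "/orphan" is never discovered
print(rank(build_index(crawled), "indexed pages"))
```

Notice that `/orphan` never reaches the index because nothing links to it. That is the orphan-page problem covered later in this article.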

Finally, there is rendering. Google renders the page, running its code much as a web browser would, so that it sees the content the way users see it. That rendered version is what actually gets evaluated for indexing.

If anything, rendering is a process that is just as important as crawling, indexing, and ranking.

Let’s look at an example.

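Say that you have a page whose initial HTML contains an index robots meta tag, but whose JavaScript changes it to noindex once the page renders. Google only sees the noindex after rendering, so a page that looks indexable in its source code may still be kept out of the index.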
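One way to catch this situation is to compare the robots directives in the raw HTML with those in the rendered HTML. For example, you could use the DOM saved from a headless browser, or the rendered HTML shown by Search Console’s URL Inspection tool. The Python sketch below assumes you already have both HTML strings; the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def rendering_flips_to_noindex(initial_html, rendered_html):
    """True if the raw HTML is indexable but the rendered DOM says noindex."""
    return ("noindex" not in robots_directives(initial_html)
            and "noindex" in robots_directives(rendered_html))


# Hypothetical example: JavaScript rewrites the robots meta tag after load.
initial = '<head><meta name="robots" content="index, follow"></head>'
rendered = '<head><meta name="robots" content="noindex, follow"></head>'
print(rendering_flips_to_noindex(initial, rendered))  # True
```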

Sadly, there are many SEO pros who don’t know the difference between crawling, indexing, ranking, and rendering.

They also use the terms interchangeably, but that is the wrong way to do it – and only serves to confuse clients and stakeholders about what you do.

As SEO professionals, we should be using these terms to further clarify what we do, not to create additional confusion.

Anyway, moving on.

If you are performing a Google search, the one thing that you’re asking Google to do is to provide you results containing all relevant pages from its index.

Often, millions of pages could match what you’re searching for, so Google uses ranking algorithms to determine which results are the best and most relevant to show.

So, metaphorically speaking: Crawling is gearing up for the challenge, indexing is performing the challenge, and finally, ranking is winning the challenge.

While those are simple concepts, Google algorithms are anything but.

The Page Not Only Has To Be Valuable, But Also Unique

If you are having problems with getting your page indexed, you will want to make sure that the page is valuable and unique.

But, make no mistake: What you consider valuable may not be the same thing as what Google considers valuable.

Google is also unlikely to index low-quality pages, because these pages hold no value for its users.

If you have been through a page-level technical SEO checklist, and everything checks out (meaning the page is indexable and doesn’t suffer from any quality issues), then you should ask yourself: Is this page really – and we mean really – valuable?

Reviewing the page with a fresh set of eyes can help you identify content issues you wouldn’t otherwise find, including things you didn’t realize were missing.

One way to identify these particular types of pages is to perform an analysis on pages that are of thin quality and have very little organic traffic in Google Analytics.

Then, you can make decisions on which pages to keep, and which pages to remove.

However, it’s important to note that you don’t just want to remove pages that have no traffic. They can still be valuable pages.

If they cover the topic and are helping your site become a topical authority, then don’t remove them.

Doing so will only hurt you in the long run.

Have A Regular Plan That Considers Updating And Re-Optimizing Older Content

Google’s search results change constantly – and so do the websites within these search results.

Most websites in the top 10 results on Google are always updating their content (at least they should be), and making changes to their pages.

It’s important to track these changes and spot-check the search results that are changing, so you know what to change the next time around.

A regular review of your content (monthly or quarterly, depending on how large your site is) is crucial to staying current and making sure that your pages continue to outperform the competition.

If your competitors add new content, find out what they added and how you can beat them. If they made changes to their keywords for any reason, find out what changes those were and beat them.

No SEO plan is ever a realistic “set it and forget it” proposition. You have to be prepared to stay committed to regular content publishing along with regular updates to older content.

Remove Low-Quality Pages And Create A Regular Content Removal Schedule

Over time, you might find by looking at your analytics that your pages do not perform as expected, and they don’t have the metrics that you were hoping for.

In some cases, pages are also filler that doesn’t contribute to the blog’s overall topic.

These low-quality pages are also usually not fully optimized: they don’t conform to SEO best practices.

You typically want to make sure that these pages are properly optimized and cover all the topics that are expected of that particular page.

Ideally, you want to have six elements of every page optimized at all times:

- The page title.

- The meta description.

- Internal links.

- Page headings (H1, H2, H3 tags, etc.).

- Images (image alt, image title, physical image size, etc.).

- Schema.org markup.
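If you want to spot-check these elements at scale, a short script can flag the obvious gaps. The Python sketch below does this with only the standard library. Its pass/fail rules are our own assumptions: at least one internal link, an `<h1>` present, and Schema.org markup supplied as a JSON-LD block. It does not check image titles or physical image sizes.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class OnPageAudit(HTMLParser):
    """Collect the on-page elements listed above from one HTML document."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.title = ""
        self.meta_description = ""
        self.internal_links = 0
        self.headings = []
        self.images_missing_alt = 0
        self.has_json_ld = False
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attr.get("name", "").lower() == "description":
            self.meta_description = attr.get("content", "").strip()
        elif tag == "a" and attr.get("href"):
            target = urljoin(self.page_url, attr["href"])  # resolve relative links
            if urlparse(target).netloc == urlparse(self.page_url).netloc:
                self.internal_links += 1
        elif tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings.append(tag)
        elif tag == "img" and not attr.get("alt", "").strip():
            self.images_missing_alt += 1
        elif tag == "script" and attr.get("type", "").lower() == "application/ld+json":
            self.has_json_ld = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(page_url, html):
    """Return human-readable problems; an empty list means every check passed."""
    a = OnPageAudit(page_url)
    a.feed(html)
    problems = []
    if not a.title.strip():
        problems.append("missing <title>")
    if not a.meta_description:
        problems.append("missing meta description")
    if a.internal_links == 0:
        problems.append("no internal links")
    if "h1" not in a.headings:
        problems.append("no <h1>")
    if a.images_missing_alt:
        problems.append(f"{a.images_missing_alt} image(s) without alt text")
    if not a.has_json_ld:
        problems.append("no JSON-LD (Schema.org) block")
    return problems


page = """<html><head><title>Crawl budget guide</title></head>
<body><h1>Crawl budget</h1><a href="/indexing/">Indexing</a><img src="a.png"></body></html>"""
print(audit("https://domainnameexample.com/crawl-budget/", page))
```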

But, just because a page is not fully optimized does not always mean it is low quality. Does it contribute to the overall topic? Then you don’t want to remove that page.

It’s a mistake to just remove pages all at once that don’t fit a specific minimum traffic number in Google Analytics or Google Search Console.

Instead, you want to find pages that underperform on every metric across both platforms, then prioritize which pages to remove based on relevance and whether they contribute to the topic and your overall authority.

If they do not, then you want to remove them entirely. This will help you eliminate filler posts and create a better overall plan for keeping your site as strong as possible from a content perspective.
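Here is a sketch of that prioritization. It assumes you have exported organic sessions from Google Analytics and clicks and impressions from Google Search Console into one table, and marked each page by hand as on-topic or not. The field names and thresholds below are hypothetical. The point is that a page must underperform on every metric *and* be off-topic before it becomes a removal candidate.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    ga_sessions: int       # organic sessions (Google Analytics export)
    gsc_clicks: int        # clicks (Google Search Console export)
    gsc_impressions: int   # impressions (Google Search Console export)
    on_topic: bool         # your editorial call: does it support topical authority?


def removal_candidates(pages, max_sessions=10, max_clicks=5, max_impressions=100):
    """Off-topic pages that underperform on every metric, weakest first.

    The default thresholds are illustrative; tune them to your site's size.
    """
    weak = [
        p for p in pages
        if p.ga_sessions <= max_sessions
        and p.gsc_clicks <= max_clicks
        and p.gsc_impressions <= max_impressions
        and not p.on_topic  # on-topic pages are kept even with little traffic
    ]
    return sorted(weak, key=lambda p: (p.gsc_impressions, p.ga_sessions, p.url))


pages = [
    PageStats("/old-company-picnic/", 2, 0, 15, on_topic=False),
    PageStats("/what-is-crawl-budget/", 4, 1, 60, on_topic=True),
    PageStats("/random-filler-post/", 0, 0, 5, on_topic=False),
    PageStats("/indexing-guide/", 900, 300, 12000, on_topic=True),
]
print([p.url for p in removal_candidates(pages)])
```

The low-traffic but on-topic `/what-is-crawl-budget/` page is deliberately kept, in line with the advice above.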

Also, making sure that your page is written to target topics that your audience is interested in will go a long way in helping.

Make Sure Your Robots.txt File Does Not Block Crawling To Any Pages

Are you finding that Google is not crawling or indexing any pages on your website at all? If so, then you may have accidentally blocked crawling entirely.

There are two places to check this. The first is your WordPress dashboard under Settings > Reading, where “Discourage search engines from indexing this site” should be unchecked. The second is the robots.txt file itself.

To check your robots.txt file, enter its address (for example, https://domainnameexample.com/robots.txt, using your own domain) into your web browser’s address bar.

Assuming your site is properly configured, going there should display your robots.txt file without issue.

In robots.txt, if you have accidentally disabled crawling entirely, you should see the following line:

User-agent: *
Disallow: /

The forward slash in the Disallow line matches every URL path on your site, starting from the root, so crawlers are told not to crawl any page.

The asterisk after User-agent applies that rule to all crawlers, so every bot that respects robots.txt is blocked from crawling your site.
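You can also test a robots.txt file programmatically. This Python sketch uses the standard library’s `urllib.robotparser` against hypothetical robots.txt contents. That parser follows the original robots.txt rules (the first matching rule wins, and there are no wildcards inside paths) rather than Google’s extensions. Treat it as a quick sanity check, not a reproduction of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url):
    """Check whether the given robots.txt text lets Googlebot crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


blocked = "User-agent: *\nDisallow: /\n"   # crawling disabled site-wide
open_site = "User-agent: *\nDisallow:\n"   # empty Disallow allows everything

print(googlebot_allowed(blocked, "https://domainnameexample.com/any-page/"))    # False
print(googlebot_allowed(open_site, "https://domainnameexample.com/any-page/"))  # True
```

To test your live file instead, `RobotFileParser` also provides `set_url()` and `read()`, which fetch and parse the robots.txt at a given address.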

Check To Make Sure You Don’t Have Any Rogue Noindex Tags

Without proper oversight, it’s possible to let noindex tags get ahead of you.

Take the following situation, for example.

You have a lot of content that you want to keep indexed. But somebody installs a script and, unbeknownst to you, accidentally tweaks it to the point where it noindexes a high volume of pages.

And what happened that caused this volume of pages to be noindexed? The script automatically added a whole bunch of rogue noindex tags.

Thankfully, this particular situation can be remedied by doing a relatively simple SQL database find and replace if you’re on WordPress. This can help ensure that these rogue noindex tags don’t cause major issues down the line.

The key to correcting these types of errors, especially on high-volume content websites, is to ensure that you have a way to correct any errors like this fairly quickly – at least in a fast enough time frame that it doesn’t negatively impact any SEO metrics.
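Before running a find and replace, it helps to know exactly which URLs are affected. The Python sketch below assumes you already have a crawl export mapping each URL to its HTML and its X-Robots-Tag response header (both hypothetical inputs here). It flags any page carrying noindex in either place, or `none`, which Google treats as noindex, nofollow.

```python
from html.parser import HTMLParser

NOINDEX_VALUES = ("noindex", "none")


class _RobotsMeta(HTMLParser):
    """Record whether any robots/googlebot meta tag contains noindex or none."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            values = {d.strip().lower() for d in attr.get("content", "").split(",")}
            self.noindex = self.noindex or bool(values & set(NOINDEX_VALUES))


def _header_noindex(x_robots):
    """True if an X-Robots-Tag value has noindex/none.

    Any user-agent prefix such as 'googlebot:' is stripped, so bot-specific
    rules are counted too.
    """
    for directive in x_robots.split(","):
        if directive.split(":")[-1].strip().lower() in NOINDEX_VALUES:
            return True
    return False


def find_noindexed(pages):
    """`pages` maps URL -> (html, X-Robots-Tag header value or "")."""
    flagged = []
    for url, (html, x_robots) in sorted(pages.items()):
        parser = _RobotsMeta()
        parser.feed(html)
        if parser.noindex or _header_noindex(x_robots):
            flagged.append(url)
    return flagged


pages = {
    "/guide/": ('<meta name="robots" content="index, follow">', ""),
    "/pricing/": ('<meta name="robots" content="noindex">', ""),
    "/report.pdf": ("", "noindex"),
}
print(find_noindexed(pages))
```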

Make Sure That Pages That Are Not Indexed Are Included In Your Sitemap

If you don’t include the page in your sitemap, and it’s not interlinked anywhere else on your site, then you may not have any opportunity to let Google know that it exists.

When you are in charge of a large website, this can get away from you, especially if proper oversight is not exercised.

For example, say that you have a large, 100,000-page health website. Maybe 25,000 pages never see Google’s index because they just aren’t included in the XML sitemap for whatever reason.

That is a big number.

Instead, you have to make sure that these 25,000 pages are included in your sitemap, because they can add significant value to your site overall.

Even if they aren’t performing, if these pages are closely related to your topic and well-written (and high-quality), they will add authority.

Plus, it could also be that the internal linking gets away from you, especially if you are not programmatically taking care of this indexation through some other means.

Adding pages that are not indexed to your sitemap can help make sure that your pages are all discovered properly, and that you don’t have significant issues with indexing (crossing off another checklist item for technical SEO).
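A quick way to find pages that never made it into the sitemap is to diff the sitemap against a list of published URLs, such as an export from your CMS. This Python sketch assumes a single standard `<urlset>` sitemap (not a sitemap index) and uses hypothetical URLs. `build_sitemap()` is a minimal helper for generating entries for whatever turns up missing.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return every <loc> listed in a <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def missing_from_sitemap(published, sitemap_xml):
    """Published URLs (e.g. from a CMS export) that the sitemap does not list."""
    return sorted(set(published) - sitemap_urls(sitemap_xml))


def build_sitemap(urls):
    """Render a minimal <urlset> sitemap for the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS["sm"])
    for u in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = u
    return ET.tostring(urlset, encoding="unicode")


sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://domainnameexample.com/</loc></url>
  <url><loc>https://domainnameexample.com/symptoms/</loc></url>
</urlset>"""
published = {
    "https://domainnameexample.com/",
    "https://domainnameexample.com/symptoms/",
    "https://domainnameexample.com/treatments/",
}
missing = missing_from_sitemap(published, sitemap)
print(missing)
print(build_sitemap(missing))
```

For a site the size of the 100,000-page example, you would split the URLs across several sitemap files and list them in a sitemap index. The sitemaps.org protocol caps each file at 50,000 URLs and 50 MB uncompressed.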

Ensure That Rogue Canonical Tags Do Not Exist On-Site

If you have rogue canonical tags, these canonical tags can prevent your site from getting indexed. And if you have a lot of them, then this can further compound the issue.

For example, let’s say that you have a site in which your canonical tags are supposed to be in the format of the following:

But they are actually showing up as:

This is an example of a rogue canonical tag. These tags can wreak havoc on your site by causing problems with indexing. The problems with these types of canonical tags can result in:

- Google not seeing your pages properly – Especially if the final destination page returns a 404 or a soft 404 error.

- Confusion – Google may pick up pages that are not going to have much of an impact on rankings.

- Wasted crawl budget – When your canonical tags are improperly set, Google can spend its crawl budget on the wrong pages. When the error compounds itself across many thousands of pages, congratulations! You have wasted your crawl budget on convincing Google these are the proper pages to crawl, when, in fact, Google should have been crawling other pages.

The first step towards repairing these is finding the error and reining in your oversight. Make sure that all pages that have an error have been discovered.

Then, create and implement a plan to continue correcting these pages in enough volume (depending on the size of your site) that it will have an impact. This can differ depending on the type of site you are working on.
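Finding the errors is the part that is easiest to automate. The Python sketch below assumes the intended format is a self-referencing canonical, where each page canonicalizes to its own URL, unless you pass a different expected URL. The page URLs, including the staging path used as the rogue example, are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        rel = attr.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rel and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_problem(page_url: str, html: str, expected: Optional[str] = None) -> Optional[str]:
    """Describe a canonical issue, or return None if the tag looks right.

    `expected` defaults to the page's own URL (a self-referencing canonical);
    pass another URL for pages that intentionally canonicalize elsewhere.
    """
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "no canonical tag"
    if len(parser.canonicals) > 1:
        return f"{len(parser.canonicals)} canonical tags"
    actual = urljoin(page_url, parser.canonicals[0])  # resolve relative hrefs
    target = expected or page_url
    if actual != target:
        return f"canonical points to {actual}, expected {target}"
    return None


page_url = "https://domainnameexample.com/page-name/"
good = '<link rel="canonical" href="https://domainnameexample.com/page-name/">'
rogue = '<link rel="canonical" href="https://domainnameexample.com/staging/page-name/">'
print(canonical_problem(page_url, good))
print(canonical_problem(page_url, rogue))
```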

Make Sure That The Non-Indexed Page Is Not Orphaned

An orphan page is a page that appears neither in the sitemap, nor in internal links, nor in the navigation, so Google cannot discover it through any of these methods.

In other words, it’s an orphaned page that isn’t properly identified through Google’s normal methods of crawling and indexing.

How do you fix this?

If you identify a page that’s orphaned, then you need to un-orphan it. You can do this by including your page in the following places:

- Your XML sitemap.

- Your top menu navigation.

- Internal links from important pages on your site (ideally plenty of them).

By doing this, you have a greater chance of ensuring that Google will crawl and index that orphaned page, including it in the overall ranking calculation.
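Orphan detection boils down to set arithmetic once you have three lists:

- Every page you know exists (say, from your CMS).
- The internal link graph (from a site crawl, with navigation links included).
- Your sitemap URLs.

In this Python sketch, all three inputs are hypothetical. A page counts as linked only if it is reachable by following internal links from the home page, which is a slightly stricter test than having at least one inbound link.

```python
from collections import deque


def reachable(link_graph, start):
    """Pages reachable by following internal links from `start` (breadth-first)."""
    seen, queue = {start}, deque([start])
    while queue:
        for target in link_graph.get(queue.popleft(), set()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphaned(all_pages, link_graph, sitemap, home="/"):
    """Known pages that are neither reachable via internal links nor in the sitemap."""
    return sorted(set(all_pages) - reachable(link_graph, home) - set(sitemap))


all_pages = {"/", "/blog/", "/blog/crawling/", "/blog/old-launch-post/", "/landing/spring-promo/"}
links = {"/": {"/blog/"}, "/blog/": {"/blog/crawling/"}}
sitemap = {"/", "/blog/", "/blog/crawling/", "/landing/spring-promo/"}
print(orphaned(all_pages, links, sitemap))
```

Pages such as `/landing/spring-promo/`, which are in the sitemap but have no internal links, are worth a second look even though they are not strictly orphaned.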

Repair All Nofollow Internal Links

Believe it or not, nofollow literally tells Google not to follow that particular link. If you have a lot of nofollowed internal links, you inhibit Google’s crawling and indexing of your site’s pages.

In fact, there are very few situations where you should nofollow an internal link. Adding nofollow to your internal links is something that you should do only if absolutely necessary.

When you think about it, as the site owner, you have control over your internal links. Why would you nofollow an internal link unless it’s a page on your site that you don’t want visitors to see?

For example, think of a private webmaster login page. If users don’t typically access this page, you don’t want to include it in normal crawling and indexing. So, it should be noindexed, nofollowed, and removed from all internal links anyway.

But, if you have a ton of nofollow links, this could raise a quality question in Google’s eyes, in which case your site might get flagged as being a more unnatural site (depending on the severity of the nofollow links).

If you are including nofollows on your links, then it would probably be best to remove them.

By adding these nofollows, you are telling Google not to trust these particular links.

More clues as to why these links are not quality internal links come from how Google currently treats nofollow links.

You see, for a long time there was only one type of nofollow link, until 2019, when Google changed the rules and how nofollow links are classified.

With the newer nofollow rules, Google has added new classifications for different types of nofollow links.

These new classifications are rel="ugc" for user-generated content and rel="sponsored" for advertisements and other paid links.

Anyway, with these new nofollow classifications, if you don’t include them, this may actually be a quality signal that Google uses in order to judge whether or not your page should be indexed.

You may as well plan on including them if you do heavy advertising or UGC such as blog comments.

And because blog comments tend to generate a lot of automated spam, this is the perfect time to flag these nofollow links properly on your site.
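To audit this, you can parse a page’s links and list the internal links that carry nofollow. The same pass can collect the rel hints on external links, so you can confirm that ads are marked sponsored and comment links are marked ugc. This is a Python sketch with hypothetical markup and URLs. It checks one page at a time and cannot tell which external links are actually paid or user-generated, so the second list still needs a human review.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

LINK_HINTS = {"nofollow", "ugc", "sponsored"}


class LinkRelAudit(HTMLParser):
    """List nofollowed internal links and the rel hints on external links."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.nofollowed_internal = []  # candidates for removing nofollow
        self.external = []             # (url, hints) pairs for manual review

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        if tag != "a" or not attr.get("href"):
            return
        url = urljoin(self.page_url, attr["href"])  # resolve relative links
        rel = set(attr.get("rel", "").lower().split())
        if urlparse(url).netloc == self.host:
            if "nofollow" in rel:
                self.nofollowed_internal.append(url)
        else:
            self.external.append((url, sorted(rel & LINK_HINTS)))


html = """
<a href="/pricing/" rel="nofollow">Pricing</a>
<a href="/blog/indexing/">Indexing guide</a>
<a href="https://advertiser.example/" rel="sponsored">Ad</a>
<a href="https://commenter.example/" rel="ugc nofollow">Commenter</a>
"""
audit = LinkRelAudit("https://domainnameexample.com/blog/")
audit.feed(html)
print(audit.nofollowed_internal)
print(audit.external)
```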

Make Sure That You Add Powerful Internal Links

There is a difference between a run-of-the-mill internal link and a “powerful” internal link.

A run-of-the-mill internal link is just an internal link. Adding many of them may – or may not – do much for the target page’s rankings.

But, what if you add links from pages that have backlinks that are passing value? Even better!

What if you add links from more powerful pages that are already valuable?

That is how you want to add internal links.

Why are internal links so great for SEO reasons? Because of the following:

- They help users to navigate your site.

- They pass authority from other pages that have strong authority.

- They also help define the overall website’s architecture.

Before randomly adding internal links, you want to make sure that they are powerful and have enough value that they can help the target pages compete in the search engine results.
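One way to shortlist “powerful” link sources is to require topical overlap with the target page, then prefer the pages with the most external authority. In this Python sketch, referring-domain counts (as you might export them from a backlink tool) stand in for authority, and hand-assigned topic tags stand in for relevance. Both the data and the scoring are assumptions, not a known Google formula.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    referring_domains: int                   # assumed: exported from a backlink tool
    topics: set = field(default_factory=set)  # topic tags you assign by hand


def best_link_sources(pages, target, limit=3):
    """Strongest topically related pages to link *from* to `target`.

    Relevance is a filter (at least one shared topic); authority decides the order.
    """
    candidates = [p for p in pages if p.url != target.url and p.topics & target.topics]
    candidates.sort(key=lambda p: (-p.referring_domains, -len(p.topics & target.topics), p.url))
    return [p.url for p in candidates[:limit]]


pages = [
    Page("/crawl-budget/", 120, {"crawling", "technical-seo"}),
    Page("/indexing-guide/", 45, {"indexing", "technical-seo"}),
    Page("/company-news/", 300, {"news"}),
    Page("/sitemaps-101/", 8, {"sitemaps", "indexing"}),
]
target = Page("/new-indexing-checklist/", 0, {"indexing", "technical-seo"})
print(best_link_sources(pages, target))
```

Note that `/company-news/` is skipped despite having the most referring domains, because it shares no topic with the target.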

Submit Your Page To Google Search Console

If you’re still having trouble with Google indexing your page, you may want to consider submitting the page in Google Search Console (URL Inspection, then Request Indexing) immediately after you hit the publish button.

Doing this will tell Google about your page quickly, and it will help you get your page noticed by Google faster than other methods.

In addition, this usually results in indexing within a couple of days’ time if your page is not suffering from any quality issues.

This should help move things along in the right direction.

Use The Rank Math Instant Indexing Plugin

To get your post indexed rapidly, you may want to consider using the Rank Math Instant Indexing plugin.

Using the instant indexing plugin means that your site’s pages will typically get crawled and indexed quickly.

The plugin lets you ask Google to add the page you just published to a prioritized crawl queue.

Rank Math’s instant indexing plugin uses Google’s Indexing API.
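Under the hood, a call to Google’s Indexing API is a single authenticated POST. The Python sketch below only builds the request, following the publish request format in Google’s Indexing API documentation. It leaves out how to obtain the OAuth 2.0 access token, which comes from a service account added as an owner of the property in Search Console. The token and URL are placeholders. Note that Google documents the Indexing API as supported only for pages with JobPosting or BroadcastEvent (embedded in a VideoObject) structured data.

```python
import json
import urllib.request

# Publish endpoint from Google's Indexing API documentation.
INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_indexing_request(url, access_token, deleted=False):
    """Build (but do not send) an Indexing API notification for one URL.

    `access_token` is assumed to be an OAuth 2.0 token with the
    https://www.googleapis.com/auth/indexing scope, issued to a service
    account that is an owner of the Search Console property.
    """
    body = {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return urllib.request.Request(
        INDEXING_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


# Placeholder token and URL; sending requires a real token:
# urllib.request.urlopen(req)
req = build_indexing_request("https://domainnameexample.com/new-post/", "PLACEHOLDER_TOKEN")
print(req.get_method(), req.full_url)
```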

Improving Your Site’s Quality And Its Indexing Processes Means That It Will Be Optimized To Rank Faster In A Shorter Amount Of Time

Improving your site’s indexing involves making sure that you are improving your site’s quality, along with how it’s crawled and indexed.

This also involves optimizing your site’s crawl budget.

By ensuring that your pages are of the highest quality, that they only contain strong content rather than filler content, and that they have strong optimization, you increase the likelihood of Google indexing your site quickly.

Also, improving your indexing processes with tools like IndexNow plugins and the other methods covered above makes it more likely that search engines will find your site interesting enough to crawl and index quickly. IndexNow is supported by Bing and Yandex, although Google has not adopted it.
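For search engines that do support IndexNow, a submission is a single JSON POST. The Python sketch below builds (but does not send) such a request, following the format published in the IndexNow documentation as we understand it. Treat the endpoint, placeholder key, and URLs as assumptions to verify against the current spec.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Shared IndexNow endpoint as listed in the protocol documentation (verify before use).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/IndexNow"


def build_indexnow_request(urls, key):
    """Build (but do not send) one IndexNow submission for URLs on a single host.

    Assumes the key is also served as a text file at https://<host>/<key>.txt,
    which is how IndexNow verifies that you control the host.
    """
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        raise ValueError("submit URLs for exactly one host per request")
    host = hosts.pop()
    body = {
        "host": host,
        "key": key,
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Placeholder key and URLs; send with urllib.request.urlopen(req).
req = build_indexnow_request(
    ["https://domainnameexample.com/new-post/", "https://domainnameexample.com/updated-post/"],
    "0123456789abcdef0123456789abcdef",
)
print(json.loads(req.data)["urlList"])
```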

Making sure that these content elements are optimized properly puts your site among the types of sites that Google loves to see, and makes good indexing results much easier to achieve.


Featured Image: BestForBest/Shutterstock


SEO

Google Declares It The “Gemini Era” As Revenue Grows 15%

Alphabet Inc., Google’s parent company, announced its first quarter 2024 financial results today.

While Google reported double-digit growth in key revenue areas, the focus was on its AI developments, dubbed the “Gemini era” by CEO Sundar Pichai.

The Numbers: 15% Revenue Growth, Operating Margins Expand

Alphabet reported Q1 revenues of $80.5 billion, a 15% increase year-over-year, exceeding Wall Street’s projections.

Net income was $23.7 billion, with diluted earnings per share of $1.89. Operating margins expanded to 32%, up from 25% in the prior year.

Ruth Porat, Alphabet’s President and CFO, stated:

“Our strong financial results reflect revenue strength across the company and ongoing efforts to durably reengineer our cost base.”

Google’s core advertising units, such as Search and YouTube, drove growth. Google advertising revenues hit $61.7 billion for the quarter.

The Cloud division also maintained momentum, with revenues of $9.6 billion, up 28% year-over-year.

Pichai highlighted that YouTube and Cloud are expected to exit 2024 at a combined $100 billion annual revenue run rate.

Generative AI Integration in Search

Google experimented with AI-powered features in Search Labs before recently introducing AI overviews into the main search results page.

Regarding the gradual rollout, Pichai states:

“We are being measured in how we do this, focusing on areas where gen AI can improve the Search experience, while also prioritizing traffic to websites and merchants.”

Pichai reports that Google’s generative AI features have already answered billions of queries:

“We’ve already served billions of queries with our generative AI features. It’s enabling people to access new information, to ask questions in new ways, and to ask more complex questions.”

Google reports increased Search usage and user satisfaction among those interacting with the new AI overview results.

The company also highlighted its “Circle to Search” feature on Android, which allows users to circle objects on their screen or in videos to get instant AI-powered answers via Google Lens.

Reorganizing For The “Gemini Era”

As part of the AI roadmap, Alphabet is consolidating all teams building AI models under the Google DeepMind umbrella.

Pichai revealed that, through hardware and software improvements, the company has reduced machine costs associated with its generative AI search results by 80% over the past year.

He states:

“Our data centers are some of the most high-performing, secure, reliable and efficient in the world. We’ve developed new AI models and algorithms that are more than one hundred times more efficient than they were 18 months ago.”

How Will Google Make Money With AI?

Alphabet sees opportunities to monetize AI through its advertising products, Cloud offerings, and subscription services.

Google is integrating Gemini into ad products like Performance Max. The company’s Cloud division is bringing “the best of Google AI” to enterprise customers worldwide.

Google One, the company’s subscription service, surpassed 100 million paid subscribers in Q1 and introduced a new premium plan featuring advanced generative AI capabilities powered by Gemini models.

Future Outlook

Pichai outlined six key advantages positioning Alphabet to lead the “next wave of AI innovation”:

- Research leadership in AI breakthroughs like the multimodal Gemini model

- Robust AI infrastructure and custom TPU chips

- Integrating generative AI into Search to enhance the user experience

- A global product footprint reaching billions

- Streamlined teams and improved execution velocity

- Multiple revenue streams to monetize AI through advertising and cloud

With upcoming events like Google I/O and Google Marketing Live, the company is expected to share further updates on its AI initiatives and product roadmap.

Featured Image: Sergei Elagin/Shutterstock

SEO

brightonSEO Live Blog

Hello everyone. It’s April again, so I’m back in Brighton for another two days of sun, sea, and SEO! Being the introvert I am, my idea of fun isn’t hanging around our booth all day explaining we’ve run out of t-shirts (seriously, you need to be fast if you want swag!). So I decided to do something useful and live-blog the event instead.

Follow below for talk takeaways and (very) mildly humorous commentary.

SEO

Google Further Postpones Third-Party Cookie Deprecation In Chrome

Google has again delayed its plan to phase out third-party cookies in the Chrome web browser. The latest postponement comes after ongoing challenges in reconciling feedback from industry stakeholders and regulators.

The announcement was made in the joint quarterly report from Google and the UK’s Competition and Markets Authority (CMA) on the Privacy Sandbox initiative, scheduled for release on April 26.

Chrome’s Third-Party Cookie Phaseout Pushed To 2025

Google states it “will not complete third-party cookie deprecation during the second half of Q4” this year as planned.

Instead, the tech giant aims to begin deprecating third-party cookies in Chrome “starting early next year,” assuming an agreement can be reached with the CMA and the UK’s Information Commissioner’s Office (ICO).

The statement reads:

“We recognize that there are ongoing challenges related to reconciling divergent feedback from the industry, regulators and developers, and will continue to engage closely with the entire ecosystem. It’s also critical that the CMA has sufficient time to review all evidence, including results from industry tests, which the CMA has asked market participants to provide by the end of June.”

Continued Engagement With Regulators

Google reiterated its commitment to “engaging closely with the CMA and ICO” throughout the process and hopes to conclude discussions this year.

This marks the third delay to Google’s plan to deprecate third-party cookies. Google had aimed for a Q3 2023 phaseout before pushing it back to late 2024.

The postponements reflect the challenges in transitioning away from cross-site user tracking while balancing privacy and advertiser interests.

Transition Period & Impact

In January, Chrome began restricting third-party cookie access for 1% of users globally. This percentage was expected to gradually increase until 100% of users were covered by Q3 2024.

However, the latest delay gives websites and services more time to migrate away from third-party cookie dependencies through Google’s limited “deprecation trials” program.

The trials offer temporary cookie access extensions until December 27, 2024, for non-advertising use cases that can demonstrate direct user impact and functional breakage.

While easing the transition, the trials have strict eligibility rules. Advertising-related services are ineligible, and origins matching known ad-related domains are rejected.

Google states the program aims to address functional issues rather than relieve general data collection inconveniences.

Publisher & Advertiser Implications

The repeated delays highlight the potential disruption for digital publishers and advertisers relying on third-party cookie tracking.

Industry groups have raised concerns that restricting cross-site tracking could push websites toward more opaque privacy-invasive practices.

However, privacy advocates view the phaseout as crucial in preventing covert user profiling across the web.

With the latest postponement, all parties have more time to prepare for the eventual loss of third-party cookies and adopt Google’s proposed Privacy Sandbox APIs as replacements.

Featured Image: Novikov Aleksey/Shutterstock
