
The 5 Best Long-tail Keyword Generator Tools

Long-tail keywords are nothing more than keywords with low monthly search volumes. It doesn’t matter if they’re made up of two, three, or 10 words.

Thanks to their low popularity, they’re often less competitive and easier to rank for than short-tail keywords. This makes them an excellent target for new websites.

In this guide, you’ll learn how to find long-tail keywords using five main tools.

IMPORTANT NOTE

There are two types of long-tail keywords, and it only makes sense to target one of them. We’ll focus on finding that type below. If you’re curious about the differences between the two types, read our guide to long-tail keywords. Otherwise, just follow along.

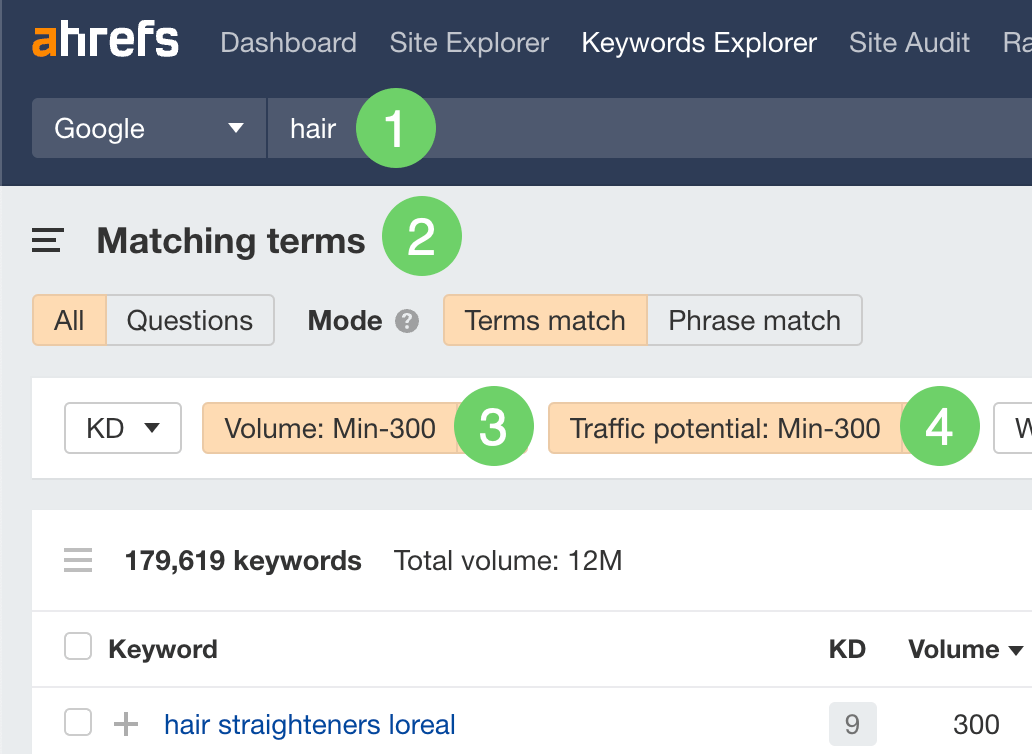

Keywords Explorer is a keyword research tool that runs on a database of billions of keywords.

Here’s how to use it to find long-tail keywords:

- Search for an industry-defining word or phrase

- Go to the Matching terms report

- Filter for keywords with a monthly search volume up to 300

- Filter for keywords with a Traffic Potential (TP) up to 300

If you’re wondering what the TP filter does, it filters out long-tail keywords whose current top-ranking page gets lots of search traffic. Such keywords are usually just less common ways of searching for popular topics, so they tend to be hard to rank for.

For example, “hair loss patches” is a long-tail keyword. But it has a high Keyword Difficulty (KD) score, as it’s just a less common way to search for “alopecia.”

Sidenote.

Feel free to be less strict with the search volume and Traffic Potential (TP) limits if you want more results. However, I wouldn’t recommend going above a few hundred for either. Otherwise, you won’t really be looking at long-tail keywords.
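If you export the Matching terms results and want to reapply the two filters outside the tool, the logic is just two caps applied together. The sketch below assumes each row has a search volume and a Traffic Potential value; the `Keyword` class, the function name, and every number in the sample are hypothetical and made up for illustration, not real Ahrefs data.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    term: str
    volume: int             # estimated monthly search volume
    traffic_potential: int  # estimated monthly search traffic to the top-ranking page


def long_tail_candidates(keywords, max_volume=300, max_tp=300):
    """Keep keywords whose volume and Traffic Potential are both at or below the caps."""
    return [
        kw for kw in keywords
        if kw.volume <= max_volume and kw.traffic_potential <= max_tp
    ]


# Made-up numbers for illustration only, not real Ahrefs data.
sample = [
    Keyword("sweaters", 60_000, 90_000),                         # short-tail: fails both caps
    Keyword("less common phrasing of a big topic", 150, 25_000),  # low volume, high TP
    Keyword("chunky cable knit sweater for men", 150, 200),       # passes both caps
]

print([kw.term for kw in long_tail_candidates(sample)])
```

Loosening the caps, as the sidenote suggests, is just a matter of passing larger `max_volume` and `max_tp` values.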

Let’s look at how to find long-tail keywords for various websites and content types.

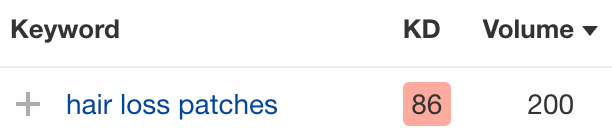

Finding e-commerce long-tail keywords

- Enter a product or product type as a seed keyword

- Go to the Matching terms report

- Filter for keywords with a monthly search volume up to 300

- Filter for keywords with a Traffic Potential (TP) up to 300

For example, if you run a clothing store, you may enter seeds like “sweater” and “sweaters.”

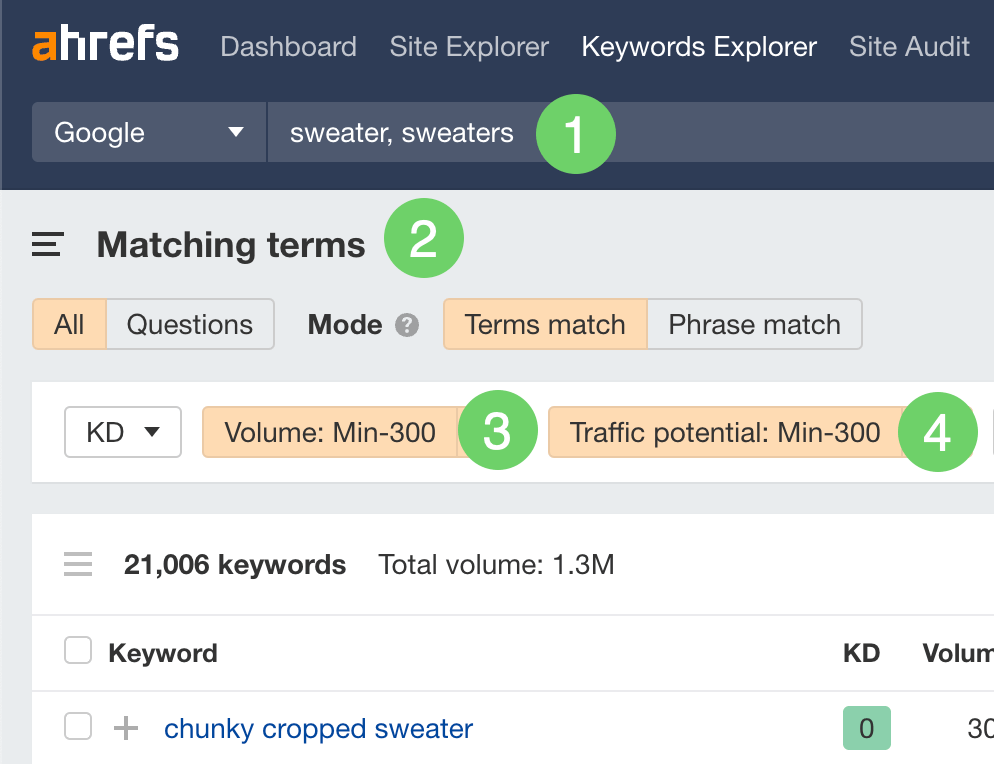

Finding long-tail keywords for blog posts

- Enter a broad topic as a seed keyword

- Go to the Matching terms report

- Hit the “Questions” toggle

- Filter for keywords with a monthly search volume up to 300

- Filter for keywords with a Traffic Potential (TP) up to 300

For example, if you run a clothing store, you may enter a seed like “shoes.”
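To get a feel for what the “Questions” toggle narrows things down to, here is a rough approximation that keeps keywords starting with a common question word. This is an assumption for illustration only: the word list is hypothetical, and the real toggle may use different or broader rules.

```python
# Hypothetical list of question openers; the real "Questions" toggle may use other rules.
QUESTION_WORDS = {
    "how", "what", "why", "when", "where", "who", "which",
    "can", "do", "does", "is", "are", "should", "will",
}


def is_question(keyword: str) -> bool:
    """Treat a keyword as a question if its first word is a common question opener."""
    words = keyword.lower().split()
    return bool(words) and words[0] in QUESTION_WORDS


def question_keywords(keywords):
    """Keep only the question-style keywords, preserving their original order."""
    return [kw for kw in keywords if is_question(kw)]


# Example inputs, not real report output.
ideas = [
    "how to clean white shoes",
    "shoes for flat feet",
    "what shoes go with jeans",
    "are canvas shoes good for walking",
]
print(question_keywords(ideas))
```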

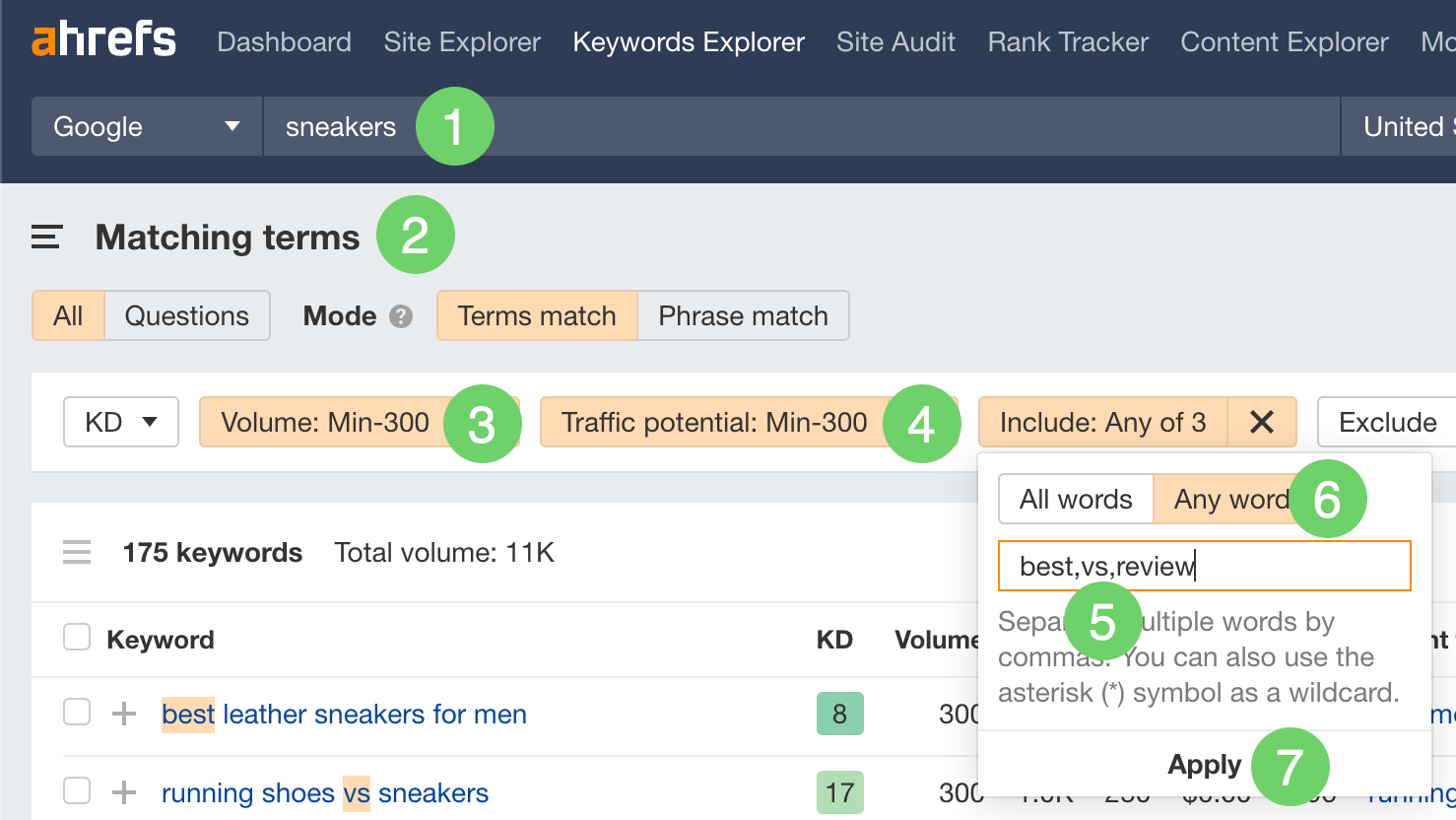

Finding affiliate long-tail keywords

- Enter a broad topic or brand as a seed keyword

- Go to the Matching terms report

- Filter for keywords with a monthly search volume up to 300

- Filter for keywords with a Traffic Potential (TP) up to 300

- Add the keywords “best,” “review,” and “vs” to the Include filter

- Set the Include filter to “Any word”

- Click “Apply”

For example, if you run a fashion affiliate site, you may enter a seed like “sneakers.”
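Setting the Include filter to “Any word” means a keyword only needs to contain one of the listed words to stay in the results. A minimal sketch of that matching rule, assuming whole-word matching (so “bestselling” does not count as “best”); the function name and sample keywords are hypothetical.

```python
def include_any_word(keywords, include_words):
    """Keep keywords that contain at least one of include_words as a whole word."""
    wanted = {word.lower() for word in include_words}
    # Whole-word matching (an assumption): "bestselling" does not count as "best".
    return [kw for kw in keywords if wanted & set(kw.lower().split())]


# Example inputs, not real report output.
ideas = [
    "best sneakers for flat feet",
    "nike vs adidas sneakers",
    "cloud running sneakers review",
    "white sneakers",
    "bestselling sneakers",
]
print(include_any_word(ideas, ["best", "review", "vs"]))
```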

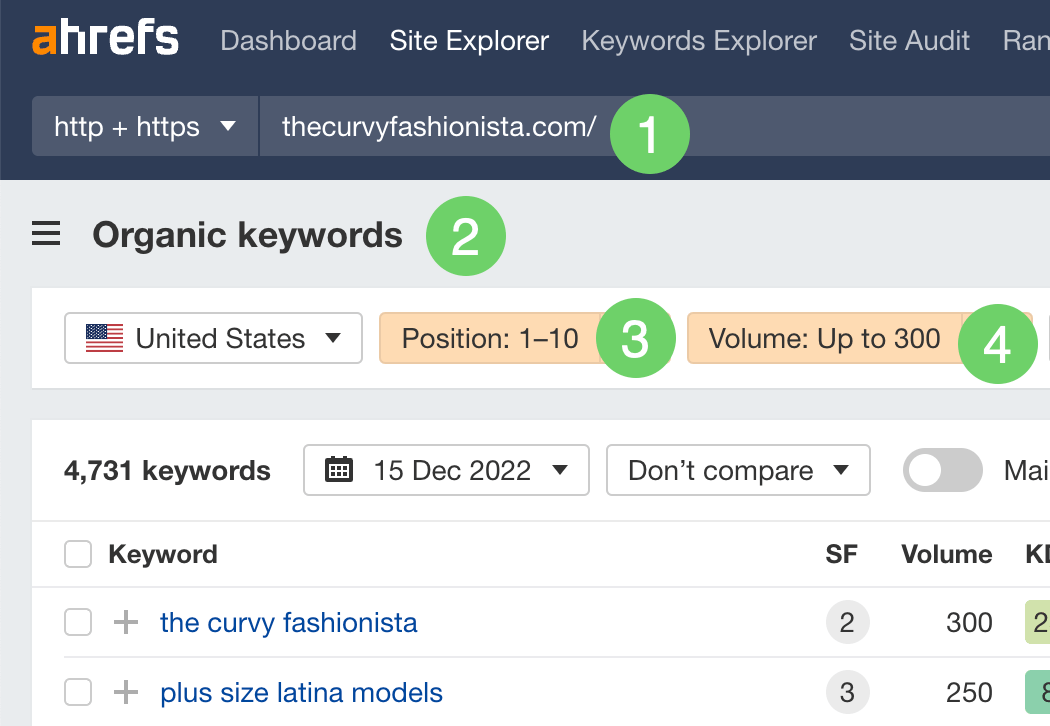

Site Explorer is a competitive research tool. One of its main features shows you the keywords your competitors rank for.

Here’s how to use it to find your competitor’s long-tail keywords:

- Enter a competitor’s domain

- Go to the Organic keywords report

- Filter for keywords that rank in positions 1–10

- Filter for keywords with a monthly search volume up to 300
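The two Site Explorer filters combine a ranking-position range with the same volume cap used earlier. Here is a sketch of that combination over exported rows; the `RankedKeyword` class, field names, and sample rows are hypothetical and made up, not an Ahrefs export format.

```python
from dataclasses import dataclass


@dataclass
class RankedKeyword:
    term: str
    position: int  # competitor's ranking position for this keyword
    volume: int    # estimated monthly search volume


def competitor_long_tails(rows, max_position=10, max_volume=300):
    """Keep keywords ranking in positions 1..max_position with volume up to max_volume."""
    return [
        row for row in rows
        if 1 <= row.position <= max_position and row.volume <= max_volume
    ]


# Made-up rows standing in for an Organic keywords export.
rows = [
    RankedKeyword("seo", 15, 100_000),
    RankedKeyword("seo for dentists", 4, 250),
    RankedKeyword("seo for plumbers", 18, 200),
]
print([row.term for row in competitor_long_tails(rows)])
```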

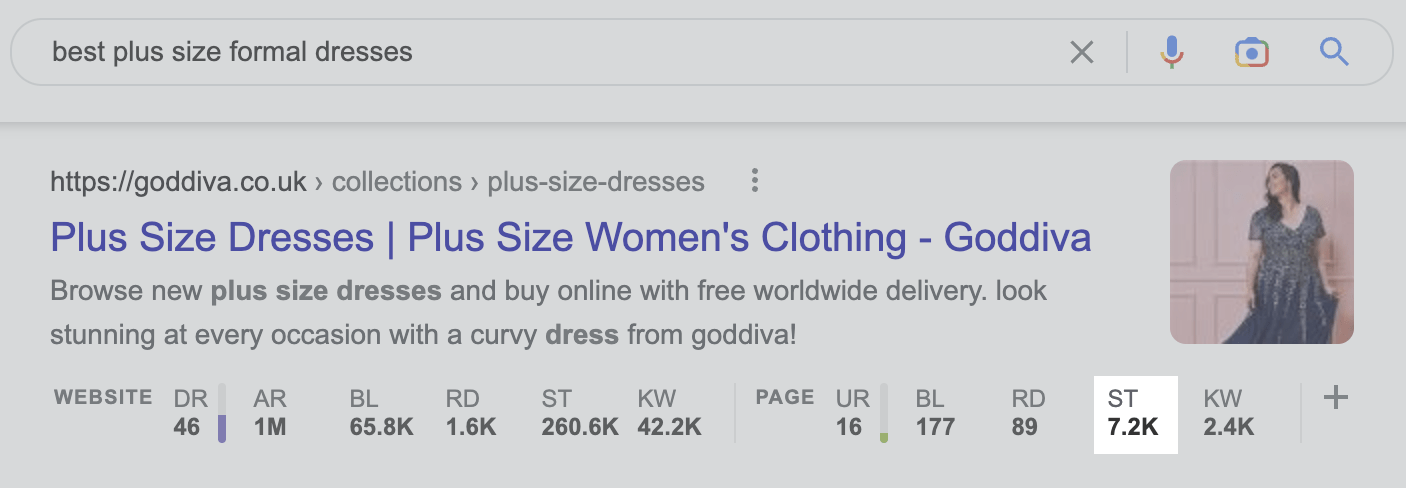

As there is currently no Traffic Potential (TP) filter in Site Explorer, you’ll need to check the traffic potential of these long-tails manually to decide if they’re worthwhile targets. To do this, install Ahrefs’ SEO Toolbar, then check the traffic to the top-ranking page in Google.

Sidenote.

Make sure “SERP tools” is active on the toolbar. To do this, click the orange “a” logo in your browser and toggle the switch on.

If the top-ranking page gets more than a few hundred monthly search visits, it’s probably not the best keyword to target, as ranking will usually be difficult.

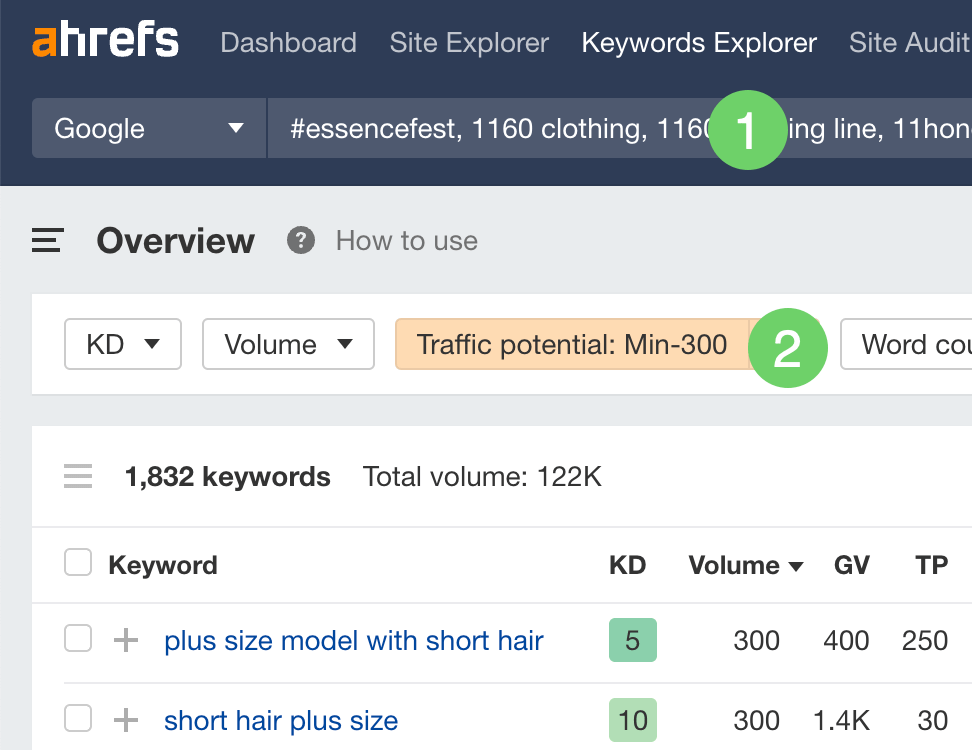

Looking for a way to check Traffic Potential in bulk?

Export the site’s keywords from Site Explorer, then:

- Paste the keyword list into Keywords Explorer

- Filter for keywords with a Traffic Potential (TP) up to 300
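If you export the Keywords Explorer results to CSV instead of filtering in the tool, the same TP cap can be applied with Python’s standard `csv` module. This is a sketch under stated assumptions: the column names `Keyword` and `Traffic potential` are hypothetical (check the headers in your own export), and the sample rows are made up.

```python
import csv
import io


def to_int(value):
    """Turn values like '1,200' into 1200; return None for blank cells."""
    cleaned = (value or "").replace(",", "").strip()
    return int(cleaned) if cleaned else None


def keywords_with_low_tp(csv_text, max_tp=300,
                         keyword_col="Keyword", tp_col="Traffic potential"):
    """Return keywords whose Traffic Potential is at or below max_tp.

    Column names are assumptions; match them to the headers in your export.
    Rows with a blank TP value are skipped rather than guessed.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    results = []
    for row in reader:
        tp = to_int(row.get(tp_col))
        if tp is not None and tp <= max_tp:
            results.append(row[keyword_col])
    return results


# Made-up export contents for illustration.
export = """Keyword,Traffic potential
seo for dentists,180
seo tips,"4,300"
seo for vets,
local seo checklist for bakeries,90
"""
print(keywords_with_low_tp(export))
```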

Note

The long-tail keyword tools and processes below are completely free. However, the results aren’t as good, and it takes a bit more effort to get there.

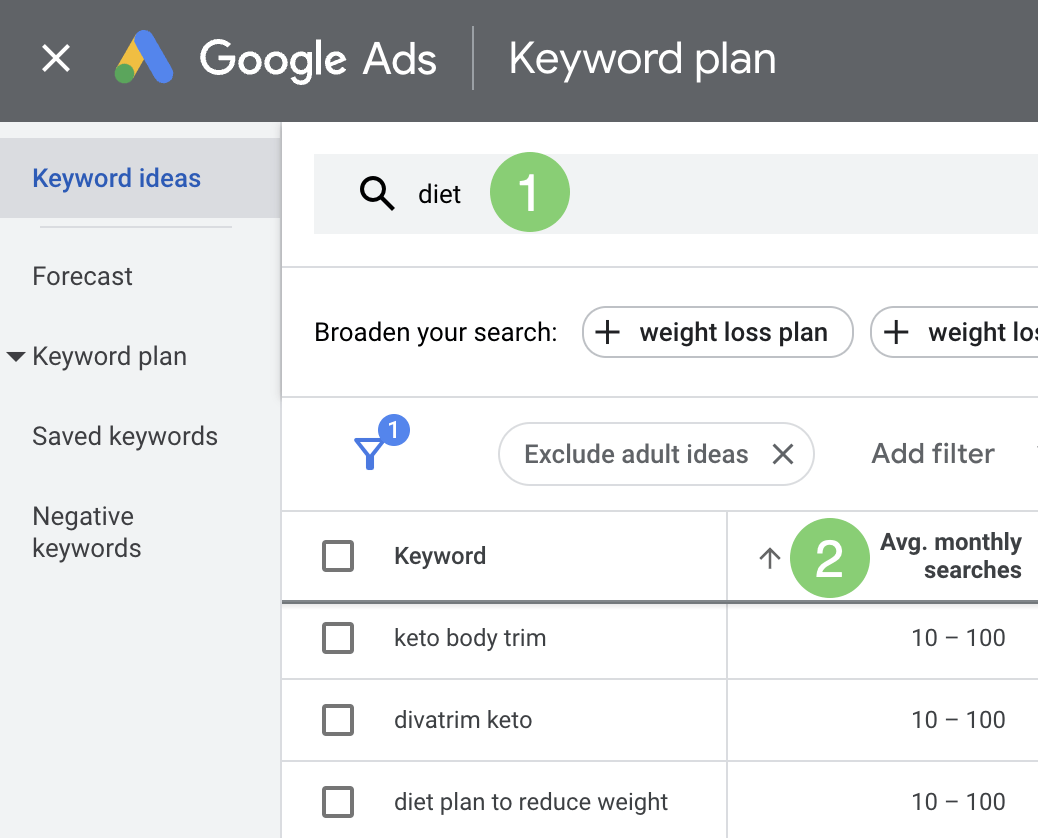

Google Keyword Planner is free to use. It’s made for advertisers, but you can still use it to find long-tail keywords. To do that, click “discover new keywords,” then:

- Enter a broad topic as a seed keyword.

- Sort the results by average monthly searches from low to high.

Keywords with a monthly search volume range of 10 to 100 or lower are long-tails. But they may not be the best targets if they’re just uncommon ways to search for popular topics.
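Because Keyword Planner shows average monthly searches as ranges rather than exact numbers, sorting and cutting off at “100 or lower” means comparing the top of each range. The sketch below assumes ranges written like `10 – 100` or `100 - 1K` with optional K/M suffixes; that text format, the function names, and the sample rows are assumptions made up for illustration.

```python
import re


def parse_count(token):
    """Convert '100', '1K' or '1M' into an integer."""
    token = token.strip().upper().replace(",", "")
    multiplier = 1
    if token.endswith("K"):
        multiplier, token = 1_000, token[:-1]
    elif token.endswith("M"):
        multiplier, token = 1_000_000, token[:-1]
    return int(float(token) * multiplier)


def range_upper_bound(range_text):
    """Return the upper bound of a range such as '10 – 100' or '100 - 1K'."""
    parts = re.split(r"\s*[-–]\s*", range_text.strip())
    return parse_count(parts[-1])


def planner_long_tails(rows, max_upper=100):
    """Sort (keyword, range) rows low to high; keep ranges topping out at max_upper or lower."""
    ordered = sorted(rows, key=lambda row: range_upper_bound(row[1]))
    return [kw for kw, rng in ordered if range_upper_bound(rng) <= max_upper]


# Made-up rows for illustration, not real Keyword Planner data.
rows = [
    ("hair ideas", "1M – 10M"),
    ("hairstyle for thin hair over 60", "10 – 100"),
    ("bob haircut", "100K – 1M"),
    ("hairstyles for fine wavy hair", "100 – 1K"),
    ("braids for short hair with beads", "0 – 10"),
]
print(planner_long_tails(rows))
```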

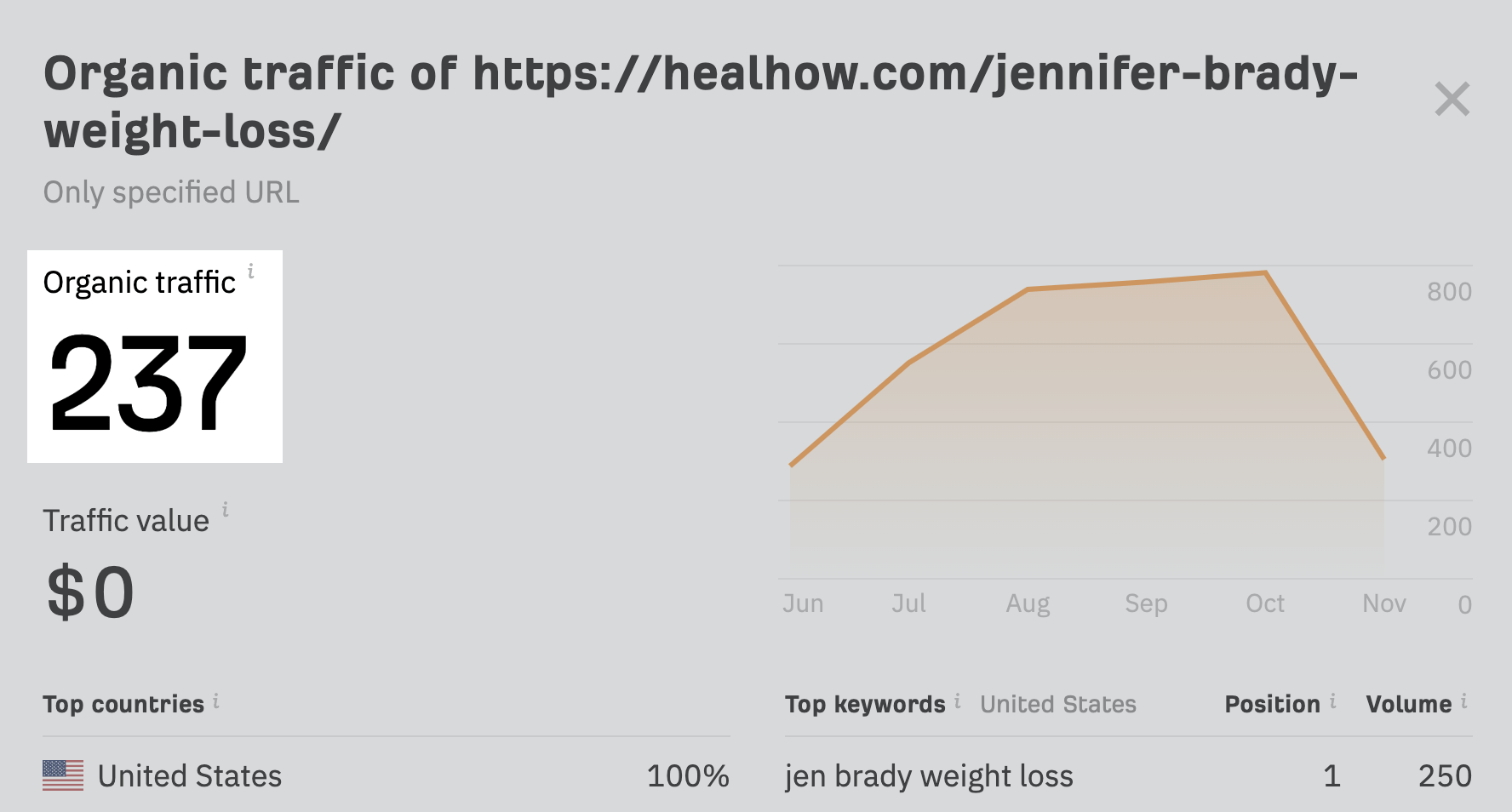

To check, search for the keyword in Google and plug the top-ranking page into Ahrefs’ free traffic checker tool. Generally speaking, you want to see no more than a few hundred monthly search visits to the page.

Although long-tail keywords can have any number of words, our study found that wordier keywords are more likely to be long-tails. This is what makes Google autocomplete a good source of long-tail keyword ideas.

Here’s how to use Google autocomplete to find long-tail keywords:

- Search for a simple topic on Google

- Cycle through autocomplete results until you find a long keyword

- Check traffic to the top-ranking page with Ahrefs’ free traffic checker
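Since wordier keywords are more likely to be long-tails, a quick way to shortlist autocomplete suggestions you have copied down is to keep only the longer ones before checking traffic. The five-word threshold is an arbitrary assumption (the guide doesn’t set a number), and the suggestion list is example input rather than captured autocomplete output.

```python
def wordy_suggestions(suggestions, min_words=5):
    """Keep suggestions with at least min_words words (the threshold is an assumption)."""
    return [s for s in suggestions if len(s.split()) >= min_words]


# Example inputs, not captured autocomplete results.
suggestions = [
    "hairstyles",
    "hairstyles for men",
    "hairstyles for long hair",
    "korean hairstyle for girl long hair",
]
print(wordy_suggestions(suggestions))
```

Word count only narrows the list; each survivor still needs the top-ranking page traffic check from the last step.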

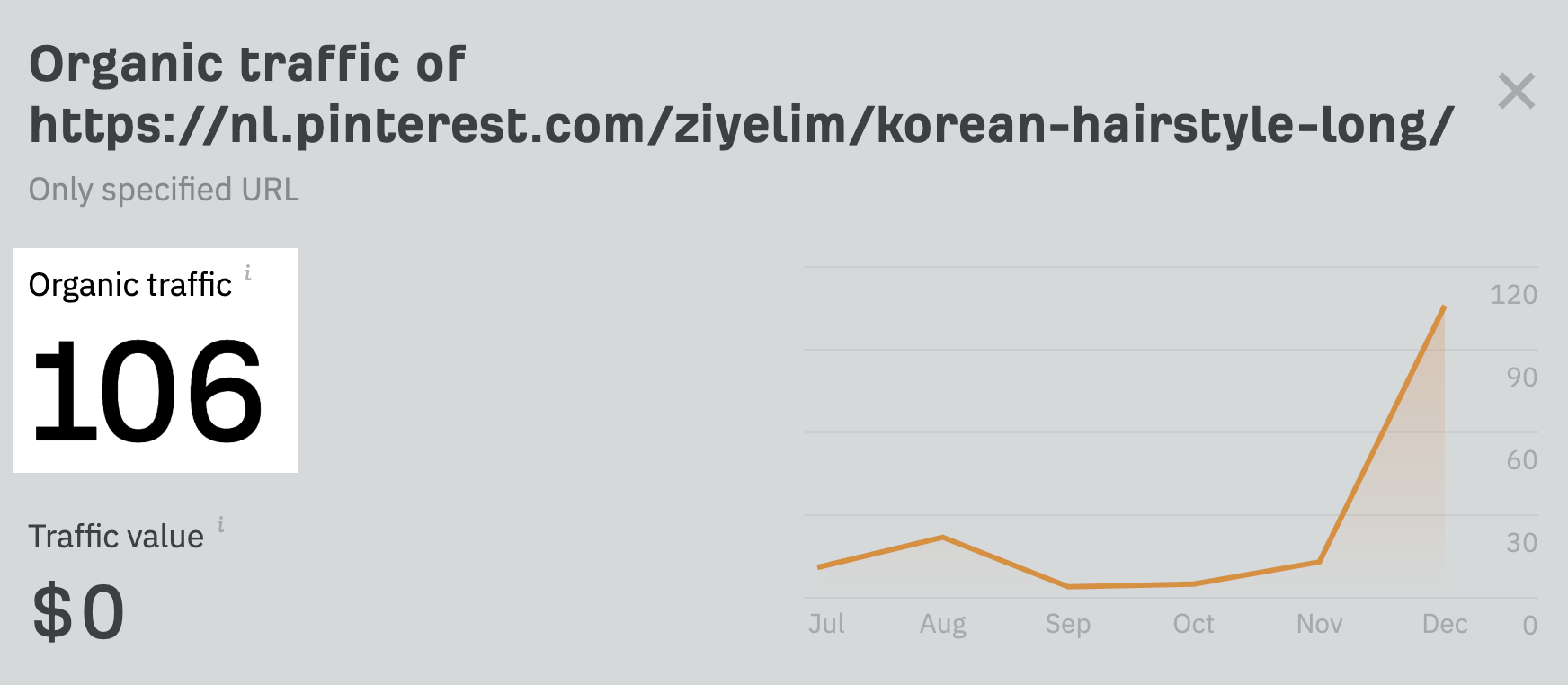

For example, if we start with “hairstyles,” we can easily get to “korean hairstyle for girl long hair” in seconds just by scrolling through the suggested searches.

But we then need to check that this isn’t just an unpopular way of searching for a popular topic. To do that, we can plug the top-ranking page for this query into Ahrefs’ free traffic checker.

If it gets a few hundred visits or fewer, it’s what we’re looking for. If it gets thousands of visits, it’ll probably be hard to rank for.

In this case, it only gets an estimated 106 monthly search visits, which is what we want.
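The rule of thumb here can be written as a small decision function. The cut-offs are assumptions: 300 stands in for “a few hundred” (matching the TP cap used earlier) and 1,000 for “thousands,” with anything in between left for a manual look at the results.

```python
def judge_top_page_traffic(monthly_visits, good_max=300, hard_min=1_000):
    """Rough verdict from the estimated monthly search visits to the top-ranking page.

    good_max and hard_min are assumed stand-ins for "a few hundred" and "thousands".
    """
    if monthly_visits <= good_max:
        return "good long-tail target"
    if monthly_visits >= hard_min:
        return "probably hard to rank for"
    return "borderline: look at the SERP manually"


# 106 is the estimate quoted above for the top-ranking page.
print(judge_top_page_traffic(106))
```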

Reddit is another good source of potential long-tail keywords. But once again, you’ll have to check traffic to the top-ranking page for any ideas you find to make sure they’re what you’re looking for.

For example, here are a couple of hyper-specific topics I came across on the SEO subreddit:

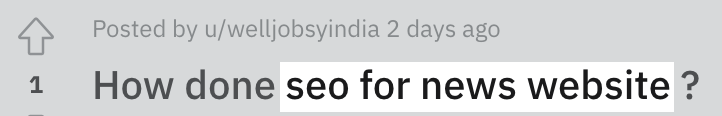

If we plug the top-ranking page for “how to improve off page seo” into Ahrefs’ free traffic checker, we see that it gets thousands of monthly search visits.

This means the keyword isn’t the kind of long-tail we’re looking for. It either has a high search volume in itself or is an uncommon way to search for a popular topic. Either way, it’s probably hard to rank for.

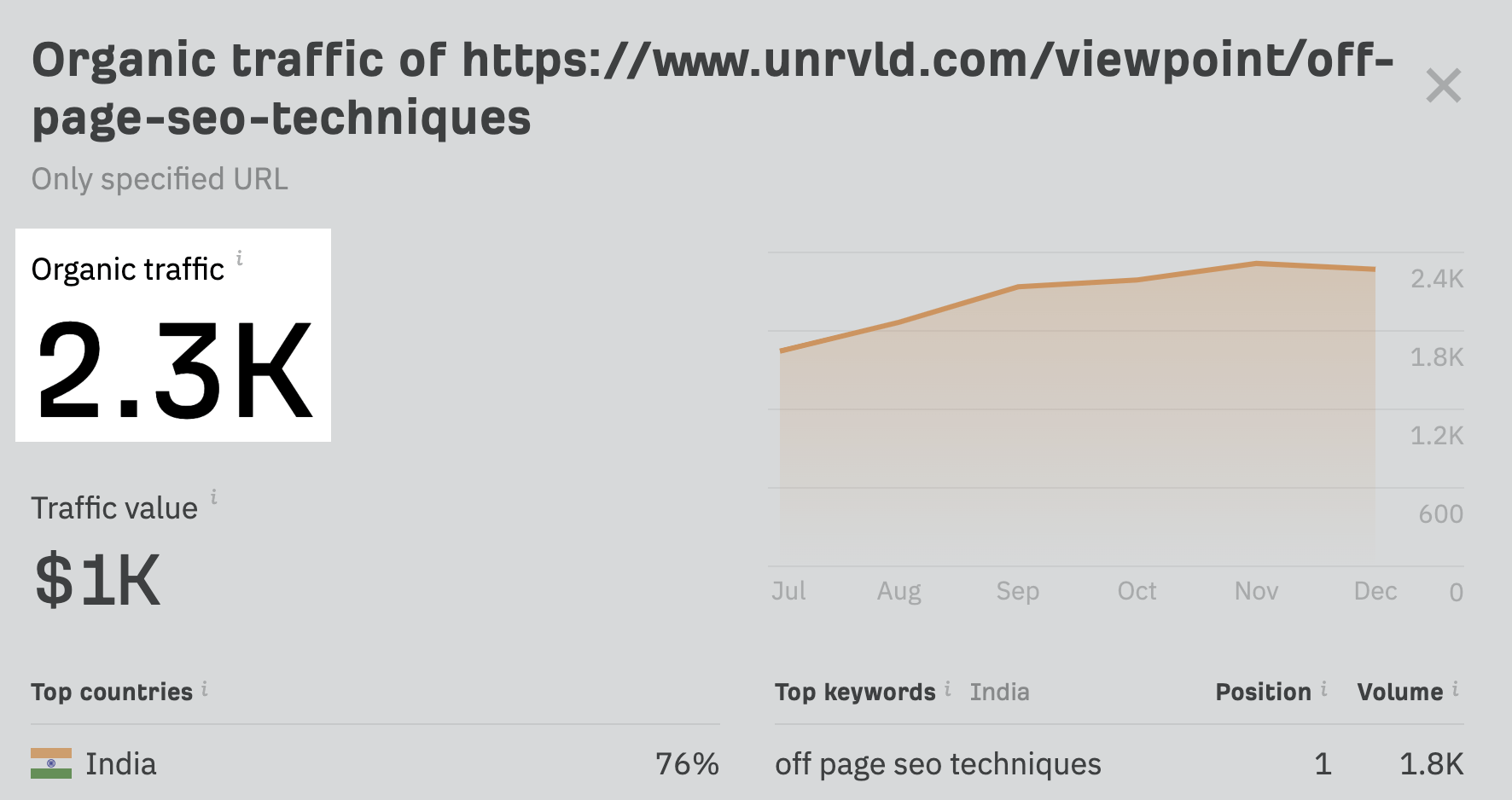

On the other hand, the top-ranking page for “seo for news website” gets under a few hundred monthly search visits.

This suggests the keyword is a genuine long-tail rather than an unpopular way of searching for a popular topic. That’s what we want.

Final thoughts

Just because a keyword is a long-tail one doesn’t necessarily mean it’s easy to rank for. There are plenty of examples of high-competition long-tail keywords.

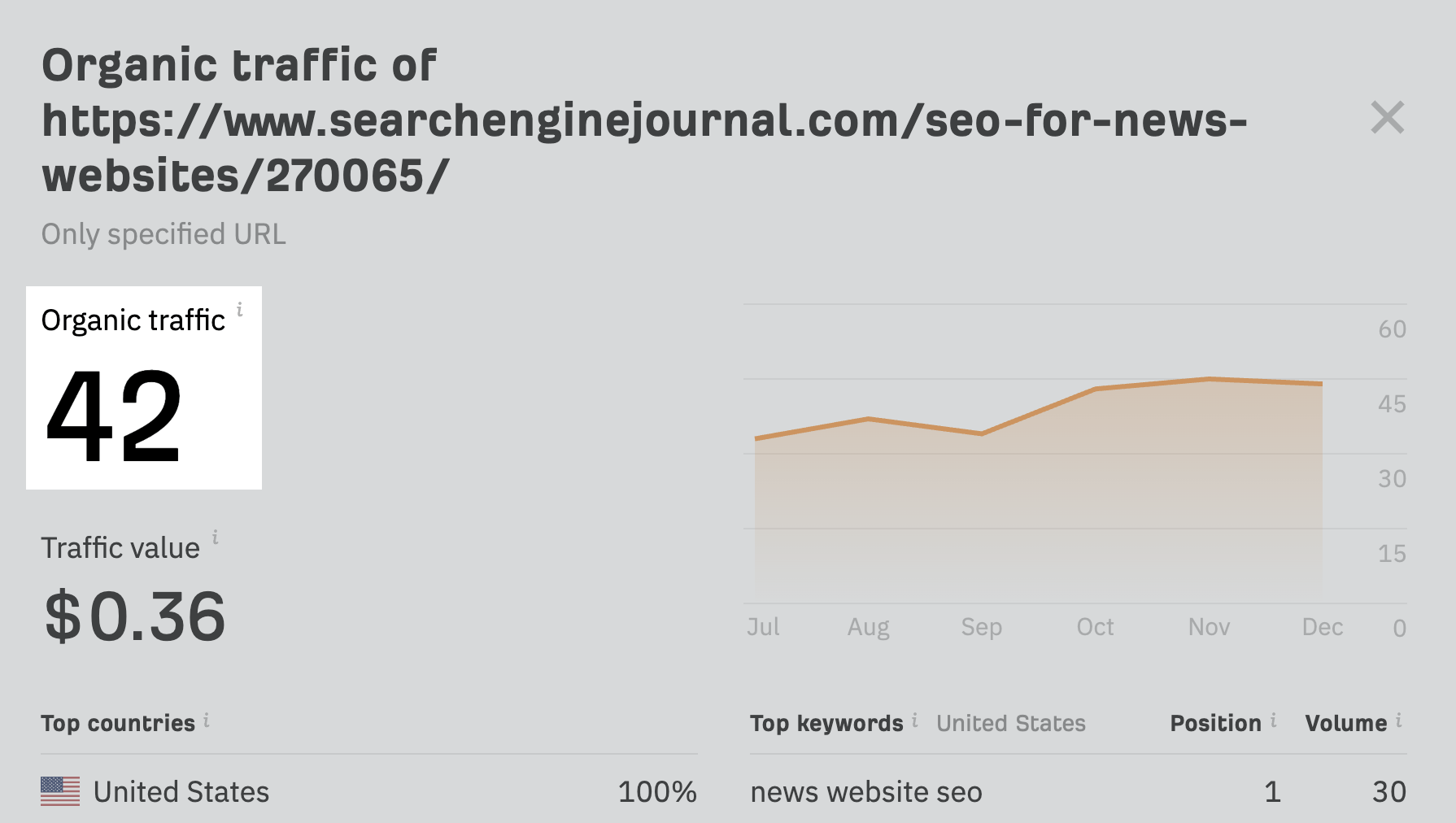

For example, “vpn for beginners” only gets an estimated 40 monthly searches in the U.S., but it has a hard Keyword Difficulty (KD) score of 50/100. This is because VPN affiliate commissions are high, so the keyword still has high competition.

Learn more in our guide to estimating keyword difficulty.

Got questions? Ping me on Twitter.
