NEWS

The real threat of fake voices in a time of crisis

As federal agencies take increasingly stringent actions to try to limit the spread of the novel coronavirus pandemic within the U.S., how can individual Americans and U.S. companies affected by these rules weigh in with their opinions and experiences? Because many of the new rules, such as travel restrictions and increased surveillance, require expansions of federal power beyond normal circumstances, our laws require the federal government to post these rules publicly and allow the public to contribute their comments to the proposed rules online. But are federal public comment websites — a vital institution for American democracy — secure in this time of crisis? Or are they vulnerable to bot attack?

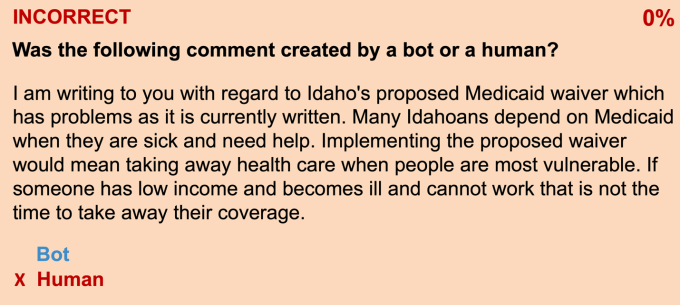

In December 2019, we published a new study to see firsthand just how vulnerable the public comment process is to an automated attack. Using publicly available artificial intelligence (AI) methods, we successfully generated 1,001 comments of deepfake text, computer-generated text that closely mimics human speech, and submitted them to the Centers for Medicare & Medicaid Services’ (CMS) website for a proposed federal rule that would institute mandatory work reporting requirements for citizens on Medicaid in Idaho.

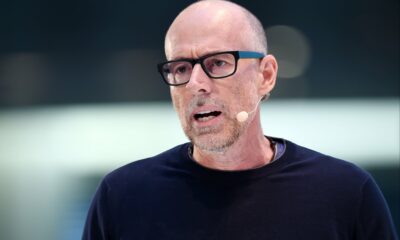

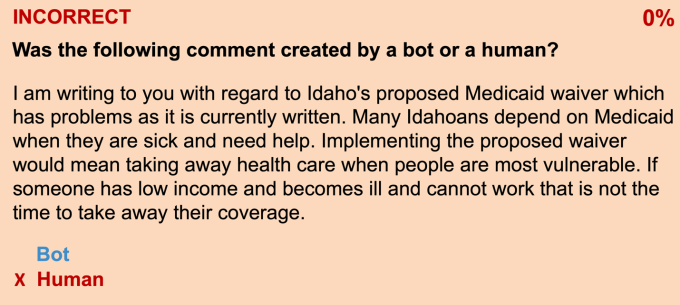

The comments we produced using deepfake text constituted over 55% of the 1,810 total comments submitted during the federal public comment period. In a follow-up study, we asked people to identify whether comments were from a bot or a human. Respondents were only correct half of the time — the same probability as random guessing.

Image Credits: Zang/Weiss/Sweeney

The example above is deepfake text generated by the bot that all survey respondents thought was from a human.

We ultimately informed CMS of our deepfake comments and withdrew them from the public record. But a malicious attacker would likely not do the same.

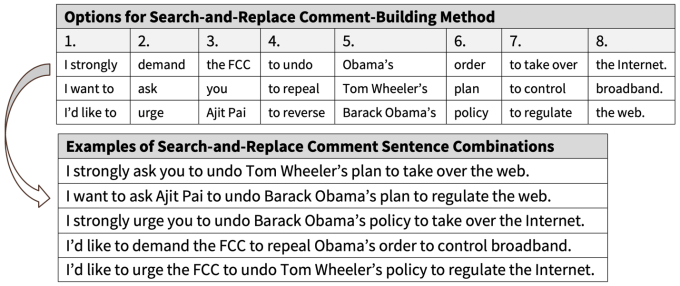

Large-scale fake comment attacks on federal websites have occurred before, such as the 2017 attack on the FCC website regarding the proposed rule to end net neutrality regulations.

During the net neutrality comment period, firms hired by industry group Broadband for America used bots to create comments expressing support for the repeal of net neutrality. They then submitted millions of comments, sometimes even using the stolen identities of deceased voters and the names of fictional characters, to distort the appearance of public opinion.

A retroactive text analysis of the comments found that 96-97% of the more than 22 million comments on the FCC’s proposal to repeal net neutrality were likely coordinated bot campaigns. These campaigns used relatively unsophisticated and conspicuous search-and-replace methods — easily detectable even on this mass scale. But even after investigations revealed the comments were fraudulent and made using simple search-and-replace-like computer techniques, the FCC still accepted them as part of the public comment process.
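To illustrate why search-and-replace campaigns are conspicuous, here is a minimal sketch of near-duplicate detection using Jaccard similarity over three-word shingles. It is not the method used in the retroactive analysis mentioned above; the function names, the 0.5 threshold and the sample comments are all hypothetical and chosen only for illustration.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles of a lowercased text, ignoring punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(
    comments: list[str], threshold: float = 0.5
) -> list[tuple[int, int, float]]:
    """Return (i, j, similarity) for every pair of comments at or above the threshold."""
    sets = [shingles(c) for c in comments]
    pairs = []
    for i, j in combinations(range(len(comments)), 2):
        score = jaccard(sets[i], sets[j])
        if score >= threshold:
            pairs.append((i, j, score))
    return pairs


# Hypothetical sample: the first two comments differ by one swapped word.
sample_comments = [
    "I urge the FCC to repeal the burdensome net neutrality rules imposed in 2015.",
    "I urge the Commission to repeal the burdensome net neutrality rules imposed in 2015.",
    "My family relies on an open internet for school and work, so please keep the current protections.",
]

for i, j, score in near_duplicate_pairs(sample_comments):
    print(f"comments {i} and {j} look templated (similarity {score:.2f})")
```

Comparing every pair is fine for a handful of comments but would not scale to millions; at that size an approximate technique such as MinHash with locality-sensitive hashing is the usual substitute. Deepfake text of the kind we generated would not share long runs of identical words, which is why this sort of check would likely miss it.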

Even these relatively unsophisticated campaigns were able to affect a federal policy outcome. However, our demonstration of the threat from bots submitting deepfake text shows that future attacks can be far more sophisticated and much harder to detect.

The laws and politics of public comments

Let’s be clear: The ability to communicate our needs and have them considered is the cornerstone of the democratic model. As enshrined in the Constitution and defended fiercely by civil liberties organizations, each American is guaranteed a role in participating in government through voting, through self-expression and through dissent.

When it comes to new rules from federal agencies that can have sweeping impacts across America, public comment periods are the legally required method for members of the public, advocacy groups and corporations that would be most affected by proposed rules to express their concerns to the agency. The agency must then consider these comments before it decides on the final version of the rule. This requirement for public comments has been in place since the passage of the Administrative Procedure Act of 1946. In 2002, the E-Government Act required the federal government to create an online tool to receive public comments. Over the years, multiple court rulings have required federal agencies to demonstrate that they actually examined the submitted comments and to publish any analysis of relevant materials and justification of decisions made in light of public comments [see Citizens to Preserve Overton Park, Inc. v. Volpe, 401 U. S. 402, 416 (1971); Home Box Office, supra, 567 F.2d at 36 (1977), Thompson v. Clark, 741 F. 2d 401, 408 (CADC 1984)].

In fact, the only reason a CMS public comment website existed for us to test in our study is that in June 2019, the U.S. Supreme Court ruled in a 7-1 decision that CMS could not skip the public comment requirements of the Administrative Procedure Act in reviewing proposals from state governments to add work reporting requirements to Medicaid eligibility rules within their state.

Political science research suggests that public comments can have a substantial impact on a federal agency’s final rule. For example, in 2018, Harvard University researchers found that banks that commented on Dodd-Frank-related rules by the Federal Reserve obtained $7 billion in excess returns compared to non-participants. When they examined the submitted comments to the “Volcker Rule” and the debit card interchange rule, they found significant influence from submitted comments by different banks during the “sausage-making process” from the initial proposed rule to the final rule.

Beyond companies commenting directly under their official corporate names, we’ve also seen how an industry group, Broadband for America, submitted millions of fake comments in 2017 in support of the FCC’s rule to end net neutrality in order to create the false perception of broad political support for the FCC’s rule amongst the American public.

Technology solutions to deepfake text on public comments

While our study highlights the threat that deepfake text poses to public comment websites, this doesn’t mean we should end this long-standing institution of American democracy. Rather, we need to identify how technology can be used for innovative solutions that accept public comments from real humans while rejecting deepfake text from bots.

There are two stages in the public comment process where technology can offer potential solutions: (1) comment submission and (2) comment acceptance.

In the first stage, comment submission, technology can be used to prevent bots from submitting deepfake comments in the first place, raising the cost of an attack by forcing the attacker to recruit large numbers of humans instead. One technological solution that many are already familiar with is the CAPTCHA box at the bottom of internet forms, which asks us to identify a word, either visually or audibly, before we can click submit. CAPTCHAs add an extra step that makes the submission process more difficult for a bot. While these tools can be improved for accessibility for disabled individuals, they would be a step in the right direction.

However, CAPTCHAs would not prevent an attacker willing to pay for low-cost labor abroad to solve any CAPTCHA tests in order to submit deepfake comments. One way to get around that may be to require strict identification to be provided along with every submission, but that would remove the possibility for anonymous comments that are currently accepted by agencies such as CMS and the Food and Drug Administration (FDA). Anonymous comments serve as a method of privacy protection for individuals who may be significantly affected by a proposed rule on a sensitive topic such as healthcare without needing to disclose their identity. Thus, the technological challenge would be to build a system that can separate the user authentication step from the comment submission step so only authenticated individuals can submit a comment anonymously.
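One way to picture separating authentication from submission is a one-time token: an identity check hands each verified person a single random token, and the comment form accepts only an unspent token, never a name. The sketch below is a simplified illustration under that assumption, not a description of any agency’s system; `TokenIssuer`, `CommentBoard` and the user IDs are hypothetical.

```python
import hashlib
import secrets


class TokenIssuer:
    """Hands out one-time submission tokens to verified users (hypothetical design)."""

    def __init__(self) -> None:
        # Who has already received a token for this comment period.
        self._issued_to: set[str] = set()
        # SHA-256 hashes of unspent tokens. No user ID is stored next to them.
        self._valid_hashes: set[str] = set()

    def issue(self, verified_user_id: str) -> str:
        """Return a fresh token, at most once per verified user."""
        if verified_user_id in self._issued_to:
            raise PermissionError("a token was already issued to this user")
        self._issued_to.add(verified_user_id)
        token = secrets.token_urlsafe(32)
        self._valid_hashes.add(hashlib.sha256(token.encode()).hexdigest())
        return token

    def redeem(self, token: str) -> bool:
        """Spend a token. True the first time, False for unknown or reused tokens."""
        digest = hashlib.sha256(token.encode()).hexdigest()
        if digest in self._valid_hashes:
            self._valid_hashes.remove(digest)
            return True
        return False


class CommentBoard:
    """Accepts a comment only when it arrives with an unspent token."""

    def __init__(self, issuer: TokenIssuer) -> None:
        self._issuer = issuer
        self.comments: list[str] = []

    def submit(self, token: str, text: str) -> bool:
        if not self._issuer.redeem(token):
            return False
        # The comment is stored without any name or token attached.
        self.comments.append(text)
        return True


issuer = TokenIssuer()
board = CommentBoard(issuer)
token = issuer.issue("verified-user-001")  # hypothetical ID from an identity check
print(board.submit(token, "This rule would affect my coverage."))  # first use
print(board.submit(token, "Second comment with the same token."))  # reuse is rejected
```

In this simple version the issuer could still link a person to a comment through logs or timing, so the anonymity rests on trusting the operator. A production design would more likely rely on a cryptographic scheme such as blind signatures, so that even the issuer cannot match a token to the person who received it.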

Finally, in the second stage, comment acceptance, better technology can be used to distinguish between deepfake text and human submissions. While our study found that the more than 100 people we surveyed were not able to identify the deepfake text examples, more sophisticated spam detection algorithms may be more successful in the future. As machine learning methods advance over time, we may see an arms race between deepfake text generation and deepfake text identification algorithms.

The challenge today

While future technologies may offer more comprehensive solutions, the threat of deepfake text to our American democracy is real and present today. Thus, we recommend that all federal public comment websites adopt state-of-the-art CAPTCHAs as an interim measure of security, a position that is also supported by the 2019 U.S. Senate Subcommittee on Investigations’ Report on Abuses of the Federal Notice-and-Comment Rulemaking Process.

In order to develop more robust future technological solutions, we will need to build a collaborative effort between the government, researchers and our innovators in the private sector. That’s why we at Harvard University have joined the Public Interest Technology University Network along with 20 other education institutions, New America, the Ford Foundation and the Hewlett Foundation. Collectively, we are dedicated to helping inspire a new generation of civic-minded technologists and policy leaders. Through curriculum, research and experiential learning programs, we hope to build the field of public interest technology and a future where technology is made and regulated with the public in mind from the beginning.

While COVID-19 has disrupted many parts of American society, it hasn’t stopped federal agencies under the Trump administration from continuing to propose new deregulatory rules whose effects will be felt long after the current pandemic has ended. For example, on March 18, 2020, the Environmental Protection Agency (EPA) proposed new rules about limiting which research studies can be used to support EPA regulations, which have received over 610,000 comments as of April 6, 2020. On April 2, 2020, the Department of Education proposed new rules for permanently relaxing regulations for online education and distance learning. On February 19, 2020, the FCC re-opened public comments on its net neutrality rules, which in 2017 saw 22 million comments submitted by bots, after a federal court ruled that the FCC ignored how ending net neutrality would affect public safety and cellphone access programs for low-income Americans.

Federal public comment websites offer the only way for the American public and organizations to express their concerns to the federal agency before the final rules are determined. We must adopt better technological defenses to ensure that deepfake text doesn’t further threaten American democracy during a time of crisis.

OpenAI Introduces Fine-Tuning for GPT-4, Enabling Customized AI Models

OpenAI today announced the release of fine-tuning capabilities for its flagship GPT-4 large language model, marking a significant milestone in the AI landscape. This new functionality empowers developers to create tailored versions of GPT-4 to suit specialized use cases, enhancing the model’s utility across various industries.

Fine-tuning has long been a desired feature for developers who require more control over AI behavior, and with this update, OpenAI delivers on that demand. The ability to fine-tune GPT-4 allows businesses and developers to refine the model’s responses to better align with specific requirements, whether for customer service, content generation, technical support, or other unique applications.

Why Fine-Tuning Matters

GPT-4 is a very flexible model that can handle many different tasks. However, some businesses and developers need more specialized AI that matches their specific language, style, and needs. Fine-tuning helps with this by letting them adjust GPT-4 using custom data. For example, companies can train a fine-tuned model to keep a consistent brand tone or focus on industry-specific language.

Fine-tuning also offers improvements in areas like response accuracy and context comprehension. For use cases where nuanced understanding or specialized knowledge is crucial, this can be a game-changer. Models can be taught to better grasp intricate details, improving their effectiveness in sectors such as legal analysis, medical advice, or technical writing.
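As a rough illustration of what “custom data” can look like for the brand-tone case, the sketch below builds a small training file in the chat-style JSONL layout (one JSON object per line with a `messages` list of system, user and assistant turns). The brand, prompts and replies are invented, the helper names are hypothetical, and the exact file requirements should be checked against OpenAI’s current fine-tuning documentation before use.

```python
import json

# Hypothetical brand voice; every example repeats it as the system message.
BRAND_SYSTEM_PROMPT = (
    "You are the support assistant for Acme Outdoor. "
    "Be warm, brief, and avoid jargon."
)


def make_example(user_text: str, ideal_reply: str) -> dict:
    """Build one training example: system prompt, user turn, ideal assistant reply."""
    return {
        "messages": [
            {"role": "system", "content": BRAND_SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": ideal_reply},
        ]
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(e) for e in examples) + "\n"


def validate_jsonl(text: str) -> int:
    """Check that each line parses and ends with a non-empty assistant turn."""
    count = 0
    for line_no, line in enumerate(text.splitlines(), start=1):
        messages = json.loads(line)["messages"]
        if messages[-1]["role"] != "assistant":
            raise ValueError(f"line {line_no}: last message must be from the assistant")
        if any(not m["content"].strip() for m in messages):
            raise ValueError(f"line {line_no}: empty message content")
        count += 1
    return count


examples = [
    make_example(
        "My tent pole snapped on the first trip.",
        "So sorry about that! We'll send a replacement pole today, free of charge.",
    ),
    make_example(
        "Do you ship to Canada?",
        "We do! Orders usually arrive within a week.",
    ),
]

training_file = to_jsonl(examples)
print(f"{validate_jsonl(training_file)} examples ready")
```

Two examples are far too few for a real job; the point is only that each line pairs a prompt with the reply the fine-tuned model should learn to give.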

Key Features of GPT-4 Fine-Tuning

The fine-tuning process leverages OpenAI’s established tools, but now it is optimized for GPT-4’s advanced architecture. Notable features include:

- Enhanced Customization: Developers can precisely influence the model’s behavior and knowledge base.

- Consistency in Output: Fine-tuned models can be made to maintain consistent formatting, tone, or responses, essential for professional applications.

- Higher Efficiency: Compared to training models from scratch, fine-tuning GPT-4 allows organizations to deploy sophisticated AI with reduced time and computational cost.

Additionally, OpenAI has emphasized ease of use with this feature. The fine-tuning workflow is designed to be accessible even to teams with limited AI experience, reducing barriers to customization. For more advanced users, OpenAI provides granular control options to achieve highly specialized outputs.

Implications for the Future

The launch of fine-tuning capabilities for GPT-4 signals a broader shift toward more user-centric AI development. As businesses increasingly adopt AI, the demand for models that can cater to specific business needs, without compromising on performance, will continue to grow. OpenAI’s move positions GPT-4 as a flexible and adaptable tool that can be refined to deliver optimal value in any given scenario.

By offering fine-tuning, OpenAI not only enhances GPT-4’s appeal but also reinforces the model’s role as a leading AI solution across diverse sectors. From startups seeking to automate niche tasks to large enterprises looking to scale intelligent systems, GPT-4’s fine-tuning capability provides a powerful resource for driving innovation.

OpenAI announced that fine-tuning GPT-4o will cost $25 for every million tokens used during training. After the model is set up, it will cost $3.75 per million input tokens and $15 per million output tokens. To help developers get started, OpenAI is offering 1 million free training tokens per day for GPT-4o and 2 million free tokens per day for GPT-4o mini until September 23. This makes it easier for developers to try out the fine-tuning service.
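The rates above translate into a simple back-of-the-envelope estimate. The sketch below assumes that training is billed on the tokens in the training file multiplied by the number of epochs, and that the daily free allowance is simply subtracted from that total; both are simplifying assumptions, and the function names are hypothetical.

```python
# Rates quoted in the announcement, in US dollars per million tokens.
TRAINING_USD_PER_M = 25.00
INPUT_USD_PER_M = 3.75
OUTPUT_USD_PER_M = 15.00


def training_cost(tokens_in_file: int, epochs: int = 1, free_tokens: int = 0) -> float:
    """Estimated training cost, assuming billing on file tokens times epochs."""
    billable = max(tokens_in_file * epochs - free_tokens, 0)
    return billable / 1_000_000 * TRAINING_USD_PER_M


def inference_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of using the fine-tuned model."""
    return (
        input_tokens / 1_000_000 * INPUT_USD_PER_M
        + output_tokens / 1_000_000 * OUTPUT_USD_PER_M
    )


# Hypothetical job: a 2M-token file trained for 3 epochs with 1M free tokens.
print(f"training:  ${training_cost(2_000_000, epochs=3, free_tokens=1_000_000):.2f}")
# Hypothetical month of usage: 10M input tokens and 2M output tokens.
print(f"inference: ${inference_cost(10_000_000, 2_000_000):.2f}")
```

Under these assumptions, training is a one-time charge while the per-token inference rates apply to every request, so for a heavily used model the ongoing cost is likely to matter more than the training bill.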

As AI continues to evolve, OpenAI’s focus on customization and adaptability with GPT-4 represents a critical step in making advanced AI accessible, scalable, and more aligned with real-world applications. This new capability is expected to accelerate the adoption of AI across industries, creating a new wave of AI-driven solutions tailored to specific challenges and opportunities.

This Week in Search News: Simple and Easy-to-Read Update

Here’s what happened in the world of Google and search engines this week:

1. Google’s June 2024 Spam Update

Google finished rolling out its June 2024 spam update over a period of seven days. This update aims to reduce spammy content in search results.

2. Changes to Google Search Interface

Google has removed the continuous scroll feature for search results. Instead, it’s back to the old system of pages.

3. New Features and Tests

- Link Cards: Google is testing link cards at the top of AI-generated overviews.

- Health Overviews: There are more AI-generated health overviews showing up in search results.

- Local Panels: Google is testing AI overviews in local information panels.

4. Search Rankings and Quality

- Improving Rankings: Google said it can improve its search ranking system but will only make improvements that work on a large scale.

- Measuring Quality: Google’s Elizabeth Tucker shared how they measure search quality.

5. Advice for Content Creators

- Brand Names in Reviews: Google says review content should not avoid mentioning brand names.

- Fixing 404 Pages: Google explained when it’s important to fix 404 error pages.

6. New Search Features in Google Chrome

Google Chrome for mobile devices has added several new search features to enhance user experience.

7. New Tests and Features in Google Search

- Credit Card Widget: Google is testing a new widget for credit card information in search results.

- Sliding Search Results: When making a new search query, the results might slide to the right.

8. Bing’s New Feature

Bing is now using AI to write “People Also Ask” questions in search results.

9. Local Search Ranking Factors

Menu items and popular times might be factors that influence local search rankings on Google.

10. Google Ads Updates

- Query Matching and Brand Controls: Google Ads updated its query matching and brand controls, and advertisers are happy with these changes.

- Lead Credits: Google will automate lead credits for Local Service Ads. Google says this is a good change, but some advertisers are worried.

- tROAS Insights Box: Google Ads is testing a new insights box for tROAS (Target Return on Ad Spend) in Performance Max and Standard Shopping campaigns.

- WordPress Tag Code: There is a new conversion code for Google Ads on WordPress sites.

These updates highlight how Google and other search engines are continuously evolving to improve user experience and provide better advertising tools.

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as the last time. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully, the issues will be resolved soon by the Facebook team.

Facebook had another problem today (March 20, 2024). According to Downdetector, a website that shows when other websites are not working, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, there was another problem that stopped people from using the site. Today, when people tried to use Facebook, it didn’t work like it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Since Facebook owns Messenger and Instagram, the problems with Facebook also meant that people had trouble using these apps. It made the situation even more frustrating for many users, who rely on these apps to stay connected with others.

During this recent problem, one thing is obvious: the internet is always changing, and even big websites like Facebook can have problems. While people wait for Facebook to fix the issue, it shows us how easily things online can go wrong. It’s a good reminder that we should have backup plans for staying connected online, just in case something like this happens again.