SEO

Optimizing Interaction To Next Paint: A Step-By-Step Guide

This post was sponsored by DebugBear. The opinions expressed in this article are the sponsor’s own.

Keeping your website fast is important for user experience and SEO.

The Core Web Vitals initiative by Google provides a set of metrics to help you understand the performance of your website.

The three Core Web Vitals metrics are:

- Largest Contentful Paint (LCP)

- Cumulative Layout Shift (CLS)

- Interaction to Next Paint (INP)

This post focuses on the recently introduced INP metric and what you can do to improve it.

How Is Interaction To Next Paint Measured?

INP measures how quickly your website responds to user interactions – for example, a click on a button. More specifically, INP measures the time in milliseconds between the user input and when the browser has finished processing the interaction and is ready to display any visual updates on the page.

Your website needs to complete this process in 200 milliseconds or less to get a “Good” score. Values over 500 milliseconds are considered “Poor”. A poor score in a Core Web Vitals metric can negatively impact your search engine rankings.

Google collects INP data from real visitors on your website as part of the Chrome User Experience Report (CrUX). This CrUX data is what ultimately impacts rankings.
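Google evaluates each Core Web Vital at the 75th percentile of real-user measurements, so a page has a “Good” INP when at least 75% of page views have an INP of 200 milliseconds or less. The sketch below shows that arithmetic. It assumes a simple nearest-rank percentile and a hypothetical list of INP samples; CrUX’s own aggregation is not reproduced here.

```typescript
// Rating bands for Interaction to Next Paint, in milliseconds:
// good = 200 ms or less, poor = over 500 ms, anything in between needs improvement.
type InpRating = "good" | "needs-improvement" | "poor";

function rateInp(inpMs: number): InpRating {
  if (inpMs <= 200) return "good";
  if (inpMs <= 500) return "needs-improvement";
  return "poor";
}

// Nearest-rank 75th percentile (a simplification for illustration).
function percentile75(samples: number[]): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil(0.75 * sorted.length); // 1-based rank
  return sorted[rank - 1];
}

// Hypothetical INP values from eight page views.
const samples = [80, 120, 150, 180, 210, 260, 340, 900];
const p75 = percentile75(samples);
console.log(p75, rateInp(p75)); // → 260 needs-improvement
```

Even though five of the eight samples are 210 ms or less, the 75th percentile falls on the sixth value, so this hypothetical page would not pass.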

Image created by DebugBear, May 2024

How To Identify & Fix Slow INP Times

The factors causing poor Interaction to Next Paint can often be complex and hard to figure out. Follow this step-by-step guide to understand slow interactions on your website and find potential optimizations.

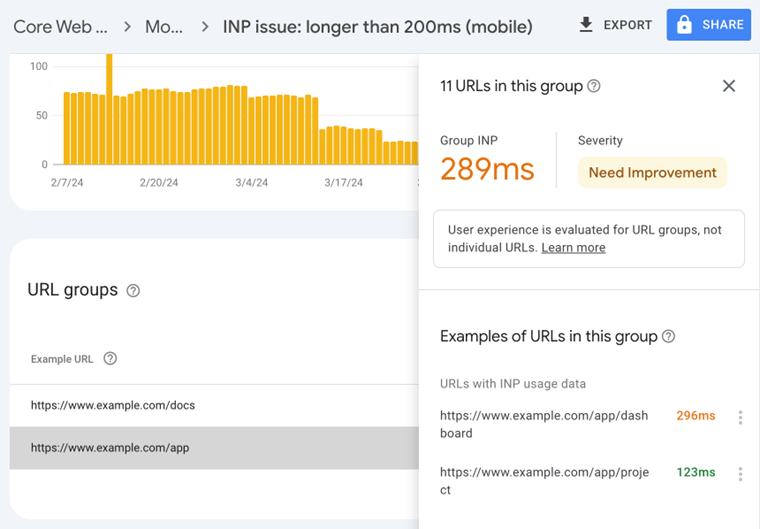

1. How To Identify A Page With Slow INP Times

Different pages on your website will have different Core Web Vitals scores. So you need to identify a slow page and then investigate what’s causing it to be slow.

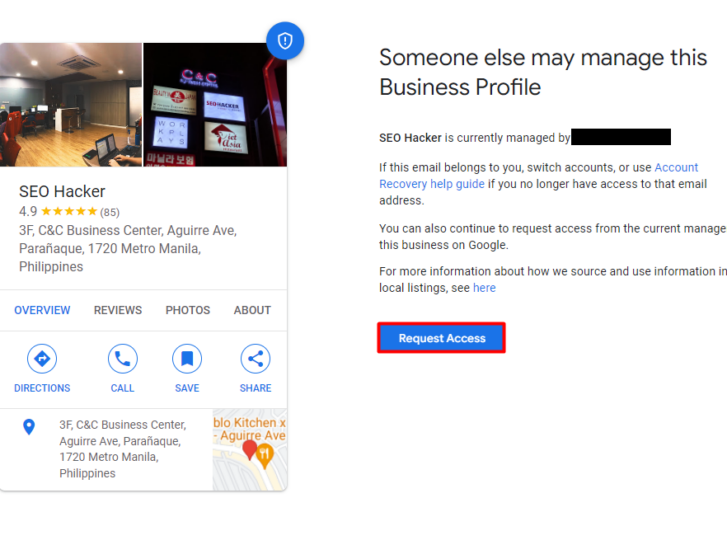

Using Google Search Console

One easy way to check your INP scores is using the Core Web Vitals section in Google Search Console, which reports data based on the Google CrUX data we’ve discussed before.

By default, page URLs are grouped into URL groups that cover many different pages. Be careful here – not every page in a group necessarily has the problem that Google is reporting. Instead, click on each URL group to see if URL-specific data is available for some pages, and then focus on those.

Screenshot of Google Search Console, May 2024

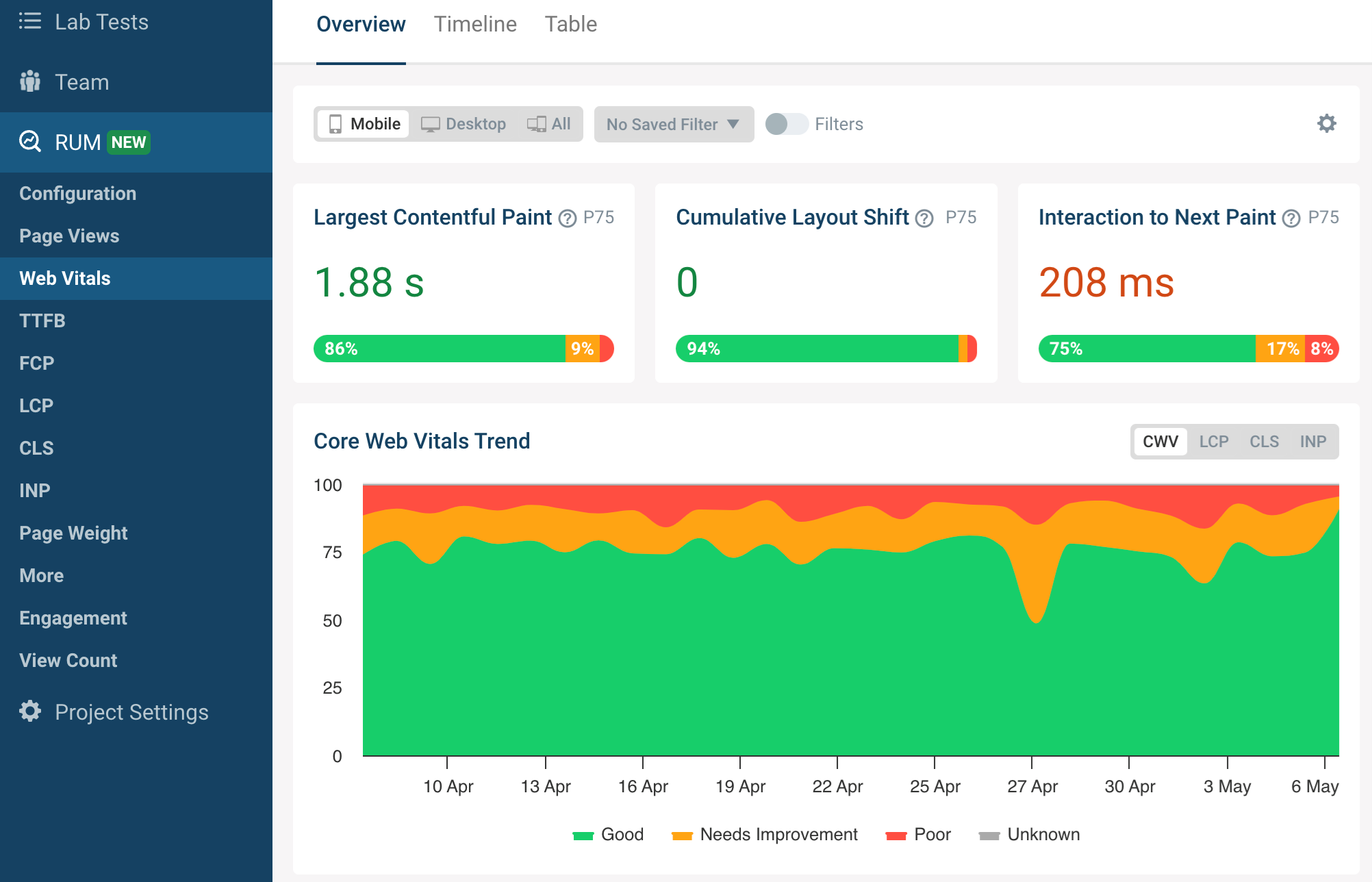

Using A Real-User Monitoring (RUM) Service

Google won’t report Core Web Vitals data for every page on your website, and it only provides the raw measurements without any details to help you understand and fix the issues. To get those details, you can use a real-user monitoring tool like DebugBear.

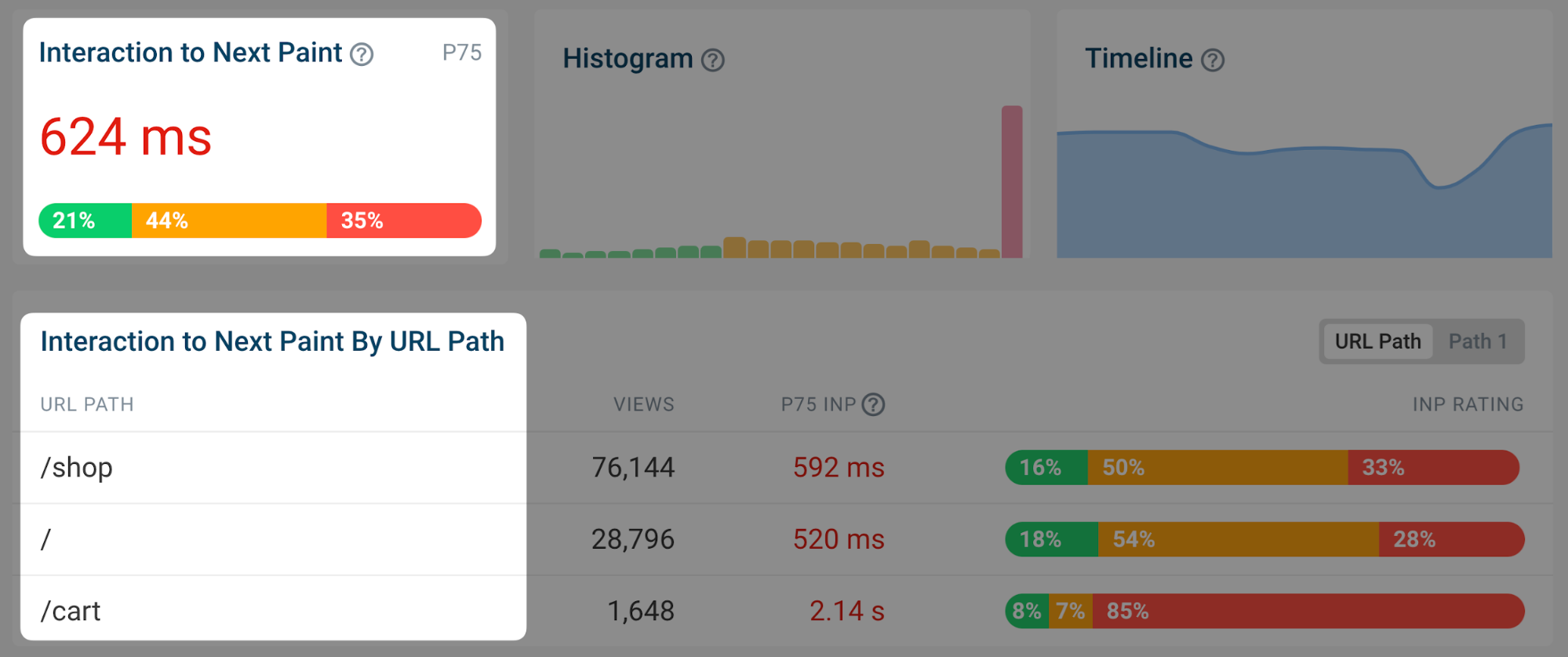

Real-user monitoring works by installing an analytics snippet on your website that measures how fast your website is for your visitors. Once that’s set up, you’ll have access to an Interaction to Next Paint dashboard like this:

Screenshot of the DebugBear Interaction to Next Paint dashboard, May 2024

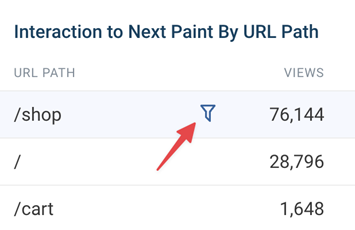

You can identify pages you want to optimize in the list, hover over the URL, and click the funnel icon to look at data for that specific page only.

Image created by DebugBear, May 2024

2. Figure Out What Element Interactions Are Slow

Different visitors on the same page will have different experiences. A lot of that depends on how they interact with the page: if they click on a background image, there’s little risk of the page suddenly freezing, but if they click on a button that starts some heavy processing, a freeze is much more likely. Users in that second scenario will experience a much higher INP.

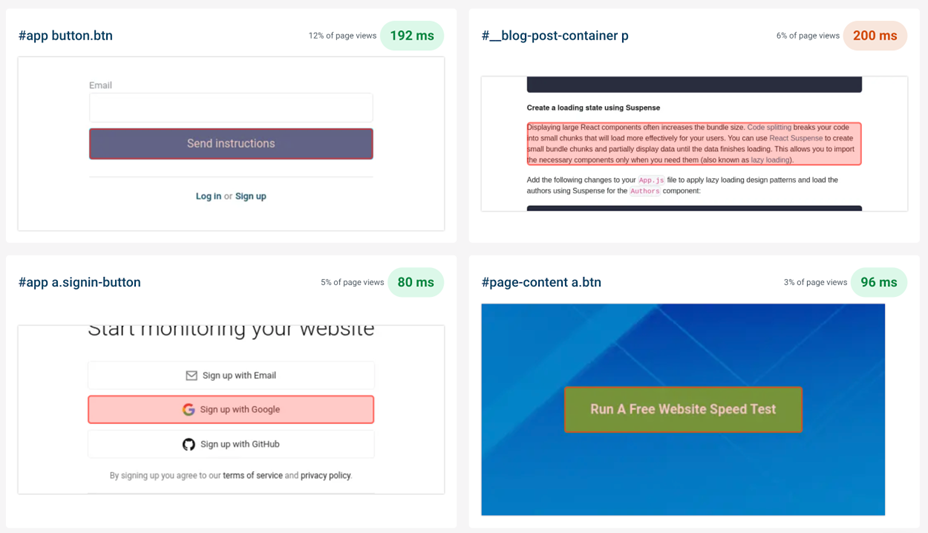

To help with that, RUM data provides a breakdown of what page elements users interacted with and how big the interaction delays were.

Screenshot of the DebugBear INP Elements view, May 2024

The screenshot above shows the page elements users interacted with, sorted by how frequently those interactions happen. To get the most out of your optimization work, focus on a slow interaction that affects many users.
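A RUM tool does this grouping for you, but the underlying idea is simple. The sketch below assumes a hypothetical `Interaction` record holding a CSS selector and a latency for each interaction; it groups the records by element, sorts the groups by frequency, and reports a nearest-rank 75th-percentile latency for each element.

```typescript
// Hypothetical shape of one interaction recorded by a RUM snippet.
interface Interaction {
  selector: string; // CSS selector of the element the user interacted with
  inpMs: number;    // interaction latency in milliseconds
}

interface ElementSummary {
  selector: string;
  count: number; // how many interactions hit this element
  p75Ms: number; // nearest-rank 75th percentile latency
}

function p75(values: number[]): number {
  const sorted = [...values].sort((a, b) => a - b);
  return sorted[Math.ceil(0.75 * sorted.length) - 1];
}

// Group interactions by element and list the most frequently used elements first.
function summarizeByElement(interactions: Interaction[]): ElementSummary[] {
  const groups = new Map<string, number[]>();
  for (const { selector, inpMs } of interactions) {
    const latencies = groups.get(selector) ?? [];
    latencies.push(inpMs);
    groups.set(selector, latencies);
  }
  return [...groups.entries()]
    .map(([selector, latencies]) => ({
      selector,
      count: latencies.length,
      p75Ms: p75(latencies),
    }))
    .sort((a, b) => b.count - a.count);
}

const summary = summarizeByElement([
  { selector: "button.add-to-cart", inpMs: 420 },
  { selector: "button.add-to-cart", inpMs: 610 },
  { selector: "button.add-to-cart", inpMs: 380 },
  { selector: "img.hero", inpMs: 40 },
]);
console.log(summary);
```

Elements near the top of the resulting list that also have a high `p75Ms` are the best candidates for the next steps.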

In DebugBear, you can click on the page element to add it to your filters and continue your investigation.

3. Identify What INP Component Contributes The Most To Slow Interactions

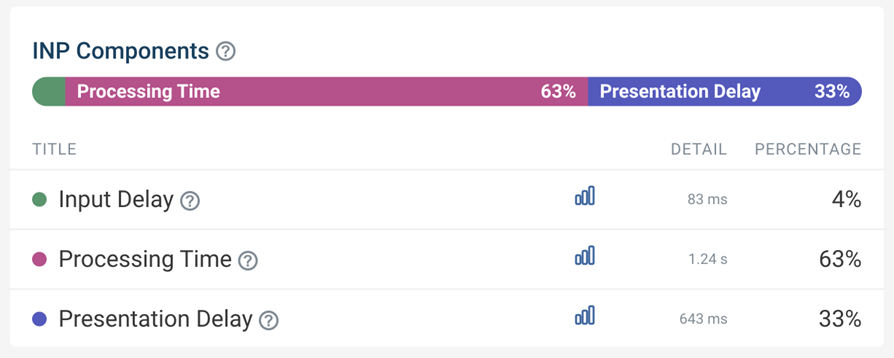

INP delays can be broken down into three different components:

- Input Delay: Background code that blocks the interaction from being processed.

- Processing Time: The time spent directly handling the interaction.

- Presentation Delay: Displaying the visual updates to the screen.

Identify which INP component contributes most to the slow INP time, and keep it in mind throughout your investigation.
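The three components add up to the interaction’s total latency. Browsers expose the underlying timestamps through the Event Timing API (`PerformanceEventTiming`), and the sketch below computes the breakdown from its `startTime`, `processingStart`, `processingEnd`, and `duration` fields, using hypothetical values for one slow click. In real entries the `duration` value is coarsened by the browser, so a presentation delay derived this way is approximate.

```typescript
// The subset of PerformanceEventTiming fields needed for the breakdown
// (milliseconds, all relative to the same time origin).
interface EventTimingLike {
  startTime: number;       // when the user input happened
  processingStart: number; // when event handlers started running
  processingEnd: number;   // when event handlers finished
  duration: number;        // from startTime until the next frame was presented
}

interface InpBreakdown {
  inputDelay: number;
  processingTime: number;
  presentationDelay: number;
}

function breakDownInteraction(e: EventTimingLike): InpBreakdown {
  return {
    inputDelay: e.processingStart - e.startTime,
    processingTime: e.processingEnd - e.processingStart,
    presentationDelay: e.startTime + e.duration - e.processingEnd,
  };
}

// Name of the component that contributes the most to the total.
function largestComponent(b: InpBreakdown): keyof InpBreakdown {
  const keys: (keyof InpBreakdown)[] = ["inputDelay", "processingTime", "presentationDelay"];
  return keys.reduce((max, key) => (b[key] > b[max] ? key : max));
}

// Hypothetical timings for one slow click (total: 360 ms).
const breakdown = breakDownInteraction({
  startTime: 1000,
  processingStart: 1030,
  processingEnd: 1310,
  duration: 360,
});
// inputDelay = 30, processingTime = 280, presentationDelay = 50
console.log(breakdown, largestComponent(breakdown));
```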

Screenshot of the DebugBear INP Components, May 2024

In this scenario, Processing Time is the biggest contributor to the slow INP time for the set of pages you’re looking at, but you need to dig deeper to understand why.

High processing time indicates that there is code intercepting the user interaction and running slowly. If instead you saw a high input delay, that would suggest background tasks are blocking the interaction from being processed, for example due to third-party scripts.

4. Check Which Scripts Are Contributing To Slow INP

Sometimes browsers report specific scripts that are contributing to a slow interaction. Your website likely contains both first-party and third-party scripts, both of which can contribute to slow INP times.

A RUM tool like DebugBear can collect and surface this data. The main thing you want to look at is whether you mostly see your own website code or code from third parties.
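If you export script attribution data from a RUM tool, a rough first-party versus third-party split only needs each script’s URL. This is a minimal sketch assuming hypothetical records with a `url` and an attributed `durationMs`; `example.com` stands in for your own domain, and scripts without a usable URL (for example, ones reported as “N/A”) go into an `unknown` bucket.

```typescript
interface ScriptAttribution {
  url: string;        // script URL as reported by the RUM tool (may be missing or "N/A")
  durationMs: number; // time attributed to this script during the interaction
}

type Party = "firstParty" | "thirdParty" | "unknown";

// A script counts as first-party if it is served from your site or one of its subdomains.
function partyOf(scriptUrl: string, siteHost: string): Party {
  let host: string;
  try {
    host = new URL(scriptUrl).hostname;
  } catch {
    return "unknown"; // not a parseable absolute URL
  }
  return host === siteHost || host.endsWith("." + siteHost) ? "firstParty" : "thirdParty";
}

// Sum the attributed script time per party.
function timeByParty(scripts: ScriptAttribution[], siteHost: string) {
  const totals = { firstParty: 0, thirdParty: 0, unknown: 0 };
  for (const s of scripts) totals[partyOf(s.url, siteHost)] += s.durationMs;
  return totals;
}

const totals = timeByParty(
  [
    { url: "https://www.example.com/js/app.js", durationMs: 40 },
    { url: "https://cdn.analytics-vendor.example/tag.js", durationMs: 310 },
    { url: "N/A", durationMs: 25 },
  ],
  "example.com",
);
console.log(totals);
```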

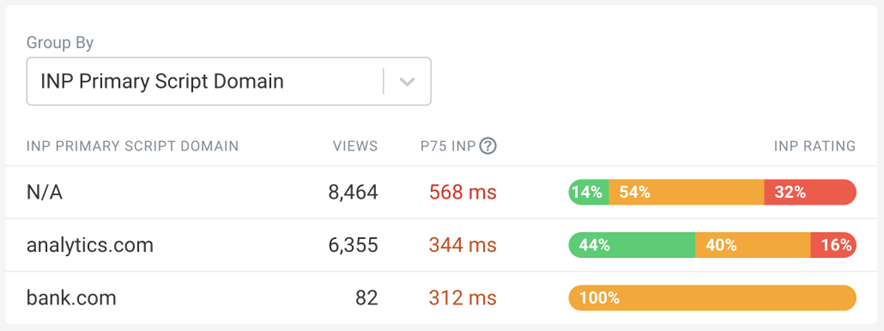

Screenshot of the INP Primary Script Domain Grouping in DebugBear, May 2024

Tip: When a script or source code function is marked as “N/A”, this can indicate that the script comes from a different origin and has additional security restrictions that prevent RUM tools from capturing more detailed information.

This now begins to tell a story: it appears that analytics/third-party scripts are the biggest contributors to the slow INP times.

5. Identify Why Those Scripts Are Running

At this point, you have a strong suspicion that most of the INP delay, at least on the pages and elements you’re looking at, is due to third-party scripts. But how can you tell whether those are general tracking scripts or whether they actually play a role in handling the interaction?

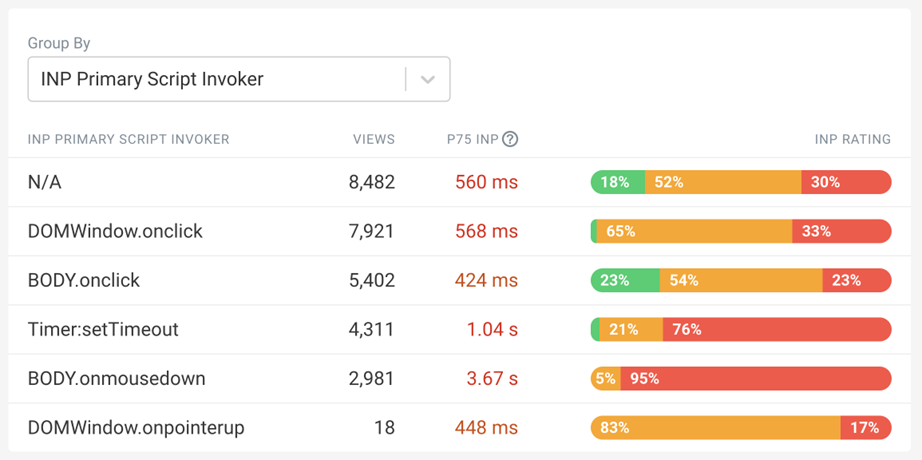

DebugBear offers a breakdown that helps you see why the code is running, called the INP Primary Script Invoker breakdown. That’s a bit of a mouthful: multiple different scripts can be involved in slowing down an interaction, and here you only see the biggest contributor. The “Invoker” is a value that the browser reports about what caused this code to run.

Screenshot of the INP Primary Script Invoker Grouping in DebugBear, May 2024

The following invoker names are examples of page-wide event handlers:

- onclick

- onmousedown

- onpointerup

You can see those a lot in the screenshot above, which tells you that the analytics script is tracking clicks anywhere on the page.

In contrast, invoker names like these would indicate event handlers for a specific element on the page:

- .load_more.onclick

- #logo.onclick
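When you export invoker names in bulk, a rough heuristic can separate the two cases. The rule below is an assumption derived only from the example names above, not from a browser specification; real invoker strings can take other forms, so treat the result as a starting point for manual review.

```typescript
type HandlerScope = "page-wide" | "element-specific";

// Heuristic based on the example invoker names: a bare event name such as
// "onclick" suggests a page-wide handler, while a selector prefix such as
// ".load_more." or "#logo." suggests a handler on one specific element.
function classifyInvoker(invoker: string): HandlerScope {
  const lastDot = invoker.lastIndexOf(".");
  return lastDot > 0 ? "element-specific" : "page-wide";
}

// Count how often each scope appears among a set of slow interactions.
function countScopes(invokers: string[]): Record<HandlerScope, number> {
  const counts: Record<HandlerScope, number> = { "page-wide": 0, "element-specific": 0 };
  for (const invoker of invokers) counts[classifyInvoker(invoker)] += 1;
  return counts;
}

console.log(countScopes(["onclick", "onmousedown", "onpointerup", "#logo.onclick"]));
```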

6. Review Specific Page Views

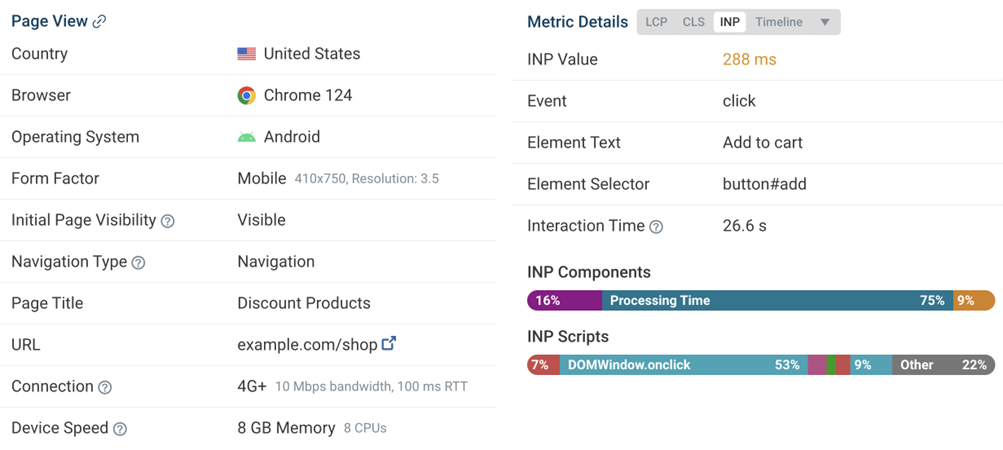

A lot of the data you’ve seen so far is aggregated. It’s now time to look at the individual INP events, to form a definitive conclusion about what’s causing slow INP in this example.

Real user monitoring tools like DebugBear generally offer a way to review specific user experiences. For example, you can see what browser they used, how big their screen is, and what element led to the slowest interaction.

Screenshot of a Page View in DebugBear Real User Monitoring, May 2024

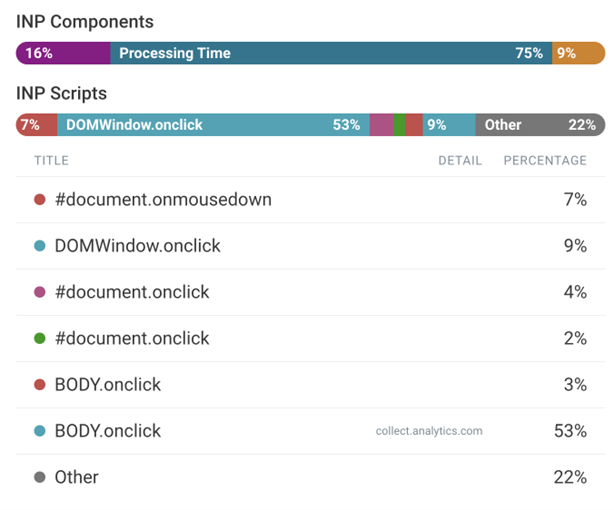

As mentioned before, multiple scripts can contribute to overall slow INP. The INP Scripts section shows you the scripts that were run during the INP interaction:

Screenshot of the DebugBear INP script breakdown, May 2024

You can review each of these scripts in more detail to understand why they run and what’s causing them to take longer to finish.

7. Use The DevTools Profiler For More Information

Real user monitoring tools have access to a lot of data, but for performance and security reasons they can’t access everything that’s available. That’s why it’s a good idea to also use Chrome DevTools to measure your page performance.

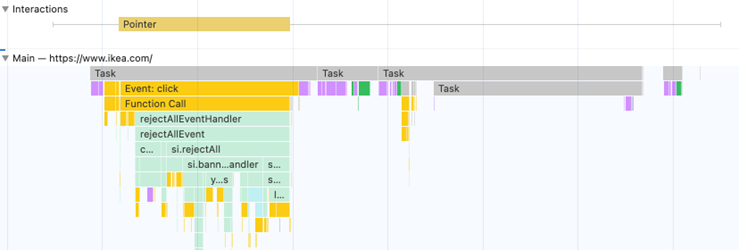

To debug INP in DevTools you can measure how the browser processes one of the slow interactions you’ve identified before. DevTools then shows you exactly how the browser is spending its time handling the interaction.

Screenshot of a performance profile in Chrome DevTools, May 2024

How You Might Resolve This Issue

In this example, you or your development team could resolve this issue by:

- Working with the third-party script provider to optimize their script.

- Removing the script if it is not essential to the website, or finding an alternative provider.

- Adjusting how your own code interacts with the script.
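For the last option, one common pattern, assuming the slow third-party call (for example, an analytics event) is triggered from your own event handler, is to update the page first and move the non-urgent call to a later task. The sketch uses hypothetical `updateUi` and `trackEvent` callbacks. A zero-delay `setTimeout` gives the browser an opportunity to render before the deferred work runs, though it does not guarantee a paint in between.

```typescript
// Resolve on a later task so the browser gets a chance to render in between.
function yieldToMain(): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Hypothetical click handler: the visible update runs first, and the
// non-urgent tracking call is moved to a later task.
async function handleAddToCart(
  updateUi: () => void,
  trackEvent: (eventName: string) => void,
): Promise<void> {
  updateUi();                // visible feedback for the user
  await yieldToMain();       // end the current task before the slow call
  trackEvent("add_to_cart"); // analytics call runs in a separate, later task
}
```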

How To Investigate High Input Delay

In the previous example, most of the INP time was spent running code in response to the interaction. But often the browser is already busy running other code when a user interaction happens. In that case, the INP component breakdown shows a high input delay value.

This can happen for various reasons, for example:

- The user interacted with the website while it was still loading.

- A scheduled task is running on the page, for example an ongoing animation.

- The page is loading and rendering new content.
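When the background work is your own code, splitting a long task into smaller pieces limits how long an interaction has to wait. The sketch below is one way to do that, assuming the work can be expressed as a hypothetical per-item `work` callback: it processes items in chunks and yields to the event loop between chunks with `setTimeout`. Third-party code can’t be changed this way, so for those scripts the options listed in the previous section still apply.

```typescript
// Resolve on a later task so pending input events can be handled first.
function yieldToMain(): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Run `work` over all items, yielding between chunks. An interaction that
// arrives mid-batch then waits roughly one chunk's worth of work, not the whole batch.
async function processInChunks<T>(
  items: T[],
  work: (item: T) => void,
  chunkSize = 50,
): Promise<void> {
  for (let i = 0; i < items.length; i += chunkSize) {
    for (const item of items.slice(i, i + chunkSize)) {
      work(item);
    }
    await yieldToMain();
  }
}

// Usage: process 120 hypothetical items in chunks of 50.
const batch = Array.from({ length: 120 }, (_, i) => i);
let processed = 0;
processInChunks(batch, () => { processed += 1; }).then(() => console.log(processed)); // 120
```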

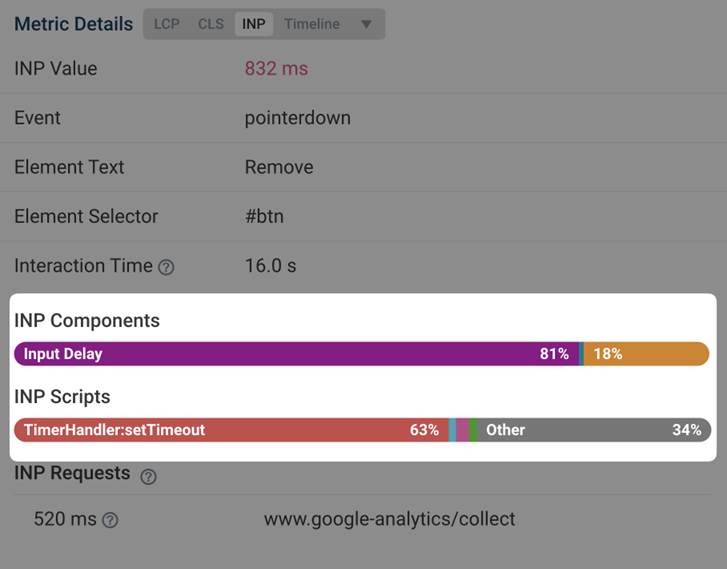

To understand what’s happening, you can review the invoker name and the INP scripts section of individual user experiences.

Screenshot of the INP Component breakdown within DebugBear, May 2024

In this screenshot, you can see that a timer is running code that coincides with the start of a user interaction.

The script can be opened to reveal the exact code that is run:

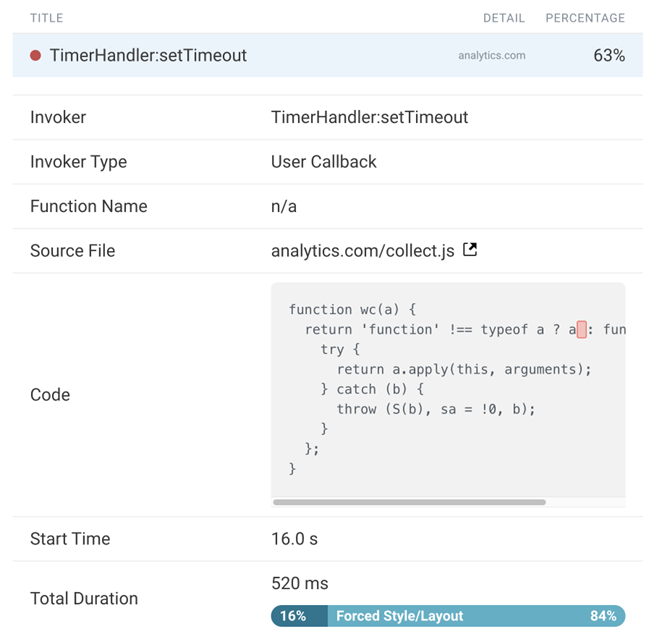

Screenshot of INP script details in DebugBear, May 2024

The source code shown in the previous screenshot comes from a third-party user tracking script that is running on the page.

At this stage, you and your development team can continue with the INP workflow presented earlier in this article, for example by debugging with browser DevTools or contacting the third-party provider for support.

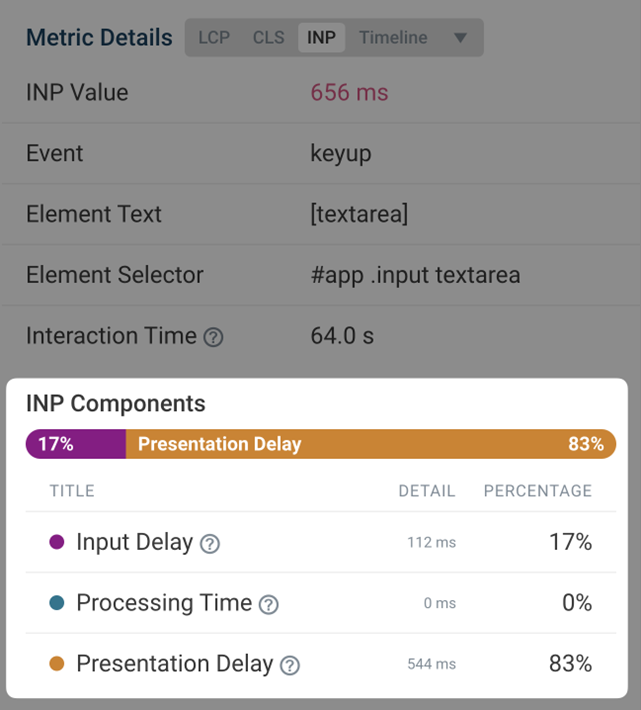

How To Investigate High Presentation Delay

Presentation delay tends to be more difficult to debug than input delay or processing time. Often it’s caused by browser behavior rather than a specific script. But as before, you still start by identifying a specific page and a specific interaction.

You can see an example interaction with high presentation delay here:

Screenshot of an interaction with high presentation delay, May 2024

You can see that this happens when the user enters text into a form field. In this example, many visitors pasted large amounts of text that the browser had to process.

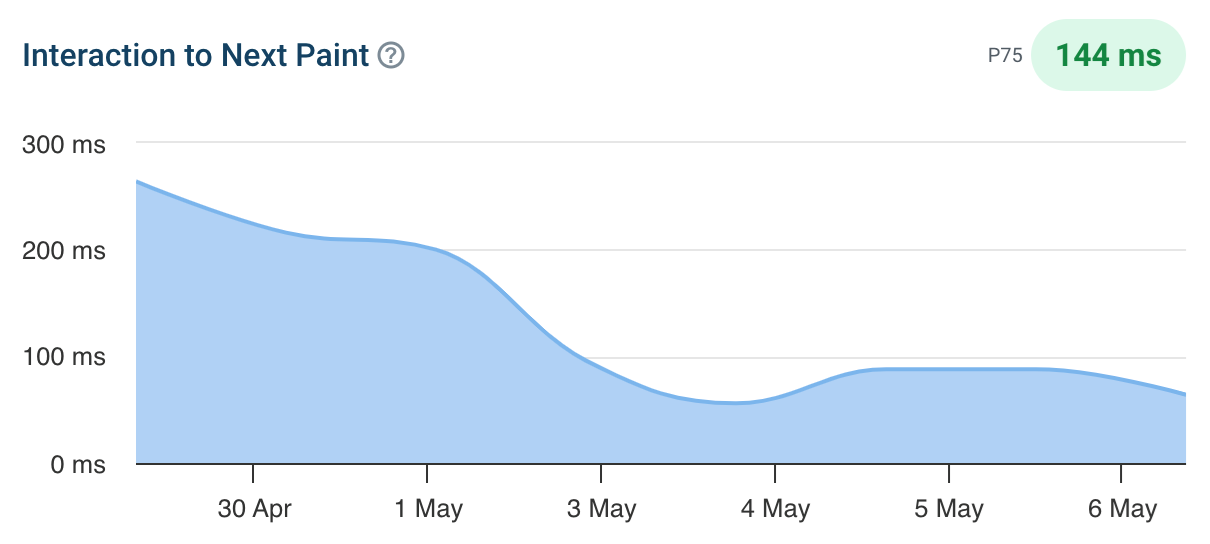

Here the fix was to delay the processing, show a “Waiting…” message to the user, and then complete the processing later on. You can see how the INP score improves from May 3 onward:
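A minimal sketch of that kind of fix follows. It assumes a hypothetical `processText` function that does the expensive work and a simple `display` object standing in for the element that shows the message (in a browser, this would typically be an element’s `textContent`).

```typescript
// Stand-in for the element that shows the status message.
interface StatusDisplay {
  text: string;
}

// Resolve on a later task so the status update can be painted first.
function yieldToMain(): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Respond to the paste immediately, then do the expensive work in a later task.
async function handlePaste(
  pasted: string,
  display: StatusDisplay,
  processText: (text: string) => string,
): Promise<string> {
  display.text = "Waiting…";          // cheap update the browser can paint quickly
  await yieldToMain();                // end the current task before the heavy work
  const result = processText(pasted); // expensive processing happens afterwards
  display.text = "Done";
  return result;
}
```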

Screenshot of an Interaction to Next Paint timeline in DebugBear, May 2024

Get The Data You Need To Improve Interaction To Next Paint

Setting up real user monitoring helps you understand how users experience your website and what you can do to improve it. Try DebugBear now by signing up for a free 14-day trial.

Screenshot of the DebugBear Core Web Vitals dashboard, May 2024

Google’s CrUX data is aggregated over a 28-day period, which means that it’ll take a while before you notice a regression. With real-user monitoring you can see the impact of website changes right away and get alerted automatically when there’s a big change.

DebugBear monitors lab data, CrUX data, and real user data. That way you have all the data you need to optimize your Core Web Vitals in one place.

Ready to start optimizing your website? Sign up for DebugBear and get the data you need to deliver great user experiences.

Image Credits

Featured Image: Image by Redesign.co. Used with permission.

How To Build A Diverse & Healthy Link Profile

Search is evolving at an incredible pace and new features, formats, and even new search engines are popping up within the space.

Google’s algorithm still prioritizes backlinks when ranking websites. If you want your website to be visible in search results, you must account for backlinks and your backlink profile.

A healthy backlink profile needs to be diverse.

In this guide, we’ll examine how to build and maintain a diverse backlink profile that powers your website’s search performance.

What Does A Healthy Backlink Profile Look Like?

As Google states in its guidelines, it primarily discovers pages through links from other pages, and the links pointing to your pages are acquired through promotion and naturally over time.

In practice, a healthy backlink profile can be divided into three main areas: the distribution of link types, the mix of anchor text, and the ratio of followed to nofollowed links.

Let’s look at these areas and how they should look within a healthy backlink profile.

Distribution Of Link Types

One aspect of your backlink profile that needs to be diversified is link types.

Having predominantly one kind of link in your profile looks unnatural to Google, and it also indicates that your content strategy isn’t diverse enough.

Some of the various link types you should see in your backlink profile include:

- Anchor text links.

- Image links.

- Redirect links.

- Canonical links.

Here is an example of the breakdown of link types at my company, Whatfix (via Semrush):

Most links should be anchor text links and image links, as these are the most common ways to link on the web, but you should see some of the other types of links as they are picked up naturally over time.

Mix Of Anchor Text

Next, ensure your backlink profile has an appropriate anchor text variance.

Again, if you overoptimize for a specific type of anchor text, it will appear suspicious to search engines like Google and could have negative repercussions.

Here are the various types of anchor text you might find in your backlink profile:

- Branded anchor text – Anchor text that is your brand name or includes your brand name.

- Empty – Links that have no anchor text.

- Naked URLs – Anchor text that is a URL (e.g., www.website.com).

- Exact match keyword-rich anchor text – Anchor text that exactly matches the keyword the linked page targets (e.g., “blue shoes”).

- Partial match keyword-rich anchor text – Anchor text that partially or closely matches the keyword the linked page targets (e.g., “comfortable blue footwear options”).

- Generic anchor text – Anchor text such as “this website” or “here.”

To maintain a healthy backlink profile, aim for a mix of anchor text within a similar range to this:

- Branded anchor text – 35-40%.

- Partial match keyword-rich anchor text – 15-20%.

- Generic anchor text – 10-15%.

- Exact match keyword-rich anchor text – 5-10%.

- Naked URLs – 5-10%.

- Empty – 3-5%.
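If your backlink tool lets you export anchor text counts by type, checking them against these ranges is simple arithmetic. The sketch below assumes hypothetical category keys and counts. The ranges are approximate guidelines, so they don’t add up to exactly 100%.

```typescript
type AnchorType = "branded" | "partialMatch" | "generic" | "exactMatch" | "nakedUrl" | "empty";
type Verdict = "below range" | "ok" | "above range";

// Target ranges (percent of all backlinks) from the list above.
const targetRanges: Record<AnchorType, [number, number]> = {
  branded: [35, 40],
  partialMatch: [15, 20],
  generic: [10, 15],
  exactMatch: [5, 10],
  nakedUrl: [5, 10],
  empty: [3, 5],
};

function checkAnchorMix(counts: Record<AnchorType, number>): Record<AnchorType, Verdict> {
  const anchorTypes = Object.keys(targetRanges) as AnchorType[];
  const total = anchorTypes.reduce((sum, t) => sum + counts[t], 0);
  const report = {} as Record<AnchorType, Verdict>;
  for (const type of anchorTypes) {
    const [min, max] = targetRanges[type];
    const share = total === 0 ? 0 : (counts[type] * 100) / total; // percent of all anchors
    report[type] = share < min ? "below range" : share > max ? "above range" : "ok";
  }
  return report;
}

// Hypothetical counts for 100 backlinks with too many exact-match anchors.
const report = checkAnchorMix({
  branded: 38, partialMatch: 18, generic: 12, exactMatch: 22, nakedUrl: 6, empty: 4,
});
console.log(report);
```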

This distribution of anchor text represents a natural mix of differing anchor texts. It is common for the majority of anchors to be branded or partially branded because most sites that link to your site will default to your brand name when linking. It also makes sense that the next most common anchors would be partial-match keywords or generic anchor text, because these are natural choices within the context of a web page.

Exact-match anchor text is rare because it only happens when you are the best resource for a specific term, and the site owner knows your page exists.

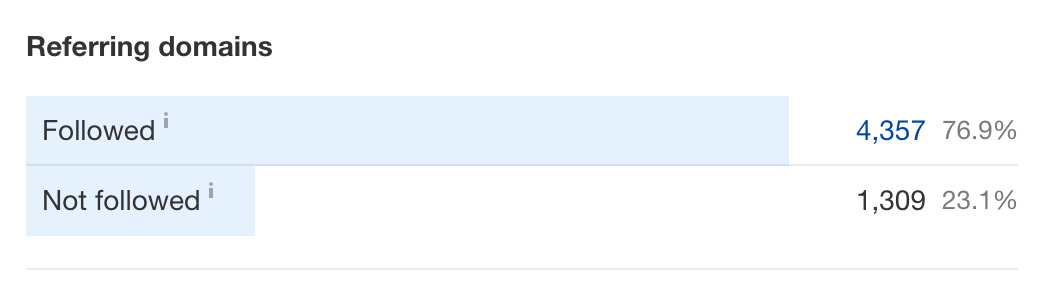

Ratio Of Followed Vs. Nofollowed Backlinks

Lastly, you should monitor the ratio of followed vs. nofollowed links pointing to your website.

If you need a refresher on what nofollowed backlinks are or why someone might apply the nofollow tag to a link pointing to your site, check out Google’s guide on how to qualify outbound links to Google.

Nofollow attributes should only be applied to paid links or links pointing to a site the linking site doesn’t trust.

While it is not uncommon or suspicious to have some nofollow links (people misunderstand the purpose of the nofollow attribute all the time), a healthy backlink profile will have far more followed links.

You should aim for a ratio of 80%:20% or 70%:30% in favor of followed links. For example, here is what the followed vs. nofollowed ratio looks like for my company’s backlink profile (according to Ahrefs):

Screenshot from Ahrefs, May 2024

You may see links with other rel attributes, such as UGC or Sponsored.

The “UGC” attribute tags links from user-generated content, while the “Sponsored” attribute tags links from sponsored or paid sources. These attributes are slightly different from the nofollow tag, but they work in essentially the same way, letting Google know these links aren’t trusted or endorsed by the linking site. You can simply group these links with nofollowed links when calculating your ratio.
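To calculate the ratio from a list of `rel` attribute values (for example, from a crawler or backlink tool export), the sketch below groups `ugc` and `sponsored` with `nofollow`, as described above, and returns the followed share as a percentage. The input format is an assumption: one `rel` string per backlink, with an empty string for links that have no `rel` attribute.

```typescript
// rel tokens that stop a link from counting as "followed" for this ratio.
const UNFOLLOWED = new Set(["nofollow", "ugc", "sponsored"]);

// Percentage of backlinks that carry none of the unfollowed rel tokens.
function followedShare(relValues: string[]): number {
  if (relValues.length === 0) return 0;
  const followed = relValues.filter((rel) =>
    rel.toLowerCase().split(/\s+/).every((token) => !UNFOLLOWED.has(token)),
  ).length;
  return (followed * 100) / relValues.length;
}

// Hypothetical sample of 10 backlinks: 7 followed, 3 nofollow/ugc/sponsored.
const share = followedShare(["", "", "", "", "", "noopener", "", "nofollow", "ugc", "sponsored noopener"]);
console.log(`${share}% followed`); // 70% followed
```

A result of 70% sits at the lower end of the 70:30 to 80:20 range suggested above.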

Importance Of Diversifying Your Backlink Profile

So why is it important to diversify your backlink profile anyway? Well, there are three main reasons you should consider:

- Avoiding overoptimization.

- Diversifying traffic sources.

- Finding new audiences.

Let’s dive into each of these.

Avoiding Overoptimization

First and foremost, diversifying your backlink profile is the best way to protect yourself from overoptimization and the damaging penalties that can come with it.

As SEO pros, our job is to optimize websites to improve performance, but overoptimizing in any facet of our strategy – backlinks, keywords, structure, etc. – can result in penalties that limit visibility within search results.

In the previous section, we covered the elements of a healthy backlink profile. If you stray too far from that model, your site might look suspicious to search engines like Google and you could be handed a manual or algorithmic penalty, suppressing your rankings in search.

Considering how regularly Google updates its search algorithm these days (and how little information surrounds those updates), you could see your performance tank and have no idea why.

This is why it’s so important to keep a watchful eye on your backlink profile and how it’s shaping up.

Diversifying Traffic Sources

Another reason to cultivate a diverse backlink profile is to ensure you’re diversifying your traffic sources.

Google penalties come swiftly and can often be a surprise. If you rely on Google for all of your traffic, a penalty can hit your site badly, and recovery may be difficult.

However, diversifying your traffic sources (search, social, email, etc.) will mitigate risk – similar to a stock portfolio – as you’ll have other traffic sources to provide a steady flow of visitors if another source suddenly dips.

Part of building a diverse backlink profile is acquiring a diverse set of backlinks and backlink types, and this strategy will also help you find differing and varied sources of traffic.

Finding New Audiences

Finally, building a diverse backlink profile is essential, as doing so will also help you discover new audiences.

If you acquire links from the same handful of websites and platforms, you will struggle to expand your audience and build awareness for your website.

While it’s important to acquire links from sites that cater to your existing audience, you should also explore ways to build links that can tap into new audiences. The best way to do this is by casting a wide net with various link acquisition tactics and strategies.

A diverse backlink profile indicates a varied approach to SEO and marketing that will help bring new visitors and awareness to your site.

Building A Diverse Backlink Profile

Now that you know what a healthy backlink profile looks like and why it’s important to diversify, how do you build diversity into your site’s backlink profile?

This comes down to your link acquisition strategy and the types of backlinks you actively pursue. To guide your strategy, let’s break link building into three main categories:

- Foundational links.

- Content promotion.

- Community involvement.

Here’s how to approach each area.

Foundational Links

Foundational links represent those links that your website simply should have. These are opportunities where a backlink would exist if all sites were known to all site owners.

Some examples of foundational links include:

- Mentions – Websites that mention your brand in some way (brand name, product, employees, proprietary data, etc.) on their website but don’t link.

- Partners – Websites that belong to real-world partners or companies you connect with offline and should also connect (link) with online.

- Associations or groups – Websites for offline associations or groups you belong to where your site should be listed with a link.

- Sponsorships – Any events or organizations your company sponsors might have websites that could (and should) link to your site.

- Sites that link to competitors – If a website is linking to a competitor, there is a strong chance it would make sense for them to link to your site as well.

These link opportunities should set the foundation for your link acquisition efforts.

As the baseline for your link building strategy, you should start by exhausting these opportunities first to ensure you’re not missing highly relevant links to bolster your backlink profile.

Content Promotion

Next, consider content promotion as a strategy for building a healthy, diverse backlink profile.

Content promotion is much more proactive than the foundational link acquisition mentioned above. Rather than capitalizing on an existing opportunity, you create the opportunity yourself by producing link-worthy content.

Some examples of content promotion for links are:

- Digital PR – Digital PR campaigns have numerous benefits and goals beyond link acquisition, but backlinks should be a primary KPI.

- Original research – Similar to digital PR, original research should focus on providing valuable data to your audience. Still, you should also make sure any citations or references to your research are correctly linked.

- Guest content – Whether regular columns or one-off contributions, providing guest content to websites is still a viable link acquisition strategy – when done right. The best way to gauge your guest content strategy is to ask yourself whether you would still write the content for a site without a guaranteed backlink, knowing you’ll still build authority and get your message in front of a new audience.

- Original imagery – Along with research and data, if your company creates original imagery that offers unique value, you should promote those images and ask for citation links.

Content promotion is a viable avenue for building a healthy backlink profile as long as the content you’re promoting is worthy of links.

Community Involvement

Community involvement is the final piece of your link acquisition puzzle when building a diverse backlink profile.

After pursuing all foundational opportunities and manually promoting your content, you should ensure your brand is active and represented in all the spaces and communities where your audience engages.

In terms of backlinks, this could mean:

- Wikipedia links – Wikipedia gets over 4 billion monthly visits, so backlinks here can bring significant referral traffic to your site. However, acquiring these links is difficult as these pages are moderated closely, and your site will only be linked if it is legitimately a top resource on the web.

- Forums (Reddit, Quora, etc.) – Another great place to get backlinks that drive referral traffic is forums like Reddit and Quora. Again, these forums are strictly moderated, and earning link placements on these sites requires a page that delivers significant and unique value to a specific audience.

- Social platforms – Social media platforms and groups represent communities where your brand should be active and engaged. While these strategies are likely handled by other teams outside SEO and focus on different metrics, you should still be intentional about converting these interactions into links when or where possible.

- Offline events – While it may seem counterintuitive to think of offline events as a potential source for link acquisition, legitimate link opportunities exist here. After all, most businesses, brands, and people you interact with at these events also have websites, and networking can easily translate to online connections in the form of links.

While most of the link opportunities listed above will have the nofollow link attribute due to the nature of the sites associated with them, they are still valuable additions to your backlink profile as these are powerful, trusted domains.

These links help diversify your traffic sources by bringing substantial referral traffic, and that traffic is highly qualified as these communities share your audience.

How To Avoid Developing A Toxic Backlink Profile

Now that you’re familiar with the link building strategies that can help you cultivate a healthy, diverse backlink profile, let’s discuss what you should avoid.

As mentioned before, if you overoptimize one strategy or link, it can seem suspicious to search engines and cause your site to receive a penalty. So, how do you avoid filling your backlink profile with toxic links?

Remember The “Golden Rule” Of Link Building

One simple way to guide your link acquisition strategy and avoid running afoul of search engines like Google is to follow one “golden rule.”

That rule is to ask yourself: If search engines like Google didn’t exist, and the only way people could navigate the web was through backlinks, would you want your site to have a link on the prospective website?

Thinking this way strips away all the tactical, SEO-focused portions of the equation and only leaves the human elements of linking where two sites are linked because it makes sense and makes the web easier to navigate.

Avoid Private Blog Networks (PBNs)

Another good rule is to avoid looping your site into private blog networks (PBNs). Of course, it’s not always obvious or easy to spot a PBN.

However, there are some common traits or red flags you can look for, such as:

- The person offering you a link placement mentions they have a list of domains they can share.

- The prospective linking site has little to no traffic and doesn’t appear to have human engagement (blog comments, social media followers, blog views, etc.).

- The website features thin content and little investment into user experience (UX) and design.

- The website covers generic topics and categories, catering to any and all audiences.

- Pages on the site feature numerous external links but few internal links.

- The prospective domain’s backlink profile features overoptimization in any of the previously discussed forms (a high density of exact match anchor text, an abnormal ratio of nofollowed links, only one or two link types, etc.).

Again, diversification – in both tactics and strategies – is crucial to building a healthy backlink profile, but steering clear of obvious PBNs and remembering the ‘golden rule’ of link building will go a long way toward keeping your profile free from toxicity.

Evaluating Your Backlink Profile

As you work diligently to build and maintain a diverse, healthy backlink profile, you should also carve out time to evaluate it regularly from a more analytical perspective.

There are two main ways to evaluate the merit of your backlinks: leverage tools to analyze backlinks and compare your backlink profile to the greater competitive landscape.

Leverage Tools To Analyze Backlink Profile

There are a variety of third-party tools you can use to analyze your backlink profile.

These tools can provide helpful insights, such as the total number of backlinks and referring domains. You can use these tools to analyze your full profile, broken down by:

- Followed vs. nofollowed.

- Authority metrics (Domain Rating, Domain Authority, Authority Score, etc.).

- Backlink types.

- Location or country.

- Anchor text.

- Top-level domain types.

- And more.

You can also use these tools to track new incoming backlinks, as well as lost backlinks, to help you better understand how your backlink profile is growing.
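Most backlink tools report new and lost links directly. If you only have periodic exports of referring domains, the comparison can be sketched as below, assuming each export is a hypothetical plain list of domain names.

```typescript
// Compare two exports of referring domains and list what was gained and lost.
function diffReferringDomains(
  previous: string[],
  current: string[],
): { gained: string[]; lost: string[] } {
  // Normalize whitespace and case so "Partner.example" and "partner.example" match.
  const prev = new Set(previous.map((d) => d.trim().toLowerCase()));
  const curr = new Set(current.map((d) => d.trim().toLowerCase()));
  return {
    gained: [...curr].filter((d) => !prev.has(d)).sort(),
    lost: [...prev].filter((d) => !curr.has(d)).sort(),
  };
}

// Hypothetical monthly snapshots.
const change = diffReferringDomains(
  ["news.example", "blog.example", "partner.example"],
  ["blog.example", "Partner.example", "forum.example"],
);
console.log(change);
```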

Some of the best tools for analyzing your backlink profile are:

Many of these tools also have features that estimate how toxic or suspicious your profile might look to search engines, which can help you detect potential issues early.

Compare Your Backlink Profile To The Competitive Landscape

Lastly, you should compare your overall backlink profile to those of your competitors and those competing with your site in the search results.

Again, the previously mentioned tools can help with this analysis – as far as providing you with the raw numbers – but the key areas you should compare are:

- Total number of backlinks.

- Total number of referring domains.

- Breakdown of authority metrics of links (Domain Rating, Domain Authority, Authority Score, etc.).

- Authority metrics of competing domains.

- Link growth over the last two years.

Comparing your backlink profile to others within your competitive landscape will help you assess where your domain currently stands and provide insight into how far you must go if you’re lagging behind competitors.

It’s worth noting that it’s not as simple as “whoever has the most backlinks performs best in search.”

Still, these numbers are typically solid indicators of how search engines gauge the authority of your competitors’ domains, and you’ll likely find a correlation between strong backlink profiles and strong search performance.

Approach Link Building With A User-First Mindset

The search landscape continues to evolve at a breakneck pace and we could see dramatic shifts in how people search within the next five years (or sooner).

However, at this time, search engines like Google still rely on backlinks as part of their ranking algorithms, and you need to cultivate a strong backlink profile to be visible in search.

Furthermore, if you follow the advice in this article as you build out your profile, you’ll acquire backlinks that benefit your site regardless of search algorithms, futureproofing your traffic sources.

Approach link acquisition like you would any other marketing endeavor – with a customer-first mindset – and over time, you’ll naturally build a healthy, diverse backlink profile.

Featured Image: Sammby/Shutterstock

Google On Traffic Diversity As A Ranking Factor

Google’s SearchLiaison tweeted encouragement to diversify traffic sources, being clear about the reason he was recommending it. Days later, someone followed up to ask if traffic diversity is a ranking factor, prompting SearchLiaison to reiterate that it is not.

What Was Said

The question of whether traffic diversity is a ranking factor arose from a previous tweet in a discussion about whether a site owner should be focusing on off-site promotion.

Here’s the question from the original discussion that was tweeted:

“Can you please tell me if I’m doing right by focusing on my site and content – writing new articles to be found through search – or if I should be focusing on some off-site effort related to building a readership? It’s frustrating to see traffic go down the more effort I put in.”

SearchLiaison split the question into its component parts and answered each one. When it came to the part about off-site promotion, SearchLiaison (Danny Sullivan) drew on his decades of experience as a journalist and publisher covering technology and search marketing.

I’m going to break down his answer so that it’s clearer what he meant.

This is the part from the tweet that talks about off-site activities:

“As to the off-site effort question, I think from what I know from before I worked at Google Search, as well as my time being part of the search ranking team, is that one of the ways to be successful with Google Search is to think beyond it.”

What he is saying here is simple: don’t limit your thinking about what to do with your site to how to make it appeal to Google.

He next explains that sites that rank tend to be sites that are created to appeal to people.

SearchLiaison continued:

“Great sites with content that people like receive traffic in many ways. People go to them directly. They come via email referrals. They arrive via links from other sites. They get social media mentions.”

What he’s saying there is that you’ll know you’re appealing to people if they’re discussing your site on social media, if they’re referring others to it, and if other sites are citing it with links.

Other signs that a site is doing well are when people engage in the comments section, send emails asking follow-up questions, and send thank-you emails sharing anecdotes of their success or satisfaction with a product or advice.

Consider this: fast-fashion site Shein at one point didn’t rank for its chosen keyword phrases; I know because I checked out of curiosity. But at the time it was virally popular and making huge amounts of sales by gamifying site interaction and engagement, which propelled it to become a global brand. A similar strategy propelled Zappos when it pioneered no-questions-asked returns and cheerful customer service.

SearchLiaison continued:

“It just means you’re likely building a normal site in the sense that it’s not just intended for Google but instead for people. And that’s what our ranking systems are trying to reward, good content made for people.”

SearchLiaison explicitly said that having diversified traffic sources is not a ranking factor.

He added this caveat to his tweet:

“This doesn’t mean you should get a bunch of social mentions, or a bunch of email mentions because these will somehow magically rank you better in Google (they don’t, from how I know things).”

Despite The Caveat…

A journalist tweeted this:

“Earlier this week, @searchliaison told people to diversify their traffic. Naturally, people started questioning whether that meant diversity of traffic was a ranking factor.

So, I asked @iPullRank what he thought.”

SearchLiaison of course answered that he explicitly said it’s not a ranking factor and linked to his original tweet that I quoted above.

He tweeted:

“I mean that’s not exactly what I myself said, but rather repeat all that I’ll just add the link to what I did say:”

The journalist responded:

“I would say this is calling for publishers to diversify their traffic since you’re saying the great sites do it. It’s the right advice to give.”

And SearchLiaison answered:

“It’s the part of “does it matter for rankings” that I was making clear wasn’t what I myself said. Yes, I think that’s a generally good thing, but it’s not the only thing or the magic thing.”

Not Everything Is About Ranking Factors

There is a longstanding practice among some SEOs of parsing everything Google publishes for clues to how its algorithm works. This happened with the Search Quality Rater Guidelines. Google is unintentionally complicit because its policy is, in general, not to confirm whether something is a ranking factor.

This habit of searching for “ranking factors” leads to misinformation. Reading research papers and patents to gain a general understanding of how information retrieval works takes more acuity and more effort than skimming a PDF for ranking factors.

The worst approach to understanding search is to invent hypotheses about how Google works and then pore through a document to confirm those guesses, falling into the confirmation bias trap.

In the end, it may be more helpful to stop optimizing exclusively for Google and to focus at least as much on optimizing for people (which includes optimizing for traffic). I know it works because I’ve been doing it for years.

Featured Image by Shutterstock/Asier Romero

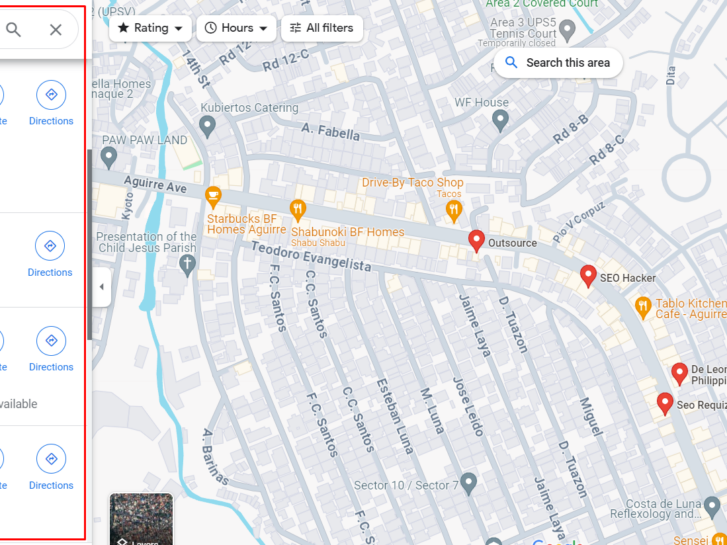

The Complete Guide to Google My Business for Local SEO

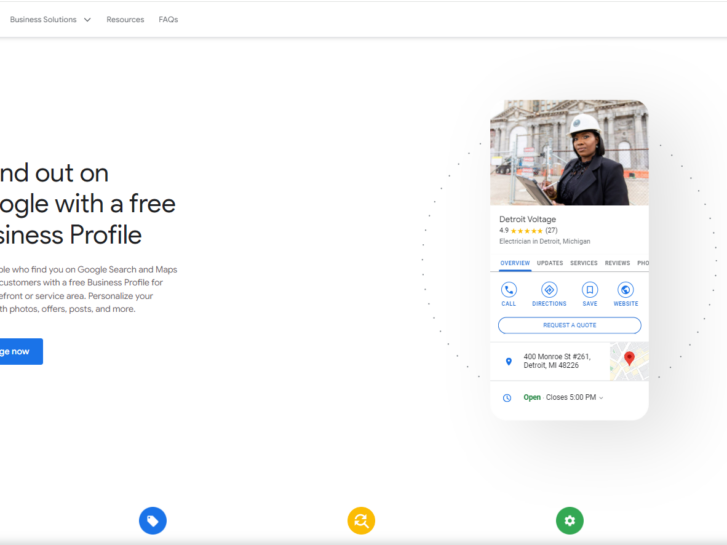

What is Google My Business?

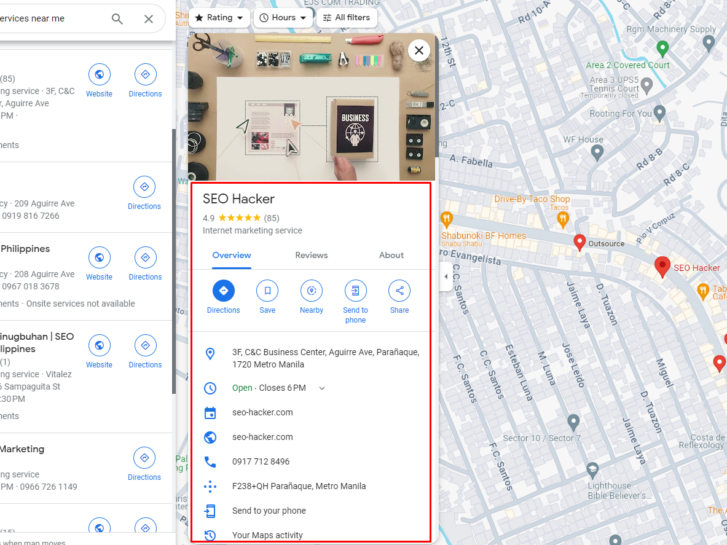

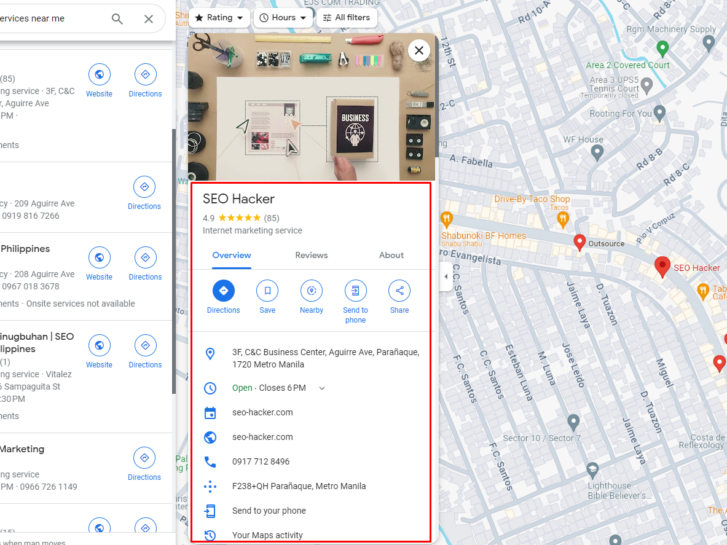

Google My Business (GMB) is a free tool that business owners can use to manage their online presence across Google Search and Google Maps.

The profile also displays important business details, such as address, phone number, and operating hours, making them easily accessible to potential customers.

When you click on a business listing in the search results, it opens a detailed sidebar on the right side of the screen with comprehensive information about the business.

This includes popular times, which show when the business is busiest, a Q&A section where potential users can ask questions and receive responses from the business or other customers, and a photos and videos section that showcases products and services. Customer reviews and ratings are also displayed, which are crucial for building trust and credibility.

Using Google My Business for Local SEO

Having an optimized Google Business Profile ensures that your business is visible, searchable, and can attract potential customers who are looking for your products and services.

- Increased reliance on online discovery: More consumers are going online to search and find local businesses, making it crucial to have a GMB listing.

- Be where your customers are searching: GMB ensures your business information is accurate and visible on Google Search and Maps, helping you stay competitive.

- Connect with customers digitally: GMB allows customers to connect with your business through various channels, including messaging and reviews.

- Build your online reputation: GMB makes it easy for customers to leave reviews, which can improve your credibility and trustworthiness.

- Location targeting: GMB enables location-based targeting, showing your ads to people searching for businesses in your exact location.

- Measurable results: GMB provides actionable analytics, allowing you to track your performance and optimize your listing.

How to Set Up Google My Business

If you already have a profile and need help claiming, verifying, and/or optimizing it, skip to the next sections.

If you’re creating a new Google My Business profile, here’s a step-by-step guide:

Step 1: Access or Create Your Google Account

If you don’t already have a Google account, follow these steps to create one:

- Visit the Google Account Sign-up Page: Go to the Google Account sign-up page and click on “Create an account.”

- Enter Your Information: Fill in the required fields, including your name, email address, and password.

- Verify Your Account: Google will send a verification email to your email address. Click on the link in the email to confirm your account.

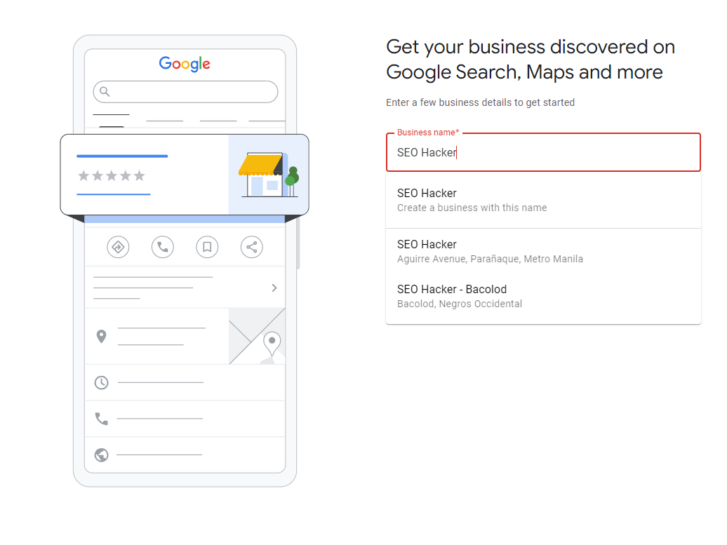

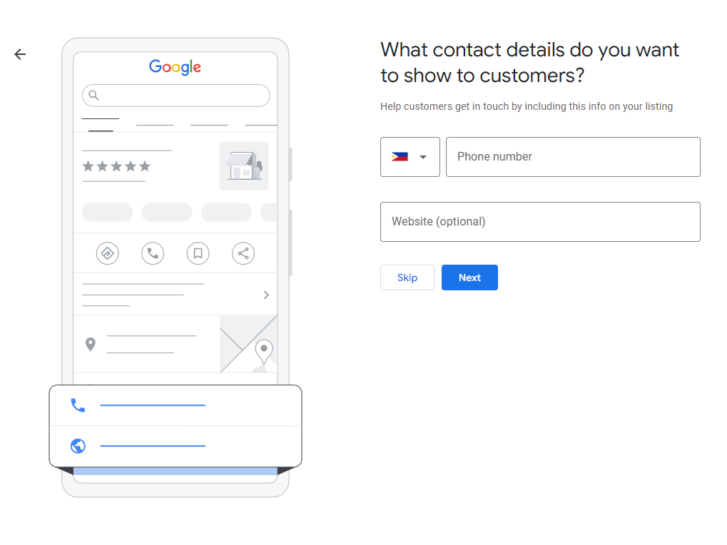

Step 2: Access Google My Business

Go to the Google My Business website and sign in with your Google account.

Step 3: Enter Your Business Name and Category

- Type in your exact business name. Google will suggest existing businesses as you type.

- If your business is not listed, type out its full name exactly as your business uses it.

- Search for and select your primary business category.

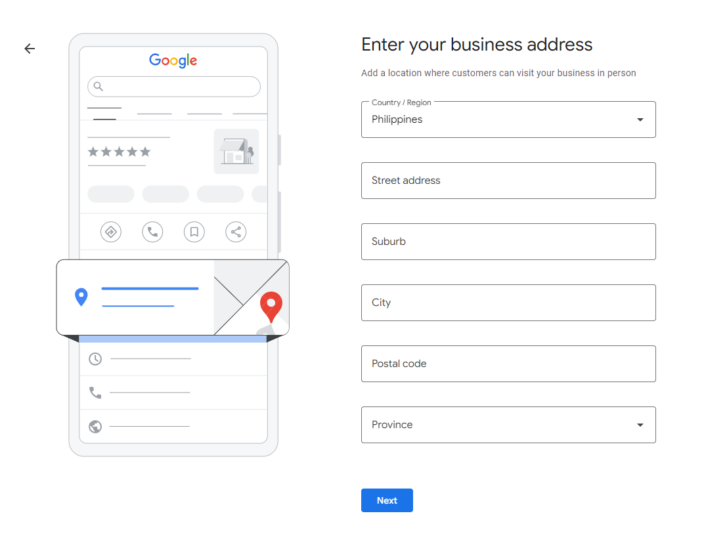

Step 4: Provide Your Business Address

- If you have a physical location where customers can visit, select “Yes” and enter your address.

- If you are a service area business without a physical location, select “No” and enter your service area.

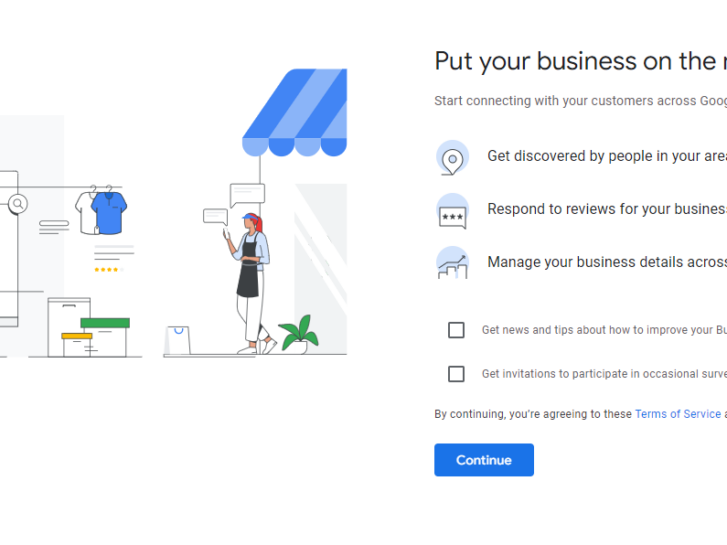

Step 5: Add Your Contact Information

- Enter your business phone number and website URL.

- You can also create a free website based on your GMB information.

Step 6: Complete Your Profile

To complete your profile, add the following details:

- Hours of Operation: Enter your business’s operating hours to help customers plan their visits.

- Services: List the services your business offers to help customers understand what you do.

- Description: Write a detailed description of your business to help customers understand your offerings.

Now that you know how to set up your Google My Business account, all that’s left is to verify it.

Verification is essential for you to manage and update business information whenever you need to, and for Google to show your business profile to the right users and for the right search queries.

If you want to claim your business or are on the last step of setting up your GMB profile, this section walks you through the verification process to solidify your business’s online credibility and visibility.

How to Verify Google My Business

There are several ways you can verify your business, including:

- Postcard Verification: Google will send a postcard to your business address with a verification code. Enter the code on your GMB dashboard to verify.

- Phone Verification: Google will call your business phone number and provide a verification code. Enter the code on your GMB dashboard to verify.

- Email Verification: If you have a business email address, you can use it to verify your listing.

- Instant Verification: If you’ve already verified your business’s website in Google Search Console, you may be able to verify your listing instantly.

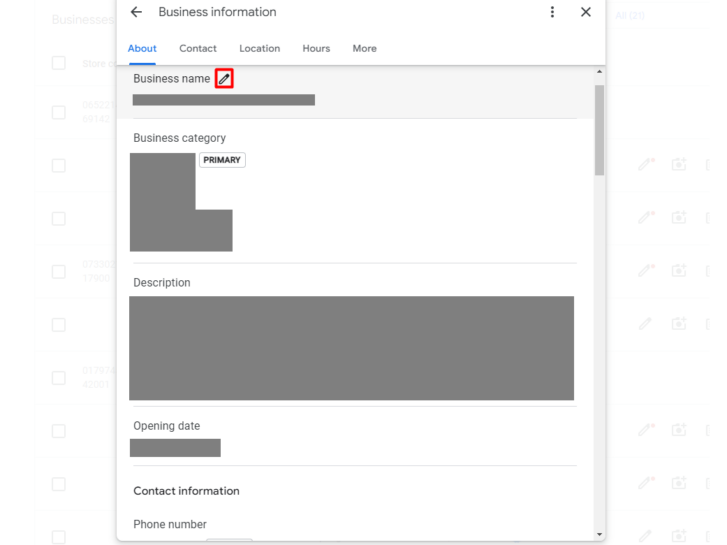

How to Claim & Verify an Existing Google My Business Profile

If your business already has a Google My Business profile that you want to claim, follow these steps:

Step 1: Sign in to Google My Business

Access Google My Business: Go to the Google My Business website and sign in with your Google account. If you don’t have a Google account, create one by following the sign-up process.

Step 2: Search for Your Business

Enter your business name in the search bar to find your listing. If your business is already listed, you will see it in the search results.

Step 3: Claim Your Listing

If your business is not already claimed, you will see a “Claim this business” button. Click on this button to start the claiming process.

Step 4: Complete Your Profile

Once you’ve claimed your listing, you can complete your profile by adding essential business information such as:

- Business Name: Ensure it exactly matches the name your business uses in the real world.

- Address: Enter your business address accurately.

- Phone Number: Enter your business phone number.

- Hours of Operation: Specify your business hours.

- Categories: Choose relevant categories that describe your business.

- Description: Write a brief description of your business.

Step 5: Manage Your Listing

Regularly check and update your listing to ensure it remains accurate and up to date. Respond to customer reviews and use the performance insights in your Business Profile to improve your business.

Step 6: Verification

Verify your business by postcard, email, or phone, as described above.

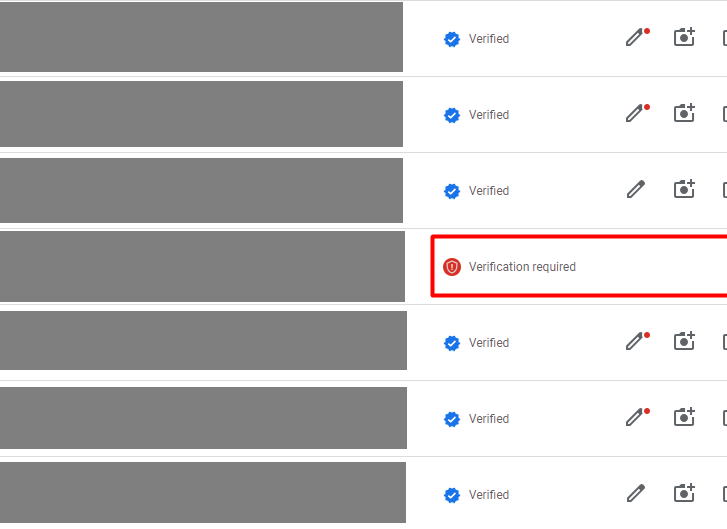

Now that you have successfully set up and verified your Google My Business listing, it’s time to optimize it for maximum visibility and effectiveness. By doing this, you can improve your local search rankings, increase customer engagement, and drive more conversions.

How to Optimize Google My Business

Here are the tips I usually follow when optimizing a GMB account:

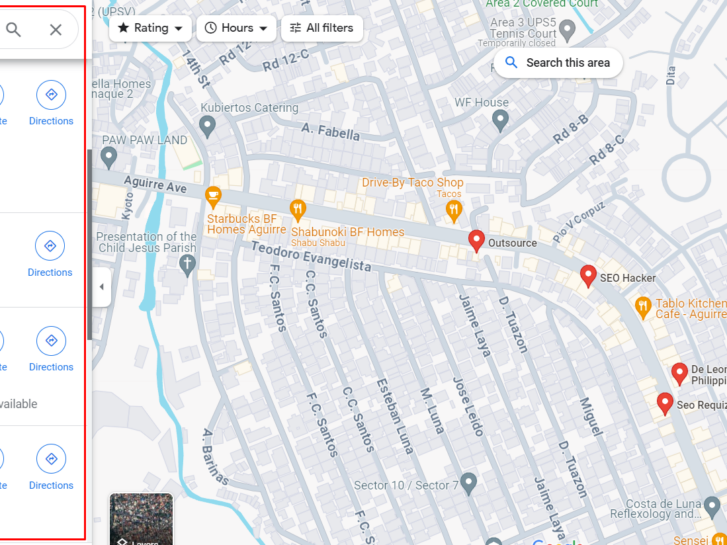

- Complete Your Profile: Start by ensuring every section applicable to your business is filled out with accurate and up-to-date information. Use your real business name without keyword stuffing to avoid suspension. Ensure your address and phone number are consistent with those on your website and other online directories, and add a link to your website and social media accounts.

- Optimize for Keywords: Integrate relevant keywords into your business description, services, and posts. However, avoid stuffing your GMB profile with keywords, as this can appear spammy and reduce readability.

- Add Backlinks: Encourage local websites, blogs, and business directories to link to your GMB profile.

- Select Appropriate Categories: Choose the most relevant primary category for your business to help Google understand what your business is about. Additionally, add secondary categories that accurately describe your business’s offerings to capture more relevant search traffic.

- Encourage and Manage Reviews: Ask satisfied customers to leave positive reviews on your profile, as reviews significantly influence potential customers. Respond to all reviews, both positive and negative, in a professional and timely manner. Addressing negative feedback shows that you value customer opinions and are willing to improve.

- Add High-Quality Photos and Videos: Use high-quality images for your profile and cover photos that represent your business well. Upload additional photos of your products, services, team, and premises. Adding short, engaging videos can give potential customers a virtual tour or highlight key services, enhancing their interest.

By following this comprehensive guide, you have successfully set up, verified, and optimized your GMB profile. Remember to continuously maintain and update your profile to ensure maximum impact and success.

Key Takeaway:

With more and more people turning to Google for all their needs, creating, verifying, and optimizing your Google My Business profile is a must if you want your business to be found.

Follow this guide to Google My Business, and you’re going to see increased online presence across Google Search and Google Maps in no time.
