Facebook will reconsider Trump’s ban in two years

The clock is ticking on former President Donald Trump’s ban from Facebook, formerly indefinite and now for a period of two years, the maximum penalty under a newly revealed set of rules for suspending public figures. But when the time comes, the company will reevaluate the ban and make a decision then whether to end or extend it, rendering it indefinitely definite.

The ban of Trump in January was controversial in different ways to different groups, but the issue on which Facebook’s Oversight Board stuck as it chewed over the decision was that there was nothing in the company’s rules that supported an indefinite ban. Either remove him permanently, they said, or else put a definite limit to the suspension.

Facebook has chosen… neither, really. The two-year limit on the ban (backdated to January) is largely decorative, since the option to extend it is entirely Facebook’s prerogative, as VP of public affairs Nick Clegg writes:

At the end of this period, we will look to experts to assess whether the risk to public safety has receded. We will evaluate external factors, including instances of violence, restrictions on peaceful assembly and other markers of civil unrest. If we determine that there is still a serious risk to public safety, we will extend the restriction for a set period of time and continue to re-evaluate until that risk has receded.

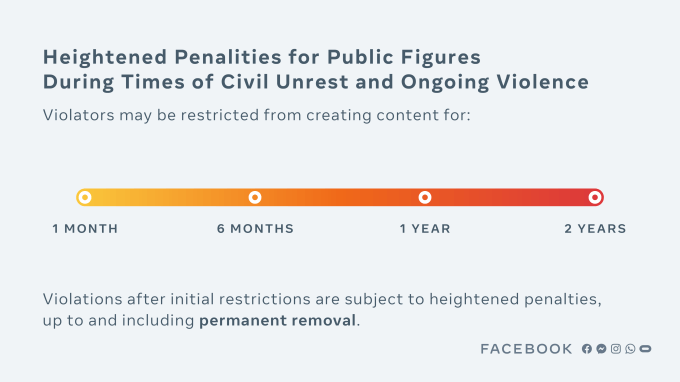

When the suspension is eventually lifted, there will be a strict set of rapidly escalating sanctions that will be triggered if Mr. Trump commits further violations in future, up to and including permanent removal of his pages and accounts.

It sort of fulfills the recommendation of the Oversight Board, but truthfully Trump’s position is no less precarious than before. A ban that can be rescinded or extended whenever the company chooses is certainly “indefinite.”

In a statement, Trump called the ruling “an insult.”

That said, the Facebook decision here does reach beyond the Trump situation. Essentially the Oversight Board suggested they need a rule that defines how they act in situations like Trump’s, so they’ve created a standard… of sorts.

This highly specific “enforcement protocol” is sort of like a visual representation of Facebook saying “we take this very seriously.” While it gives the impression of some kind of sentencing guidelines by which public figures will systematically be given an appropriate ban length, every aspect of the process is arbitrarily decided by Facebook.

What circumstances justify the use of these “heightened penalties”? What kind of violations qualify for bans? How is the severity decided? Who picks the duration of the ban? When that duration expires, can it simply be extended if “there is still a serious risk to public safety”? What are the “rapidly escalating sanctions” these public figures will face post-suspension? Are there time limits on making decisions? Will they be deliberated publicly?

It’s not that we must assume Facebook will be inconsistent or self-deal or make bad decisions on any of these questions and the many more that come to mind, exactly (though that is a real risk), but that this neither adds nor exposes any machinery of the Facebook moderation process during moments of crisis when we most need to see it working.

Despite the new official-looking punishment gradient and re-re-reiterated promise to be transparent, everything involved in what Facebook proposes seems just as obscure and arbitrary as the decision that led to Trump’s ban.

“We know that any penalty we apply — or choose not to apply — will be controversial,” writes Clegg. True, but while some people will be happy with some decisions and others angry, all are united in their desire to have the processes that lead to said penalties elucidated and adhered to. Today’s policy changes do not appear to accomplish that, regarding Trump or anyone else.

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as the last time. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully, the issues will be resolved soon by the Facebook team.

Facebook had another problem today (March 20, 2024). According to Downdetector, a website that shows when other websites are not working, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, there was another problem that stopped people from using the site. Today, when people tried to use Facebook, it didn’t work like it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Since Facebook owns Messenger and Instagram, the problems with Facebook also meant that people had trouble using these apps. It made the situation even more frustrating for many users, who rely on these apps to stay connected with others.

During this recent problem, one thing is obvious: the internet is always changing, and even big websites like Facebook can have problems. While people wait for Facebook to fix the issue, it shows us how easily things online can go wrong. It’s a good reminder that we should have backup plans for staying connected online, just in case something like this happens again.

Christian family goes into hiding after being cleared of blasphemy

LAHORE, Pakistan — A court in Pakistan granted bail to a Christian falsely charged with blasphemy, but he and his family have separated and gone into hiding amid threats to their lives, sources said.

Haroon Shahzad, 45, was released from Sargodha District Jail on Nov. 15, said his attorney, Aneeqa Maria. Shahzad was charged with blasphemy on June 30 after posting Bible verses on Facebook that infuriated Muslims, causing dozens of Christian families in Chak 49 Shumaali, near Sargodha in Punjab Province, to flee their homes.

Lahore High Court Judge Ali Baqir Najfi granted bail on Nov. 6, but the decision and his release on Nov. 15 were not made public until now due to security fears for his life, Maria said.

Shahzad told Morning Star News by telephone from an undisclosed location that the false accusation has changed his family’s lives forever.

“My family has been on the run from the time I was implicated in this false charge and arrested by the police under mob pressure,” Shahzad told Morning Star News. “My eldest daughter had just started her second year in college, but it’s been more than four months now that she hasn’t been able to return to her institution. My other children are also unable to resume their education as my family is compelled to change their location after 15-20 days as a security precaution.”

Though he was not tortured during incarceration, he said, the pain of being away from his family and thinking about their well-being and safety gave him countless sleepless nights.

“All of this is due to the fact that the complainant, Imran Ladhar, has widely shared my photo on social media and declared me liable for death for alleged blasphemy,” he said in a choked voice. “As soon as Ladhar heard about my bail, he and his accomplices started gathering people in the village and incited them against me and my family. He’s trying his best to ensure that we are never able to go back to the village.”

Shahzad has met with his family only once since his release on bail, and they are unable to return to their village in the foreseeable future, he said.

“We are not together,” he told Morning Star News. “They are living at a relative’s house while I’m taking refuge elsewhere. I don’t know when this agonizing situation will come to an end.”

The Christian said the complainant, said to be a member of Islamist extremist party Tehreek-e-Labbaik Pakistan and also allegedly connected with banned terrorist group Lashkar-e-Jhangvi, filed the charge because of a grudge. Shahzad said he and his family had obtained valuable government land and allotted it for construction of a church building, and Ladhar and others had filed multiple cases against the allotment and lost all of them after a four-year legal battle.

“Another probable reason for Ladhar’s jealousy could be that we were financially better off than most Christian families of the village,” he said. “I was running a successful paint business in Sargodha city, but that too has shut down due to this case.”

Regarding the social media post, Shahzad said he had no intention of hurting Muslim sentiments by sharing the biblical verse on his Facebook page.

“I posted the verse a week before Eid Al Adha [Feast of the Sacrifice] but I had no idea that it would be used to target me and my family,” he said. “In fact, when I came to know that Ladhar was provoking the villagers against me, I deleted the post and decided to meet the village elders to explain my position.”

The village elders were already influenced by Ladhar and refused to listen to him, Shahzad said.

“I was left with no option but to flee the village when I heard that Ladhar was amassing a mob to attack me,” he said.

Shahzad pleaded with government authorities for justice, saying he should not be punished for sharing a verse from the Bible that in no way constituted blasphemy.

Similar to other cases

Shahzad’s attorney, Maria, told Morning Star News that events in Shahzad’s case were similar to other blasphemy cases filed against Christians.

“Defective investigation, mala fide on the part of the police and complainant, violent protests against the accused persons and threats to them and their families, forcing their displacement from their ancestral areas, have become hallmarks of all blasphemy allegations in Pakistan,” said Maria, head of The Voice Society, a Christian paralegal organization.

She said that the case filed against Shahzad was a gross violation of Section 196 of the Criminal Procedure Code (CrPC), which states that police cannot register a case under the Section 295-A blasphemy statute against a private citizen without the approval of the provincial government or federal agencies.

Maria added that Shahzad and his family have continued to suffer even though there was no evidence of blasphemy.

“The social stigma attached with a blasphemy accusation will likely have a long-lasting impact on their lives, whereas his accuser, Imran Ladhar, would not have to face any consequence of his false accusation,” she said.

The judge who granted bail noted that Shahzad was charged with blasphemy under Section 295-A, which is a non-cognizable offense, and Section 298, which is bailable. The judge also noted that police had not submitted the forensic report of Shahzad’s cell phone and said evidence was required to prove that the social media post was blasphemous, according to Maria.

Bail was set at 100,000 Pakistani rupees (US $350) and two personal sureties, and the judge ordered police to further investigate, she said.

Shahzad, a paint contractor, on June 29 posted on his Facebook page 1 Cor. 10:18-21 regarding food sacrificed to idols, as Muslims were beginning the four-day festival of Eid al-Adha, which involves slaughtering an animal and sharing the meat.

A Muslim villager took a screenshot of the post, sent it to local social media groups and accused Shahzad of likening Muslims to pagans and disrespecting the Abrahamic tradition of animal sacrifice.

Though Shahzad made no comment in the post, inflammatory or otherwise, the situation became tense after Friday prayers when announcements were made from mosque loudspeakers telling people to gather for a protest, family sources previously told Morning Star News.

Fearing violence as mobs grew in the village, most Christian families fled their homes, leaving everything behind.

In a bid to restore order, the police registered a case against Shahzad under Sections 295-A and 298. Section 295-A relates to “deliberate and malicious acts intended to outrage religious feelings of any class by insulting its religion or religious beliefs” and is punishable with imprisonment of up to 10 years, a fine, or both. Section 298 prescribes up to one year in prison, a fine, or both, for hurting religious sentiments.

Pakistan ranked seventh on Open Doors’ 2023 World Watch List of the most difficult places to be a Christian, up from eighth the previous year.

Morning Star News is the only independent news service focusing exclusively on the persecution of Christians. The nonprofit’s mission is to provide complete, reliable, even-handed news in order to empower those in the free world to help persecuted Christians, and to encourage persecuted Christians by informing them that they are not alone in their suffering.

