SEO

The Ranking Factors & The Myths We Found

Celebrate the Holidays with some of SEJ’s best articles of 2023.

Our Festive Flashback series runs from December 21 – January 5, featuring daily reads on significant events, fundamentals, actionable strategies, and thought leader opinions.

2023 has been quite eventful in the SEO industry, and our contributors produced some outstanding articles to keep pace with and reflect these changes.

Catch up on the best reads of 2023 to give you plenty to reflect on as you move into 2024.

Yandex is the search engine with the majority of market share in Russia and the fourth-largest search engine in the world.

On January 27, 2023, it suffered what is arguably one of the largest data leaks a modern tech company has endured in many years, and its second leak in less than a decade.

In 2015, a former Yandex employee attempted to sell Yandex’s search engine code on the black market for around $30,000.

The initial leak in January this year revealed 1,922 ranking factors, of which more than 64% were listed as unused or deprecated (superseded and best avoided).

This leak was just the file labeled "kernel", but as the SEO community and I delved deeper, we found more files that together contain approximately 17,800 ranking factors.

When it comes to practicing SEO for Yandex, the guide I wrote two years ago, for the most part, still applies.

Yandex, like Google, has always been public about its algorithm updates and changes and, in recent years, about how it has adopted machine learning.

Notable updates from the past two to three years include:

- Vega (which doubled the size of the index).

- Mimicry (penalizing fake websites impersonating brands).

- Y1 update (introducing YATI).

- Y2 update (late 2022).

- Adoption of IndexNow.

- A fresh rollout and assumed update of the PF filter.

On a personal note, this data leak is like a second Christmas.

Since January 2020, I’ve run a hobby SEO news website dedicated to covering Yandex SEO and search news in Russia, with 600+ articles, so this is probably the peak event for the site.

I’ve also spoken twice at the Optimization conference – the largest SEO conference in Russia.

This is also a good test to see how closely Yandex’s public statements match the codebase secrets.

In 2019, working with Yandex’s PR team, I was able to interview engineers in their Search team and ask a number of questions sourced from the wider Western SEO community.

You can read the interview with the Yandex Search team here.

Whilst Yandex is primarily known for its presence in Russia, the search engine also has a presence in Turkey, Kazakhstan, and Georgia.

The data leak is believed to have been politically motivated and the act of a rogue employee. It contains a number of code fragments from Yandex’s monolithic repository, Arcadia.

Within the 44GB of leaked data, there’s information relating to a number of Yandex products, including Search, Maps, Mail, Metrika, Disk, and Cloud.

What Yandex Has Had To Say

As I write this post (January 31st, 2023), Yandex has publicly stated that:

the contents of the archive (leaked code base) correspond to the outdated version of the repository – it differs from the current version used by our services

And:

It is important to note that the published code fragments also contain test algorithms that were used only within Yandex to verify the correct operation of the services.

So, how much of this code base is actively used is questionable.

Yandex has also revealed that during its investigation and audit, it found a number of errors that violate its own internal principles, so it is likely that portions of this leaked code (that are in current use) may be changing in the near future.

Factor Classification

Yandex classifies its ranking factors into three categories.

This has been outlined in Yandex’s public documentation for some time, but I feel it is worth including here, as it helps us better understand the ranking factor leak.

- Static factors – Factors that are related directly to the website (e.g. inbound backlinks, inbound internal links, headers, and ads ratio).

- Dynamic factors – Factors that are related to both the website and the search query (e.g. text relevance, keyword inclusions, TF*IDF).

- User search-related factors – Factors relating to the user query (e.g. where is the user located, query language, and intent modifiers).

The ranking factors in the document are tagged to match the corresponding category, with TG_STATIC and TG_DYNAMIC, and then TG_QUERY_ONLY, TG_QUERY, TG_USER_SEARCH, and TG_USER_SEARCH_ONLY.
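TF*IDF, given above as an example of a dynamic factor, weighs how often a query term appears in a document against how many documents contain it. The Python sketch below is the generic textbook version, not Yandex's implementation; the whitespace tokenizer, the length-normalized term frequency, the `log(N / df)` IDF, and the function name `tf_idf` are all assumptions made for illustration.

```python
import math
from collections import Counter


def tf_idf(query: str, documents: list[str]) -> list[float]:
    """Score each document against the query with a basic TF*IDF sum.

    Illustrative assumptions: lowercase whitespace tokenization,
    term frequency normalized by document length, and idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: the number of documents containing each term at least once.
    df = Counter(term for doc in tokenized for term in set(doc))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if df[term] == 0 or not tokens:
                continue  # term absent from the whole collection, or empty document
            tf = counts[term] / len(tokens)
            idf = math.log(n_docs / df[term])
            score += tf * idf
        scores.append(score)
    return scores


docs = ["buy red shoes online", "red apples and green apples", "shoes shop"]
print(tf_idf("red shoes", docs))
```

In this toy example, the first and third documents tie: both query terms share the same IDF, and in each of those documents half the words are query terms.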

Yandex Leak Learnings So Far

From the data thus far, below are some of the affirmations and learnings we’ve been able to make.

There is so much data in this leak that it is very likely we will be finding new things and making new connections over the next few weeks.

These include:

- A form of PageRank.

- At some point, Yandex used TF*IDF.

- Yandex still uses meta keywords, which are also highlighted in its documentation.

- Yandex has specific factors for medical, legal, and financial topics (YMYL).

- It also uses a form of page quality scoring, though this was already known (the ICS score).

- Links from high-authority websites have an impact on rankings.

- There’s nothing new to suggest that Yandex can crawl JavaScript beyond its already publicly documented processes.

- Server errors and excessive 4xx errors can impact ranking.

- The time of day is taken into consideration as a ranking factor.
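The leak points to "a form of" PageRank without publishing Yandex's variant. For readers unfamiliar with the idea, this is a minimal Python sketch of classic power-iteration PageRank; the 0.85 damping factor, the fixed iteration count, the even redistribution of rank from pages without outlinks, and the example link graph are conventional or hypothetical choices, not details from the leak.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration (a generic sketch, not Yandex's variant).

    `links` maps each page to the pages it links out to. Pages with no
    outlinks ("dangling" pages) spread their rank evenly across all pages.
    """
    # Collect every page that appears as a source or a target.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Rank held by dangling pages is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            if targets:
                # Each outlink passes an equal share of the source page's rank.
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


site = {"home": ["a", "b"], "a": ["home"], "b": ["home"], "orphan": ["home"]}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph, the page that nothing links to keeps only the baseline `(1 - damping) / n` share, while the heavily linked "home" page accumulates the most rank.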

Below, I’ve expanded on some other affirmations and learnings from the leak.

Where possible, I’ve also tied these leaked ranking factors to the algorithm updates and announcements that relate to them, or where we were told about them being impactful.

MatrixNet

MatrixNet, which is mentioned in a few of the ranking factors, was announced in 2009 and superseded in 2017 by CatBoost, which was rolled out across the Yandex product sphere.

This further supports comments made directly by Yandex, and by one of the factor authors, DenPlusPlus (Den Raskovalov), that this is, in fact, an outdated code repository.

MatrixNet was originally introduced as a new, core algorithm that took into consideration thousands of ranking factors and assigned weights based on the user location, the actual search query, and perceived search intent.

It is often seen as an early version of Google’s RankBrain, but the two are very different systems. MatrixNet was launched six years before RankBrain was announced.

MatrixNet has also been built upon, which isn’t surprising, given it is now 14 years old.

In 2016, Yandex introduced the Palekh algorithm, which used deep neural networks to better match documents (webpages) and queries, even when the documents didn’t contain the right “levels” of common keywords but did satisfy the user’s intent.

Palekh could process 150 pages at a time, and in 2017 it was updated with the Korolyov update, which took more of each page’s content into account and could work on 200,000 pages at once.

URL & Page-Level Factors

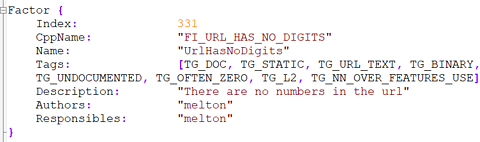

From the leak, we have learned that Yandex takes into consideration URL construction, specifically:

- The presence of numbers in the URL.

- The number of trailing slashes in the URL (and if they are excessive).

- The number of capital letters in the URL.
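The leaked descriptions name these URL signals but not how they are computed or weighted. A minimal Python sketch of extracting comparable counts might look like this; the feature names, ignoring the hostname, and treating any run of two or more consecutive slashes as "excessive" are assumptions for illustration.

```python
import re
from urllib.parse import urlsplit


def url_features(url: str) -> dict[str, int]:
    """Count simple URL-construction signals like those named in the leak.

    Hypothetical feature definitions: the hostname is ignored (it is
    case-insensitive), digits and capitals are counted in the path and
    query string, and slash counts use the path only.
    """
    parts = urlsplit(url)
    path = parts.path
    path_and_query = path + (("?" + parts.query) if parts.query else "")
    return {
        "digits": sum(ch.isdigit() for ch in path_and_query),
        "capitals": sum(ch.isupper() for ch in path_and_query),
        "slashes": path.count("/"),
        "trailing_slashes": len(path) - len(path.rstrip("/")),
        # Each run such as "//" or "///" counts as one excessive-slash occurrence.
        "excess_slash_runs": len(re.findall(r"/{2,}", path)),
    }


print(url_features("https://example.com/Blog//Post-2023/"))
```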

The age of a page (document age) and the last updated date are also important, and this makes sense.

As well as document age and last update, a number of factors in the data relate to freshness – particularly for news-related queries.

Yandex formerly used timestamps, not for ranking purposes but specifically for “reordering” purposes; this factor is now classified as unused.

Also in the deprecated column is the use of keywords in the URL. Yandex had previously measured that three keywords from the search query in the URL would be an “optimal” result.

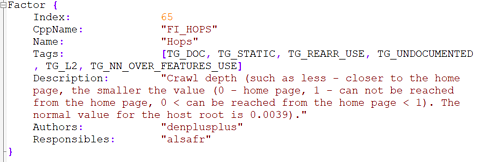

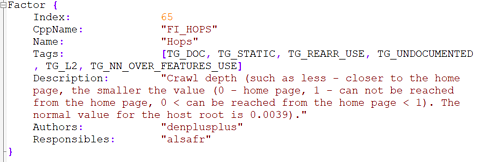

Internal Links & Crawl Depth

Whilst Google has gone on the record to say that for its purposes, crawl depth isn’t explicitly a ranking factor, Yandex appears to have an active piece of code that dictates that URLs that are reachable from the homepage have a “higher” level of importance.

Screenshot from author, January 2023


This mirrors John Mueller’s 2018 statement that Google gives “a little bit of weight” to pages found one click from the homepage.

The ranking factors also highlight a specific token weighting for webpages that are “orphans” within the website linking structure.

Clicks & CTR

In 2011, Yandex published a blog post about how the search engine uses clicks as part of its rankings, which also addressed SEO pros’ desire to manipulate the metric for ranking gains.

Specific click factors in the leak look at things like:

- The ratio of the number of clicks on the URL, relative to all clicks on the search.

- The same as above, but broken down by region.

- How often users click on the URL for the search.
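The click factors above are ratios over a click log. As a rough sketch, assuming a hypothetical log in which each row records the query, the clicked URL, and the user's region (this is not Yandex's actual data model), the share of a query's clicks that went to one URL, overall or per region, could be computed like this:

```python
from typing import NamedTuple, Optional


class Click(NamedTuple):
    """One hypothetical click-log row: the query, the clicked URL, and the user's region."""
    query: str
    url: str
    region: str


def click_share(clicks: list[Click], query: str, url: str,
                region: Optional[str] = None) -> float:
    """Share of all clicks on `query` that went to `url`, optionally within one region."""
    relevant = [c for c in clicks
                if c.query == query and (region is None or c.region == region)]
    if not relevant:
        return 0.0  # no clicks recorded for this query (and region)
    hits = sum(1 for c in relevant if c.url == url)
    return hits / len(relevant)


log = [
    Click("buy shoes", "a.ru", "Moscow"),
    Click("buy shoes", "b.ru", "Moscow"),
    Click("buy shoes", "a.ru", "Kazan"),
    Click("buy shoes", "a.ru", "Kazan"),
]
print(click_share(log, "buy shoes", "a.ru"))            # all regions
print(click_share(log, "buy shoes", "a.ru", "Moscow"))  # one region
```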

Manipulating Clicks

Manipulating user behavior, specifically “click-jacking”, is a known tactic in Yandex SEO.

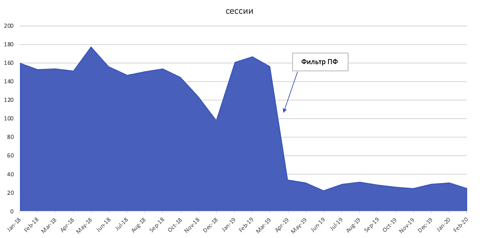

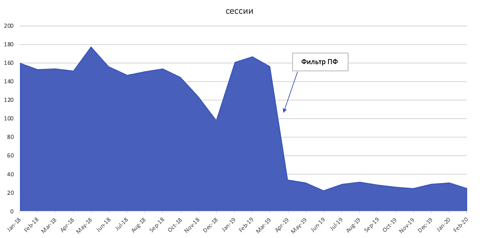

Yandex has a filter, known as the PF filter, that actively seeks out and penalizes websites engaging in this activity. It uses scripts that monitor IP similarities and then the “user actions” of those clicks, and the impact can be significant.

The screenshot below shows the impact on organic sessions (сессии) after a site was penalized for imitating user clicks.

Image from Russian Search News, January 2023

User Behavior

The user behavior takeaways from the leak are some of the more interesting findings.

User behavior manipulation is a common SEO violation that Yandex has been combating for years. At the 2020 Optimization conference, then Head of Yandex Webmaster Tools Mikhail Slevinsky said the company is making good progress in detecting and penalizing this type of behavior.

Yandex penalizes user behavior manipulation with the same PF filter used to combat CTR manipulation.

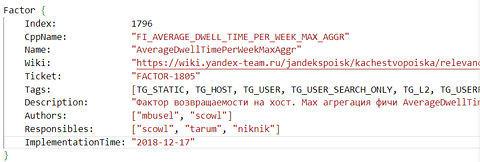

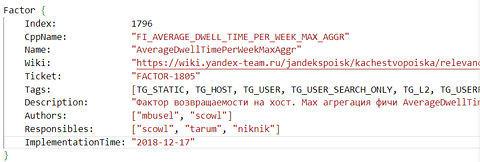

Dwell Time

In total, 102 of the ranking factors carry the tag TG_USERFEAT_SEARCH_DWELL_TIME and reference the device, user duration, and average page dwell time.

All but 39 of these factors are deprecated.

Screenshot from author, January 2023


Bing first used the term “dwell time” in a 2011 blog post, and in recent years Google has made it clear that it doesn’t use dwell time (or similar user interaction signals) as a ranking factor.
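The dwell-time factors reference the device and average dwell time, but the leak does not show how Yandex measures dwell time. Assuming a hypothetical list of (device, seconds) pairs, a per-device average is a simple aggregation:

```python
from collections import defaultdict
from statistics import mean


def average_dwell_by_device(visits: list[tuple[str, float]]) -> dict[str, float]:
    """Average dwell time in seconds per device type.

    `visits` is a hypothetical list of (device, dwell_seconds) pairs,
    e.g. ("mobile", 42.0); it is not a format taken from the leak.
    """
    by_device: dict[str, list[float]] = defaultdict(list)
    for device, seconds in visits:
        by_device[device].append(seconds)
    return {device: mean(times) for device, times in by_device.items()}


print(average_dwell_by_device([("mobile", 30.0), ("mobile", 50.0), ("desktop", 120.0)]))
```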

YMYL

YMYL (Your Money or Your Life) is a concept well known from Google, and it is not a new concept to Yandex.

The data leak contains specific ranking factors for medical, legal, and financial content, but their existence was already revealed in 2019 at the Yandex Webmaster conference, when Yandex announced the Proxima Search Quality Metric.

Metrika Data Usage

Six of the ranking factors relate to the usage of Metrika data for the purposes of ranking. However, one of them is tagged as deprecated:

- The number of similar visitors from the YandexBar (YaBar/Ябар).

- The average time spent on URLs from those same similar visitors.

- The “core audience” of pages on which there is a Metrika counter [deprecated].

- The average time a user spends on a host when accessed externally (from another non-search site) from a specific URL.

- Average ‘depth’ (number of hits within the host) of a user’s stay on the host when accessed externally (from another non-search site) from a particular URL.

- Whether or not the domain has Metrika installed.

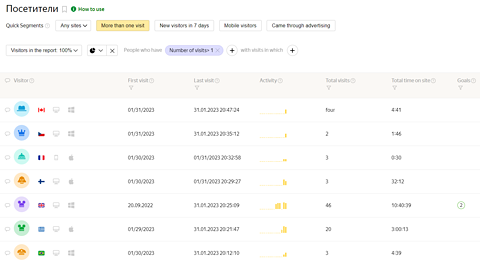

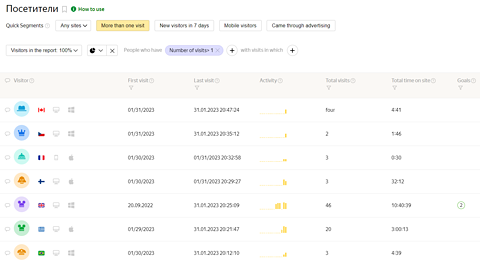

In Metrika, user data is handled differently.

Unlike Google Analytics, Metrika has a number of reports focused on user “loyalty” that combine site engagement metrics with return frequency, time between visits, and visit source.

For example, with one click I can see a report that breaks down individual site visitors:

Screenshot from Metrika, January 2023


Metrika also comes “out of the box” with heatmap tools and user session recording, and in recent years the Metrika team has made good progress in being able to identify and filter bot traffic.

With Google Analytics, there is an argument that Google doesn’t use UA/GA4 data for ranking purposes because the tracking code is so easy to modify or break. Metrika counters, by contrast, are a lot more linear, and many of the reports cannot be changed in terms of how the data is collected.

Impact Of Traffic On Rankings

Following on from Metrika data as a ranking factor, these factors effectively confirm that direct traffic and paid traffic (buying ads via Yandex Direct) can impact organic search performance:

- Share of direct visits among all incoming traffic.

- Green traffic share (aka direct visits) – Desktop.

- Green traffic share (aka direct visits) – Mobile.

- Search traffic – transitions from search engines to the site.

- Share of visits to the site not by links (set by hand or from bookmarks).

- The number of unique visitors.

- Share of traffic from search engines.
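These traffic factors are shares of visits by source. As an illustrative sketch only, with a hypothetical `Visit` record and made-up source labels rather than Metrika's actual data model, the direct ("green") share overall or per device, and the number of unique visitors, could be computed as follows:

```python
from typing import NamedTuple, Optional


class Visit(NamedTuple):
    """Hypothetical visit record; the source and device labels are illustrative."""
    visitor_id: str
    source: str  # e.g. "direct", "search", "ads", "referral"
    device: str  # e.g. "desktop", "mobile"


def source_share(visits: list[Visit], source: str,
                 device: Optional[str] = None) -> float:
    """Fraction of visits (optionally on one device type) that came from `source`."""
    pool = [v for v in visits if device is None or v.device == device]
    if not pool:
        return 0.0
    return sum(v.source == source for v in pool) / len(pool)


def unique_visitors(visits: list[Visit]) -> int:
    """Number of distinct visitor IDs."""
    return len({v.visitor_id for v in visits})


visits = [
    Visit("u1", "direct", "desktop"),
    Visit("u1", "search", "desktop"),
    Visit("u2", "direct", "mobile"),
    Visit("u3", "search", "mobile"),
    Visit("u3", "ads", "mobile"),
]
print(source_share(visits, "direct"))            # direct share across all visits
print(source_share(visits, "direct", "mobile"))  # direct share on mobile only
print(unique_visitors(visits))
```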

News Factors

There are a number of factors relating to “News”, including two that mention Yandex.News directly.

Yandex.News was an equivalent of Google News, but was sold to the Russian social network VKontakte in August 2022, along with another Yandex product “Zen”.

So, it’s not clear whether these factors relate to a product no longer owned or operated by Yandex, or to how news websites are ranked in “regular” search.

Backlink Importance

Like Google, Yandex has algorithms to combat link manipulation, and it has had them since the Nepot filter in 2005.

From reviewing the backlink ranking factors and some of the specifics in the descriptions, we can assume that the best practices for building links for Yandex SEO would be to:

- Build links with a more natural frequency and varying amounts.

- Build links with branded anchor text as well as commercial keywords.

- If buying links, avoid buying links from websites that have mixed topics.

Below is a list of link-related factors that can be considered affirmations of best practices:

- The age of the backlink is a factor.

- Link relevance based on topics.

- Backlinks built from homepages carry more weight than internal pages.

- Links from the top 100 websites by PageRank (PR) can impact rankings.

- Link relevance based on the quality of each link.

- Link relevance, taking into account the quality of each link, and the topic of each link.

- Link relevance, taking into account the non-commercial nature of each link.

- Percentage of inbound links with query words.

- Percentage of query words in links (allowing for synonyms).

- The links contain all the words of the query (allowing for synonyms).

- Dispersion of the number of query words in links.
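Several of these factors compare anchor text with the query. The Python sketch below shows one plausible reading: synonym matching is modeled with a hypothetical synonym map, and "dispersion" is taken to mean the population variance of query-word matches per link. Both interpretations, and the function name, are assumptions rather than definitions from the leak.

```python
from statistics import pvariance
from typing import Optional


def anchor_query_factors(anchors: list[str], query: str,
                         synonyms: Optional[dict[str, str]] = None) -> dict[str, float]:
    """Compare inbound-link anchor texts with a query.

    `synonyms` maps a word to a canonical form (e.g. "sneakers" -> "shoes"),
    so synonyms count as matches. Percentages are on a 0-100 scale.
    """
    synonyms = synonyms or {}

    def normalize(text: str) -> set[str]:
        # Lowercase, split on whitespace, and map each word to its canonical synonym.
        return {synonyms.get(word, word) for word in text.lower().split()}

    query_words = normalize(query)
    if not anchors or not query_words:
        return {"pct_links_with_query_words": 0.0, "pct_query_words_in_links": 0.0,
                "pct_links_with_all_query_words": 0.0, "dispersion": 0.0}

    matches_per_link = []
    covered: set[str] = set()
    for anchor in anchors:
        hits = normalize(anchor) & query_words
        matches_per_link.append(len(hits))
        covered |= hits

    n_links = len(anchors)
    return {
        "pct_links_with_query_words": 100 * sum(m > 0 for m in matches_per_link) / n_links,
        "pct_query_words_in_links": 100 * len(covered) / len(query_words),
        "pct_links_with_all_query_words":
            100 * sum(m == len(query_words) for m in matches_per_link) / n_links,
        # Population variance of how many query words each anchor contains.
        "dispersion": float(pvariance(matches_per_link)),
    }


anchors = ["cheap sneakers", "buy shoes online", "example.com", "cheap shoes"]
print(anchor_query_factors(anchors, "cheap shoes", {"sneakers": "shoes"}))
```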

However, there are some link-related factors that are additional considerations when planning, monitoring, and analyzing backlinks:

- The ratio of “good” versus “bad” backlinks to a website.

- The frequency of links to the site.

- The number of incoming SEO trash links between hosts.

The data leak also revealed that the link spam calculator has around 80 active factors that are taken into consideration, with a number of deprecated factors.

This raises the question of how well Yandex can recognize negative SEO attacks, given that it looks at the ratio of good versus bad links, and of how it determines what a bad link is.

A negative SEO attack is also likely to be a short burst (high frequency) link event in which a site will unwittingly gain a high number of poor quality, non-topical, and potentially over-optimized links.

Yandex uses machine learning models to identify Private Blog Networks (PBNs) and paid links, and it makes the same assumption about link velocity and the time period over which links are acquired.

Typically, paid-for links are generated over a longer period of time, and these patterns (including link origin site analysis) are what the Minusinsk update (2015) was introduced to combat.
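The velocity reasoning above (short bursts for negative SEO, slower accumulation for paid links) can be illustrated with a simple anomaly check on daily new-link counts. This is a hypothetical heuristic, not Yandex's machine learning model; the seven-day window, the 3x multiplier, and the function name are arbitrary assumptions.

```python
from statistics import mean


def link_bursts(daily_new_links: list[int], window: int = 7,
                multiplier: float = 3.0) -> list[int]:
    """Return indices of days whose new-link count far exceeds the trailing average.

    A day is flagged when its count is more than `multiplier` times the mean
    of the previous `window` days. Both thresholds are illustrative assumptions.
    """
    flagged = []
    for day in range(window, len(daily_new_links)):
        baseline = mean(daily_new_links[day - window:day])
        # Floor the baseline at 1 so a handful of links on a previously quiet
        # site is not automatically treated as a burst.
        if daily_new_links[day] > multiplier * max(baseline, 1.0):
            flagged.append(day)
    return flagged


print(link_bursts([2, 3, 2, 1, 2, 3, 2, 40, 3, 2]))  # → [7]
```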

Yandex Penalties

There are two ranking factors, both deprecated, named SpamKarma and Pessimization.

Pessimization refers to reducing PageRank to zero and aligns with the expectations of severe Yandex penalties.

SpamKarma also aligns with assumptions made around Yandex penalizing hosts and individuals, as well as individual domains.

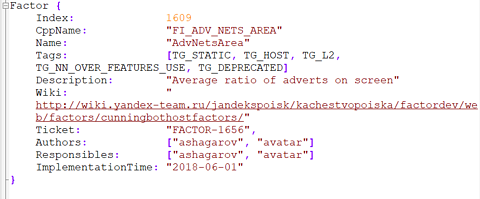

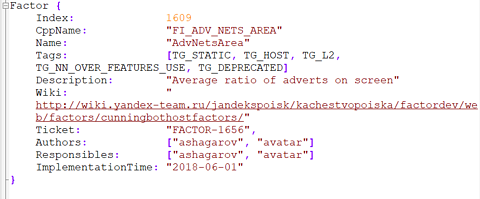

On-Page Advertising

There are a number of factors relating to advertising on the page, some of them deprecated (like the screenshot example below).

Screenshot from author, January 2023


It’s not clear from the description exactly what the thinking behind this factor was, but it could be assumed that a high ratio of ads to visible screen area was a negative factor, much like how Google takes umbrage when ads obscure a page’s main content or are obtrusive.

Tying this back to known Yandex mechanisms, the Proxima update also took into consideration the ratio of useful and advertising content on a page.

Can We Apply Any Yandex Learnings To Google?

Yandex and Google are disparate search engines, with a number of differences, despite the tens of engineers who have worked for both companies.

Because engineers have moved between the two companies, we can infer that some of these master builders and engineers will have built things in a similar fashion (though not direct copies), and applied learnings from previous iterations of their builds at their new employers.

What Russian SEO Pros Are Saying About The Leak

Much like the Western world, SEO professionals in Russia have been having their say on the leak across the various Runet forums.

The reaction in these forums has been different from SEO Twitter and Mastodon, with more focus on Yandex’s filters and on other Yandex products that are optimized as part of wider Yandex optimization campaigns.

It is also worth noting that a number of conclusions and findings from the data match what the Western SEO world is also finding.

Common themes in the Russian search forums:

- Webmasters asking for insights into recent filters, such as Mimicry and the updated PF filter.

- The age and relevance of some of the factors, given that some factor authors no longer work at Yandex and that long-retired Yandex products are mentioned.

- The most interesting learnings are around the use of Metrika data and information relating to the Crawler & Indexer.

- A number of factors outline the use of DSSM, which in theory was superseded by Palekh, a machine learning search algorithm Yandex announced in 2016.

- A debate around ICS scoring in Yandex, and whether or not Yandex may provide more traffic to a site and influence its own factors by doing so.

The leaked factors, particularly around how Yandex evaluates site quality, have also come under scrutiny.

There is a long-standing sentiment in the Russian SEO community that Yandex oftentimes favors its own products and services in search results ahead of other websites, and webmasters are asking questions like:

Why does it bother going to all this trouble, when it just nails its services to the top of the page anyway?

In loosely translated documents, these are referred to as the Sorcerers or Yandex Sorcerers. In Google, we’d call these search engine results page (SERP) features, like Google Hotels.

In October 2022, Kassir (a Russian ticket portal) claimed ₽328m compensation from Yandex due to lost revenue, caused by the “discriminatory conditions” in which Yandex Sorcerers took the customer base away from the private company.

This follows a 2020 class action in which multiple companies brought a case to the Federal Antimonopoly Service (FAS) over Yandex’s anticompetitive promotion of its own services.


Featured Image: FGC/Shutterstock


How To Drive Pipeline With A Silo-Free Strategy

When it comes to B2B strategy, a holistic approach is the only approach.

Revenue organizations usually operate with siloed teams, and often expect a one-size-fits-all solution (usually buying clicks with paid media).

However, without cohesive brand, infrastructure, and pipeline generation efforts, they’re pretty much doomed to fail.

It’s just like rowing crew, where each member of the team must synchronize their movements to propel the boat forward: successful B2B marketing requires an integrated strategy.

So if you’re ready to ditch your disjointed marketing efforts and try a holistic approach, we’ve got you covered.

Join us on May 15 for an insightful live session with Digital Reach Agency on how to craft a compelling brand and product-market fit (PMF).

We’ll walk through the critical infrastructure you need, and the reliances and dependencies between the core digital marketing disciplines.

Key takeaways from this webinar:

- Thinking Beyond Traditional Silos: Learn why traditional marketing silos are no longer viable and how they spell doom for modern revenue organizations.

- How To Identify and Fix Silos: Discover actionable strategies for pinpointing and sealing the gaps in your marketing silos.

- The Power of Integration: Uncover the secrets to successfully integrating brand strategy, digital infrastructure, and pipeline generation efforts.

Ben Childs, President and Founder of Digital Reach Agency, and Jordan Gibson, Head of Growth at Digital Reach Agency, will show you how to seamlessly integrate various elements of your marketing strategy for optimal results.

Don’t make the common mistake of relying on traditional marketing silos. Sign up now and learn what it takes to transform your B2B go-to-market.

You’ll also get the opportunity to ask Ben and Jordan your most pressing questions after the presentation.

And if you can’t make it to the live event, register anyway and we’ll send you a recording shortly after the webinar.


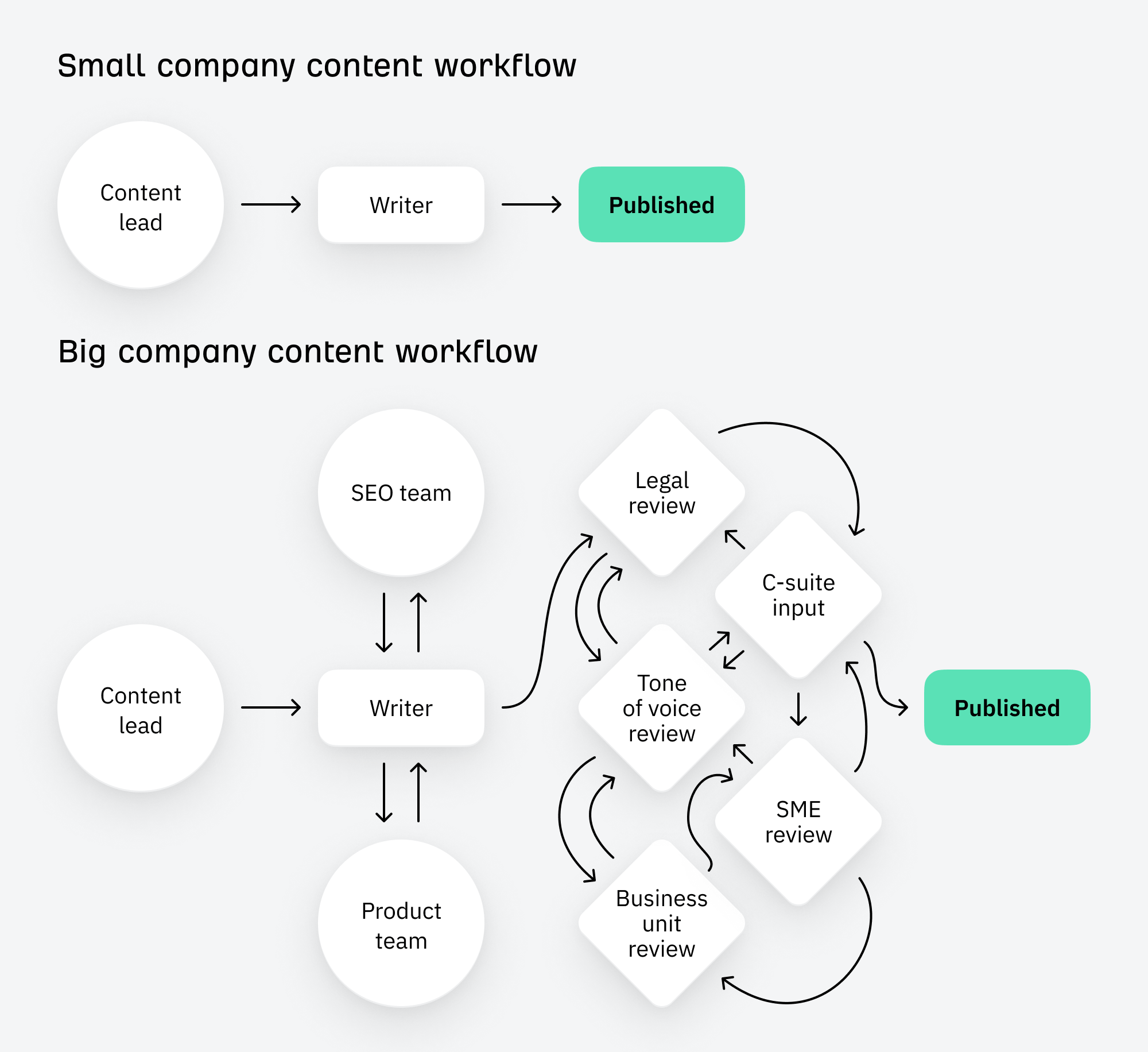

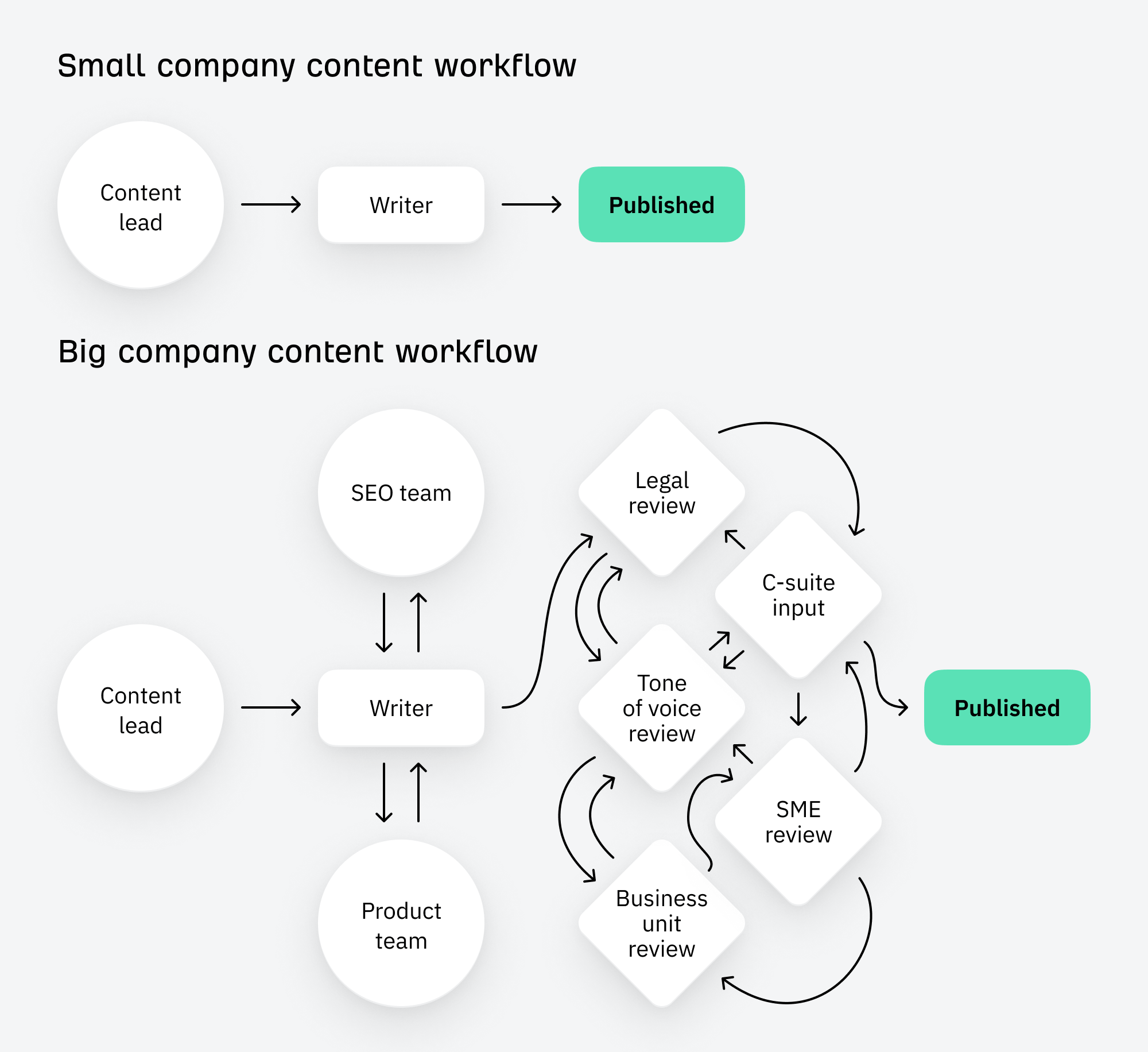

Why Big Companies Make Bad Content

It’s like death and taxes: inevitable. The bigger a company gets, the worse its content marketing becomes.

HubSpot teaching you how to type the shrug emoji or buy bitcoin stock. Salesforce sharing inspiring business quotes. GoDaddy helping you use Bing AI, or Zendesk sharing catchy sales slogans.

Judged by content marketing best practice, these articles are bad.

They won’t resonate with decision-makers. Nobody will buy a HubSpot license after Googling “how to buy bitcoin stock.” It’s the very definition of vanity traffic: tons of visits with no obvious impact on the business.

So why does this happen?

There’s an obvious (but flawed) answer to this question: big companies are inefficient.

As companies grow, they become more complicated, and writing good, relevant content becomes harder. I’ve experienced this firsthand:

- extra rounds of legal review and stakeholder approval creeping into processes.

- content watered down to serve an ever-more generic “brand voice”.

- growing misalignment between search and content teams.

- a lack of content leadership within the company as early employees leave.

Similarly, funded companies have to grow, even when they’re already huge. Content has to feed the machine, continually increasing traffic… even if that traffic never contributes to the bottom line.

There’s an element of truth here, but I’ve come to think that both these arguments are naive, and certainly not the whole story.

It is wrong to assume that the same people who grew the company suddenly forgot everything they once knew about content, and wrong to assume that companies willfully target useless keywords just to game their OKRs.

Instead, let’s assume that this strategy is deliberate, not an oversight. I think bad content, and the vanity traffic it generates, is actually good for business.

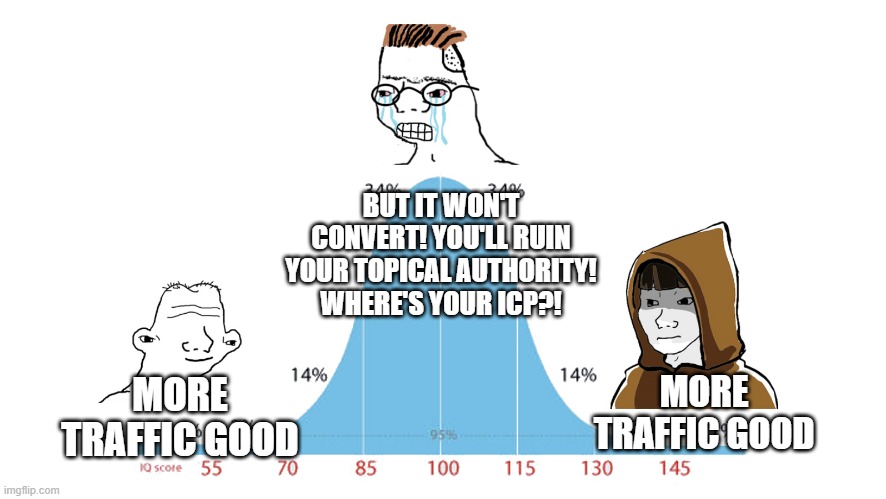

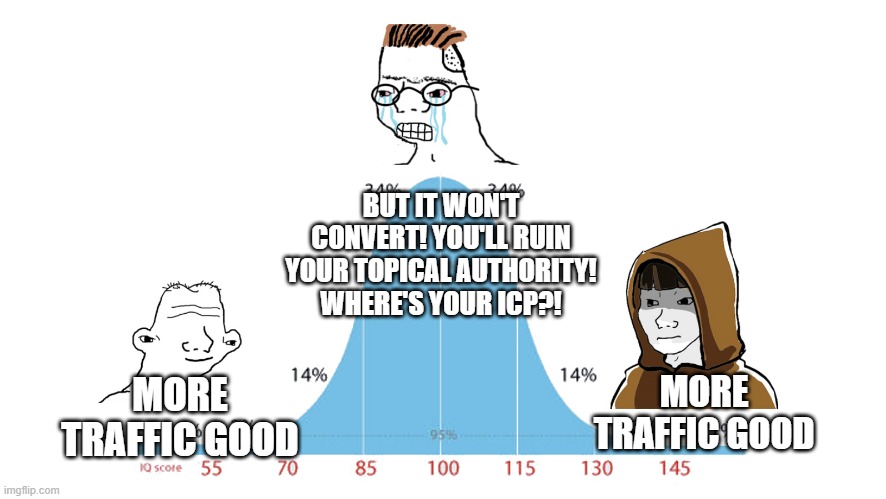

There are benefits to driving tons of traffic, even if that traffic never directly converts. Or put in meme format:

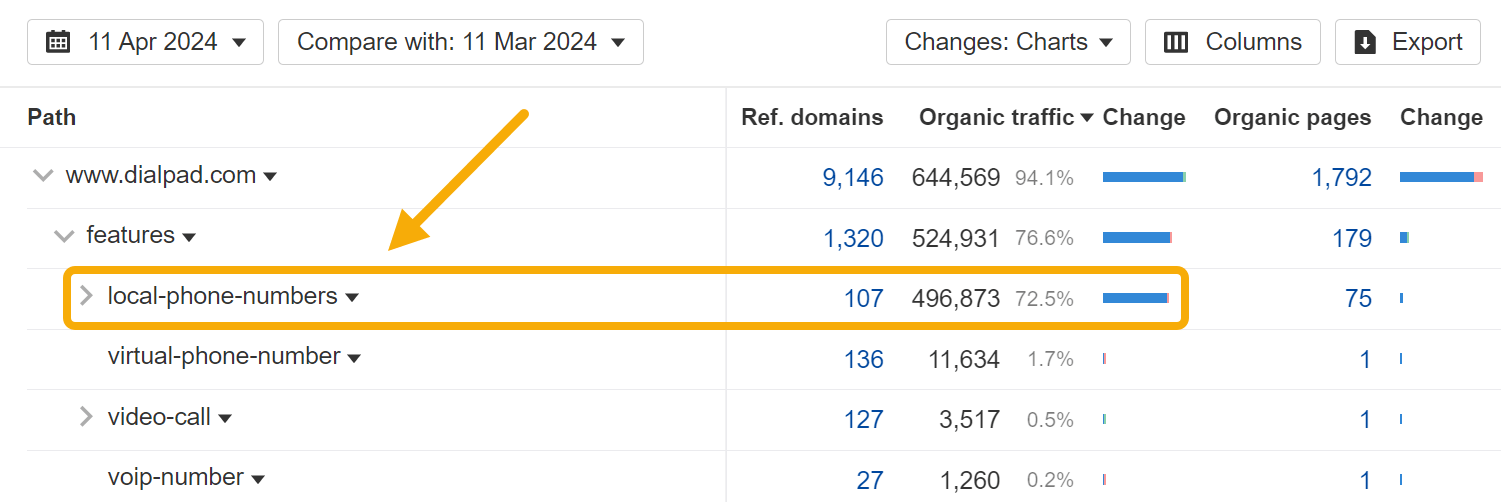

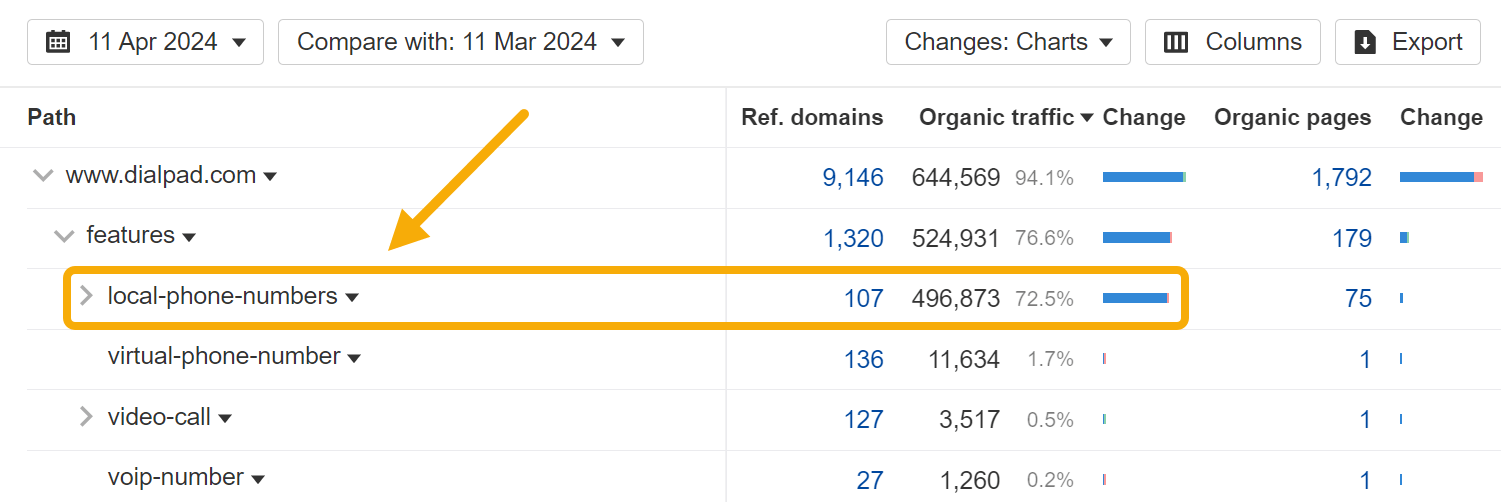

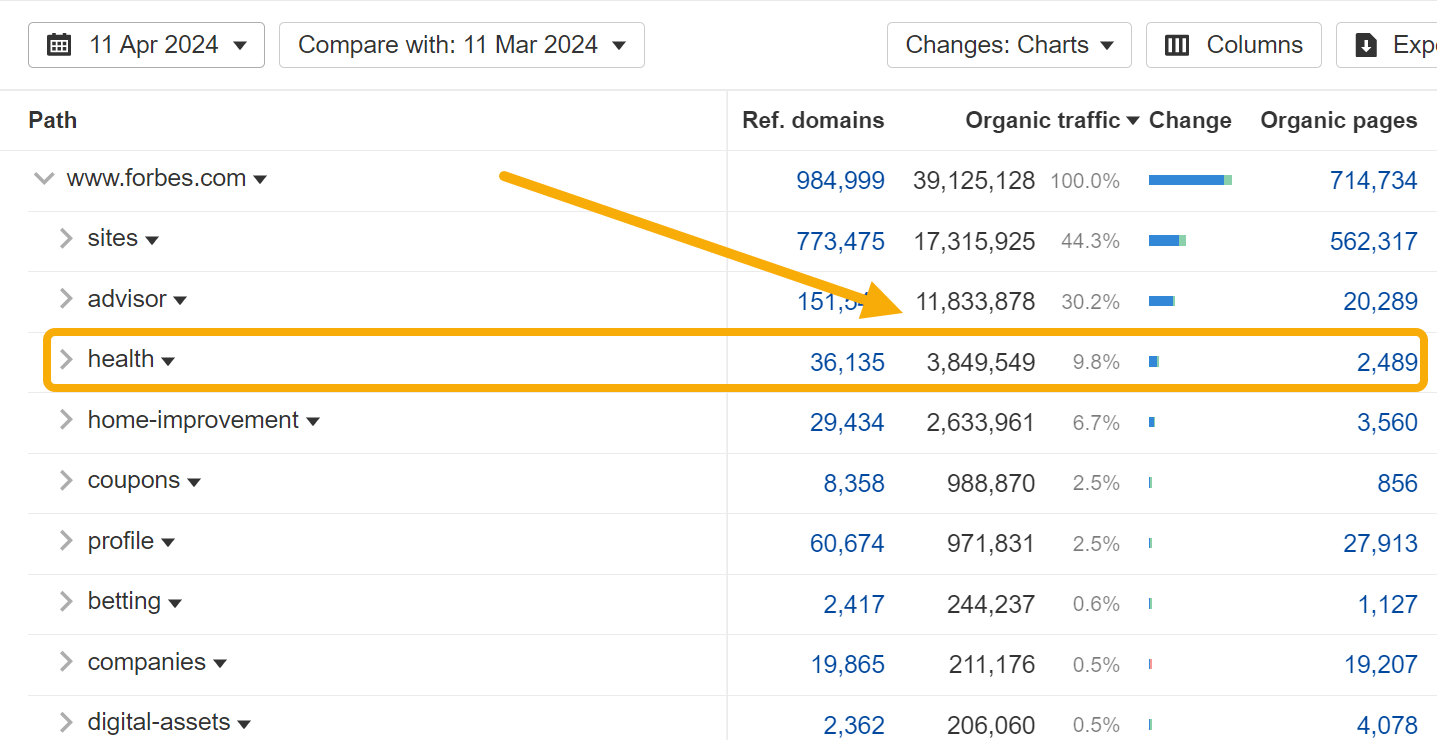

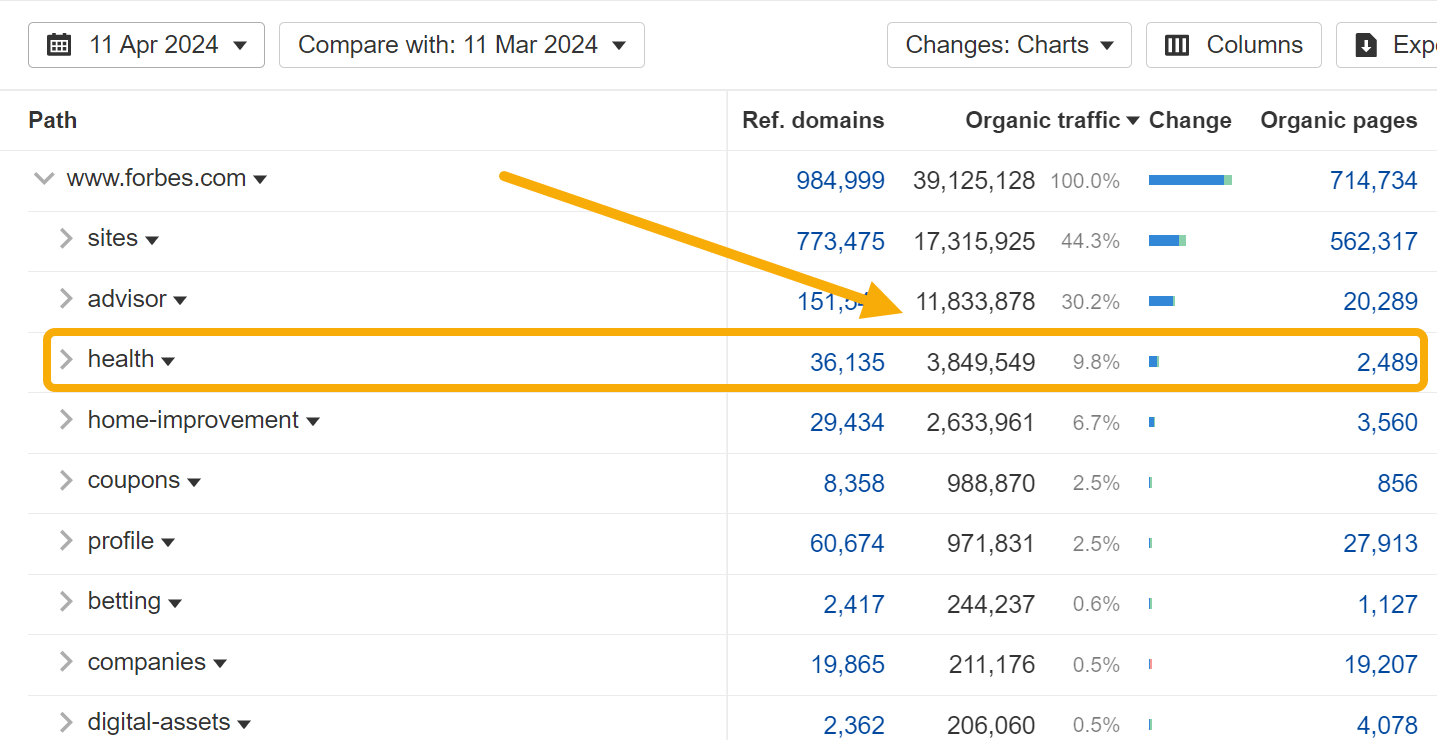

Programmatic SEO is a good example. Why does Dialpad create landing pages for local phone numbers?

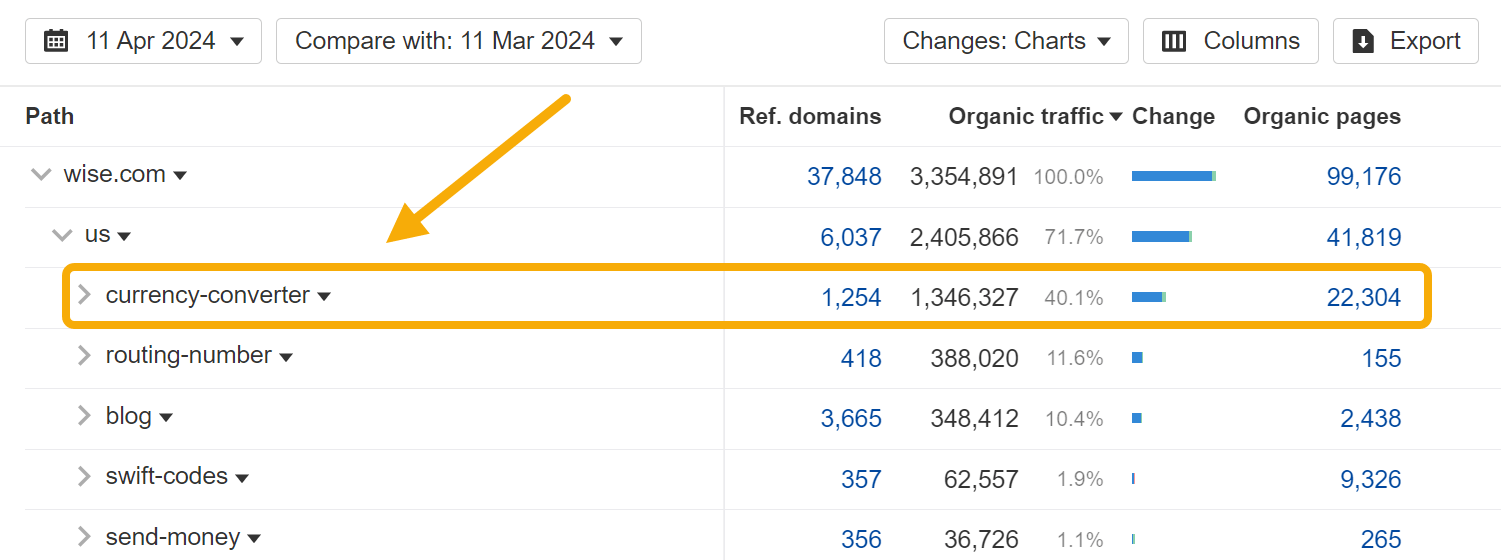

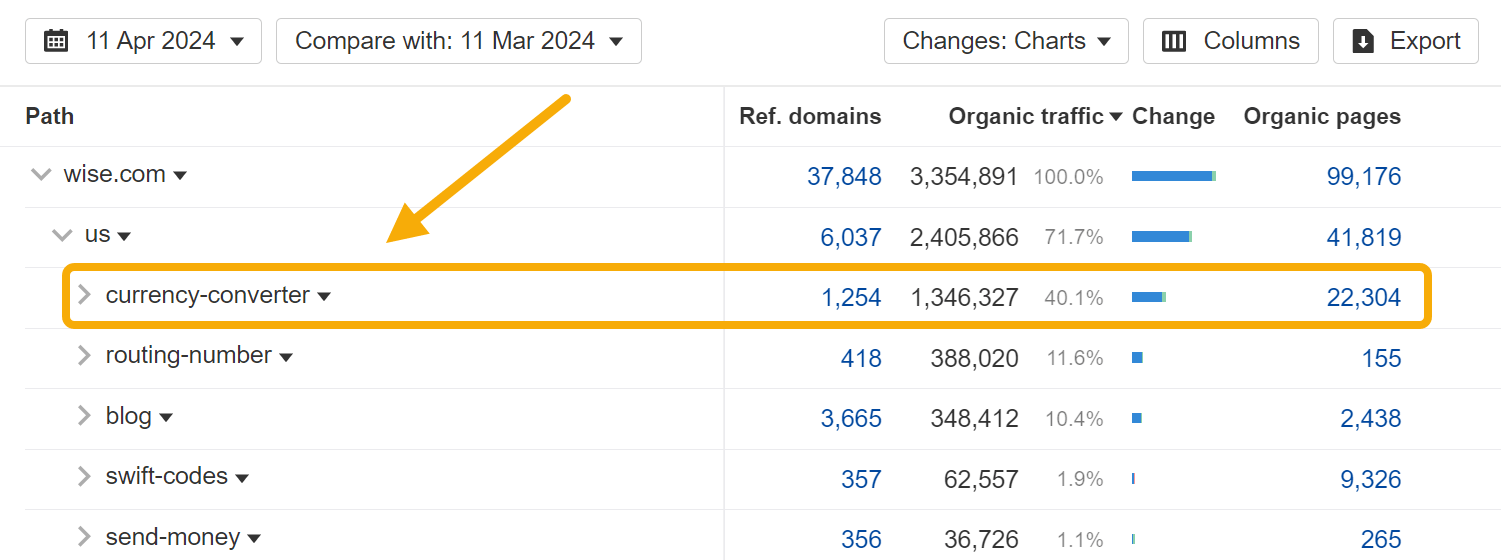

Why does Wise target exchange rate keywords?

Why do we have a page listing the most popular websites?

As this Twitter user points out, these articles will never convert…

…but they don’t need to.

Every published URL and targeted keyword is a new doorway from the backwaters of the internet into your website. It’s a chance to acquire backlinks that wouldn’t otherwise exist, and an opportunity to get your brand in front of thousands of new, otherwise unfamiliar people.

These benefits might not directly translate into revenue, but over time, in aggregate, they can have a huge indirect impact on revenue. They can:

- Strengthen domain authority and the search performance of every other page on the website.

- Boost brand awareness, and encourage serendipitous interactions that land your brand in front of the right person at the right time.

- Deny your competitors traffic and dilute their share of voice.

These small benefits become more worthwhile when multiplied across many hundreds or thousands of pages. If you can minimize the cost of the content, there is relatively little downside.

What about topical authority?

“But what about topical authority?!” I hear you cry. “If you stray too far from your area of expertise, won’t rankings suffer for it?”

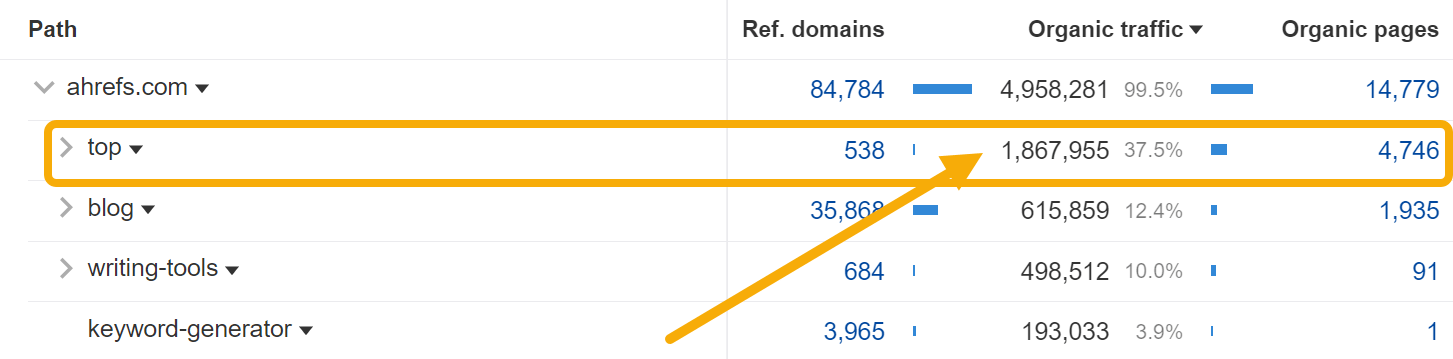

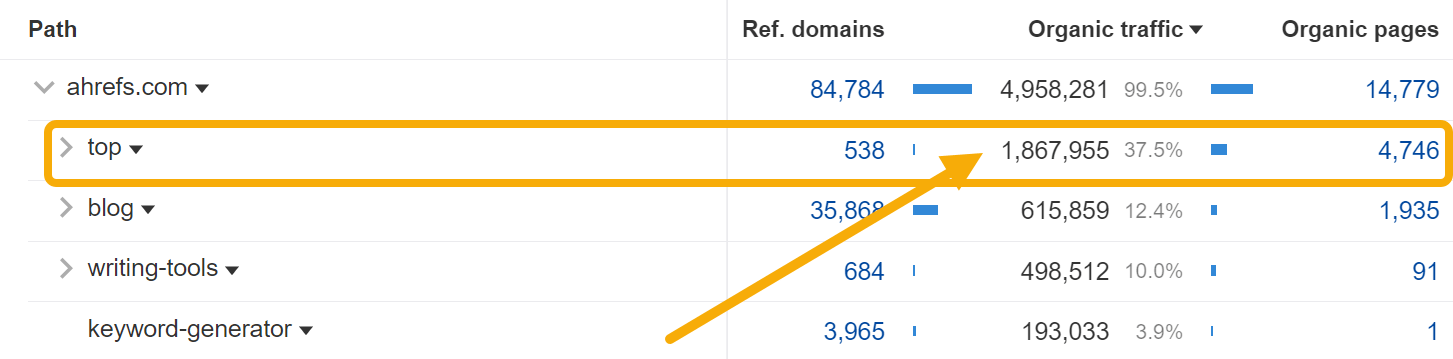

I reply simply with this screenshot of Forbes’ “health” subfolder, generating almost 4 million estimated monthly organic pageviews:

And big companies can minimize cost. For large, established brands, the marginal cost of content creation is relatively low.

Many companies scale their output through networks of freelance writers, avoiding the cost of fully loaded employees. They have established, efficient processes for research, briefing, editorial review, publication, and maintenance. The cost of an additional “unit” of content (or ten, or a hundred) is not that great, especially relative to other marketing channels.

There is also relatively little opportunity cost to consider (the chance that energy spent on “vanity” traffic would be better spent elsewhere, on more business-relevant topics).

In reality, many of the companies engaging in this strategy have already plucked the low-hanging fruit and written almost every product-relevant topic. There are a finite number of high traffic, high relevance topics; blog consistently for a decade and you too will reach these limits.

On top of that, the HubSpots and Salesforces of the world have very established, very efficient sales processes. Content gating, lead capture and scoring, and retargeting allow them to put very small conversion rates to relatively good use.

Even HubSpot’s article on Bitcoin stock has its own relevant call-to-action—and for HubSpot, building a database of aspiring investors is more valuable than it sounds, because…

The bigger a company grows, the bigger its audience needs to be to continue sustaining that growth rate.

Companies generally expand their total addressable market (TAM) as they grow, like HubSpot broadening from marketing to sales and customer success, launching new product lines for new—much bigger—audiences. This means the target audience for their content marketing grows alongside.

As Peep Laja put it:

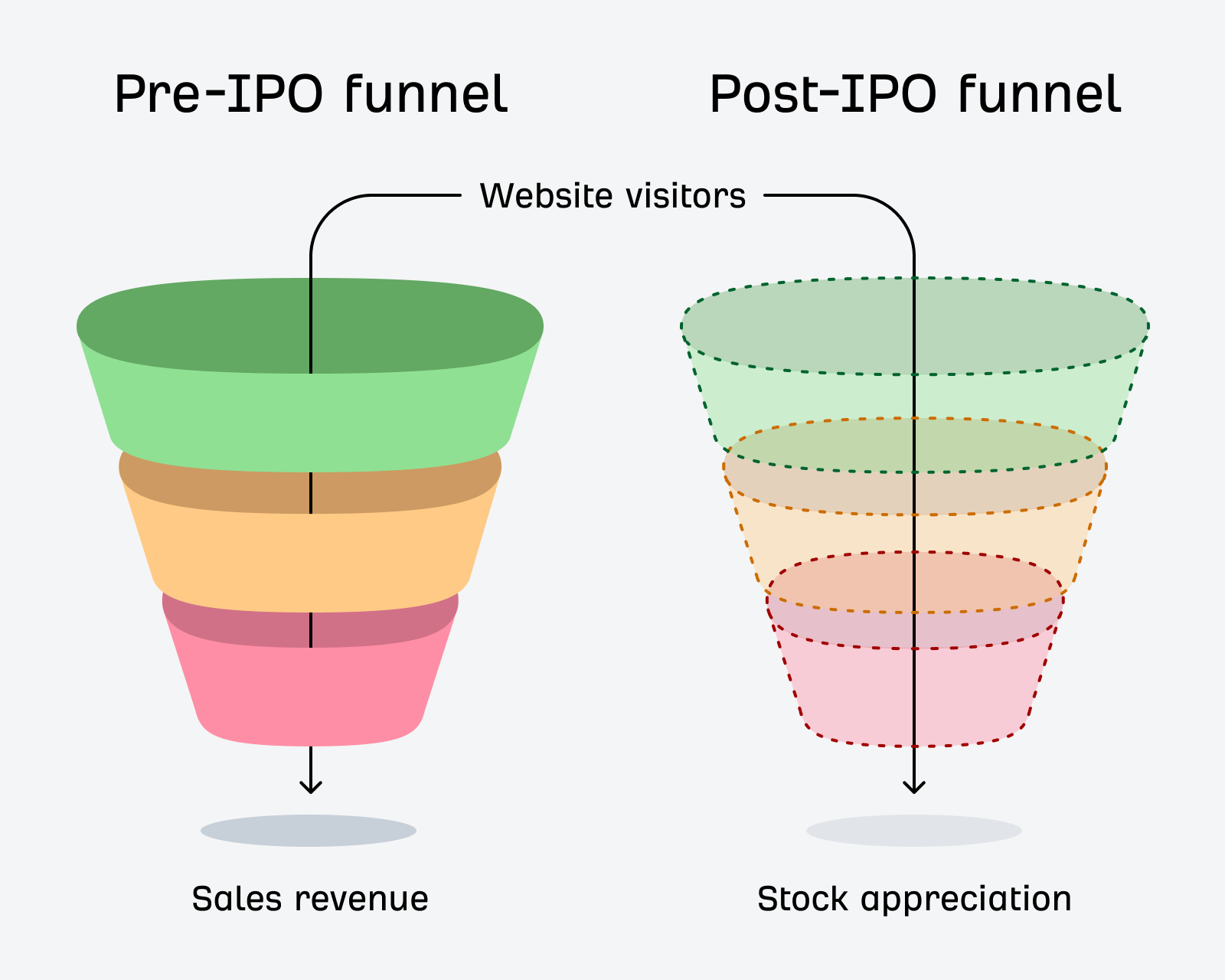

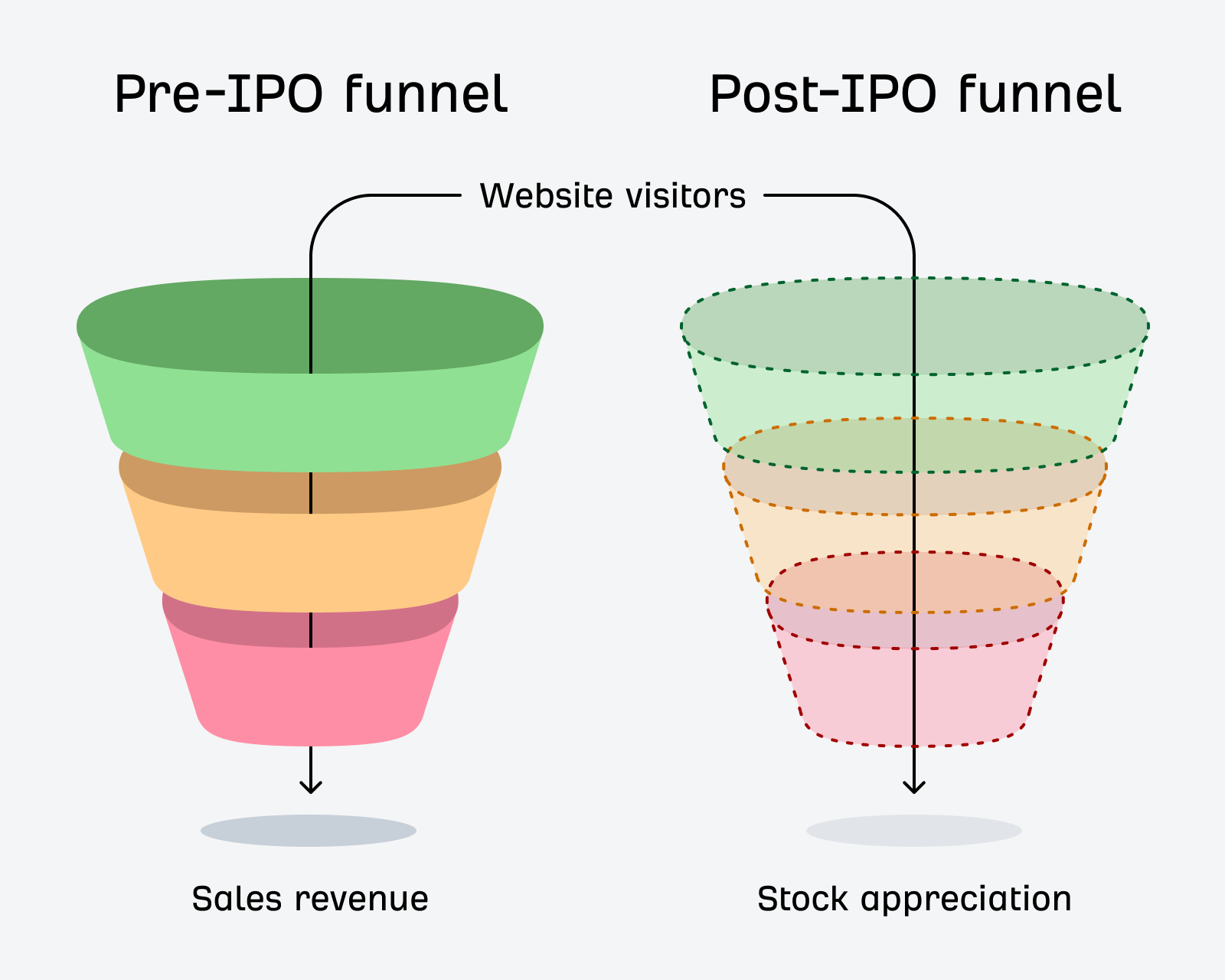

But for the biggest companies, this principle is taken to an extreme. When a company gears up to IPO, its target audience expands to… pretty much everyone.

This was something Janessa Lantz (ex-HubSpot and dbt Labs) helped me understand: the target audience for a post-IPO company is not just end users, but institutional investors, market analysts, journalists, even regular Jane investors.

These are people who can influence the company’s worth in ways beyond simply buying a subscription: they can invest or encourage others to invest and dramatically influence the share price. These people are influenced by billboards, OOH advertising and, you guessed it, seemingly “bad” content showing up whenever they Google something.

You can think of this as a second, additional marketing funnel for post-IPO companies:

These visitors might not purchase a software subscription when they see your article in the SERP, but they will notice your brand, and maybe listen more attentively the next time your stock ticker appears on the news.

They won’t become power users, but they might download your eBook and add an extra unit to the email subscribers reported in your S-1.

They might not contribute revenue now, but they will in the future: in the form of stock appreciation, or becoming the target audience for a future product line.

Vanity traffic does create value, but in a form most content marketers are not used to measuring.

If any of these benefits apply, then it makes sense to acquire them for your company—but also to deny them to your competitors.

SEO is an arms race: there are a finite number of keywords and topics, and leaving a rival to claim hundreds, even thousands of SERPs uncontested could very quickly create a headache for your company.

SEO can quickly create a moat of backlinks and brand awareness that can be virtually impossible to challenge; left unchecked, the gap between your company and your rival can widen at an accelerating pace.

Pumping out “bad” content and chasing vanity traffic is a chance to deny your rivals unchallenged share of voice, and make sure your brand always has a seat at the table.

Final thoughts

These types of articles are miscategorized—instead of thinking of them as bad content, it’s better to think of them as cheap digital billboards with surprisingly great attribution.

Big companies chasing “vanity traffic” isn’t an accident or oversight—there are good reasons to invest energy into content that will never convert. There is benefit, just not in the format most content marketers are used to.

This is not an argument to suggest that every company should invest in hyper-broad, high-traffic keywords. But if you’ve been blogging for a decade, or you’re gearing up for an IPO, then “bad content” and the vanity traffic it creates might not be so bad.


Is It Alternatives You’re Looking For?

Whatever the reason, in this article, I’ll share some alternatives to HARO and a few extra ways to get expert quotes and backlinks for your website.

Disclaimer: I am not a PR expert. I did a bit of outreach a few years ago, but I have only been an occasional user of HARO in the past year or so.

So, rather than providing my opinion on the best alternatives to HARO, I thought it would be fun to ask users of the “new HARO” what they thought were the best alternatives.

I wanted to give the “new HARO”—Connectively—the benefit of the doubt.

Still, a few minutes after my pitch was accepted, I got two responses that appeared to be AI-generated from two “visionary directors,” both with “extensive experience.”

My experience of Connectively so far mirrored Josh’s experience of old HARO: The responses were most likely automated.

Although I was off to a bad start, looking through most of the responses afterward, these two were the only blatant automated pitches I could spot.

These responses weren’t included in my survey, and anyone who saw my pitch would have to copy and paste the survey link to complete it—increasing the chance of genuine human responses—hopefully.

So, without further ado, here are the results of the survey.

Sidenote.

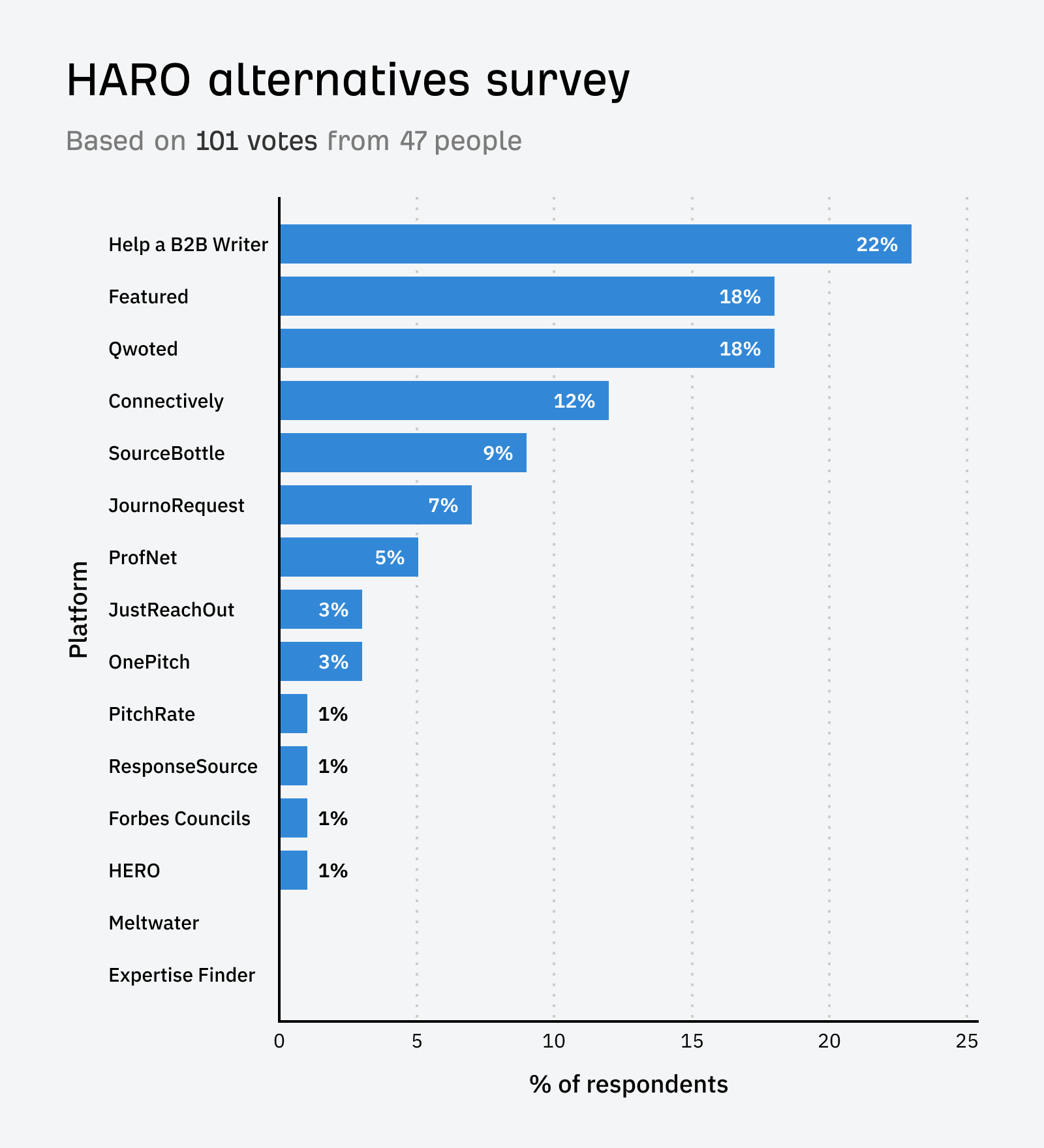

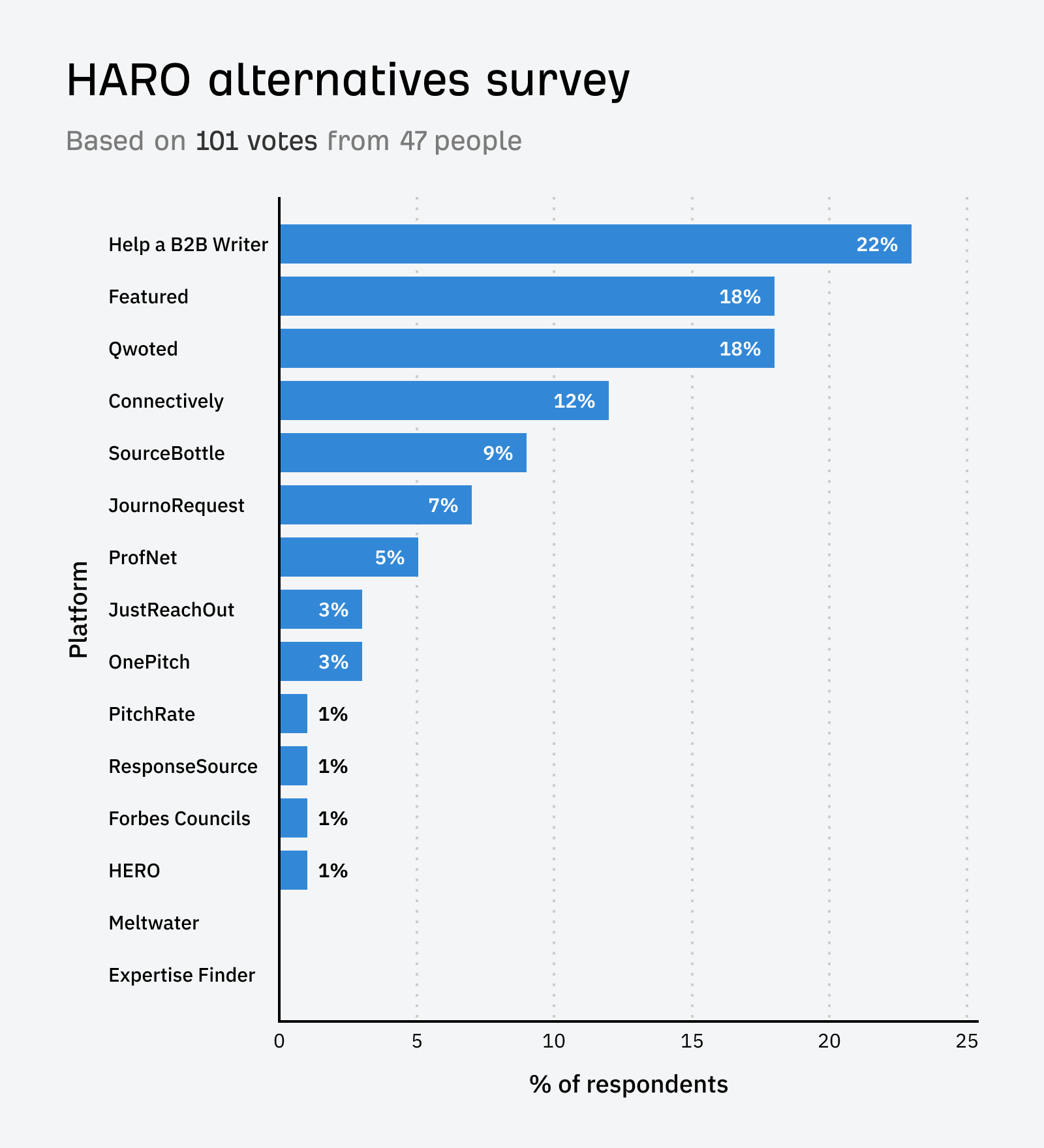

The survey on Connectively ran for a week and received 101 votes. Respondents could vote for their top three HARO alternatives.

Price: Free.

Help a B2B Writer was the #1 alternative platform respondents recommended. In my survey it got 22% of the vote.

Help a B2B Writer is a platform run by Superpath that is similar to HARO but focused on connecting business-to-business (B2B) journalists with industry experts and sources for their stories.

Price: Free and paid plans. Paid plans start at $99 per month.

Coming in joint second place, Featured was popular, scoring 18% of the vote.

Featured connects journalists with experts and thought leaders. It allows experts to create profiles showcasing their expertise and helps journalists find suitable sources for their stories.

Price: Free and paid plans. Paid plans start at $99 per month.

Qwoted is another platform that I’ve heard talked about a lot. It came in joint second place, scoring 18% of the vote.

Qwoted matches journalists with expert sources, allowing them to collaborate on creating high-quality content. It streamlines the process of finding and connecting with relevant sources.

Price: Free for ten pitches per month.

Despite being the “new HARO,” Connectively came fourth on my list with 12% of the vote. Surprisingly, it wasn’t the top choice even among users of its own platform.

Connectively connects journalists with sources and experts. It helps journalists find relevant sources for their stories and allows experts to gain media exposure.

Price: Free and paid plans. Paid plans start at $5.95 per month.

SourceBottle is an online platform that connects journalists, bloggers, and media professionals with expert sources. It allows experts to pitch their ideas and insights to journalists looking for story sources. It scored 9% of the vote in my survey.

Price: Free.

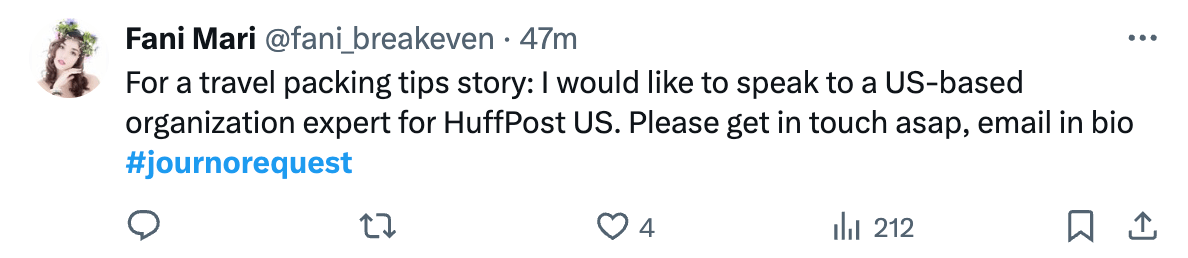

JournoRequest is an X account that shares journalist requests for sources. UK-based journalists and experts often use it, but it can sometimes have international reach. It scored 7% of the vote in my survey.

Price: Paid. Plans start at $1,150 per year.

ProfNet connects journalists with expert sources for their articles, interviews, and other media content, and helps subject matter experts gain media exposure and share their expertise. It scored 5% of the vote in my survey.

Price: 7-day free trial and paid plans. Paid plans start at $147 per month.

JustReachOut is a PR and influencer outreach platform that helps businesses find and connect with relevant journalists and influencers. It provides tools for personalized outreach and relationship management. It scored 3% of the vote in my survey.

Price: 14-day free trial and paid plans. Paid plans start at $50 per month.

OnePitch is a platform that simplifies the process of pitching story ideas to journalists. Businesses and PR professionals can create and send targeted pitches to relevant media outlets. It scored 3% of the vote in my survey.

Price: Free.

PitchRate is a free PR tool that connects journalists with highly rated experts. It’s useful for subject matter experts looking for free PR leads, media coverage, or publicity, and for journalists looking for credible sources. It scored 1% of the vote in my survey.

Price: Free and paid plans. Paid plans start at ~$105 per month.

A UK service that connects media professionals with expert sources, press releases, and PR contacts. It scored 1% of the vote in my survey.

Price: Invitation-only platform.

Forbes Councils is an invitation-only community for executives and entrepreneurs. Members can contribute expert insights and thought leadership content to Forbes.com and gain media exposure. It scored 1% of the vote in my survey.

Price: Free.

Yes, you read that right.

HERO was created by Peter Shankman, the original creator of HARO, who said the platform will always be free. It scored 1% of the vote in my survey.

Peter set up the platform after receiving over 2,000 emails asking him to build a new version of HARO.

Price: Paid. Sign up for details.

Meltwater received no votes in my survey, but I included it because I’d seen it shared on social media as a paid alternative to HARO.

It’s a media intelligence and social media monitoring platform. It provides tools for tracking media coverage, analyzing sentiment, and identifying influencers and journalists for outreach.

Price: Free.

Expertise Finder also received no votes in my survey, but I included it because I’d seen it recommended as a HARO alternative on LinkedIn. It’s a platform that helps journalists find and connect with expert sources from universities.

HARO had a dual purpose for SEOs: it was a place to acquire links, but it also was a place to get expert quotes on topics for your next article.

Here are a few more free methods outside the platforms we’ve covered that can help you get expert quotes and links.

We’ve already seen that JournoRequest is a popular X account that shares journalist requests for sources.

But you can also follow hashtags on X to access even more opportunities.

Here are my favorite hashtags to follow:

I used to track the #journorequest hashtag to find opportunities for my clients when I worked agency-side, so I know it can work well for quotes and link acquisition.

Here are two opportunities I found just by checking the #journorequest hashtag:

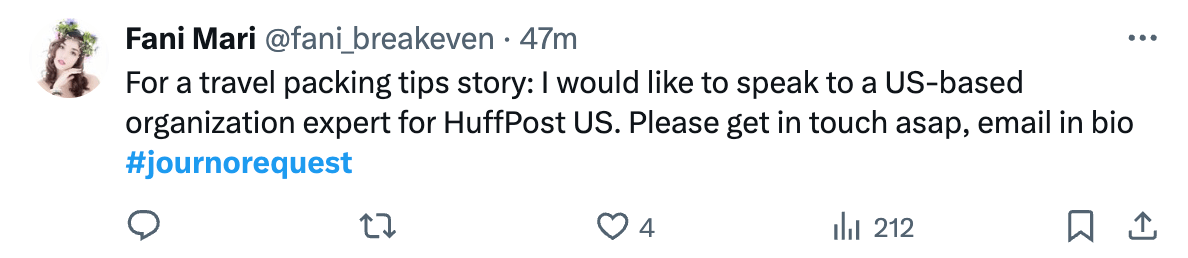

Here’s another example from the Telegraph—a DR 92 website:

Certain types of content are more likely to be shared by journalists and PRs than others.

One of these types of content is statistics-based content. The reason? Journalists often use statistics to support their points.

When they include your statistic in their post, they often link back to your post as the source.

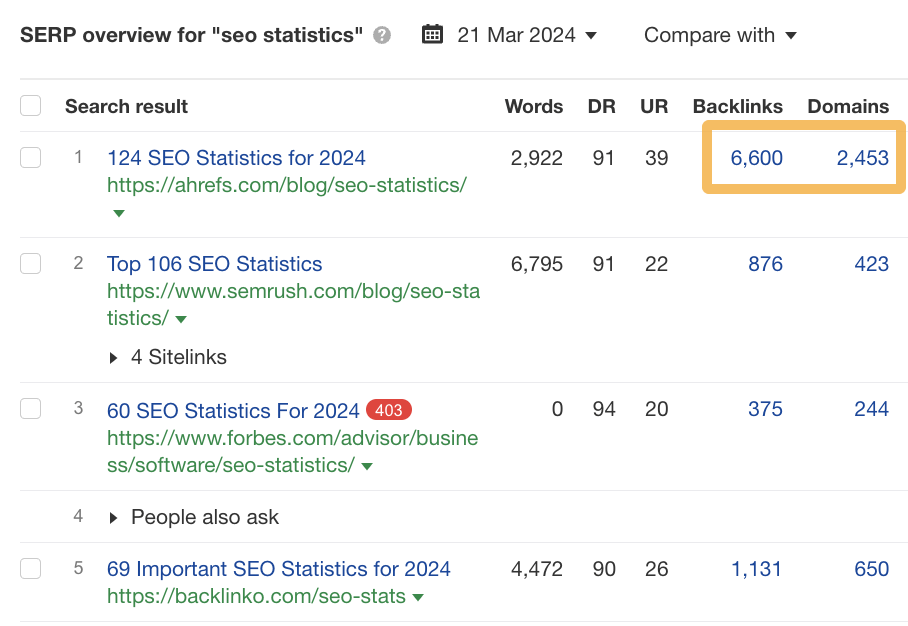

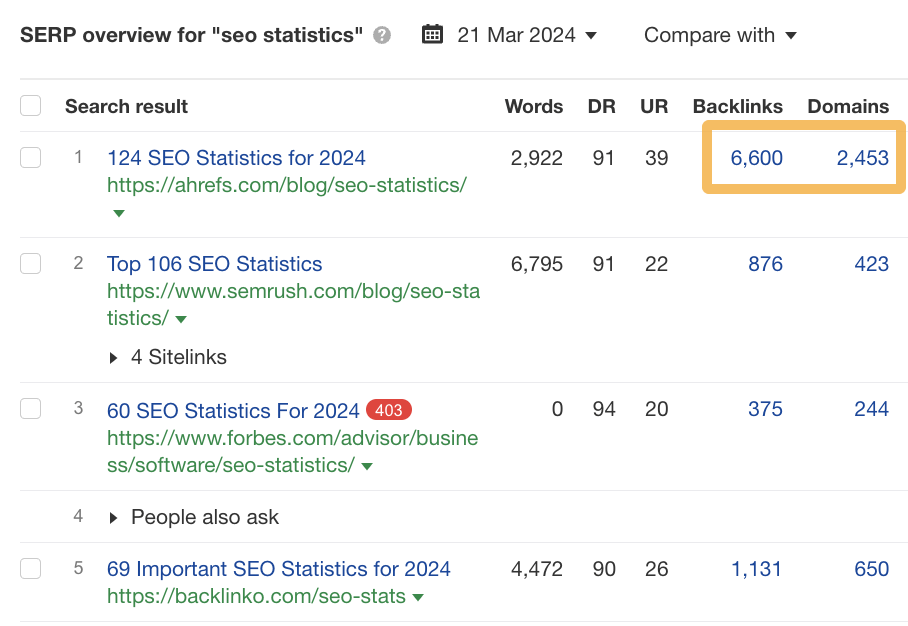

We tested this with our SEO statistics post, and as you can see, it still ranks number one in Google.

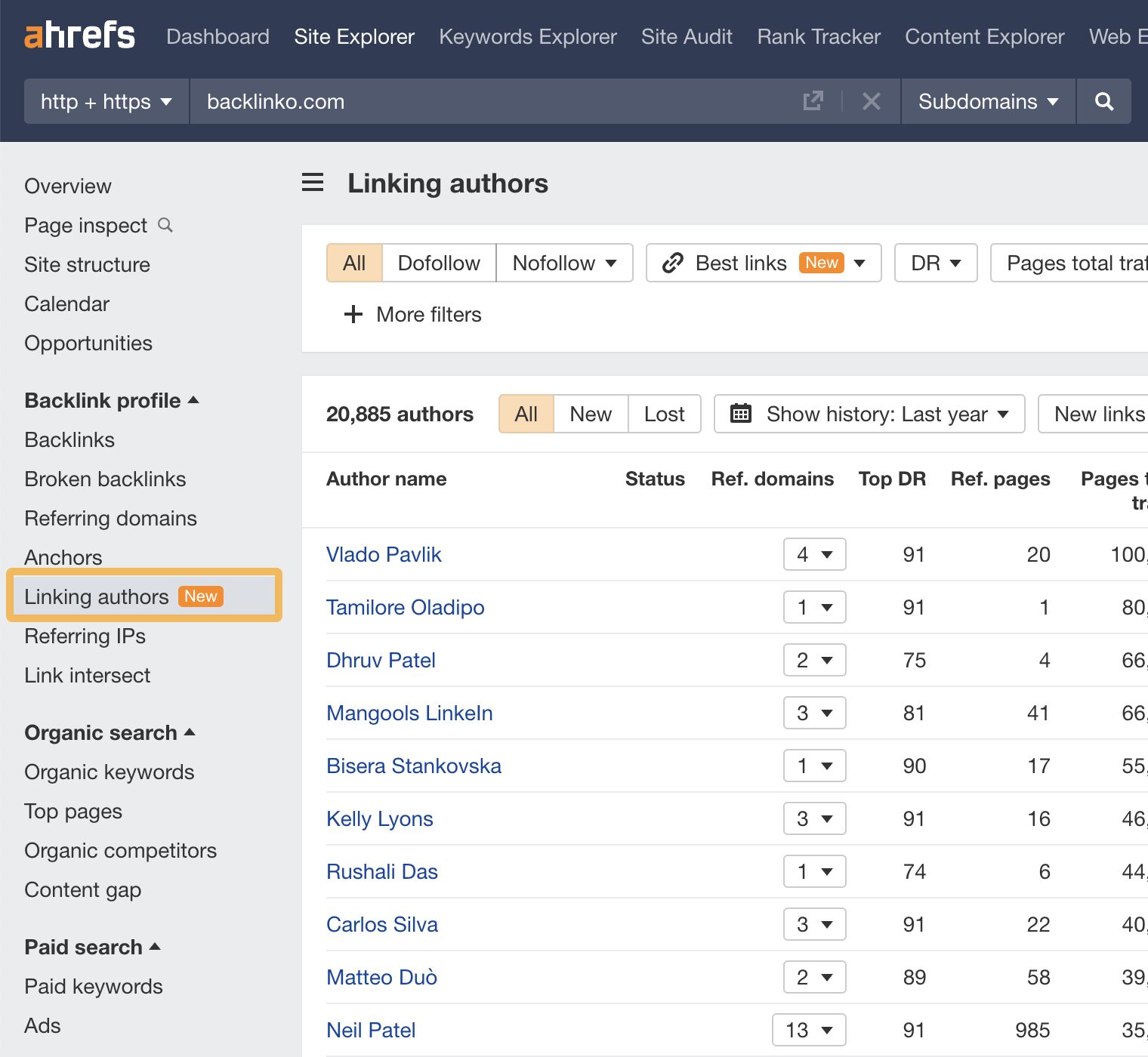

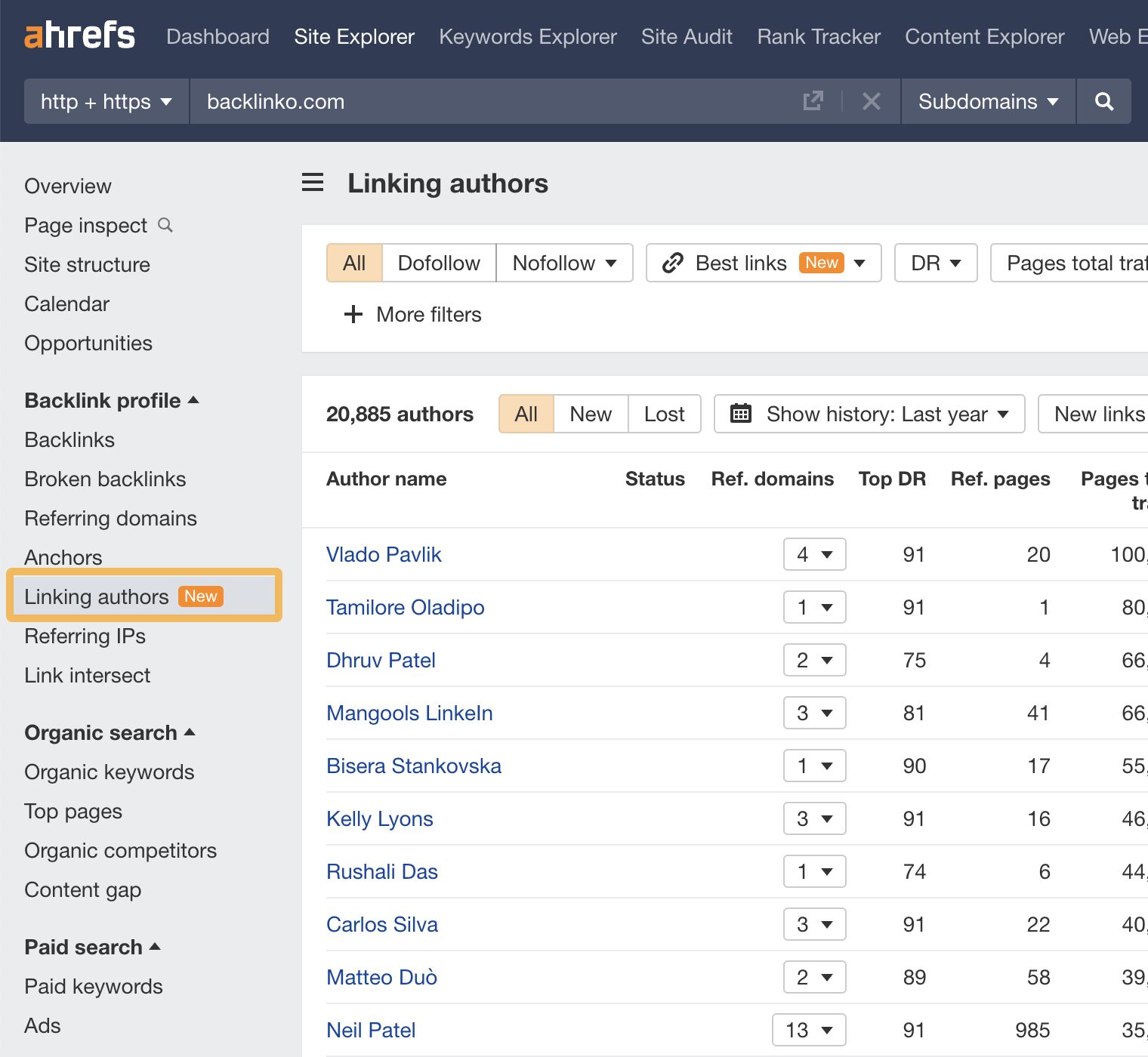

Another method is to use the Linking authors report in Ahrefs’ Site Explorer. This report shows the names of the authors who link to any website you enter.

You can see which authors link to their site by entering your competitor’s domain. Some of these authors may represent outreach opportunities for your website as well.

- Head to Site Explorer, click on Linking authors

- Type in your competitor’s URL

- Contact any authors that you think may be interested in your website and its content

Tip

If you export your website’s linking authors and your competitor’s linking authors into a spreadsheet on separate tabs, you can compare the two lists to see which authors link only to your competitor’s website.
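If you’d rather not compare the two tabs by eye, a few lines of Python can do the comparison for you. This is a minimal sketch, assuming you’ve exported both linking-authors lists as CSV files. The `Author` column name and the UTF-8 encoding are assumptions, so check your export’s header row and encoding and adjust them. `load_authors` and `competitor_only_authors` are hypothetical helper names for illustration, not part of any Ahrefs tool.

```python
import csv


def load_authors(csv_path, column="Author", encoding="utf-8"):
    """Read author names from one column of a CSV export.

    The default column name and encoding are assumptions: check the
    header row and encoding of your own export and adjust to match.
    """
    names = set()
    with open(csv_path, newline="", encoding=encoding) as f:
        for row in csv.DictReader(f):
            name = (row.get(column) or "").strip()
            if name:
                names.add(name)
    return names


def competitor_only_authors(your_authors, competitor_authors):
    """Return authors who link to the competitor but not to you.

    Names are trimmed and compared case-insensitively against your
    list, so "Jane Doe" and "jane doe " count as the same author.
    """
    yours = {name.strip().casefold() for name in your_authors}
    only_theirs = {
        name.strip()
        for name in competitor_authors
        if name.strip() and name.strip().casefold() not in yours
    }
    return sorted(only_theirs)


if __name__ == "__main__":
    # Inline example lists; in practice you would call load_authors()
    # on your own export and on your competitor's export.
    mine = ["Jane Doe", "Sam Lee"]
    theirs = ["jane doe", "Priya Shah", "Alex Kim"]
    print(competitor_only_authors(mine, theirs))  # ['Alex Kim', 'Priya Shah']
```

The case-insensitive match is a deliberate choice: the same author can appear with slightly different capitalization across exports, and exact string matching would wrongly flag them as outreach targets.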

When I was about to wrap up this article, I was contacted by Greg Heilers of Jolly SEO on LinkedIn.

He said he’d sent 200,000+ pitches over the years and wanted to share the results with me.

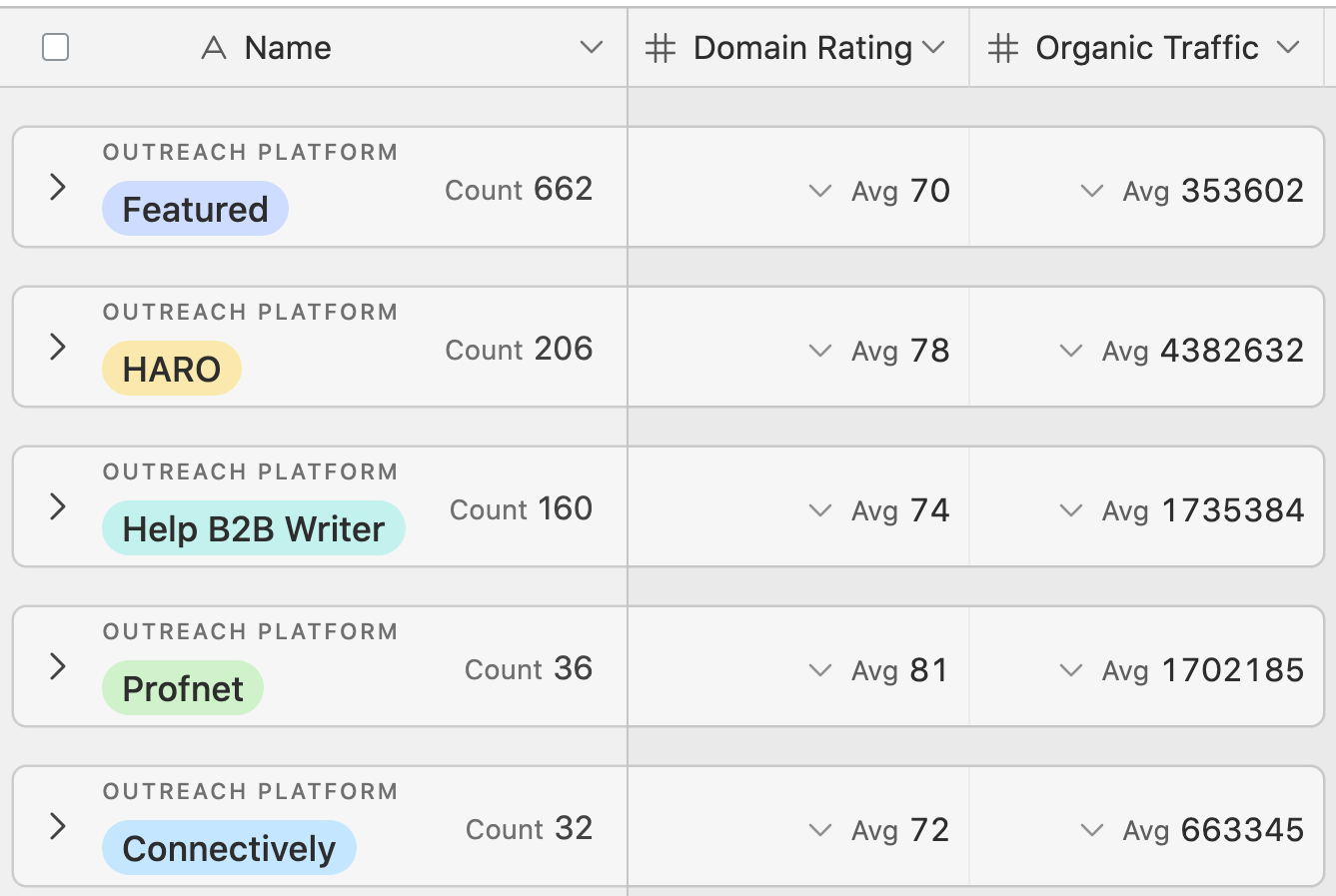

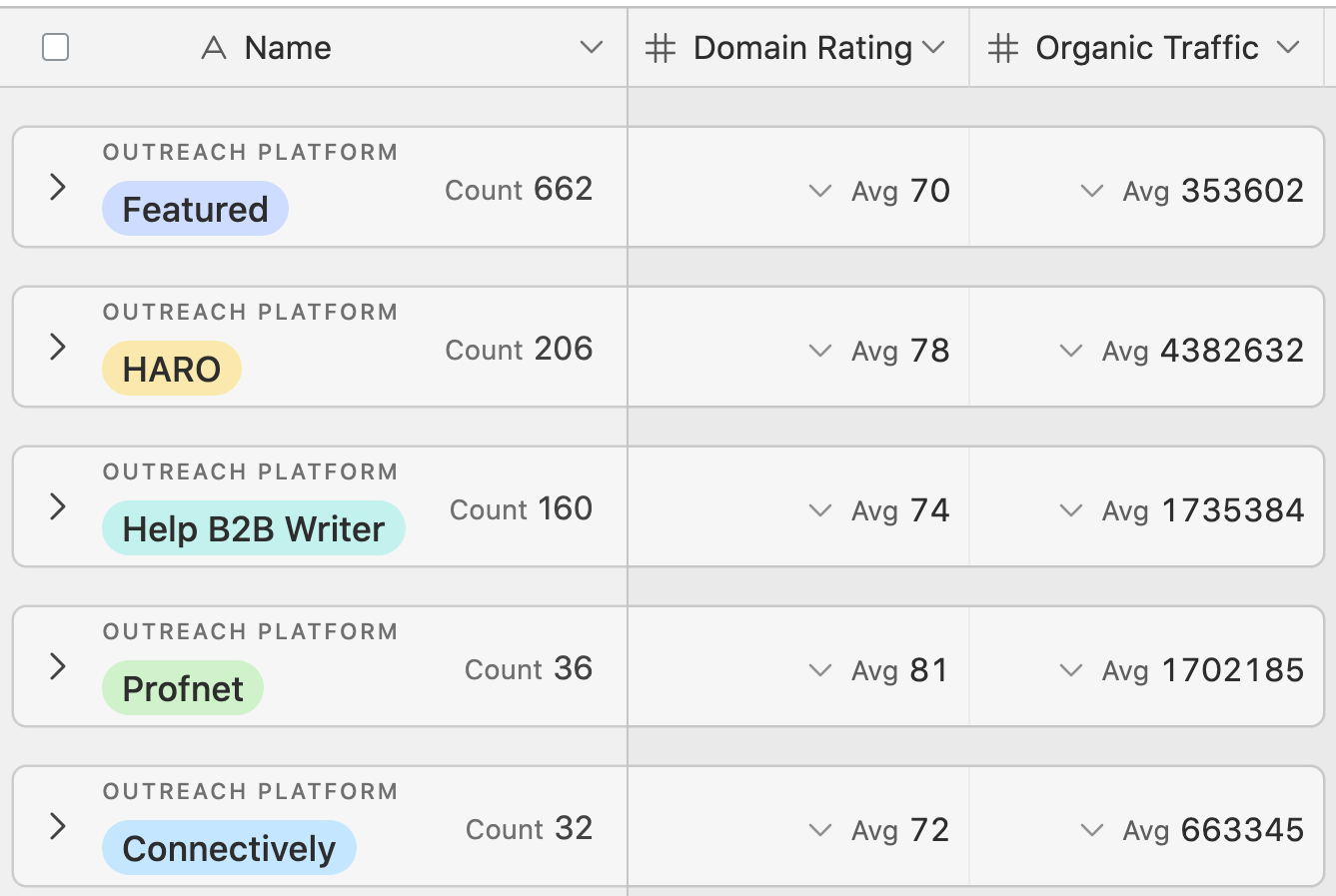

These are his top three platforms over the last 1,000 pitches he sent. Interestingly, the results are similar to those of my much smaller survey.

Hopefully, the data here speaks for itself: the high-quality links and traffic available from HARO alternatives are considerable.

This research shows that Featured gained the most link placements in this campaign.


Final thoughts

There are many options for sourcing expert quotes and getting links for your next marketing campaigns. HARO may be dead, but its legacy lives on.

My highly unscientific survey suggests that Help a B2B Writer is the favorite alternative among “new HARO” users, but for HARO purists, there really is only one choice: HERO.

Give your favorites from this list a whirl, and let me know if you have any success. Got more questions? Ping me on X. 🙂
