SEO

Google Answers A Crawl Budget Issue Question

Someone on Reddit posted a question about their “crawl budget” issue and asked if a large number of 301 redirects to 410 error responses were causing Googlebot to exhaust their crawl budget. Google’s John Mueller offered a reason to explain why the Redditor may be experiencing a lackluster crawl pattern and clarified a point about crawl budgets in general.

Crawl Budget

It’s a commonly accepted idea that Google has a crawl budget, an idea that SEOs invented to explain why some sites aren’t crawled enough. The idea is that every site is allotted a set number of crawls, a cap on how much crawling a site qualifies for.

It’s important to understand the background of the crawl budget idea because it helps clarify what a crawl budget really is. Google has long insisted that there is no one thing at Google that can be called a crawl budget, although how Google crawls a site can give the impression that there is a cap on crawling.

Matt Cutts, a top Google engineer at the time, alluded to this fact about the crawl budget in a 2010 interview.

Matt answered a question about a Google crawl budget by first explaining that there was no crawl budget in the way that SEOs conceive of it:

“The first thing is that there isn’t really such thing as an indexation cap. A lot of people were thinking that a domain would only get a certain number of pages indexed, and that’s not really the way that it works.

There is also not a hard limit on our crawl.”

In 2017 Google published a crawl budget explainer that brought together numerous crawling-related facts that, taken together, resemble what the SEO community was calling a crawl budget. This new explanation is more precise than the vague catch-all phrase “crawl budget” ever was (Search Engine Journal published a summary of Google’s crawl budget document).

The main points about a crawl budget are:

- A crawl rate is the number of URLs Google can crawl based on the ability of the server to supply the requested URLs.

- A shared server for example can host tens of thousands of websites, resulting in hundreds of thousands if not millions of URLs. So Google has to crawl servers based on the ability to comply with requests for pages.

- Pages that are essentially duplicates of others (like faceted navigation) and other low-value pages can waste server resources, limiting the number of pages that a server can give to Googlebot to crawl.

- Lightweight pages are easier to crawl in greater numbers.

- Soft 404 pages can cause Google to focus on those low-value pages instead of the pages that matter.

- Inbound and internal link patterns can help influence which pages get crawled.

Reddit Question About Crawl Rate

The person on Reddit wanted to know if the perceived low-value pages they were creating were influencing Google’s crawl budget. In short, a request for a non-secure URL of a page that no longer exists redirects to the secure version of the missing webpage, which serves a 410 error response (meaning the page is permanently gone).

It’s a legitimate question.

This is what they asked:

“I’m trying to make Googlebot forget to crawl some very-old non-HTTPS URLs, that are still being crawled after 6 years. And I placed a 410 response, in the HTTPS side, in such very-old URLs.

So Googlebot is finding a 301 redirect (from HTTP to HTTPS), and then a 410.

http://example.com/old-url.php?id=xxxx -301-> https://example.com/old-url.php?id=xxxx (410 response)

Two questions. Is G**** happy with this 301+410?

I’m suffering ‘crawl budget’ issues, and I do not know if this two responses are exhausting Googlebot

Is the 410 effective? I mean, should I return the 410 directly, without a first 301?”

Google’s John Mueller answered:

“G*?

301’s are fine, a 301/410 mix is fine.

Crawl budget is really just a problem for massive sites ( https://developers.google.com/search/docs/crawling-indexing/large-site-managing-crawl-budget ). If you’re seeing issues there, and your site isn’t actually massive, then probably Google just doesn’t see much value in crawling more. That’s not a technical issue.”
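The 301+410 chain described in the question can be illustrated with a short simulation. This is only a sketch under stated assumptions: `respond`, `trace`, and `GONE_PATHS` are hypothetical names invented for illustration, the rules mimic the Redditor's description rather than any real server configuration, and no network requests are made.

```python
from urllib.parse import urlsplit

# Hypothetical set of retired paths (an assumption for illustration).
GONE_PATHS = {"/old-url.php"}


def respond(url):
    """Simulate one server response: return (status, redirect_target)."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Every HTTP request is first redirected to its HTTPS twin.
        return 301, parts._replace(scheme="https").geturl()
    if parts.path in GONE_PATHS:
        # The HTTPS side reports the page as permanently gone.
        return 410, None
    return 200, None


def trace(url, max_hops=5):
    """Follow redirects and return the list of (url, status) hops."""
    hops = []
    for _ in range(max_hops):
        status, location = respond(url)
        hops.append((url, status))
        if status not in (301, 302, 307, 308) or location is None:
            break
        url = location
    return hops


for hop_url, hop_status in trace("http://example.com/old-url.php?id=xxxx"):
    print(hop_status, hop_url)
```

In this simulation the crawler makes two requests per old URL: one that receives the 301 and one that receives the 410. Per Mueller's answer, that mix is fine.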

Reasons For Not Getting Crawled Enough

Mueller responded that “probably” Google isn’t seeing the value in crawling more webpages. That means that the webpages could probably use a review to identify why Google might determine that those pages aren’t worth crawling.

Certain popular SEO tactics tend to create low-value webpages that lack originality. For example, a popular SEO practice is to review the top-ranked webpages to understand what factors explain why those pages are ranking, then use that information to improve one’s own pages by replicating what’s working in the search results.

That sounds logical but it’s not creating something of value. If you think of it as a binary One and Zero choice, where zero is what’s already in the search results and One represents something original and different, the popular SEO tactic of emulating what’s already in the search results is doomed to create another Zero, a website that doesn’t offer anything more than what’s already in the SERPs.

Clearly there are technical issues that can affect the crawl rate such as the server health and other factors.

But in terms of what is understood as a crawl budget, that’s something that Google has long maintained is a consideration for massive sites and not for smaller to medium size websites.

Read the Reddit discussion:

Is G**** happy with 301+410 responses for the same URL?

Featured Image by Shutterstock/ViDI Studio

Google Rolls Out New ‘Web’ Filter For Search Results

Google is introducing a filter that allows you to view only text-based webpages in search results.

The “Web” filter, rolling out globally over the next two days, addresses demand from searchers who prefer a stripped-down, simplified view of search results.

Danny Sullivan, Google’s Search Liaison, states in an announcement:

“We’ve added this after hearing from some that there are times when they’d prefer to just see links to web pages in their search results, such as if they’re looking for longer-form text documents, using a device with limited internet access, or those who just prefer text-based results shown separately from search features.”

— Google SearchLiaison (@searchliaison) May 14, 2024

The new functionality is a throwback to when search results were more straightforward. Now, they often combine rich media like images, videos, and shopping ads alongside the traditional list of web links.

How It Works

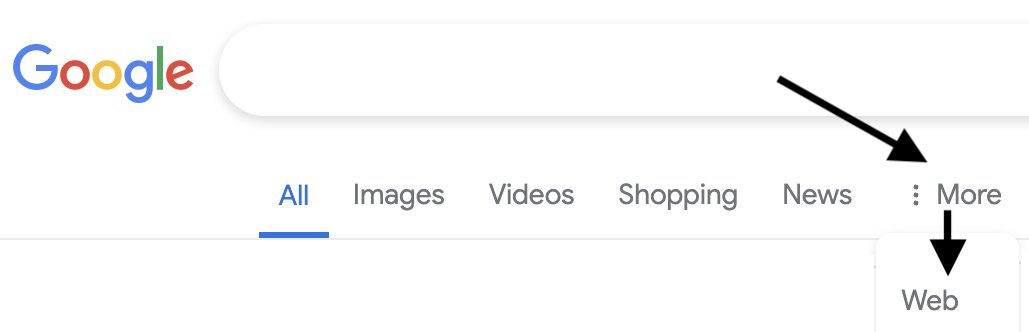

On mobile devices, the “Web” filter will be displayed alongside other filter options like “Images” and “News.”

If Google’s systems don’t automatically surface it based on the search query, desktop users may need to select “More” to access it.

Screenshot from: twitter.com/GoogleSearchLiaison, May 2024.

More About Google Search Filters

Google’s search filters allow you to narrow results by type. The options displayed are dynamically generated based on your search query and what Google’s systems determine could be most relevant.

The “All Filters” option provides access to filters that are not shown automatically.

Alongside filters, Google also displays “Topics” – suggested related terms that can further refine or expand a user’s original query into new areas of exploration.

For more about Google’s search filters, see its official help page.

Featured Image: egaranugrah/Shutterstock

Why Google Can’t Tell You About Every Ranking Drop

In a recent Twitter exchange, Google’s Search Liaison, Danny Sullivan, provided insight into how the search engine handles algorithmic spam actions and ranking drops.

The discussion was sparked by a website owner’s complaint about a significant traffic loss and the inability to request a manual review.

Sullivan clarified that a site could be affected by an algorithmic spam action or simply not ranking well due to other factors.

He emphasized that many sites experiencing ranking drops mistakenly attribute it to an algorithmic spam action when that may not be the case.

“I’ve looked at many sites where people have complained about losing rankings and decide they have a algorithmic spam action against them, but they don’t.”

Sullivan’s full statement will help you understand Google’s transparency challenges.

Additionally, he explains why the desire for manual review to override automated rankings may be misguided.

Two different things. A site could have an algorithmic spam action. A site could be not ranking well because other systems that *are not about spam* just don’t see it as helpful.

— Google SearchLiaison (@searchliaison) May 13, 2024

Challenges In Transparency & Manual Intervention

Sullivan acknowledged the idea of providing more transparency in Search Console, potentially notifying site owners of algorithmic actions similar to manual actions.

However, he highlighted two key challenges:

- Revealing algorithmic spam indicators could allow bad actors to game the system.

- Algorithmic actions are not site-specific and cannot be manually lifted.

Sullivan expressed sympathy for the frustration of not knowing the cause of a traffic drop and the inability to communicate with someone about it.

However, he cautioned against the desire for a manual intervention to override the automated systems’ rankings.

Sullivan states:

“…you don’t really want to think “Oh, I just wish I had a manual action, that would be so much easier.” You really don’t want your individual site coming the attention of our spam analysts. First, it’s not like manual actions are somehow instantly processed. Second, it’s just something we know about a site going forward, especially if it says it has change but hasn’t really.”

Determining Content Helpfulness & Reliability

Moving beyond spam, Sullivan discussed various systems that assess the helpfulness, usefulness, and reliability of individual content and sites.

He acknowledged that these systems are imperfect and some high-quality sites may not be recognized as well as they should be.

“Some of them ranking really well. But they’ve moved down a bit in small positions enough that the traffic drop is notable. They assume they have fundamental issues but don’t, really — which is why we added a whole section about this to our debugging traffic drops page.”

Sullivan revealed ongoing discussions about providing more indicators in Search Console to help creators understand their content’s performance.

“Another thing I’ve been discussing, and I’m not alone in this, is could we do more in Search Console to show some of these indicators. This is all challenging similar to all the stuff I said about spam, about how not wanting to let the systems get gamed, and also how there’s then no button we would push that’s like “actually more useful than our automated systems think — rank it better!” But maybe there’s a way we can find to share more, in a way that helps everyone and coupled with better guidance, would help creators.”

Advocacy For Small Publishers & Positive Progress

In response to a suggestion from Brandon Saltalamacchia, founder of RetroDodo, about manually reviewing “good” sites and providing guidance, Sullivan shared his thoughts on potential solutions.

He mentioned exploring ideas such as self-declaration through structured data for small publishers and learning from that information to make positive changes.

“I have some thoughts I’ve been exploring and proposing on what we might do with small publishers and self-declaring with structured data and how we might learn from that and use that in various ways. Which is getting way ahead of myself and the usual no promises but yes, I think and hope for ways to move ahead more positively.”

Sullivan said he can’t make promises or implement changes overnight, but he expressed hope for finding ways to move forward positively.

Featured Image: Tero Vesalainen/Shutterstock

56 Google Search Statistics to Bookmark for 2024

If you’re curious about the state of Google search in 2024, look no further.

Each year we pick, vet, and categorize a list of up-to-date statistics to give you insights from trusted sources on Google search trends.

- Google has a web index of “about 400 billion documents”. (The Capitol Forum)

- Google’s search index is over 100 million gigabytes in size. (Google)

- There are an estimated 3.5 billion searches on Google each day. (Internet Live Stats)

- 61.5% of desktop searches and 34.4% of mobile searches result in no clicks. (SparkToro)

- 15% of all Google searches have never been searched before. (Google)

- 94.74% of keywords get 10 monthly searches or fewer. (Ahrefs)

- The most searched keyword in the US and globally is “YouTube,” and youtube.com gets the most traffic from Google. (Ahrefs)

- 96.55% of all pages get zero search traffic from Google. (Ahrefs)

- 50-65% of all number-one spots are dominated by featured snippets. (Authority Hacker)

- Reddit is the most popular domain for product review queries. (Detailed)

- Google is the most used search engine in the world, with a mobile market share of 95.32% and a desktop market share of 81.95%. (Statista)

- Google.com generated 84.2 billion visits a month in 2023. (Statista)

- Google generated $307.4 billion in revenue in 2023. (Alphabet Investor Relations)

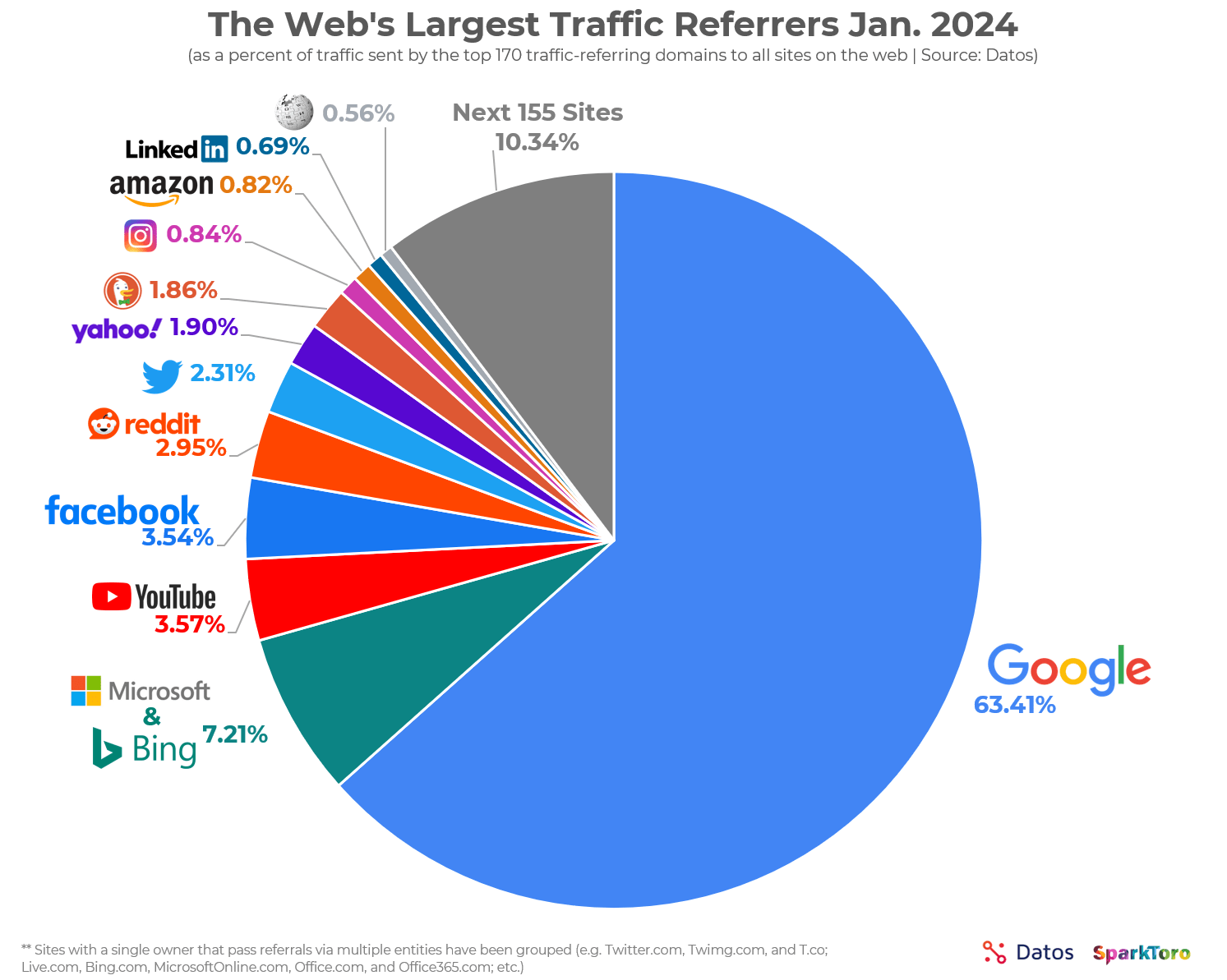

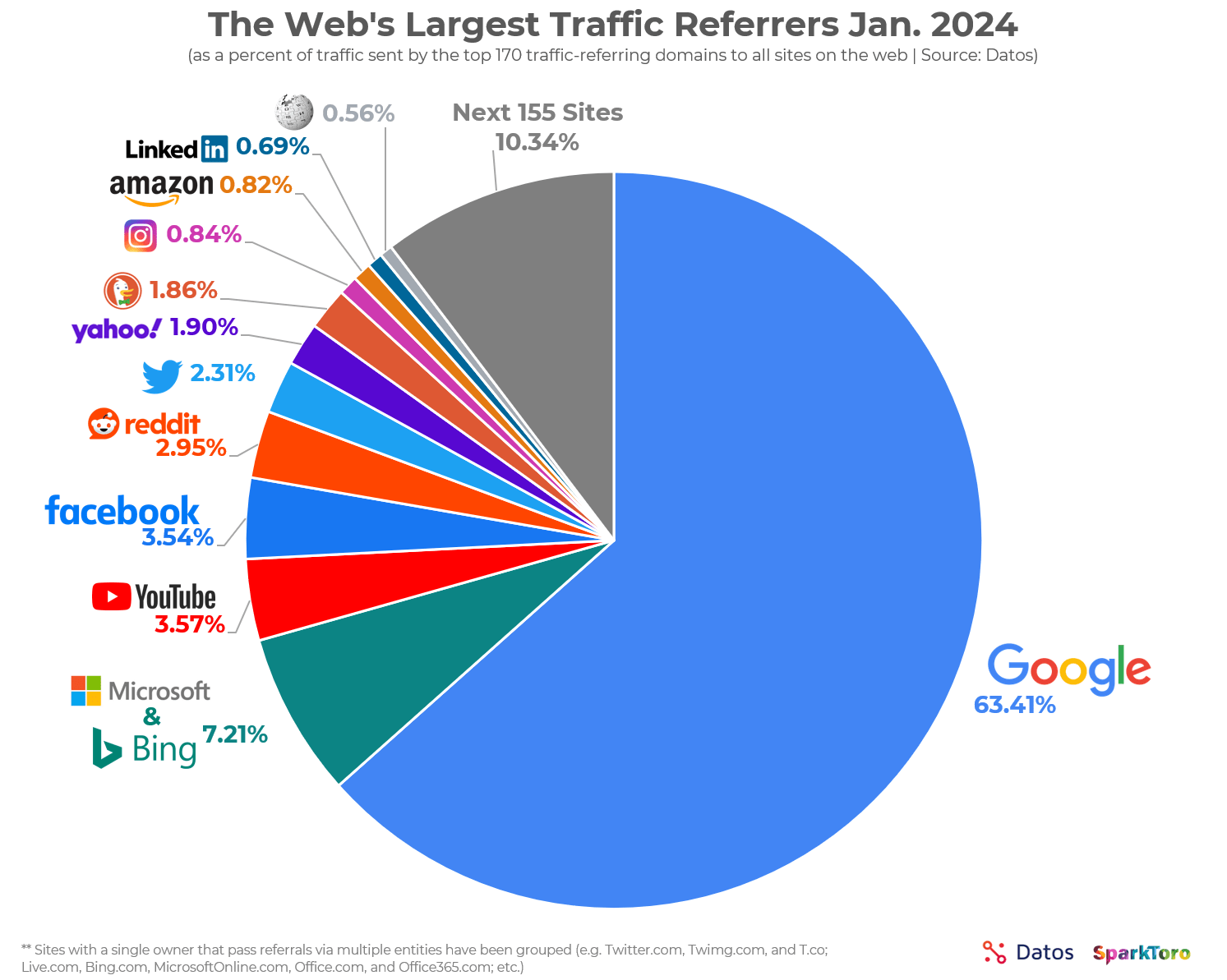

- 63.41% of all US web traffic referrals come from Google. (SparkToro)

- 92.96% of global traffic comes from Google Search, Google Images, and Google Maps. (SparkToro)

- Only 49% of Gen Z women use Google as their search engine. The rest use TikTok. (Search Engine Land)

- 58.67% of all website traffic worldwide comes from mobile phones. (Statista)

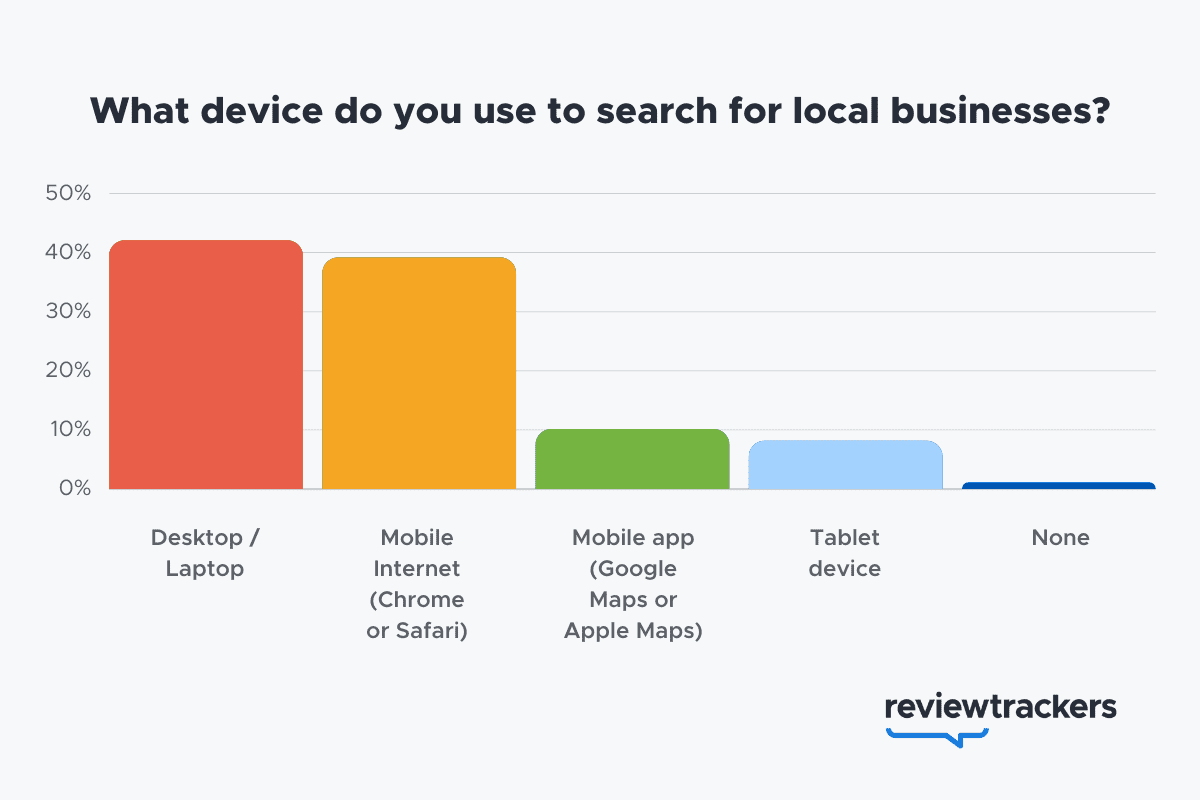

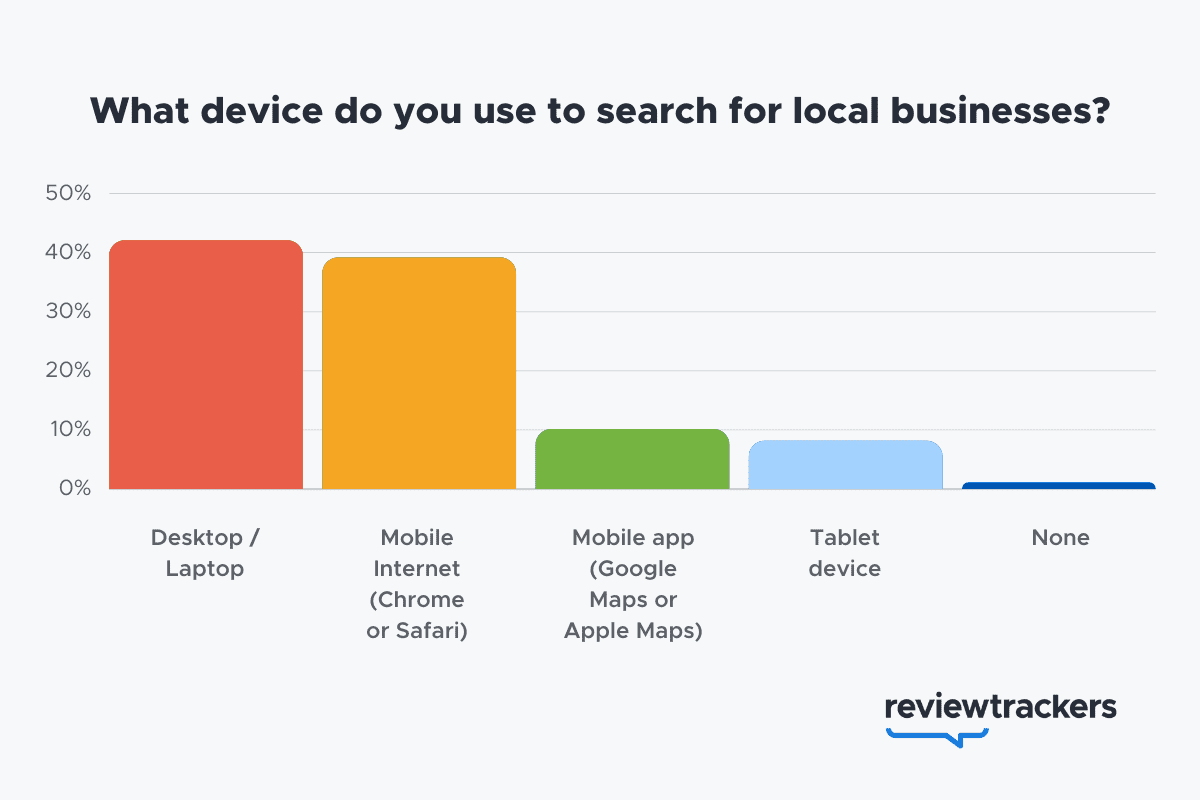

- 57% of local search queries are submitted using a mobile device or tablet. (ReviewTrackers)

- 51% of smartphone users have discovered a new company or product when conducting a search on their smartphones. (Think With Google)

- 54% of smartphone users search for business hours, and 53% search for directions to local stores. (Think With Google)

- 18% of local searches on smartphones lead to a purchase within a day vs. 7% of non-local searches. (Think With Google)

- 56% of in-store shoppers used their smartphones to shop or research items while they were in-store. (Think With Google)

- 60% of smartphone users have contacted a business directly using the search results (e.g., “click to call” option). (Think With Google)

- 63.6% of consumers say they are likely to check reviews on Google before visiting a business location. (ReviewTrackers)

- 88% of consumers would use a business that replies to all of its reviews. (BrightLocal)

- Customers are 2.7 times more likely to consider a business reputable if they find a complete Business Profile on Google Search and Maps. (Google)

- Customers are 70% more likely to visit and 50% more likely to consider purchasing from businesses with a complete Business Profile. (Google)

- 76% of people who search on their smartphones for something nearby visit a business within a day. (Think With Google)

- 28% of searches for something nearby result in a purchase. (Think With Google)

- Mobile searches for “store open near me” (such as “grocery store open near me”) have grown by over 250% in the last two years. (Think With Google)

- People use Google Lens for 12 billion visual searches a month. (Google)

- 50% of online shoppers say images helped them decide what to buy. (Think With Google)

- There are an estimated 136 billion indexed images on Google Image Search. (Photutorial)

- 15.8% of Google SERPs show images. (Moz)

- People click on 3D images almost 50% more than static ones. (Google)

- More than 800 million people use Google Discover monthly to stay updated on their interests. (Google)

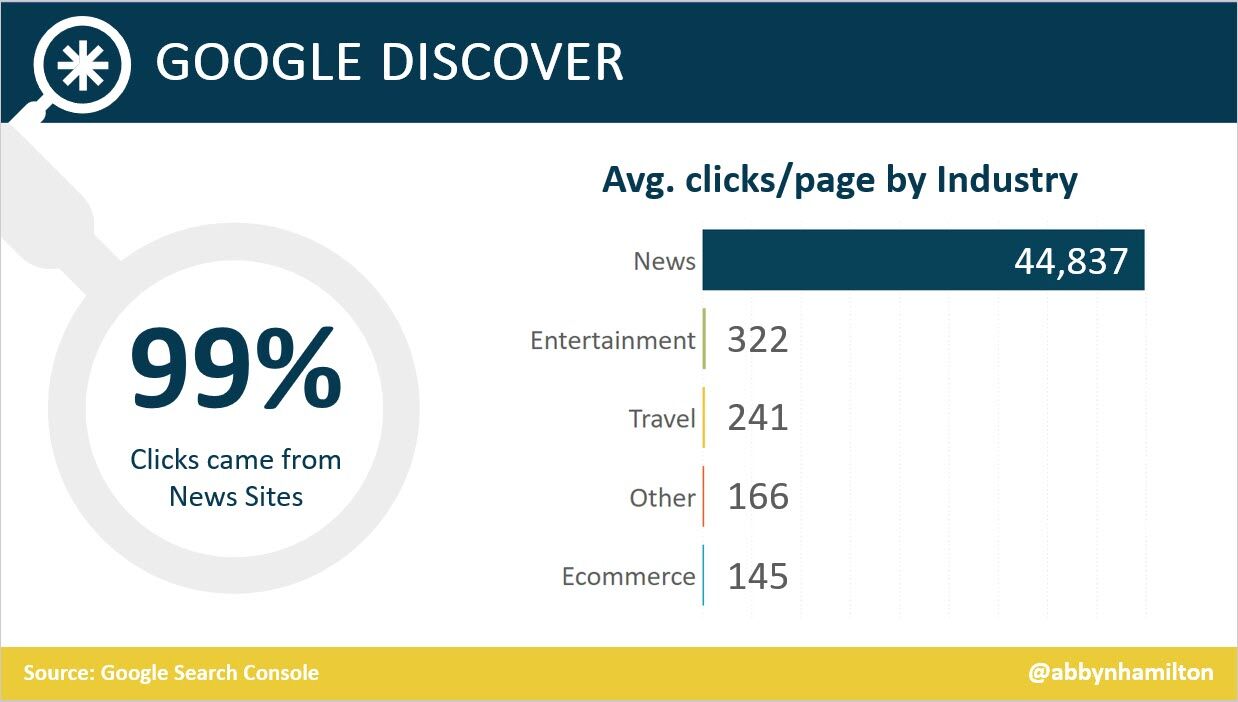

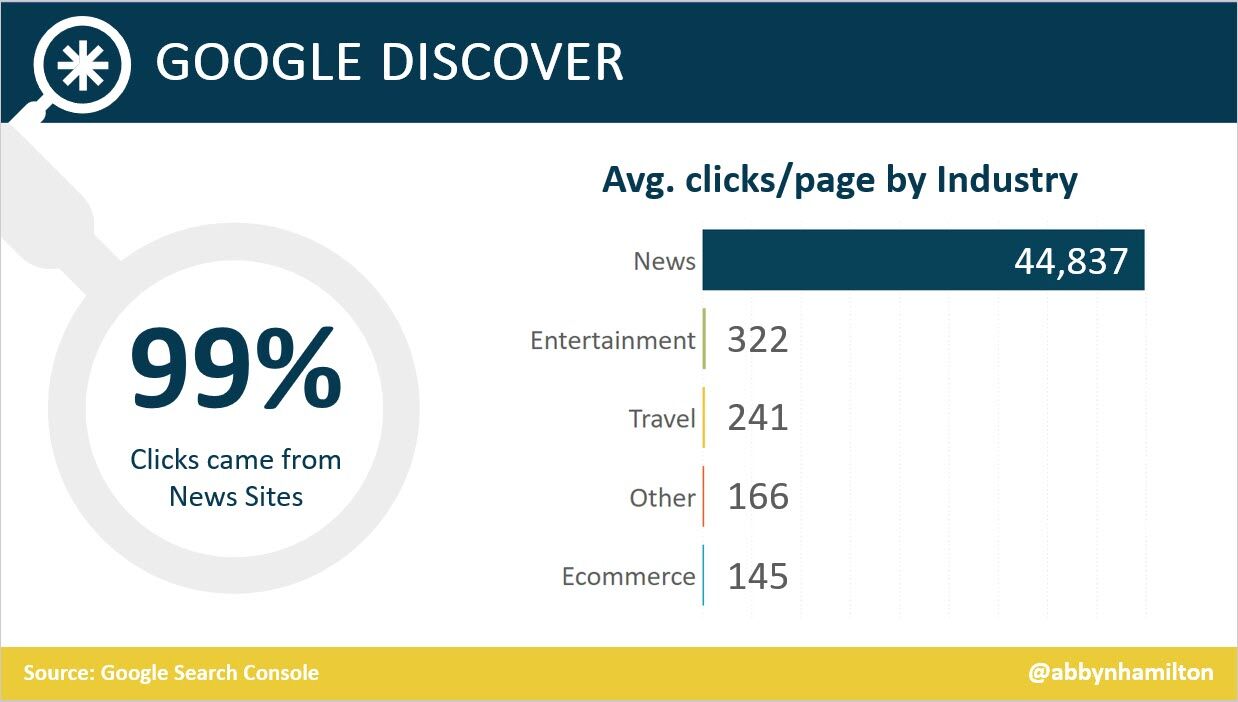

- 46% of Google Discover URLs are news sites, 44% e-commerce, 7% entertainment, and 2% travel. (Search Engine Journal)

- Even though news sites accounted for under 50% of Google Discover URLs, they received 99% of Discover clicks. (Search Engine Journal)

- Most Google Discover URLs only receive traffic for three to four days, with most of that traffic occurring one to two days after publishing. (Search Engine Journal)

- The clickthrough rate (CTR) for Google Discover is 11%. (Search Engine Journal)

- 91.45% of search volumes in Google Ads Keyword Planner are overestimates. (Ahrefs)

- For every $1 a business spends on Google Ads, they receive $8 in profit through Google Search and Ads. (Google)

- Google removed 5.5 billion ads, suspended 12.7 million advertiser accounts, restricted over 6.9 billion ads, and restricted ads from showing up on 2.1 billion publisher pages in 2023. (Google)

- The average shopping click-through rate (CTR) across all industries is 0.86% for Google Ads. (Wordstream)

- The average shopping cost per click (CPC) across all industries is $0.66 for Google Ads. (Wordstream)

- The average shopping conversion rate (CVR) across all industries is 1.91% for Google Ads. (Wordstream)

- 58% of consumers ages 25-34 use voice search daily. (UpCity)

- 16% of people use voice search for local “near me” searches. (UpCity)

- 67% of consumers say they’re very likely to use voice search when seeking information. (UpCity)

- Active users of the Google Assistant grew 4X over the past year, as of 2019. (Think With Google)

- Google Assistant hit 1 billion app installs. (Android Police)

- AI-generated answers from SGE were available for 91% of entertainment queries but only 17% of healthcare queries. (Statista)

- The AI-generated answers in Google’s Search Generative Experience (SGE) do not match any links from the top 10 Google organic search results 93.8% of the time. (Search Engine Journal)

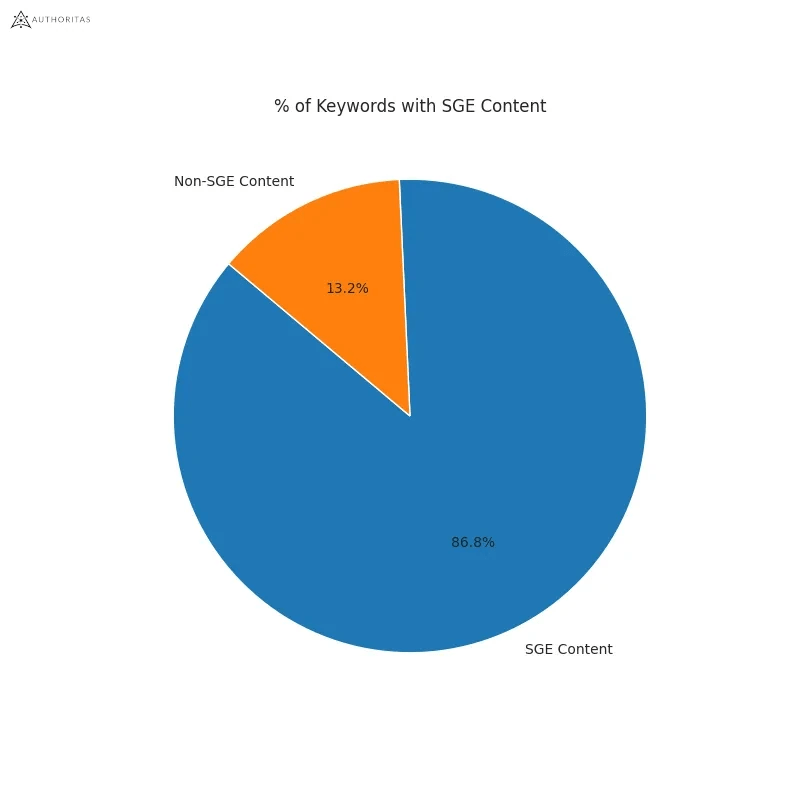

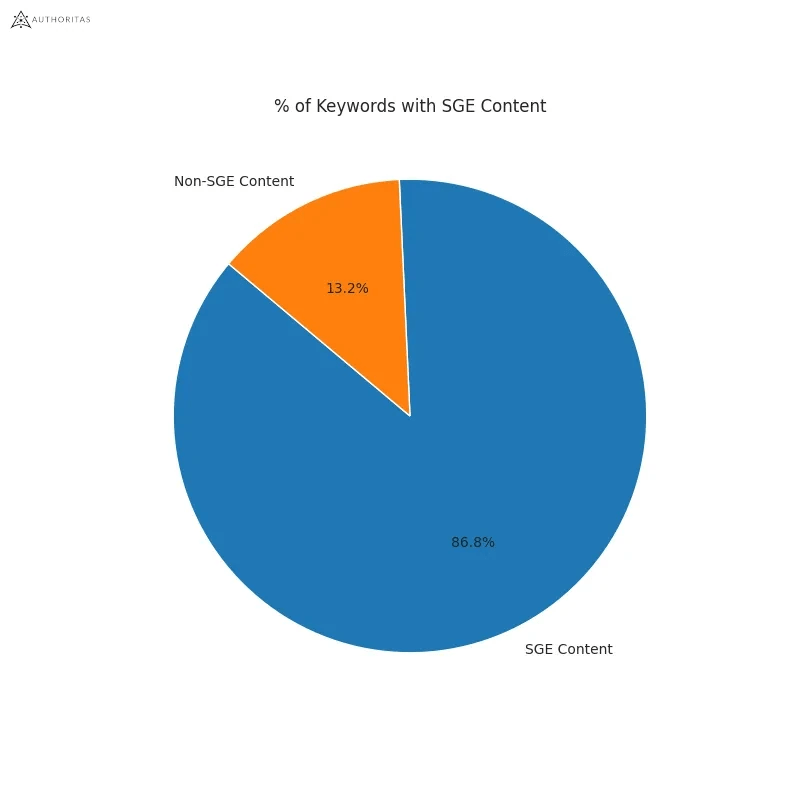

- Google displays a Search Generative element for 86.8% of all search queries. (Authoritas)

- 62% of generative links came from sources outside the top 10 ranking organic domains. Only 20.1% of generative URLs directly match an organic URL ranking on page one. (Authoritas)

- 70% of SEOs said that they were worried about the impact of SGE on organic search. (Aira)
