SEO

HTTP Status Codes: The Complete List

HTTP status codes are server responses to client (typically browser) requests. Each code appears in the status line of the server response as a three-digit number, usually followed by a short description of the status. The codes and their meanings are defined by the Internet Engineering Task Force (IETF), currently in RFC 9110, and the official registry is maintained by the Internet Assigned Numbers Authority (IANA).

The status codes are how your client and a server communicate with each other. You can view any page’s HTTP status codes for free using Ahrefs’ SEO Toolbar by clicking the toolbar icon.

You can also click and expand this to see the full header response, which helps with troubleshooting many technical issues.

There are five ranges for the codes:

- 1xx: Informational

- 2xx: Success

- 3xx: Redirection

- 4xx: Client errors

- 5xx: Server errors

Keep reading to learn what the status codes mean and how Google handles them.
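If you want to group codes by range in a script (for example, when going through a crawl export), a minimal Python sketch could look like the one below. The function names are my own for illustration. It relies on Python's built-in `http.HTTPStatus`, which only knows the officially registered codes, so unofficial ones fall back to a generic label.

```python
from http import HTTPStatus

# The five ranges, keyed by the first digit of the status code.
STATUS_CLASSES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_class(code: int) -> str:
    """Return the range name for a three-digit HTTP status code."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]


def describe(code: int) -> str:
    """Return 'code phrase (range)', using the standard phrase when Python knows it."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        # Unofficial codes such as 444 or 520 are not in the standard library.
        phrase = "unofficial or unassigned"
    return f"{code} {phrase} ({status_class(code)})"
```

For example, `describe(301)` gives "301 Moved Permanently (redirection)", while an unofficial code like 520 is still placed in the server error range.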

1xx status codes indicate the server has received the request and the processing will continue.

100 Continue – Everything is OK right now. Keep going.

101 Switching Protocols – There is a message, such as an upgrade request, that’s changing things to a different protocol.

102 Processing – Things are happening but are not done yet.

103 Early Hints – Lets you preload resources, which can help improve Largest Contentful Paint for Core Web Vitals.

2xx status codes mean that a client request has been received, understood, and accepted.

200 OK – All good. Everything is successful.

201 Created – Similar to 200, but the measure of success is that a new resource has been created.

202 Accepted – A request has been accepted for processing, but it hasn’t been completed yet. It may not have even started yet.

203 Non-Authoritative Information – The request succeeded, but a proxy changed the response after the origin server sent it.

204 No Content – The request succeeded, but there’s no content in the body.

205 Reset Content – Resets the document to the original state, e.g., clearing a form.

206 Partial Content – Only some of the content has been sent.

207 Multi-Status – There are more response codes that could be 2xx, 3xx, 4xx, or 5xx.

208 Already Reported – The server tells the client that the same resource was already listed earlier in the response (used with WebDAV).

218 This is fine – Unofficial use by Apache.

226 IM Used – This allows the server to send changes (diffs) of resources to clients.

How Google handles 2xx

Most 2xxs will allow pages to be indexed. However, 204s will be treated as soft 404s and won’t be indexed.

Soft 404s may also be URLs where the server says it is successful (200), but the content of the page says it doesn’t exist. The code should have been a 404, but the server says everything is fine when it isn’t. This can also happen on pages with little or no content.

You can find these soft 404 errors in the Coverage report in Google Search Console.
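To spot likely soft 404s in your own crawl data before Google reports them, you could run a rough heuristic like this Python sketch. The phrase list and the word-count threshold are assumptions I made for illustration. Google does not publish the rules it uses, so treat the result as a hint, not a verdict.

```python
import re

# Phrases that often appear on "not found" pages. This list is an
# illustrative assumption, not anything Google has published.
NOT_FOUND_PHRASES = (
    "page not found",
    "no longer available",
    "does not exist",
)

MIN_WORDS = 50  # hypothetical threshold for "little or no content"


def looks_like_soft_404(status: int, body_text: str) -> bool:
    """Flag responses that claim success but read like an error or empty page."""
    if status == 204:
        return True  # the article notes 204s are treated as soft 404s
    if status != 200:
        return False  # a real error code is not a *soft* 404
    text = body_text.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Count words to catch pages with little or no content.
    return len(re.findall(r"\w+", text)) < MIN_WORDS
```

A page that returns 200 with the text "Sorry, page not found" would be flagged, while a real 404 would not, because it already sends the correct code.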

3xx status codes indicate the client still needs to do something before the request can be successful.

300 Multiple Choices – There’s more than one possible response, and you may have to choose one of them.

301 Moved Permanently – The old resource now redirects to the new resource.

302 Found – The old resource now redirects to the new resource temporarily.

302 Moved Temporarily – The old resource now redirects to the new resource temporarily.

303 See Other – This is another redirect that indicates the resource may be found somewhere else.

304 Not Modified – Says the page hasn’t been modified. Typically used for caching.

305 Use Proxy – The requested resource is only available if you use a proxy.

306 Switch Proxy – Your next requests should use the proxy specified. This code is no longer used.

307 Temporary Redirect – Has the same functionality as a 302 redirect, except you can’t switch between POST and GET.

307 HSTS Policy – Forces the client to use HTTPS when making requests instead of HTTP.

308 Permanent Redirect – Has the same functionality as a 301 redirect, except you can’t switch between POST and GET.

How Google handles 3xx

301s and 302s are canonicalization signals. They pass PageRank and help determine which URL is shown in Google’s index. A 301 consolidates forward to the new URL, and a 302 consolidates backward to the old URL. If a 302 is left in place long enough or if the URL it’s redirected to already exists, a 302 may be treated as a 301 and consolidated forward instead.

302s may also be used for redirecting users to language or country/language-specific homepages, but the same logic shouldn’t be used for deeper pages.

303s have an undefined treatment from Google. They may be treated as 301 or 302, depending on how they function.

A 307 has two different cases. In cases where it’s a temporary redirect, it will be treated the same as a 302 and attempt to consolidate backward. When web servers require clients to only use HTTPS connections (HSTS policy), Google won’t see the 307 because it’s cached in the browser. The initial hit (without cache) will have a server response code that’s likely a 301 or a 302. But your browser will show you a 307 for subsequent requests.

308s are treated the same as 301s and consolidate forward.

Google will follow up to 10 hops in a redirect chain. It typically follows five hops in one session and resumes where it left off in the next session. Beyond 10 hops, signals may not consolidate to the redirected pages.
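To check whether a chain stays within that limit, you can walk it hop by hop. The Python sketch below is a simplified illustration. The `responses` dictionary is a hypothetical stand-in for real HTTP requests so the example runs offline, and the function name is my own.

```python
REDIRECT_CODES = {301, 302, 303, 307, 308}
MAX_HOPS = 10  # the hop limit this article attributes to Google


def follow_chain(start_url, responses, max_hops=MAX_HOPS):
    """Walk a redirect chain and report where it ends.

    `responses` is a hypothetical dict mapping url -> (status, location).
    Returns (final_url, hops, completed). `completed` is False when the
    chain is longer than `max_hops` or loops back on itself.
    """
    url, hops, seen = start_url, 0, {start_url}
    while True:
        status, location = responses.get(url, (404, None))
        if status not in REDIRECT_CODES or location is None:
            return url, hops, True  # reached a non-redirect response
        if hops == max_hops or location in seen:
            return url, hops, False  # too many hops, or a redirect loop
        seen.add(location)
        url, hops = location, hops + 1
```

With `{"/a": (301, "/b"), "/b": (302, "/c"), "/c": (200, None)}`, starting at `/a` ends at `/c` after two hops.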

You can find these redirect chains in Ahrefs’ Site Audit or our free Ahrefs Webmaster Tools (AWT).

4xx status codes mean the client has an error. The error is usually explained in the response.

400 Bad Request – Something with the client request is wrong. It’s possibly malformed, invalid, or too large. And now the server can’t understand the request.

401 Unauthorized – The client hasn’t identified or verified itself when needed.

402 Payment Required – This doesn’t have an official use, and it’s reserved for the future for some kind of digital payment system. Some merchants use this for their own reasons, e.g., Shopify uses this when a store hasn’t paid its fees, and Stripe uses this for potentially fraudulent payments.

403 Forbidden – The client is known but doesn’t have access rights.

404 Not Found – The requested resource isn’t found.

405 Method Not Allowed – The request method used isn’t supported, e.g., a form needs to use POST but uses GET instead.

406 Not Acceptable – The accept header requested by the client can’t be fulfilled by the server.

407 Proxy Authentication Required – The authentication needs to be done via proxy.

408 Request Timeout – The server timed out waiting for the client’s request and decided to close the connection.

409 Conflict – The request conflicts with the state of the server.

410 Gone – Similar to a 404 where the request isn’t found, but this also says it won’t be available again.

411 Length Required – The request doesn’t contain a content-length field when it is required.

412 Precondition Failed – The client puts a condition on the request that the server doesn’t meet.

413 Payload Too Large – The request is larger than what the server allows.

414 URI Too Long – The URI requested is longer than the server allows.

415 Unsupported Media Type – The format requested isn’t supported by the server.

416 Range Not Satisfiable – The client asks for a portion of the file that can’t be supplied by the server, e.g., it asks for a part of the file beyond where the file actually ends.

417 Expectation Failed – The expectation indicated in the “Expect” request header can’t be met by the server.

418 I’m a Teapot – Happens when you try to brew coffee in a teapot. This started as an April Fools’ joke in 1998 (RFC 2324). It isn’t an official standard, but the code is reserved so nothing else uses it. With everything being smart devices these days, this could potentially be used.

419 Page Expired – Unofficial use by Laravel Framework.

420 Method Failure – Unofficial use by Spring Framework.

420 Enhance Your Calm – Unofficial use by Twitter.

421 Misdirected Request – The server that a request was sent to can’t respond to it.

422 Unprocessable Entity – There are semantic errors in the request.

423 Locked – The requested resource is locked.

424 Failed Dependency – This failure happens because it needs another request that also failed.

425 Too Early – The server is unwilling to process the request at this time because the request might be replayed.

426 Upgrade Required – The server refuses the request until the client uses a newer protocol. What needs to be upgraded is indicated in the “Upgrade” header.

428 Precondition Required – The server requires the request to be conditional.

429 Too Many Requests – This is a form of rate-limiting to protect the server because the client sent too many requests to the server too fast.

430 Request Header Fields Too Large – Unofficial use by Shopify.

431 Request Header Fields Too Large – The server won’t process the request because the header fields are too large.

440 Login Time-out – Unofficial use by IIS.

444 No Response – Unofficial use by nginx.

449 Retry With – Unofficial use by IIS.

450 Blocked by Windows Parental Controls – Unofficial use by Microsoft.

451 Unavailable For Legal Reasons – This is blocked for some kind of legal reason. You’ll see it sometimes with country-level blocks, e.g., blocked news or videos, due to privacy or licensing. You may see it for DMCA takedowns. The code itself is a reference to the novel Fahrenheit 451.

451 Redirect – Unofficial use by IIS.

460 – Unofficial use by AWS Elastic Load Balancer.

463 – Unofficial use by AWS Elastic Load Balancer.

494 Request header too large – Unofficial use by nginx.

495 SSL Certificate Error – Unofficial use by nginx.

496 SSL Certificate Required – Unofficial use by nginx.

497 HTTP Request Sent to HTTPS Port – Unofficial use by nginx.

498 Invalid Token – Unofficial use by Esri.

499 Client Closed Request – Unofficial use by nginx.

499 Token Required – Unofficial use by Esri.

How Google handles 4xx

4xxs will cause pages to drop from the index.

404s and 410s have a similar treatment. Both drop pages from the index, but 410s are slightly faster. In practical applications, they’re roughly the same.

421s can be used by site owners to opt out of Google crawling their site with HTTP/2.

429s are a little special because they are generally treated as server errors and will cause Google to slow down crawling. But eventually, Google will drop these pages from the index as well.

You can find 4xx errors in Site Audit or our free Ahrefs Webmaster Tools.

Another thing you may want to check is if any of these 404 pages have links to them. If the links point to a 404 page, they don’t count for your website. More than likely, you just need to 301 redirect each of these pages to a relevant page.

Here’s how to find those opportunities:

- Paste your domain into Site Explorer (also accessible for free in AWT)

- Go to the Best by links report

- Add a “404 not found” HTTP response filter

I usually sort this by “Referring domains.”
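If you export that report, the same filter and sort take only a few lines of Python. This is a sketch that assumes a hypothetical export with a URL, a status code, and a referring domains count per page. The field names are mine, not Ahrefs’ column names.

```python
from dataclasses import dataclass


@dataclass
class Page:
    # Hypothetical fields standing in for columns of an exported report.
    url: str
    status: int
    referring_domains: int


def broken_pages_with_links(pages):
    """Return 404 pages that still have links, most referring domains first."""
    broken = [p for p in pages if p.status == 404 and p.referring_domains > 0]
    return sorted(broken, key=lambda p: p.referring_domains, reverse=True)
```

The pages at the top of the result are usually the first candidates for a 301 redirect to a relevant page.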

5xx status codes mean the server has an error, and it knows it can’t carry out the request. The response will contain a reason for the error.

500 Internal Server Error – The server encounters some kind of issue and doesn’t have a better or more specific error code.

501 Not Implemented – The request method isn’t supported by the server.

502 Bad Gateway – The server was in the middle of a request and used for routing. But it has received a bad response from the server it was routing to.

503 Service Unavailable – The server is overloaded or down for maintenance and can’t handle the request right now. It will probably be back up soon.

504 Gateway Timeout – The server was in the middle of a request and used for routing. But it has not received a timely response from the server it was routing to.

505 HTTP Version Not Supported – This is exactly what it says: The HTTP protocol version in the request isn’t supported by the server.

506 Variant Also Negotiates – The server has a configuration error: the variant it chose during content negotiation is itself set up to negotiate, which creates a circular reference.

507 Insufficient Storage – The server can’t store what it needs to store to complete the request.

508 Loop Detected – The server found an infinite loop when trying to process the request.

509 Bandwidth Limit Exceeded – Unofficial use by Apache and cPanel.

510 Not Extended – More extensions to the request are required before the server will fulfill it.

511 Network Authentication Required – The client needs authentication before the server allows network access.

520 Web Server Returned an Unknown Error – Unofficial use by Cloudflare.

521 Web Server is Down – Unofficial use by Cloudflare.

522 Connection Timed Out – Unofficial use by Cloudflare.

523 Origin is Unreachable – Unofficial use by Cloudflare.

524 A Timeout Occurred – Unofficial use by Cloudflare.

525 SSL Handshake Failed – Unofficial use by Cloudflare.

526 Invalid SSL Certificate – Unofficial use by Cloudflare.

527 Railgun Error – Unofficial use by Cloudflare.

529 Site is overloaded – Unofficial use by Qualys.

530 – Unofficial use by Cloudflare.

530 Site is frozen – Unofficial use by Pantheon.

561 Unauthorized – Unofficial use by AWS Elastic Load Balancer.

598 (Informal convention) Network read timeout error – Unofficial use by some HTTP proxies.

How Google handles 5xx

5xx errors will slow down crawling. Eventually, the pages will be dropped from Google’s index. You can find these in Site Audit or Ahrefs Webmaster Tools, but they may be different from the 5xxs that Google sees. Since these are server errors, they may not always be present.
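To pull the “How Google handles” sections together, here is a small Python lookup that summarizes the treatment described in this article. It is a simplification of the text above, not Google’s actual logic, and it ignores nuances such as a long-standing 302 eventually being treated like a 301.

```python
def google_handling(code: int) -> str:
    """Summarize, per this article, how Google tends to treat a status code."""
    if code == 204:
        return "treated as a soft 404, not indexed"
    if 200 <= code < 300:
        return "eligible for indexing"
    if code in (301, 308):
        return "signals consolidate forward to the new URL"
    if code in (302, 307):
        return "signals consolidate backward to the old URL"
    if 300 <= code < 400:
        return "treatment undefined or not covered here"
    if code == 429 or 500 <= code < 600:
        return "crawling slows, page eventually dropped"
    if 400 <= code < 500:
        return "page dropped from the index"
    return "not covered here"
```

Note that the 429 check runs before the general 4xx check because, as described above, Google generally treats 429s like server errors.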


SEO

56 Google Search Statistics to Bookmark for 2024

If you’re curious about the state of Google search in 2024, look no further.

Each year we pick, vet, and categorize a list of up-to-date statistics to give you insights from trusted sources on Google search trends.


SEO

How To Use ChatGPT For Keyword Research

Anyone not using ChatGPT for keyword research is missing a trick.

You can save time and understand an entire topic in seconds instead of hours.

In this article, I outline my most effective ChatGPT prompts for keyword research and teach you how I put them together so that you, too, can take, edit, and enhance them even further.

But before we jump into the prompts, I want to emphasize that you shouldn’t replace keyword research tools or disregard traditional keyword research methods.

ChatGPT can make mistakes. It can even create new keywords if you give it the right prompt. For example, I asked it to provide me with a unique keyword for the topic “SEO” that had never been searched before.

“Interstellar Internet SEO: Optimizing content for the theoretical concept of an interstellar internet, considering the challenges of space-time and interplanetary communication delays.”

Although I want to jump into my LinkedIn profile and update my title to “Interstellar Internet SEO Consultant,” unfortunately, no one has searched that (and they probably never will)!

You must not blindly rely on the data you get back from ChatGPT.

What you can rely on ChatGPT for is the topic ideation stage of keyword research and inspiration.

ChatGPT is a large language model trained with massive amounts of data to accurately predict what word will come next in a sentence. However, it does not know how to do keyword research yet.

Instead, think of ChatGPT as an expert on any topic who will share what it knows if you ask the right question.

In this guide, that is exactly what I aim to teach you how to do – the most essential prompts you need to know when performing topical keyword research.

Best ChatGPT Keyword Research Prompts

The following ChatGPT keyword research prompts can be used on any niche, even a topic to which you are brand new.

For this demonstration, let’s use the topic of “SEO.”

Generating Keyword Ideas Based On A Topic

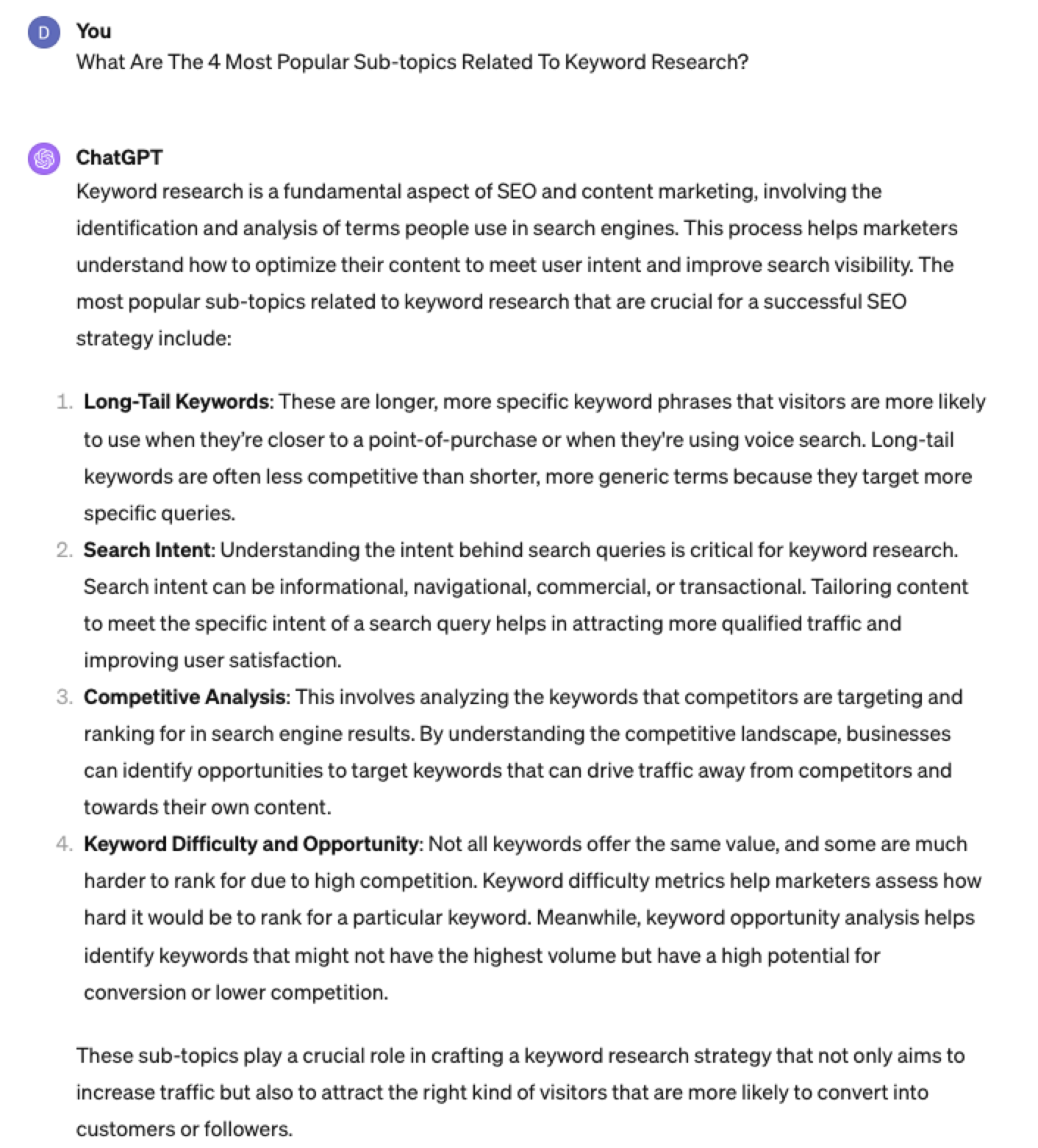

What Are The {X} Most Popular Sub-topics Related To {Topic}?

The first prompt is to give you an idea of the niche.

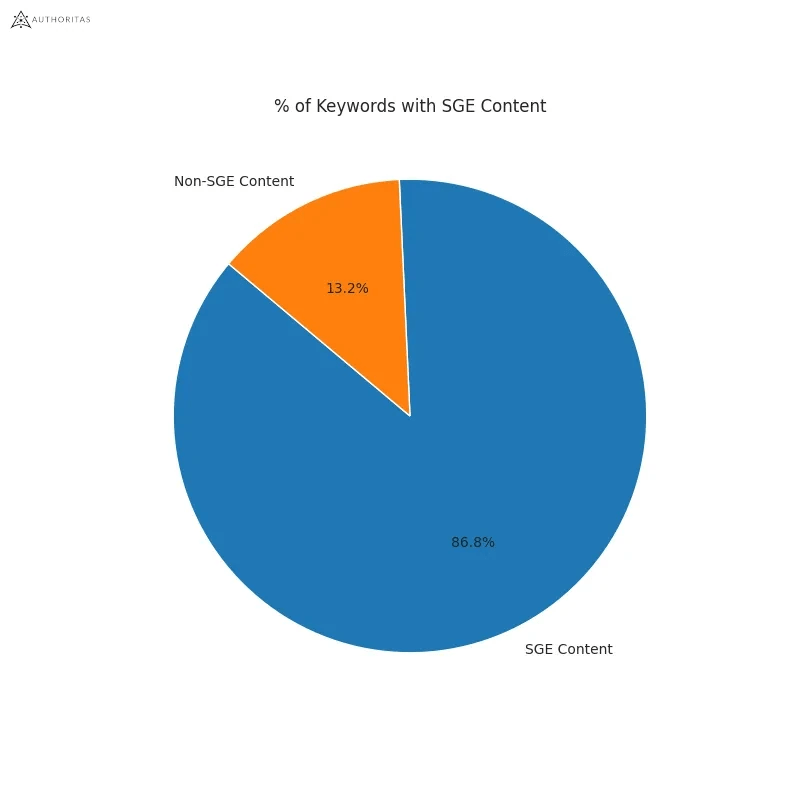

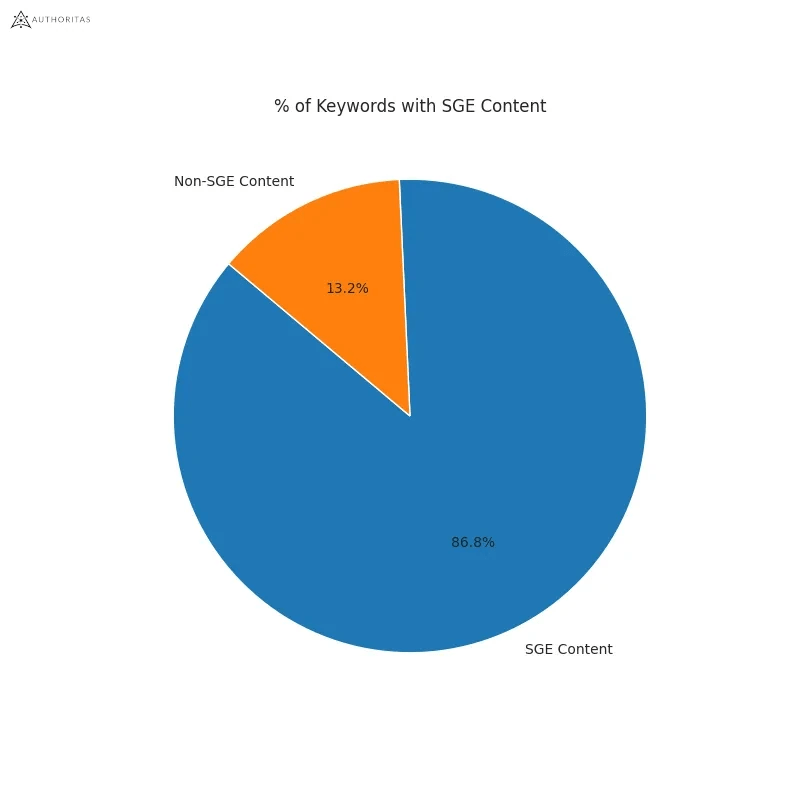

As shown above, ChatGPT did a great job understanding and breaking down SEO into three pillars: on-page, off-page & technical.

The key to the following prompt is to take one of the topics ChatGPT has given and query the sub-topics.

What Are The {X} Most Popular Sub-topics Related To {Sub-topic}?

For this example, let’s query, “What are the most popular sub-topics related to keyword research?”

Having done keyword research for over 10 years, I would expect it to output information related to keyword research metrics, the types of keywords, and intent.

Let’s see.

Screenshot from ChatGPT 4, April 2024

Again, right on the money.

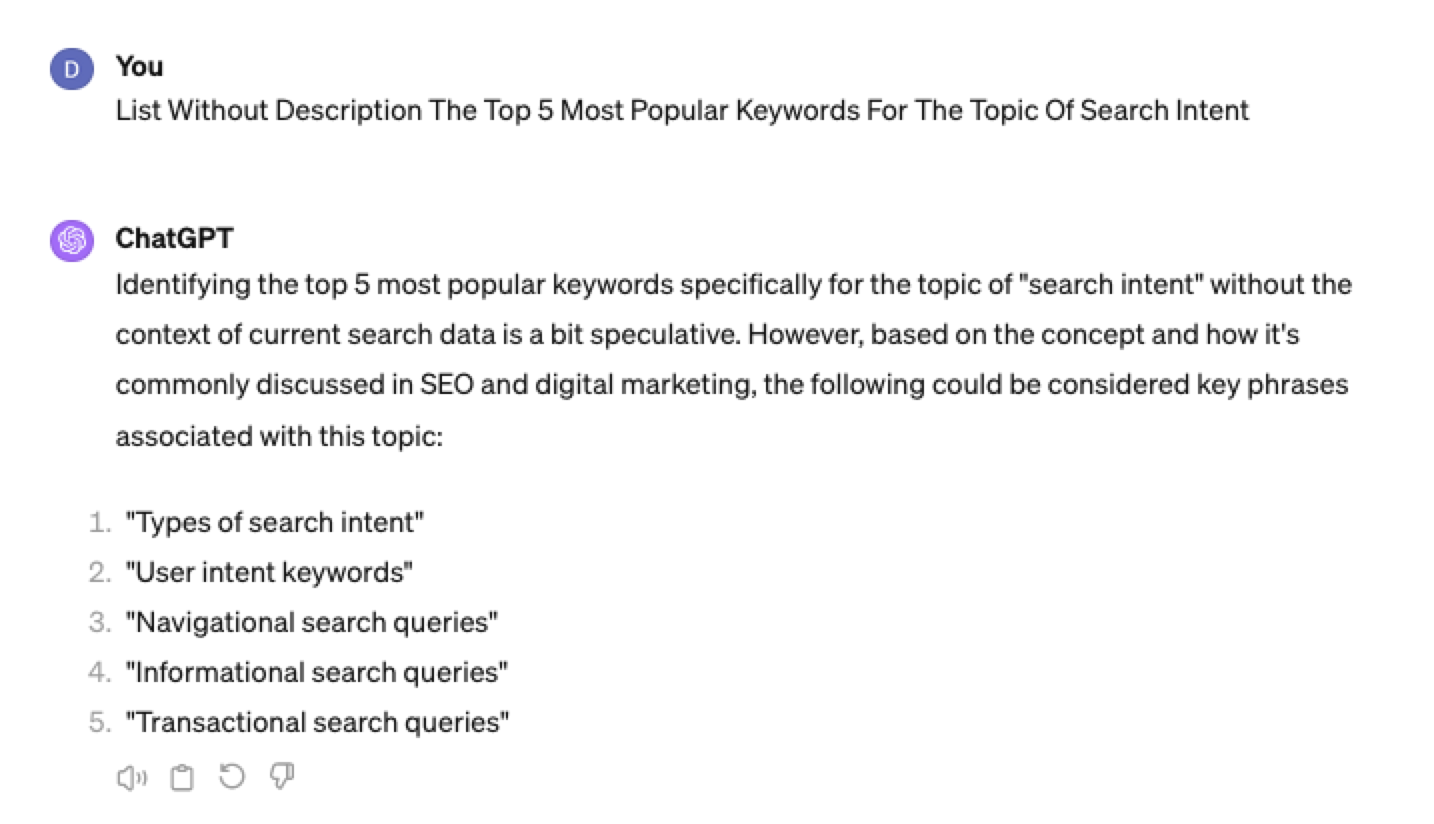

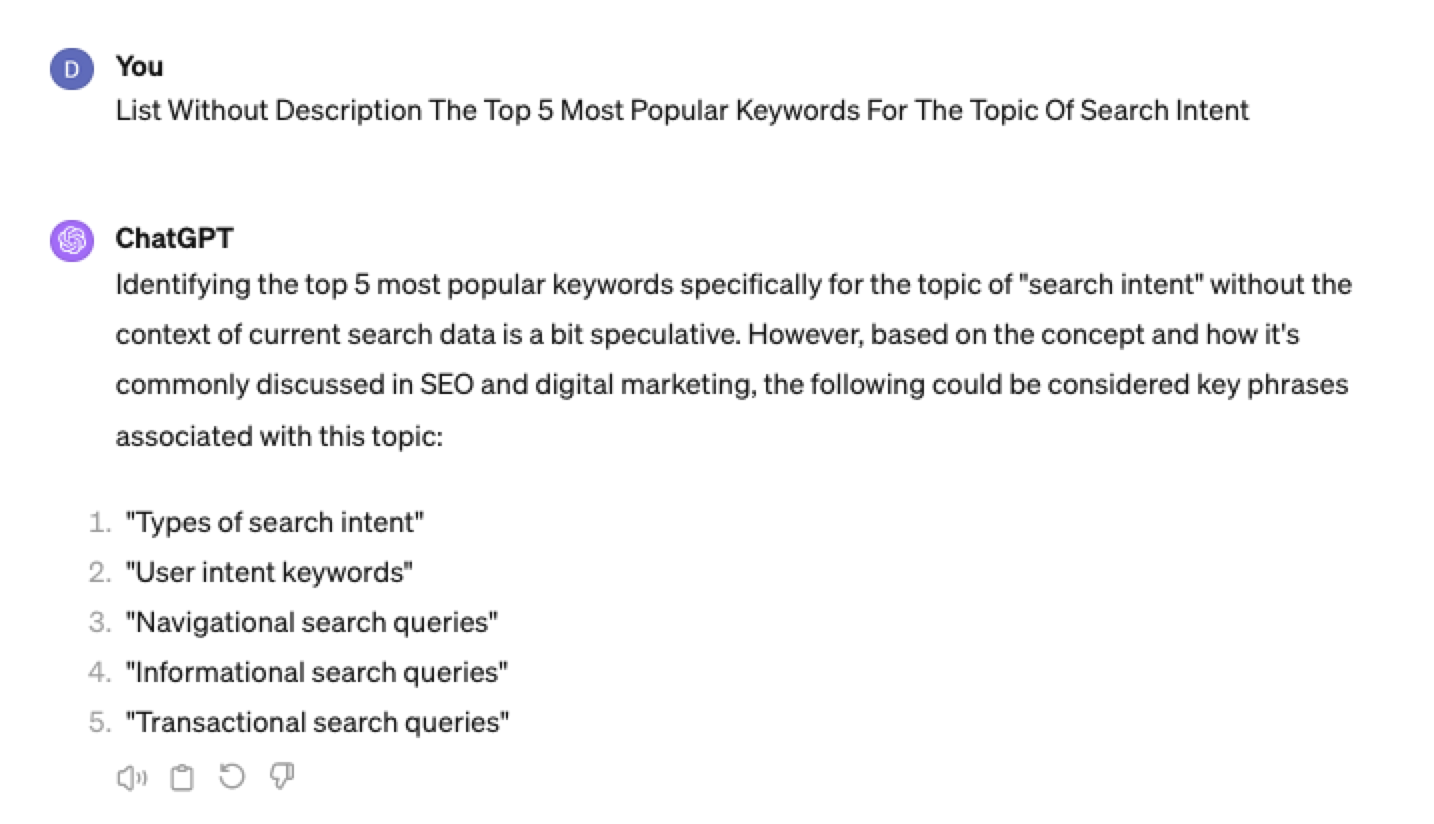

To get the keywords you want without having ChatGPT describe each answer, use the prompt “list without description.”

Here is an example of that.

List Without Description The Top {X} Most Popular Keywords For The Topic Of {X}

You can even branch these keywords out further into their long-tail.

Example prompt:

List Without Description The Top {X} Most Popular Long-tail Keywords For The Topic “{X}”

Screenshot ChatGPT 4,April 2024

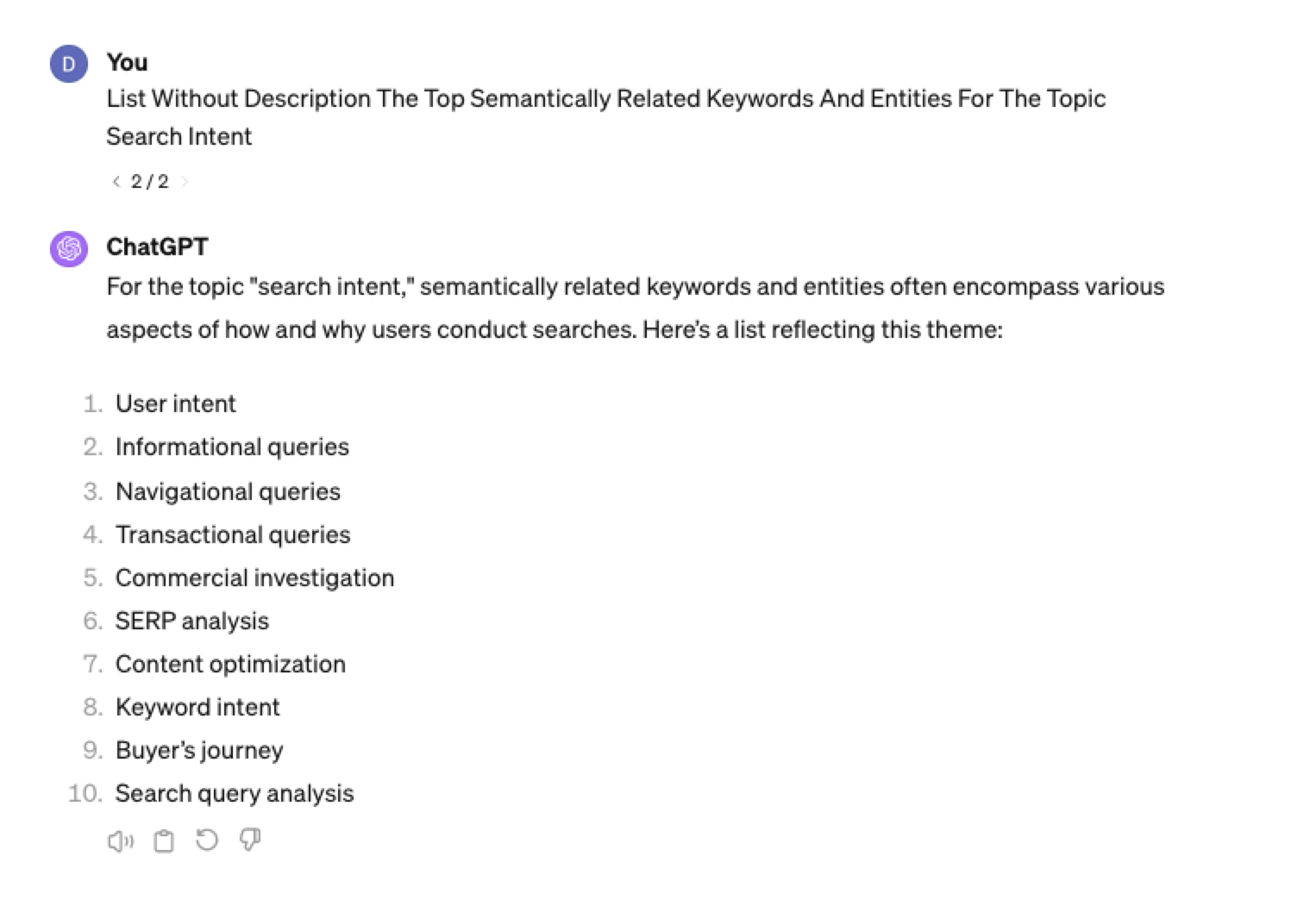

Screenshot ChatGPT 4,April 2024List Without Description The Top Semantically Related Keywords And Entities For The Topic {X}

You can even ask ChatGPT what any topic’s semantically related keywords and entities are!

Screenshot ChatGPT 4, April 2024

Tip: The Onion Method Of Prompting ChatGPT

When you are happy with a series of prompts, add them all to one prompt. For example, so far in this article, we have asked ChatGPT the following:

- What are the four most popular sub-topics related to SEO?

- What are the four most popular sub-topics related to keyword research?

- List without description the top five most popular keywords for “keyword intent.”

- List without description the top five most popular long-tail keywords for the topic “keyword intent types.”

- List without description the top semantically related keywords and entities for the topic “types of keyword intent in SEO.”

Combine all five into one prompt by telling ChatGPT to perform a series of steps. Example:

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, Step 4, and Step 5”

Full example:

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, Step 4 and Step 5. Step 1 – Generate an answer for the 3 most popular sub-topics related to {Topic}. Step 2 – Generate 3 of the most popular sub-topics related to each answer. Step 3 – Take those answers and list without description their top 3 most popular keywords. Step 4 – For the answers given of their most popular keywords, provide 3 long-tail keywords. Step 5 – For each long-tail keyword offered in the response, list without description 3 of their top semantically related keywords and entities.”
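If you reuse this pattern often, you can assemble the combined prompt with a short script instead of typing it out each time. The Python sketch below is one possible way to do it. The function name is my own, and it simply joins your individual prompts into numbered steps in the same shape as the example above.

```python
def build_onion_prompt(steps):
    """Combine individual prompts into one numbered, multi-step prompt."""
    if not steps:
        raise ValueError("at least one step is required")
    labels = [f"Step {n}" for n in range(1, len(steps) + 1)]
    parts = []
    for label, step in zip(labels, steps):
        text = step.strip()
        # Make sure every step ends with punctuation so steps don't run together.
        if not text.endswith((".", "?", "!")):
            text += "."
        parts.append(f"{label} – {text}")
    return (
        "Perform the following steps in a consecutive order: "
        + ", ".join(labels)
        + ". "
        + " ".join(parts)
    )
```

Pass it a list such as `["Generate the 3 most popular sub-topics related to SEO", "List without description the top 3 keywords for each"]` and paste the result into ChatGPT.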

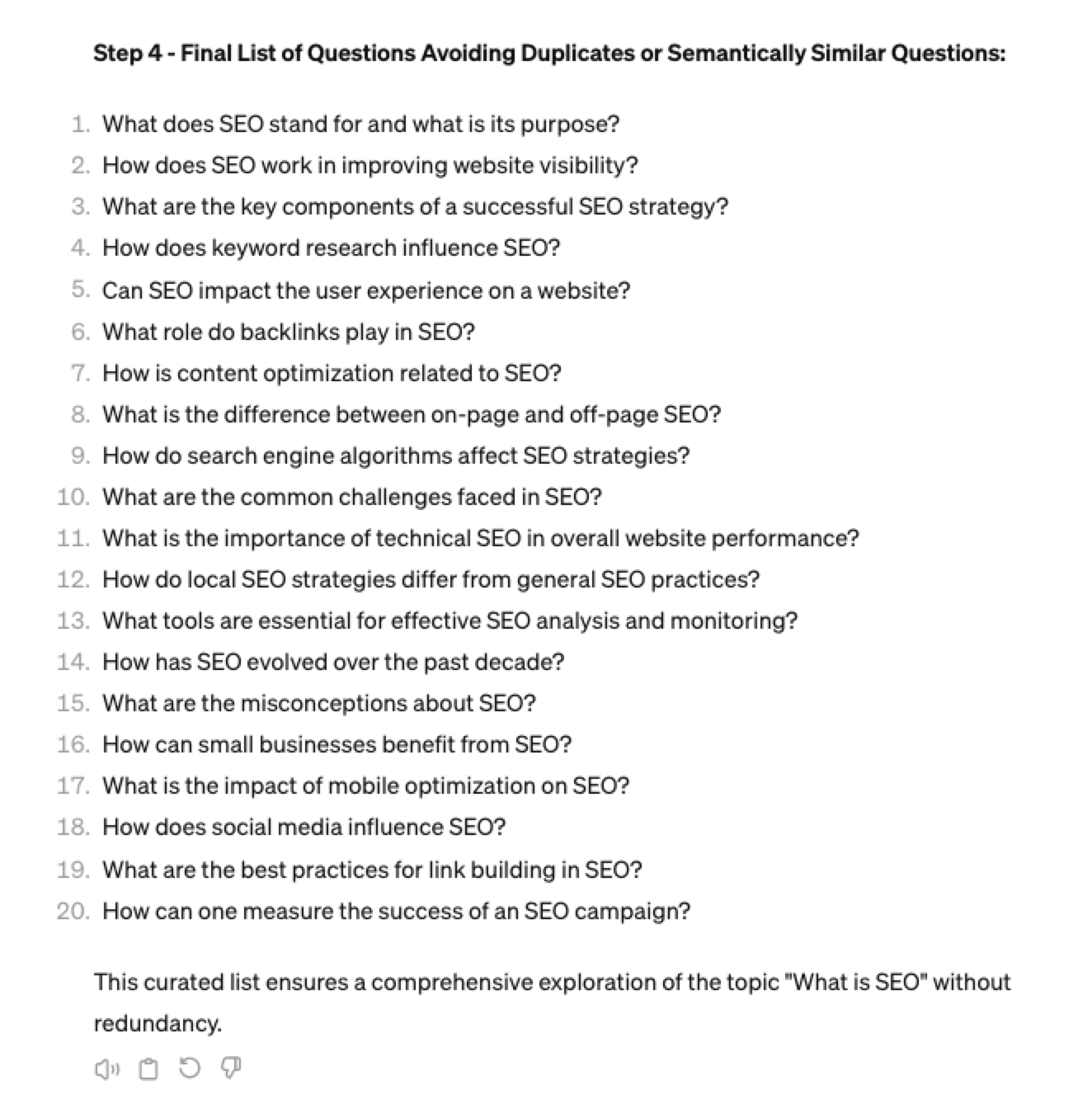

Generating Keyword Ideas Based On A Question

Taking the steps approach from above, we can get ChatGPT to help streamline getting keyword ideas based on a question. For example, let’s ask, “What is SEO?”

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, and Step 4. Step 1 – Generate 10 questions about “{Question}”. Step 2 – Generate 5 more questions about “{Question}” that do not repeat the above. Step 3 – Generate 5 more questions about “{Question}” that do not repeat the above. Step 4 – Based on the above Steps 1, 2, and 3, suggest a final list of questions, avoiding duplicates or semantically similar questions.”

Screenshot ChatGPT 4, April 2024

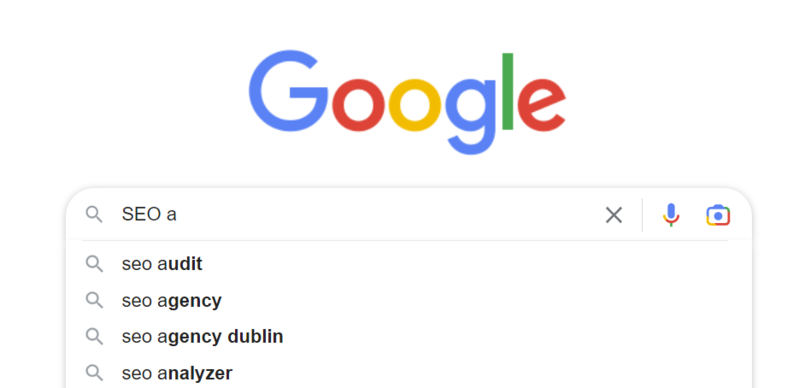

Screenshot ChatGPT 4, April 2024Generating Keyword Ideas Using ChatGPT Based On The Alphabet Soup Method

One of my favorite manual methods, which doesn’t even need a keyword research tool, is to generate keyword research ideas from Google autocomplete, going from A to Z.

Screenshot from Google autocomplete, April 2024

You can also do this using ChatGPT.

Example prompt:

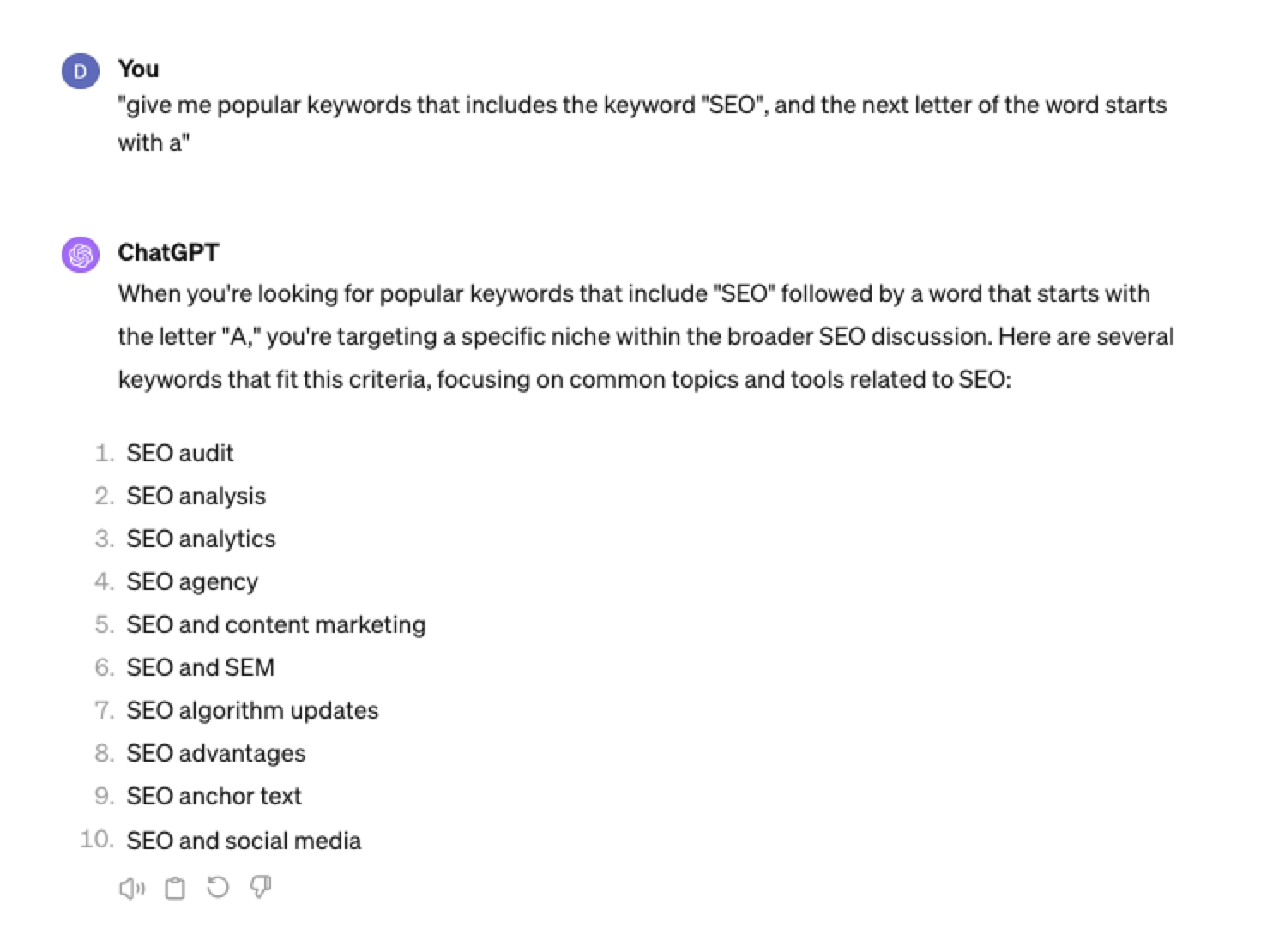

“Give me popular keywords that include the keyword “SEO”, and the next letter of the word starts with a”

Screenshot from ChatGPT 4, April 2024

Tip: Using the onion prompting method above, we can combine all this in one prompt.

“Give me five popular keywords that include “SEO” in the word, and the following letter starts with a. Once the answer has been done, move on to giving five more popular keywords that include “SEO” for each letter of the alphabet b to z.”
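If you would rather send one prompt per letter, or want to check that the keywords ChatGPT returns really follow the pattern, a small Python sketch like this can help. Both function names are hypothetical. The check only handles a single-word seed such as “SEO”.

```python
import string


def alphabet_soup_prompts(seed, per_letter=5):
    """Build one prompt per letter of the alphabet, a to z."""
    return [
        f'Give me {per_letter} popular keywords that include "{seed}" '
        f"where the next word starts with the letter {letter}."
        for letter in string.ascii_lowercase
    ]


def follows_pattern(keyword, seed, letter):
    """True if the word right after `seed` in `keyword` starts with `letter`.

    Works for single-word seeds only.
    """
    words = keyword.lower().split()
    seed, letter = seed.lower(), letter.lower()
    return any(
        word == seed and following.startswith(letter)
        for word, following in zip(words, words[1:])
    )
```

Because ChatGPT can make mistakes, filtering its answers with a check like `follows_pattern` is a cheap way to catch keywords that ignore the letter you asked for.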

Generating Keyword Ideas Based On User Personas

When it comes to keyword research, understanding user personas is essential for understanding your target audience and keeping your keyword research focused and targeted. ChatGPT may help you get an initial understanding of customer personas.

Example prompt:

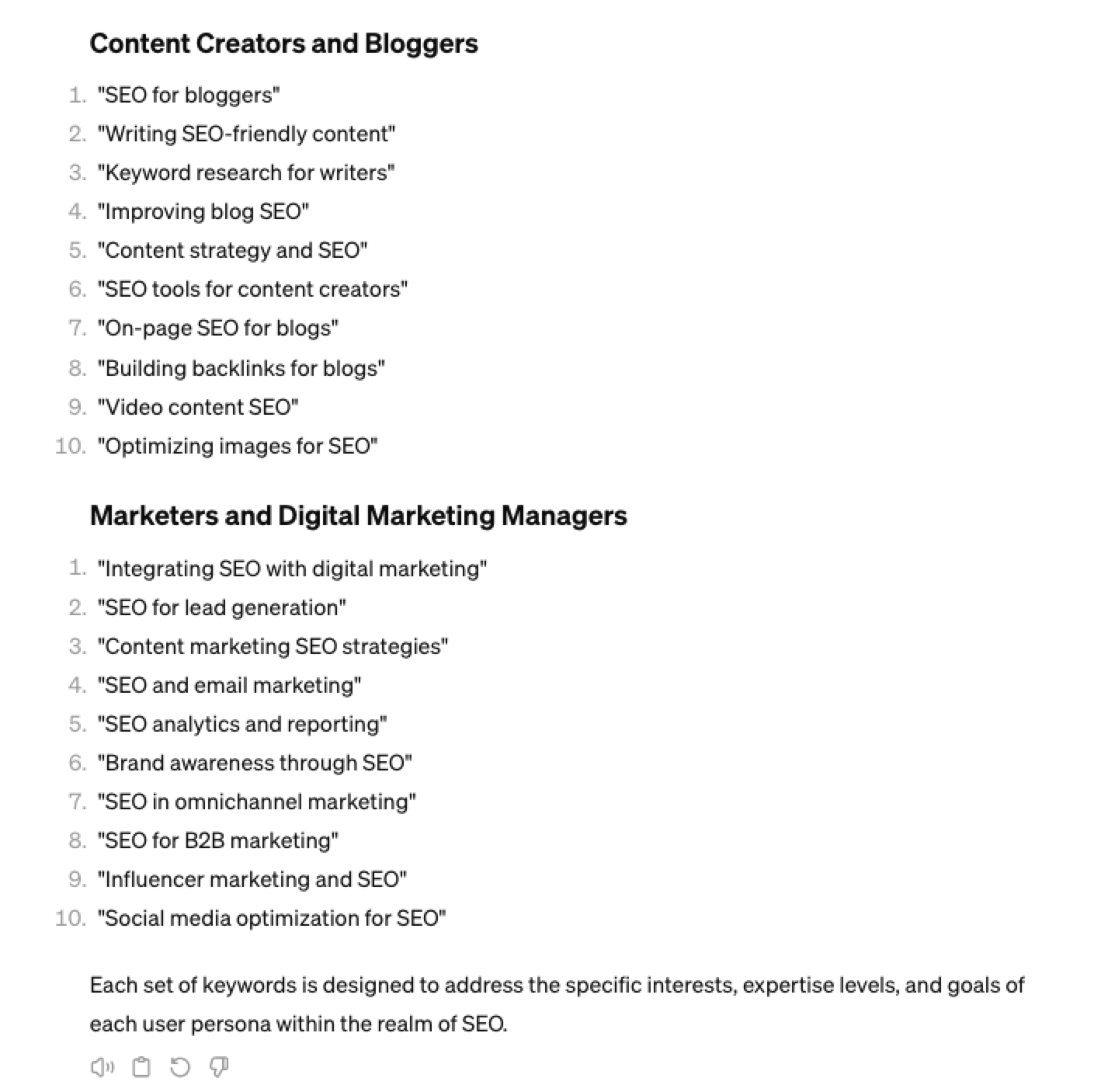

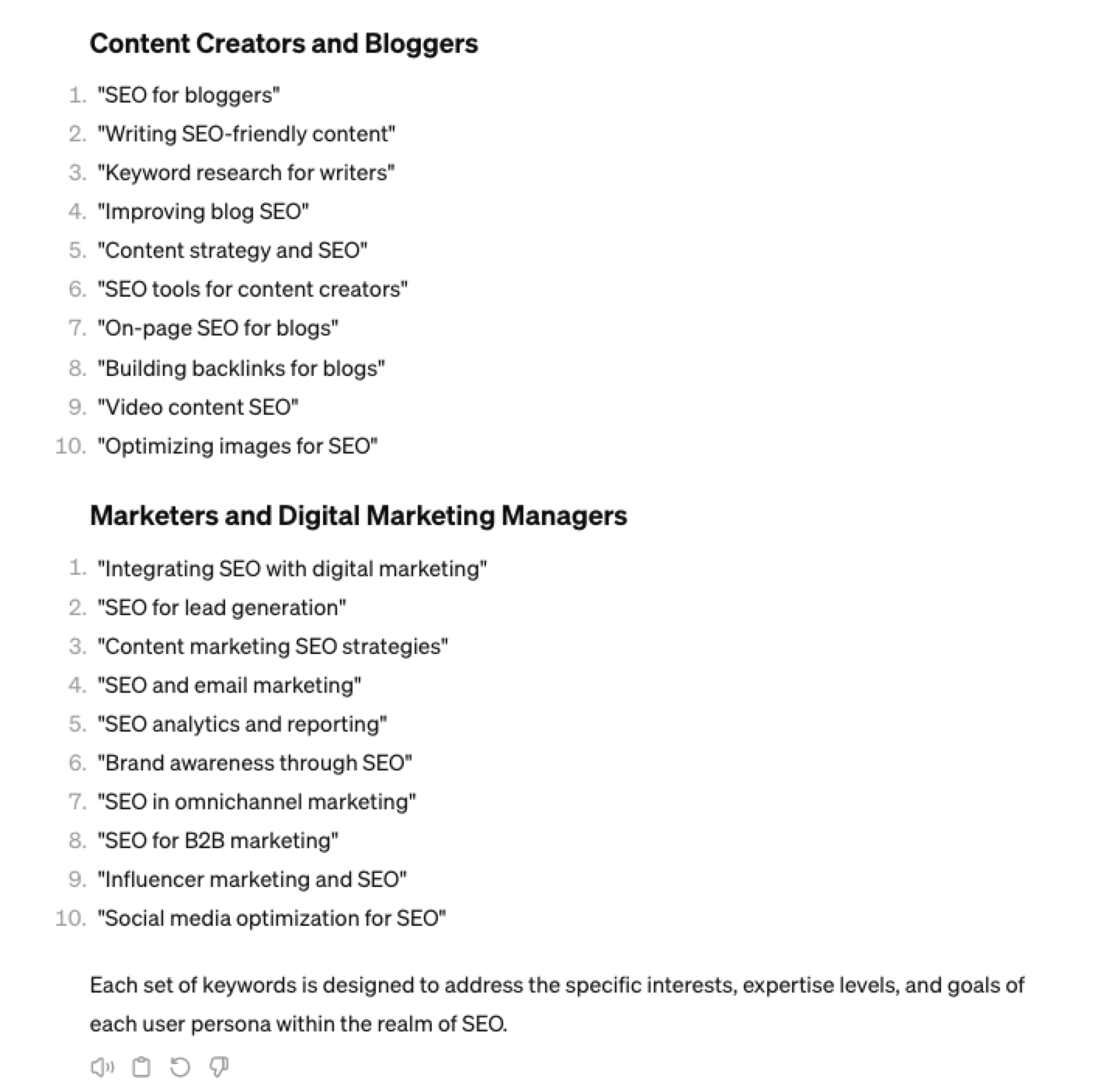

“For the topic of “{Topic}” list 10 keywords each for the different types of user personas”

Screenshot from ChatGPT 4, April 2024

You could even go a step further and ask for questions based on those topics that those specific user personas may be searching for:

Screenshot ChatGPT 4, April 2024

You can also get the keywords to target based on those questions:

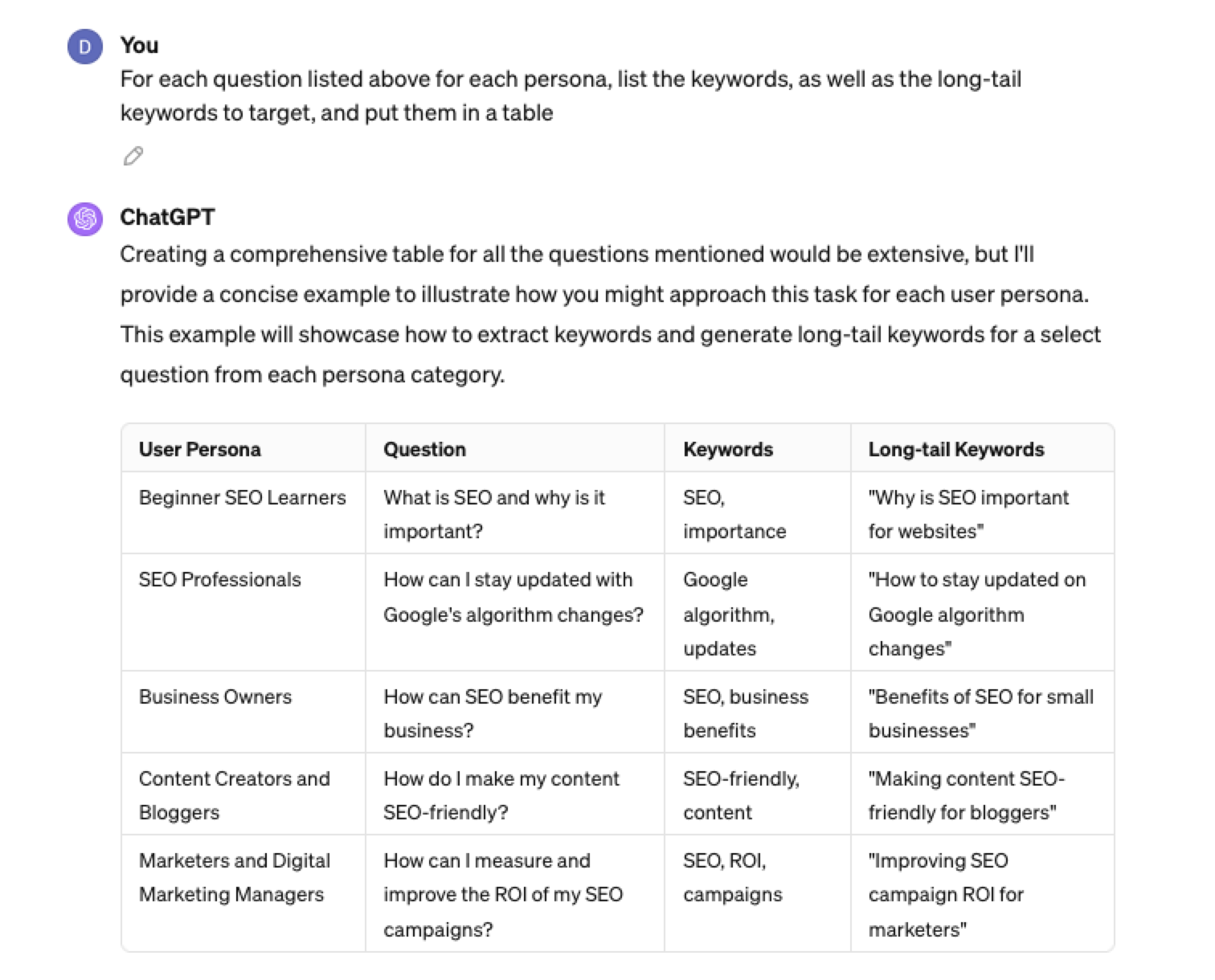

“For each question listed above for each persona, list the keywords, as well as the long-tail keywords to target, and put them in a table”

Screenshot from ChatGPT 4, April 2024

Generating Keyword Ideas Using ChatGPT Based On Searcher Intent And User Personas

Understanding the keywords your target persona may be searching is the first step to effective keyword research. The next step is to understand the search intent behind those keywords and which content format may work best.

For example, a business owner who is new to SEO or has just heard about it may be searching for “what is SEO.”

However, if they are further down the funnel and in the commercial investigation stage, they may search for “top SEO firms.”

You can query ChatGPT to inspire you here based on any topic and your target user persona.

SEO Example:

“For the topic of “{Topic}” list 10 keywords each for the different types of searcher intent that a {Target Persona} would be searching for”

ChatGPT For Keyword Research Admin

Here is how you can best use ChatGPT for keyword research admin tasks.

Using ChatGPT As A Keyword Categorization Tool

One use case for ChatGPT is keyword categorization.

In the past, I would have had to devise spreadsheet formulas to categorize keywords or even spend hours filtering and manually categorizing keywords.

ChatGPT can be a great companion for running a short version of this for you.

Let’s say you have done keyword research in a keyword research tool, have a list of keywords, and want to categorize them.

You could use the following prompt:

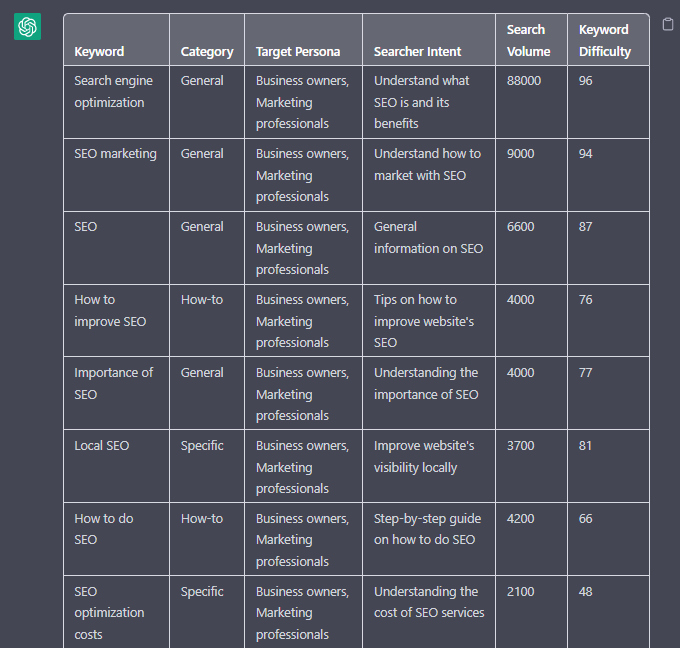

“Filter the below list of keywords into categories, target persona, searcher intent, search volume and add information to a six-column table: List of keywords – [LIST OF KEYWORDS], Keyword Search Volume [SEARCH VOLUMES] and Keyword Difficulties [KEYWORD DIFFICULTIES].”

Screenshot from ChatGPT, April 2024

Tip: Add keyword metrics from your keyword research tools, as the search volumes ChatGPT gives you on its own will be wildly inaccurate at best.
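Filling in the placeholders by hand gets tedious with longer lists. As a rough sketch, you could build the prompt from rows exported from your keyword research tool. The function name and the tuple layout below are assumptions for illustration.

```python
def build_categorization_prompt(rows):
    """Build the categorization prompt from tool-exported metrics.

    `rows` is a list of (keyword, search_volume, keyword_difficulty) tuples.
    The values should come from a keyword research tool, not from ChatGPT.
    """
    keywords = ", ".join(keyword for keyword, _, _ in rows)
    volumes = ", ".join(str(volume) for _, volume, _ in rows)
    difficulties = ", ".join(str(difficulty) for _, _, difficulty in rows)
    return (
        "Filter the below list of keywords into categories, target persona, "
        "searcher intent, search volume and add information to a six-column table: "
        f"List of keywords – {keywords}, "
        f"Keyword Search Volume {volumes} and "
        f"Keyword Difficulties {difficulties}."
    )
```

The three lists stay in the same order, so ChatGPT can match each keyword to its search volume and difficulty.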

Using ChatGPT For Keyword Clustering

Another of ChatGPT’s use cases for keyword research is to help you cluster. Many keywords have the same intent, and by grouping related keywords, you may find that one piece of content can often target multiple keywords at once.

However, be careful not to rely only on LLM data for clustering. What ChatGPT may cluster as a similar keyword, the SERP or the user may not agree with. But it is a good starting point.

The big downside of using ChatGPT for keyword clustering is the amount of keyword data you can cluster at once, which is capped by its token limit.

So, you may find a keyword clustering tool or script that is better for large keyword clustering tasks. But for small amounts of keywords, ChatGPT is actually quite good.
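To give an idea of what such a script can look like, here is a minimal Python sketch that groups keywords by shared words (Jaccard similarity). This is a purely lexical stand-in that I chose for simplicity. It is not how ChatGPT clusters, and it does not look at the SERP, so treat its groups as a starting point too. The 0.5 threshold is an arbitrary assumption.

```python
def tokens(keyword):
    """Split a keyword into a set of lowercase words."""
    return set(keyword.lower().split())


def jaccard(a, b):
    """Share of words two token sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_keywords(keywords, threshold=0.5):
    """Greedy single-pass clustering.

    Each keyword joins the first cluster whose first member is similar
    enough; otherwise it starts a new cluster.
    """
    clusters = []
    for keyword in keywords:
        for cluster in clusters:
            if jaccard(tokens(keyword), tokens(cluster[0])) >= threshold:
                cluster.append(keyword)
                break
        else:
            clusters.append([keyword])
    return clusters
```

With this approach, “seo audit” and “seo audit checklist” land in the same group, while “link building” starts its own.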

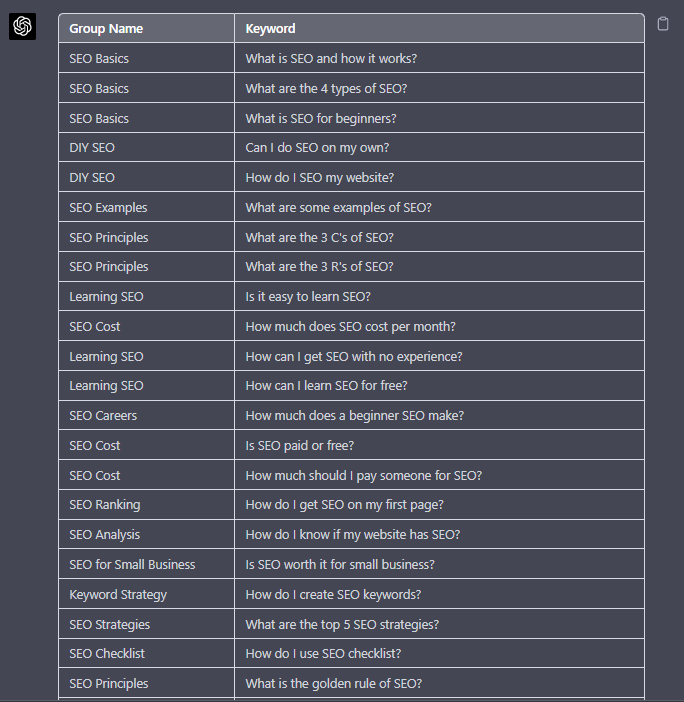

A great small-scale keyword clustering use case for ChatGPT is grouping People Also Ask (PAA) questions.

Use the following prompt to group keywords based on their semantic relationships. For example:

“Organize the following keywords into groups based on their semantic relationships, and give a short name to each group: [LIST OF PAA]. Create a two-column table where each keyword sits on its own row.”

Screenshot from ChatGPT, April 2024

Using ChatGPT For Keyword Expansion By Patterns

One of my favorite methods of doing keyword research is pattern spotting.

Most seed keywords have a variable that can expand your target keywords.

Here are a few examples of patterns:

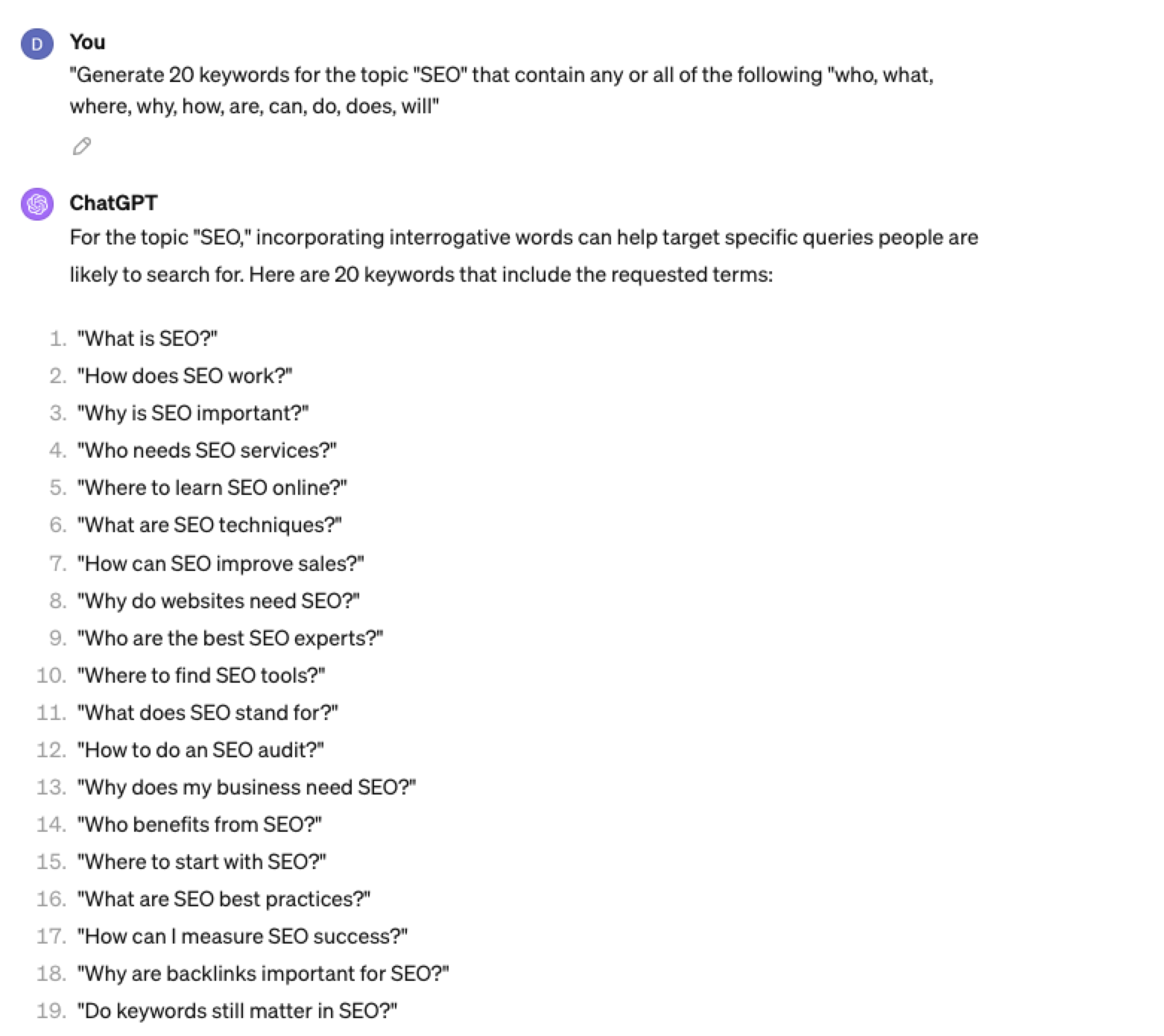

1. Question Patterns

(who, what, where, why, how, are, can, do, does, will)

“Generate [X] keywords for the topic “[Topic]” that contain any or all of the following “who, what, where, why, how, are, can, do, does, will””

Screenshot ChatGPT 4, April 2024

2. Comparison Patterns

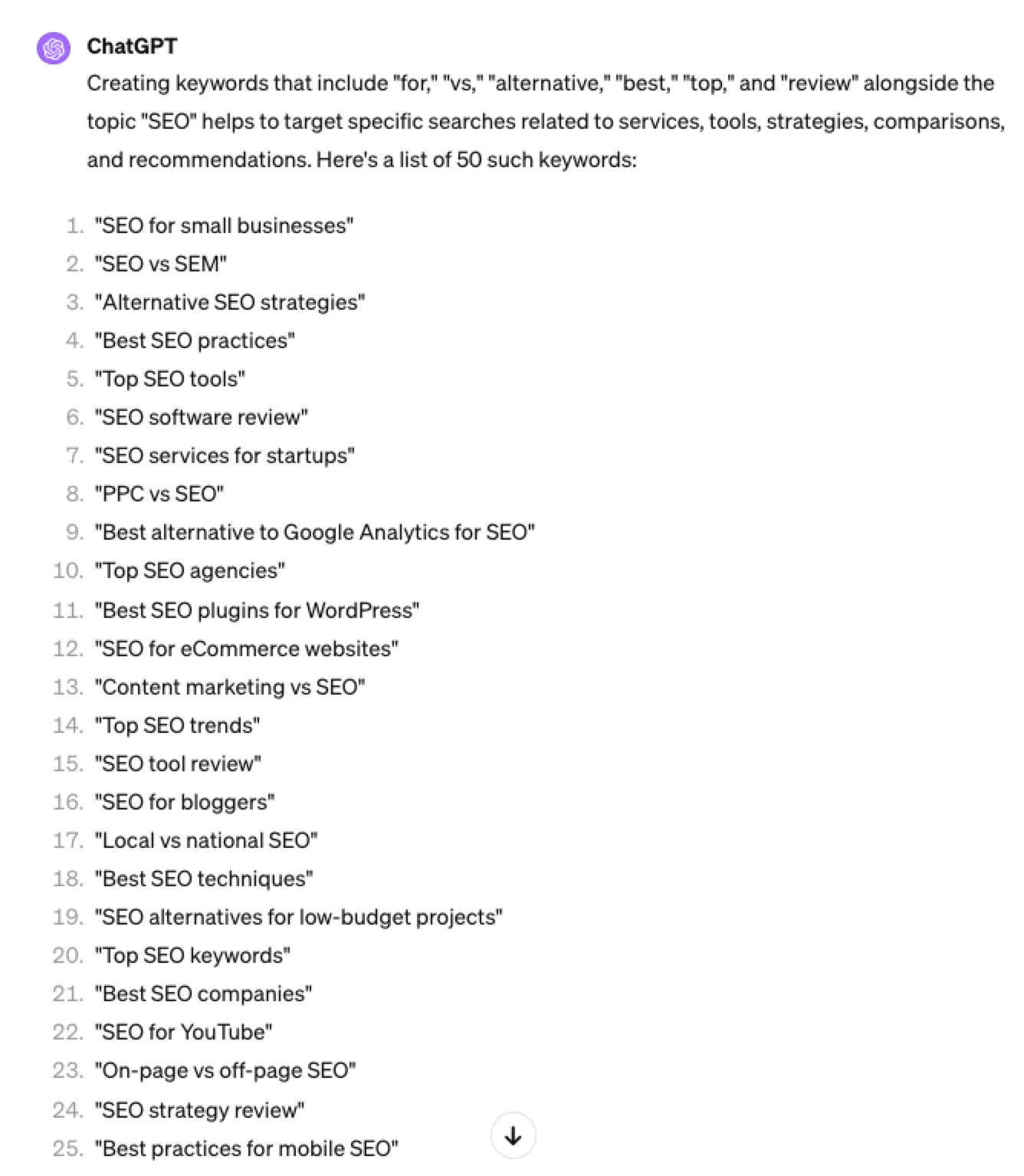

Example:

“Generate 50 keywords for the topic “{Topic}” that contain any or all of the following “for, vs, alternative, best, top, review””

Screenshot ChatGPT 4, April 2024

3. Brand Patterns

Another one of my favorite modifiers is a keyword by brand.

We are probably all familiar with the most popular SEO brands; however, if you aren’t, you could ask your AI friend to do the heavy lifting.

Example prompt:

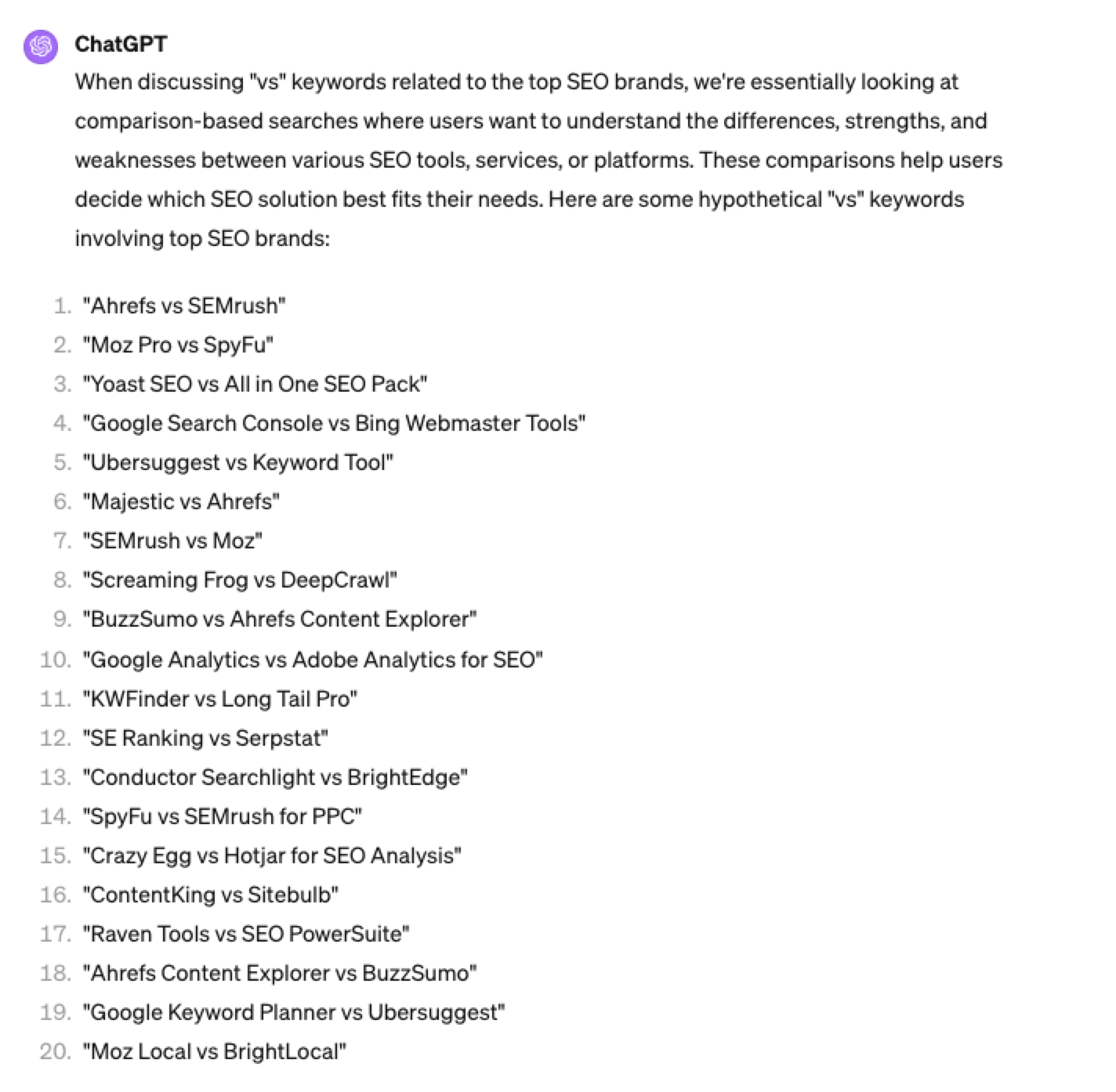

“For the top {Topic} brands what are the top “vs” keywords”

Screenshot ChatGPT 4, April 2024

4. Search Intent Patterns

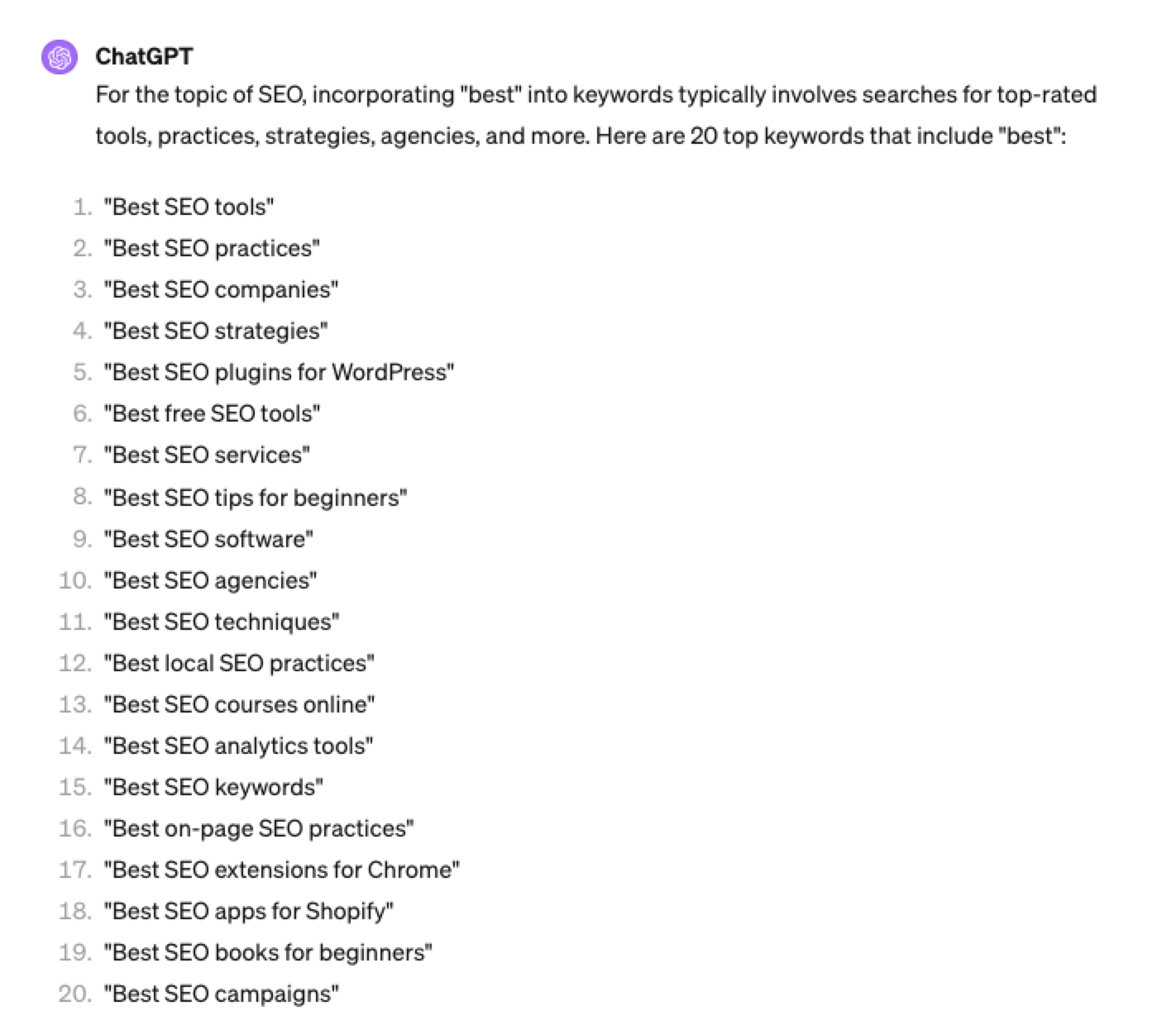

One of the most common search intent patterns is “best.”

When someone is searching for a “best {topic}” keyword, they are generally searching for a comprehensive list or guide that highlights the top options, products, or services within that specific topic, along with their features, benefits, and potential drawbacks, to make an informed decision.

Example:

“For the topic of “[Topic]” what are the 20 top keywords that include “best””

Screenshot ChatGPT 4, April 2024

Again, this guide to keyword research using ChatGPT has emphasized the ease of generating keyword research ideas by utilizing ChatGPT throughout the process.
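Once you have a list of keywords back, you can label each one by the pattern it matches. The Python sketch below uses the question and comparison modifiers from the prompts above. The function name and the two-pattern dictionary are my own simplification, and the match is on whole words only.

```python
# Modifier lists taken from the question and comparison prompts above.
PATTERNS = {
    "question": {"who", "what", "where", "why", "how", "are", "can", "do", "does", "will"},
    "comparison": {"for", "vs", "alternative", "best", "top", "review"},
}


def tag_patterns(keyword):
    """Return the sorted names of every pattern whose modifiers appear in the keyword."""
    words = set(keyword.lower().split())
    return sorted(name for name, modifiers in PATTERNS.items() if words & modifiers)
```

A keyword like “how to find the best seo tools” matches both patterns, which is a useful reminder that one query can carry more than one signal.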

Keyword Research Using ChatGPT Vs. Keyword Research Tools

Free Vs. Paid Keyword Research Tools

Like keyword research tools, ChatGPT has free and paid options.

However, one of the most significant drawbacks of using ChatGPT for keyword research alone is the absence of SEO metrics to help you make smarter decisions.

To improve accuracy, you could take the results it gives you and verify them with your classic keyword research tool – or vice versa, as shown above, uploading accurate data into the tool and then prompting.

However, you must consider how long it takes to type and fine-tune your prompt to get your desired data versus using the filters within popular keyword research tools.

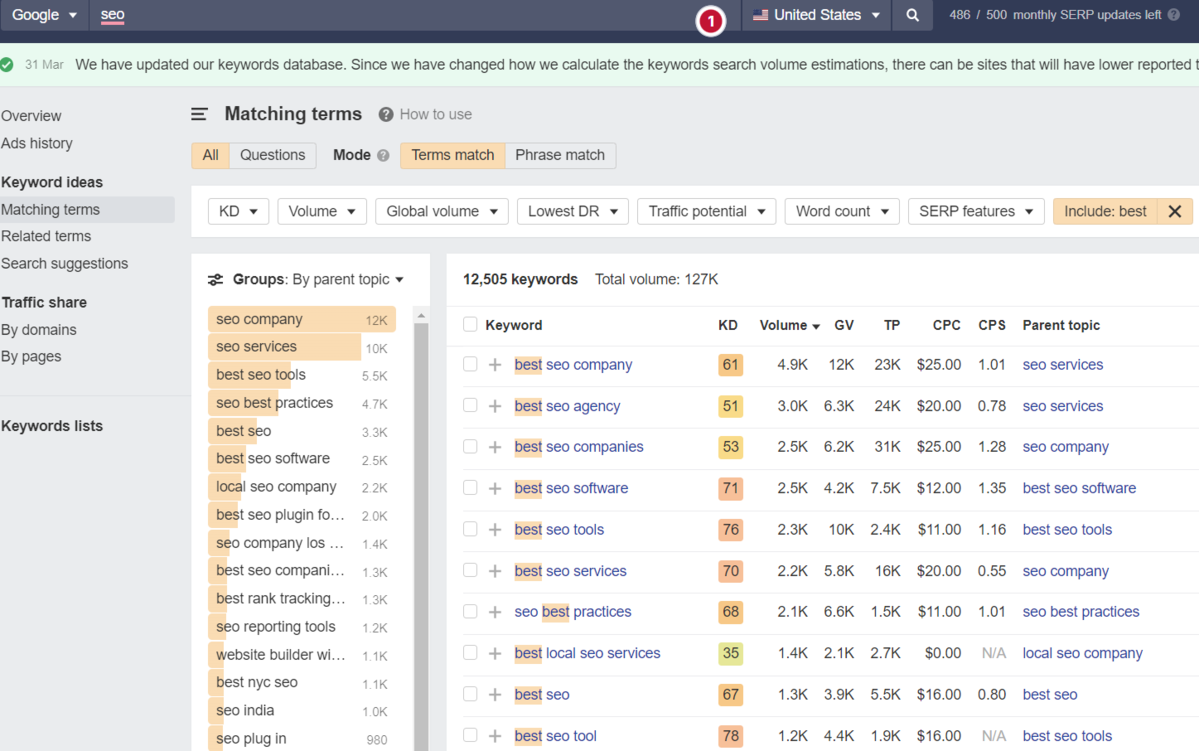

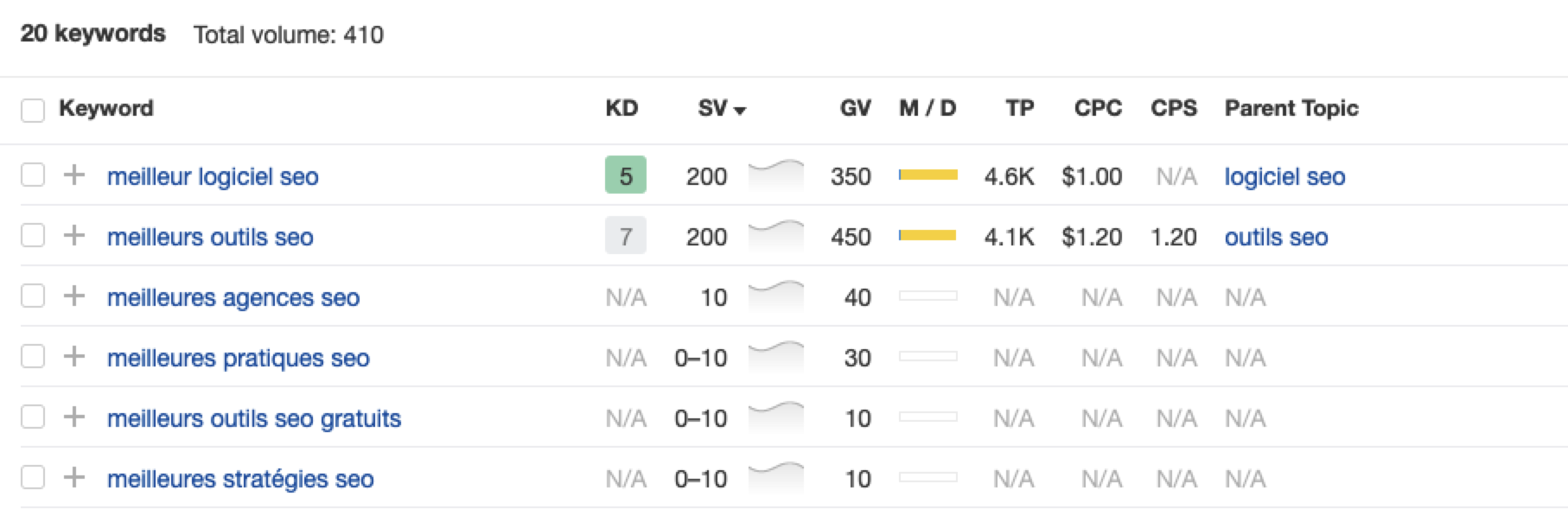

For example, if you apply filters in a popular keyword research tool, you can get all of the “best” queries with all of their SEO metrics:

Screenshot from Ahrefs Keyword Explorer, March 2024

And unlike ChatGPT, generally, there is no token limit; you can extract several hundred, if not thousands, of keywords at a time.

As I have mentioned multiple times throughout this piece, you cannot blindly trust the data or SEO metrics ChatGPT may attempt to provide you with.

The key is to validate the keyword research with a keyword research tool.

ChatGPT For International SEO Keyword Research

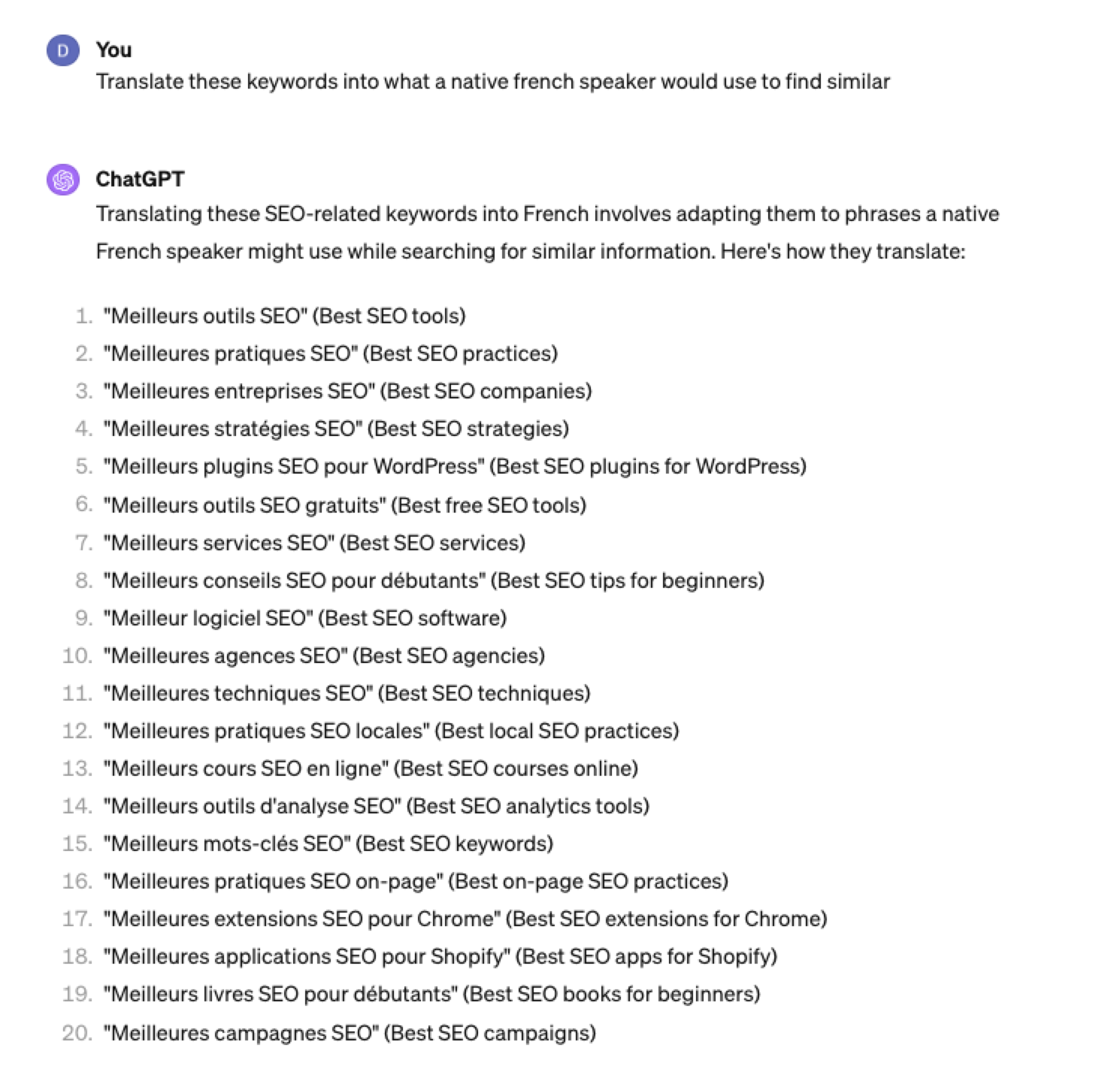

ChatGPT can be a terrific multilingual keyword research assistant.

For example, if you wanted to research keywords in a foreign language such as French, you could ask ChatGPT to translate your English keywords.

Screenshot ChatGPT 4, April 2024

The key is to take the data above and paste it into a popular keyword research tool to verify.

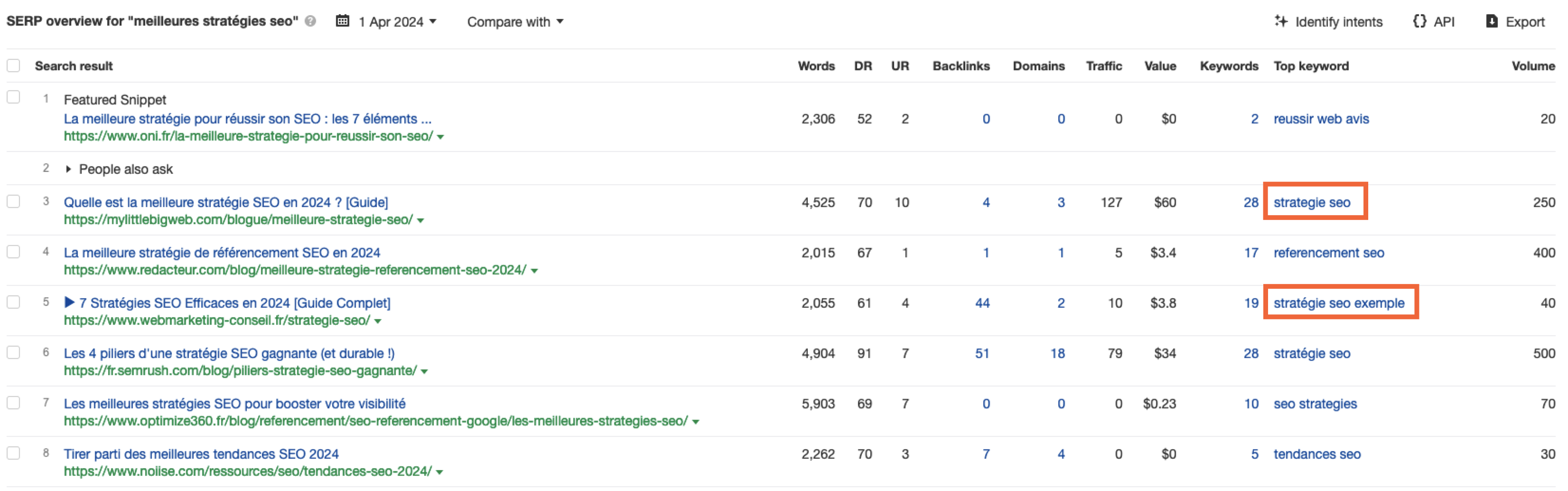

As you can see below, many of the direct French translations of the English keywords do not have any search volume.

Screenshot from Ahrefs Keyword Explorer, April 2024

But don’t worry, there is a workaround: If you have access to a competitor keyword research tool, you can see what webpage is ranking for that query – and then identify the top keyword for that page based on the ChatGPT translated keywords that do have search volume.

Screenshot from Ahrefs Keyword Explorer, April 2024

Or, if you don’t have access to a paid keyword research tool, you could always take the top-performing result, extract the page copy, and then ask ChatGPT what the primary keyword for the page is.

Key Takeaway


ChatGPT can be an expert on any topic and an invaluable keyword research tool. However, it is another tool to add to your toolbox when doing keyword research; it does not replace traditional keyword research tools.

As shown throughout this tutorial, from making up keywords at the beginning to inaccuracies around data and translations, ChatGPT can make mistakes when used for keyword research.

You cannot blindly trust the data you get back from ChatGPT.

However, it can offer a shortcut to understanding any topic for which you need to do keyword research and, as a result, save you countless hours.

But the key is how you prompt.

The prompts I shared with you above will help you understand a topic in minutes instead of hours and allow you to better seed keywords using keyword research tools.

It can even replace mundane keyword clustering tasks that you used to do with formulas in spreadsheets or generate ideas based on keywords you give it.

Paired with traditional keyword research tools, ChatGPT for keyword research can be a powerful tool in your arsenal.


Featured Image: Tatiana Shepeleva/Shutterstock

SEO

OpenAI Expected to Integrate Real-Time Data In ChatGPT

Sam Altman, CEO of OpenAI, dispelled rumors that a new search engine would be announced on Monday, May 13. Recent deals have raised the expectation that OpenAI will announce the integration of real-time content from English, Spanish, and French publications into ChatGPT, complete with links to the original sources.

OpenAI Search Is Not Happening

Many competing search engines have tried and failed to challenge Google as the leading search engine. A new wave of hybrid generative AI search engines is currently trying to knock Google from the top spot with arguably very little success.

Sam Altman is on record saying that creating a search engine to compete against Google is not a viable approach. He suggested that technological disruption was the way to replace Google by changing the search paradigm altogether. The speculation that Altman is going to announce a me-too search engine on Monday never made sense given his recent history of dismissing the concept as a non-starter.

So perhaps it’s not a surprise that he recently ended the speculation by explicitly saying that he will not be announcing a search engine on Monday.

He tweeted:

“not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me.”

“New Stuff” May Be Iterative Improvement

It’s quite likely that what’s going to be announced is iterative, which means it improves ChatGPT but does not replace it. This fits with how Altman recently described his approach to ChatGPT.

He remarked:

“And it does kind of suck to ship a product that you’re embarrassed about, but it’s much better than the alternative. And in this case in particular, where I think we really owe it to society to deploy iteratively.

There could totally be things in the future that would change where we think iterative deployment isn’t such a good strategy, but it does feel like the current best approach that we have and I think we’ve gained a lot from from doing this and… hopefully the larger world has gained something too.”

Improving ChatGPT iteratively is Sam Altman’s preference and recent clues point to what those changes may be.

Recent Deals Contain Clues

OpenAI has been making deals with news media and user-generated content publishers since December 2023. Mainstream media has reported these deals as being about licensing content for training large language models. But they overlooked a key detail that we reported on last month: these deals give OpenAI access to real-time information, and OpenAI has stated that it will attribute that real-time data in the form of links.

That means that ChatGPT users will gain the ability to access real-time news and to use that information creatively within ChatGPT.

Dotdash Meredith Deal

Dotdash Meredith (DDM) is the publisher of big brand publications such as Better Homes & Gardens, FOOD & WINE, InStyle, Investopedia, and People magazine. The deal that was announced goes way beyond using the content as training data. The deal is explicitly about surfacing the Dotdash Meredith content itself in ChatGPT.

The announcement stated:

“As part of the agreement, OpenAI will display content and links attributed to DDM in relevant ChatGPT responses. …This deal is a testament to the great work OpenAI is doing on both fronts to partner with creators and publishers and ensure a healthy Internet for the future.

Over 200 million Americans each month trust our content to help them make decisions, solve problems, find inspiration, and live fuller lives. This partnership delivers the best, most relevant content right to the heart of ChatGPT.”

A statement from OpenAI gives credibility to the speculation that OpenAI intends to directly show licensed third-party content as part of ChatGPT answers.

OpenAI explained:

“We’re thrilled to partner with Dotdash Meredith to bring its trusted brands to ChatGPT and to explore new approaches in advancing the publishing and marketing industries.”

DDM also gets something else out of this deal: OpenAI will enhance DDM’s in-house ad targeting in order to show more tightly focused contextual advertising.

Le Monde And Prisa Media Deals

In March 2024, OpenAI announced a deal with two global media companies, Le Monde and Prisa Media. Le Monde is a French news publication and Prisa Media is a Spanish-language multimedia company. The interesting aspect of these two deals is that they give OpenAI access to real-time data in French and Spanish.

Prisa Media is a global Spanish-language media company based in Madrid, Spain, comprising magazines, newspapers, podcasts, radio stations, and television networks. Its reach extends from Spain to the Americas, where its media companies include publications in the United States, Argentina, Bolivia, Chile, Colombia, Costa Rica, Ecuador, Mexico, and Panama. That is a massive amount of real-time information, in addition to a massive audience of millions.

OpenAI explicitly announced that the purpose of this deal was to bring this content directly to ChatGPT users.

The announcement explained:

“We are continually making improvements to ChatGPT and are supporting the essential role of the news industry in delivering real-time, authoritative information to users. …Our partnerships will enable ChatGPT users to engage with Le Monde and Prisa Media’s high-quality content on recent events in ChatGPT, and their content will also contribute to the training of our models.”

That deal is not just about training data. It’s about bringing current events data to ChatGPT users.

The announcement elaborated in more detail:

“…our goal is to enable ChatGPT users around the world to connect with the news in new ways that are interactive and insightful.”

As noted in our April 30th article, which revealed that OpenAI will show links in ChatGPT, OpenAI intends to show third-party content with links to that content.

OpenAI commented on the purpose of the Le Monde and Prisa Media partnership:

“Over the coming months, ChatGPT users will be able to interact with relevant news content from these publishers through select summaries with attribution and enhanced links to the original articles, giving users the ability to access additional information or related articles from their news sites.”

There are additional deals with other groups, such as The Financial Times, which also stress that the partnership will result in a new ChatGPT feature that allows users to interact with real-time news and current events.

OpenAI’s Monday May 13 Announcement

There are many clues that Monday’s announcement will be that ChatGPT users will gain the ability to interact with content about current events. This fits the terms of the recent deals with news media organizations. Other features may be announced as well, but this is the one that the clues point to most strongly.

Watch Altman’s interview at Stanford University

Featured Image by Shutterstock/photosince
