SOCIAL

Facebook Parent Meta Plans Another Round of Job Cuts, May Lay Off Over 1,000 Employees: Report

Facebook-parent Meta Platforms is planning a fresh round of job cuts in a reorganisation and downsizing effort that could affect thousands of workers, the Washington Post reported on Wednesday.

Last year, the social media company let go 13 percent of its workforce — more than 11,000 employees — as it grappled with soaring costs and a weak advertising market.

Meta now plans to push some leaders into lower-level roles without direct reports, flattening the layers of management between top boss Mark Zuckerberg and the company’s interns, the Washington Post reported, citing a person familiar with the matter.

Meta declined a Reuters request for comment, but spokesperson Andy Stone in a series of tweets cited several previous statements by Zuckerberg suggesting that more cuts were on the way.

Zuckerberg told investors earlier this month that last year’s layoffs were “the beginning of our focus on efficiency and not the end.” He said he would work on “flattening our org structure and removing some layers of middle management.”

Last year’s layoffs were the first in Meta’s 18-year history. Other tech companies have cut thousands of jobs, including Google parent Alphabet, Microsoft and Snap.

Meta aggressively hired during the COVID-19 pandemic to meet a surge in social media usage by stuck-at-home consumers. But business suffered in 2022 as advertisers pulled the plug on spending in the face of rapidly rising interest rates.

Meta, once worth more than $1 trillion (nearly Rs. 82,84,100 crore), is now valued at $446 billion (nearly Rs. 36,94,700 crore). Meta shares were down about 0.5 percent on Wednesday.

The company has said it would also reduce office space, lower discretionary spending and extend a hiring freeze into 2023 to rein in expenses.

More than 1,00,000 layoffs were announced at US companies in January, led by technology companies, according to a report from employment firm Challenger, Gray & Christmas.

© Thomson Reuters 2023

(Except for the headline, this story has not been edited by NDTV staff and is published from a press release)

12 Proven Methods to Make Money Blogging in 2024

This is a contributed article.

The world of blogging continues to thrive in 2024, offering a compelling avenue for creative minds to share their knowledge, build an audience, and even turn their passion into profit. Whether you’re a seasoned blogger or just starting out, there are numerous effective strategies to monetize your blog and achieve financial success. Here, we delve into 12 proven methods to make money blogging in 2024:

1. Embrace Niche Expertise:

Standing out in the vast blogosphere requires focus. Carving out a niche allows you to cater to a specific audience with targeted content. This not only builds a loyal following but also positions you as an authority in your chosen field. Whether it’s gardening techniques, travel hacking tips, or the intricacies of cryptocurrency, delve deep into a subject you’re passionate and knowledgeable about. A targeted audience is also more receptive to offers that match its interests, which makes monetization easier.

2. Content is King (and Queen):

High-quality content remains the cornerstone of any successful blog. In 2024, readers crave informative, engaging, and well-written content that solves their problems, answers their questions, or entertains them. Invest time in crafting valuable blog posts, articles, or videos that resonate with your target audience.

- Focus on evergreen content: Create content that remains relevant for a long time, attracting consistent traffic and boosting your earning potential.

- Incorporate multimedia: Spice up your content with captivating images, infographics, or even videos to enhance reader engagement and improve SEO.

- Maintain consistency: Develop a regular publishing schedule to build anticipation and keep your audience coming back for more.

3. The Power of SEO:

Search Engine Optimization (SEO) helps your blog rank higher in search engine results for relevant keywords. Better rankings bring more organic traffic, the lifeblood of any monetization strategy.

- Keyword research: Use keyword research tools to identify terms your target audience searches for. Strategically incorporate these keywords into your content naturally.

- Technical SEO: Optimize your blog’s loading speed, mobile responsiveness, and overall technical aspects to improve search engine ranking.

- Backlink building: Encourage other websites to link back to your content, boosting your blog’s authority in the eyes of search engines.

4. Monetization Magic: Affiliate Marketing

Affiliate marketing allows you to earn commissions by promoting other companies’ products or services. When a reader clicks on your affiliate link and makes a purchase, you get a commission.

- Choose relevant affiliates: Promote products or services that align with your niche and resonate with your audience.

- Transparency is key: Disclose your affiliate relationships clearly to your readers and build trust.

- Integrate strategically: Don’t just bombard readers with links. Weave affiliate promotions naturally into your content, highlighting the value proposition.
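To see how the commission model adds up, here is a minimal Python sketch. The function name and every figure in it (click count, conversion rate, average order value, commission rate) are hypothetical placeholders rather than numbers from any real affiliate program; actual rates and payout rules vary from program to program.

```python
def estimate_affiliate_commission(clicks: int, conversion_rate: float,
                                  avg_order_value: float, commission_rate: float) -> float:
    """Rough estimate: expected purchases x average order value x commission rate."""
    if not (0 <= conversion_rate <= 1 and 0 <= commission_rate <= 1):
        raise ValueError("rates must be fractions between 0 and 1")
    purchases = clicks * conversion_rate  # expected number of purchases from these clicks
    return purchases * avg_order_value * commission_rate

# Hypothetical figures: 1,000 clicks, 2% of clickers buy, $50 average order, 5% commission.
commission = estimate_affiliate_commission(1_000, 0.02, 50.0, 0.05)
print(f"Estimated commission: ${commission:.2f}")
```

Running the numbers this way makes it clear why relevance matters: doubling the conversion rate by promoting products your audience actually wants doubles the estimate just as effectively as doubling your traffic.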

5. Display Advertising: A Classic Approach

Display advertising involves placing banner ads, text ads, or other visual elements on your blog. Depending on the ad network and its pricing model, you earn revenue when readers view the ads (per impression) or click on them (per click).

- Choose reputable ad networks: Partner with established ad networks that offer competitive rates and relevant ads for your audience.

- Strategic ad placement: Place ads thoughtfully, avoiding an overwhelming experience for readers.

- Track your performance: Monitor ad clicks and conversions to measure the effectiveness of your ad placements and optimize for better results.
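Two common ways to quantify ad performance are click-through rate (CTR, clicks divided by impressions) and RPM (revenue per 1,000 impressions). The Python sketch below computes both for a few placements so you can compare them; the placement names and numbers are invented for illustration, and in practice you would pull real figures from your ad network's reports.

```python
from dataclasses import dataclass

@dataclass
class AdPlacement:
    name: str
    impressions: int
    clicks: int
    revenue: float  # earnings attributed to this placement, in dollars

    @property
    def ctr(self) -> float:
        """Click-through rate: share of impressions that produced a click."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def rpm(self) -> float:
        """Revenue per 1,000 impressions."""
        return self.revenue / self.impressions * 1000 if self.impressions else 0.0

# Hypothetical placements and figures, for illustration only.
placements = [
    AdPlacement("sidebar", impressions=20_000, clicks=40, revenue=24.0),
    AdPlacement("in-article", impressions=15_000, clicks=90, revenue=45.0),
]

# List placements from highest to lowest earnings per 1,000 impressions.
for p in sorted(placements, key=lambda p: p.rpm, reverse=True):
    print(f"{p.name}: CTR {p.ctr:.2%}, RPM ${p.rpm:.2f}")
```

Ranking by RPM rather than raw clicks is one reasonable choice, since it reflects what each placement actually earns for the traffic it receives.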

6. Offer Premium Content:

Providing exclusive, in-depth content behind a paywall can generate additional income. This could be premium blog posts, ebooks, online courses, or webinars.

- Deliver exceptional value: Ensure your premium content offers significant value that justifies the price tag.

- Multiple pricing options: Consider offering tiered subscription plans to cater to different audience needs and budgets.

- Promote effectively: Highlight the benefits of your premium content and encourage readers to subscribe.

7. Coaching and Consulting:

Leverage your expertise by offering coaching or consulting services related to your niche. Readers who find your content valuable may be interested in personalized guidance.

- Position yourself as an expert: Showcase your qualifications, experience, and client testimonials to build trust and establish your credibility.

- Offer free consultations: Provide a limited free consultation to potential clients, allowing them to experience your expertise firsthand.

- Develop clear packages: Outline different coaching or consulting packages with varying time commitments and pricing structures.

8. The Power of Community: Online Events and Webinars

Host online events or webinars related to your niche. These events offer valuable content while also providing an opportunity to promote other monetization avenues.

- Interactive and engaging: Structure your online events to be interactive with polls, Q&A sessions, or live chats.

9. Embrace the Power of Email Marketing:

Building an email list allows you to foster stronger relationships with your audience and promote your content and offerings directly.

- Offer valuable incentives: Encourage readers to subscribe by offering exclusive content, discounts, or early access to new products.

- Segmentation is key: Segment your email list based on reader interests to send targeted campaigns that resonate more effectively.

- Regular communication: Maintain consistent communication with your subscribers through engaging newsletters or updates.
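Segmentation can be as simple as grouping subscribers by the interests they select when signing up. Here is a minimal Python sketch under that assumption; the subscriber records, email addresses, and interest tags are hypothetical, and most email service providers offer built-in tagging or segmentation features that do this for you.

```python
from collections import defaultdict

# Hypothetical subscriber records; a real list would come from your email service's export.
subscribers = [
    {"email": "ana@example.com", "interests": {"gardening", "composting"}},
    {"email": "ben@example.com", "interests": {"travel"}},
    {"email": "cy@example.com", "interests": {"gardening", "travel"}},
]

def segment_by_interest(subs):
    """Map each interest tag to the sorted list of subscriber emails that share it."""
    segments = defaultdict(list)
    for sub in subs:
        for interest in sub["interests"]:
            segments[interest].append(sub["email"])
    return {tag: sorted(emails) for tag, emails in segments.items()}

segments = segment_by_interest(subscribers)
for tag in sorted(segments):
    print(tag, segments[tag])
```

A subscriber with several interests lands in several segments, so a campaign about travel reaches both travel fans without sending gardening tips to readers who never asked for them.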

10. Sell Your Own Products:

Take your expertise to the next level by creating and selling your own products. This could be physical merchandise, digital downloads, or even printables related to your niche.

- Identify audience needs: Develop products that address the specific needs and desires of your target audience.

- High-quality offerings: Invest in creating high-quality products that offer exceptional value and user experience.

- Utilize multiple platforms: Sell your products through your blog, online marketplaces, or even social media platforms.

11. Sponsorships and Brand Collaborations:

Partner with brands or businesses relevant to your niche for sponsored content or collaborations. This can be a lucrative way to leverage your audience and generate income.

- Maintain editorial control: While working with sponsors, ensure you retain editorial control to maintain your blog’s authenticity and audience trust.

- Disclosures are essential: Clearly disclose sponsored content to readers, upholding transparency and ethical practices.

- Align with your niche: Partner with brands that complement your content and resonate with your audience.

12. Freelancing and Paid Writing Opportunities:

Your blog can serve as a springboard for freelance writing opportunities. Showcase your writing skills and expertise through your blog content, attracting potential clients.

- Target relevant publications: Identify online publications, websites, or magazines related to your niche and pitch your writing services.

- High-quality samples: Include high-quality blog posts from your site as writing samples when pitching to potential clients.

- Develop strong writing skills: Continuously hone your writing skills and stay updated on current trends in your niche to deliver exceptional work.

Conclusion:

Building a successful blog that generates income requires dedication, strategic planning, and high-quality content. In today’s digital age, there are numerous opportunities to make money online through blogging. By utilizing a combination of methods such as affiliate marketing, sponsored content, and selling digital products or services, you can leverage your blog’s potential and achieve financial success.

Remember, consistency in posting, engaging with your audience, and staying adaptable to trends are key to thriving in the ever-evolving blogosphere. Embrace new strategies, refine your approaches, and always keep your readers at the forefront of your content creation journey. With dedication and the right approach, your blog has the potential to become a valuable source of income and a platform for sharing your knowledge and passion with the world, making money online while doing what you love.

Image Credit: DepositPhotos

Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

In a recent announcement, Snapchat revealed an update that challenges its traditional design ethos. The platform is testing an option that lets users override the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

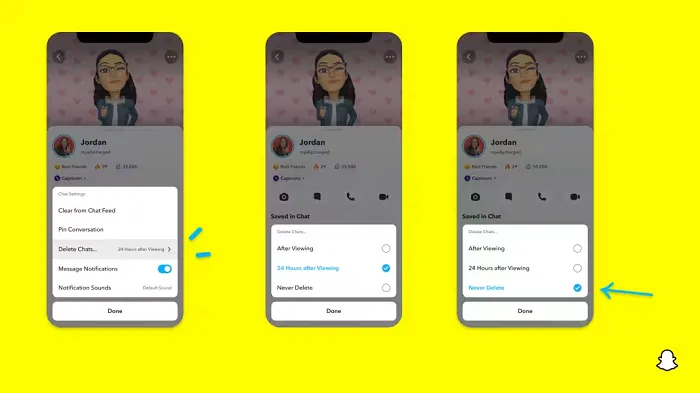

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

The feature, currently being tested in select markets, lets users adjust retention settings on a conversation-by-conversation basis. Participants can change the setting from within a chat, and in-chat notifications keep everyone informed when it changes.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, which is renowned for its disappearing-message premise, especially popular among younger users. That focus has been central to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet the introduction of message retention raises questions about Snapchat’s uniqueness. The feature addresses user demand, but it also risks diluting what makes Snapchat distinctive.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.

Catering to a specific audience boosts your business, says accountant-turned-coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just The Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and am able to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.
