SOCIAL

Twitter’s Working on a New ‘Safety Mode’ to Limit the Impact of On-Platform Abuse

Amongst Twitter’s various announcements in its Analyst Day presentation today, including subscription tools and on-platform communities, it also outlined its work on a new anti-troll feature, which it’s calling ‘Safety Mode’.

Under the new process, users would be alerted when their tweets are getting negative attention. Tap through on that notification and you’ll be taken to the ‘Safety Mode’ control panel, where you can choose to activate ‘auto-block and mute’, which, as it sounds, automatically stops any accounts that are sending abusive or rude replies from engaging with you for one week.

But you won’t have to activate the auto-block function. Below the auto-block toggle, users will also be able to review the accounts and replies that Twitter’s system has identified as potentially harmful, and then block as they see fit. So if your on-platform connections have a habit of mocking your comments, and Twitter’s system incorrectly tags them as abuse, you won’t have to block them, unless you choose to keep Safety Mode active.

It could be a good option, though a lot depends on how good Twitter’s automated detection process is.

Twitter would be looking to utilize the same system it’s testing for its new prompts (on iOS) that alert users to potentially offensive language within their tweets.

Twitter’s been testing that option for almost a year, and the language modeling that it’s developed for that process would give it a good base to go on for this new Safety Mode system.

If Twitter can reliably detect abuse, and stop people from ever having to see it, that could be a good thing, while it could also disincentivize trolls who make such remarks in order to provoke a response. If the risk is that their clever replies could get automatically blocked and, as Twitter notes, be seen by fewer people as a result, that could make people more cautious about what they say. Some will see that as an intrusion on free speech, and a violation of some amendment of some kind. But it’s really not.

If it helps people who are experiencing trolls and abuse, there’s definitely merit to the test.

Twitter hasn’t provided any specific detail, or information on where it’s placed in the development cycle. But it looks likely to get a live test soon, and it’ll be interesting to see what sort of response Twitter sees once the option is made available to users.


Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

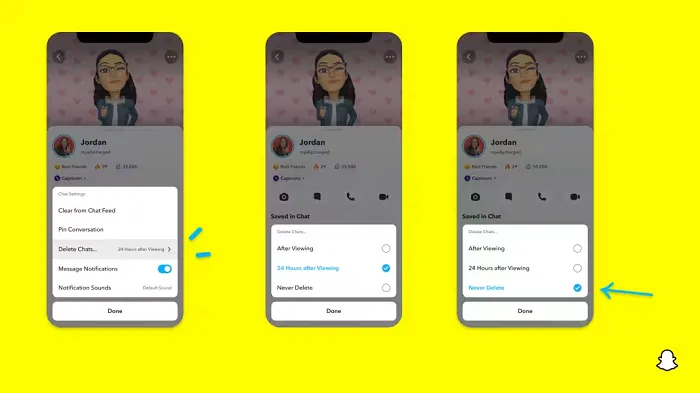

In a recent announcement, Snapchat revealed a groundbreaking update that challenges its traditional design ethos. The platform is experimenting with an option that allows users to defy the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

Currently undergoing trials in select markets, the new feature empowers users to adjust retention settings on a conversation-by-conversation basis. Flexibility remains paramount, with participants able to modify settings within chats and receive in-chat notifications to ensure transparency.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, renowned for its disappearing message premise, which is especially popular among younger demographics. Retaining this focus has been central to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet, the introduction of message retention poses questions about Snapchat’s uniqueness. While addressing user demands, the risk of diluting Snapchat’s distinctiveness looms large.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.


Catering to a specific audience boosts your business, says accountant turned coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just The Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and am able to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.


Instagram Tests Live-Stream Games to Enhance Engagement

Instagram’s testing out some new options to help spice up your live-streams in the app, with some live broadcasters now able to select a game that they can play with viewers in-stream.

As you can see in these example screens, posted by Ahmed Ghanem, some creators now have the option to play either “This or That”, a question and answer prompt that you can share with your viewers, or “Trivia”, to generate more engagement within your IG live-streams.

That could be a simple way to spark more conversation and interaction, which could then lead into further engagement opportunities from your live audience.

Meta’s been exploring more ways to make live-streaming a bigger consideration for IG creators, with a view to live-streams potentially catching on with more users.

That includes the gradual expansion of its “Stars” live-stream donation program, giving more creators in more regions a means to accept donations from live-stream viewers, while back in December, Instagram also added some new options to make it easier to go live using third-party tools via desktop PCs.

Live streaming has been a major shift in China, where shopping live-streams, in particular, have led to massive opportunities for streaming platforms. They haven’t caught on in the same way in Western regions, but as TikTok and YouTube look to push live-stream adoption, there is still a chance that they will become a much bigger element in future.

Which is why IG is also trying to stay in touch, and add more ways for its creators to engage via streams. Live-stream games are another element of this, and could make live-streaming a better community-building, and potentially sales-driving, option.

We’ve asked Instagram for more information on this test, and we’ll update this post if/when we hear back.
