SEO

Is ChatGPT Use Of Web Content Fair?

Large Language Models (LLMs) like ChatGPT train using multiple sources of information, including web content. This data forms the basis of summaries of that content in the form of articles that are produced without attribution or benefit to those who published the original content used for training ChatGPT.

Search engines download website content (called crawling and indexing) to provide answers in the form of links to the websites.

Website publishers have the ability to opt out of having their content crawled and indexed by search engines through the Robots Exclusion Protocol, commonly referred to as Robots.txt.

The Robots Exclusion Protocol began as an informal convention and was only published as a standards-track RFC (RFC 9309) in 2022, but it’s one that legitimate web crawlers have long obeyed.

Should web publishers be able to use the Robots.txt protocol to prevent large language models from using their website content?

Large Language Models Use Website Content Without Attribution

Some who are involved with search marketing are uncomfortable with how website data is used to train machines without giving anything back, like an acknowledgement or traffic.

Hans Petter Blindheim (LinkedIn profile), Senior Expert at Curamando shared his opinions with me.

Hans commented:

“When an author writes something after having learned something from an article on your site, they will more often than not link to your original work because it offers credibility and as a professional courtesy.

It’s called a citation.

But the scale at which ChatGPT assimilates content and does not grant anything back differentiates it from both Google and people.

A website is generally created with a business directive in mind.

Google helps people find the content, providing traffic, which has a mutual benefit to it.

But it’s not like large language models asked your permission to use your content, they just use it in a broader sense than what was expected when your content was published.

And if the AI language models do not offer value in return – why should publishers allow them to crawl and use the content?

Does their use of your content meet the standards of fair use?

When ChatGPT and Google’s own ML/AI models trains on your content without permission, spins what it learns there and uses that while keeping people away from your websites – shouldn’t the industry and also lawmakers try to take back control over the Internet by forcing them to transition to an “opt-in” model?”

The concerns that Hans expresses are reasonable.

In light of how fast technology is evolving, should laws concerning fair use be reconsidered and updated?

I asked John Rizvi, a Registered Patent Attorney (LinkedIn profile) who is board certified in Intellectual Property Law, if Internet copyright laws are outdated.

John answered:

“Yes, without a doubt.

One major bone of contention in cases like this is the fact that the law inevitably evolves far more slowly than technology does.

In the 1800s, this maybe didn’t matter so much because advances were relatively slow and so legal machinery was more or less tooled to match.

Today, however, runaway technological advances have far outstripped the ability of the law to keep up.

There are simply too many advances and too many moving parts for the law to keep up.

As it is currently constituted and administered, largely by people who are hardly experts in the areas of technology we’re discussing here, the law is poorly equipped or structured to keep pace with technology…and we must consider that this isn’t an entirely bad thing.

So, in one regard, yes, Intellectual Property law does need to evolve if it even purports, let alone hopes, to keep pace with technological advances.

The primary problem is striking a balance between keeping up with the ways various forms of tech can be used while holding back from blatant overreach or outright censorship for political gain cloaked in benevolent intentions.

The law also has to take care not to legislate against possible uses of tech so broadly as to strangle any potential benefit that may derive from them.

You could easily run afoul of the First Amendment and any number of settled cases that circumscribe how, why, and to what degree intellectual property can be used and by whom.

And attempting to envision every conceivable usage of technology years or decades before the framework exists to make it viable or even possible would be an exceedingly dangerous fool’s errand.

In situations like this, the law really cannot help but be reactive to how technology is used…not necessarily how it was intended.

That’s not likely to change anytime soon, unless we hit a massive and unanticipated tech plateau that allows the law time to catch up to current events.”

So it appears that copyright law has many considerations to balance when it comes to how AI is trained, and there is no simple answer.

OpenAI and Microsoft Sued

An interesting case that was recently filed concerns the use of open source code by OpenAI and Microsoft to create their Copilot product.

The problem with using open source code is that many open source licenses require attribution.

According to an article published in a scholarly journal:

“Plaintiffs allege that OpenAI and GitHub assembled and distributed a commercial product called Copilot to create generative code using publicly accessible code originally made available under various “open source”-style licenses, many of which include an attribution requirement.

As GitHub states, ‘…[t]rained on billions of lines of code, GitHub Copilot turns natural language prompts into coding suggestions across dozens of languages.’

The resulting product allegedly omitted any credit to the original creators.”

The author of that article, who is a legal expert on the subject of copyrights, wrote that many view open source Creative Commons licenses as a “free-for-all.”

Some may also consider the phrase free-for-all a fair description of how datasets composed of Internet content are scraped and used to generate AI products like ChatGPT.

Background on LLMs and Datasets

Large language models train on multiple datasets of content. Datasets can consist of emails, books, government data, Wikipedia articles, and even datasets created from websites linked from posts on Reddit that have at least three upvotes.

Many of the datasets related to the content of the Internet have their origins in the crawl created by a non-profit organization called Common Crawl.

Their dataset, the Common Crawl dataset, is available free for download and use.

The Common Crawl dataset is the starting point for many other datasets that are created from it.

For example, GPT-3 used a filtered version of Common Crawl (Language Models are Few-Shot Learners PDF).

This is how GPT-3 researchers used the website data contained within the Common Crawl dataset:

“Datasets for language models have rapidly expanded, culminating in the Common Crawl dataset… constituting nearly a trillion words.

This size of dataset is sufficient to train our largest models without ever updating on the same sequence twice.

However, we have found that unfiltered or lightly filtered versions of Common Crawl tend to have lower quality than more curated datasets.

Therefore, we took 3 steps to improve the average quality of our datasets:

(1) we downloaded and filtered a version of CommonCrawl based on similarity to a range of high-quality reference corpora,

(2) we performed fuzzy deduplication at the document level, within and across datasets, to prevent redundancy and preserve the integrity of our held-out validation set as an accurate measure of overfitting, and

(3) we also added known high-quality reference corpora to the training mix to augment CommonCrawl and increase its diversity.”
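The fuzzy deduplication step described in the quote above can be illustrated with a small sketch. This is not the GPT-3 researchers’ pipeline; it is a minimal example that assumes word-level shingles and a Jaccard similarity threshold of 0.7. The function names and the threshold are choices made here purely for illustration.

```python
def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams (shingles) in a document."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(docs: list, threshold: float = 0.7) -> list:
    """Keep a document only if it is not too similar to one already kept."""
    kept = []
    kept_shingles = []
    for doc in docs:
        current = shingles(doc)
        if all(jaccard(current, seen) < threshold for seen in kept_shingles):
            kept.append(doc)
            kept_shingles.append(current)
    return kept


docs = [
    "Common Crawl is a free dataset of web pages collected by a non-profit.",
    "Common Crawl is a free dataset of web pages collected by a non-profit org.",
    "Robots.txt lets publishers opt out of crawling.",
]
unique_docs = deduplicate(docs)  # the second document is a near-duplicate of the first
```

Comparing every document against every kept document does not scale to a web-sized crawl; large pipelines typically rely on approximate techniques instead, so treat this only as a way to see what "fuzzy" means in practice.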

Google’s C4 dataset (Colossal Clean Crawled Corpus), which was used to create the Text-to-Text Transfer Transformer (T5), has its roots in the Common Crawl dataset, too.

Their research paper (Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer PDF) explains:

“Before presenting the results from our large-scale empirical study, we review the necessary background topics required to understand our results, including the Transformer model architecture and the downstream tasks we evaluate on.

We also introduce our approach for treating every problem as a text-to-text task and describe our “Colossal Clean Crawled Corpus” (C4), the Common Crawl-based data set we created as a source of unlabeled text data.

We refer to our model and framework as the ‘Text-to-Text Transfer Transformer’ (T5).”

Google published an article on their AI blog that further explains how Common Crawl data (which contains content scraped from the Internet) was used to create C4.

They wrote:

“An important ingredient for transfer learning is the unlabeled dataset used for pre-training.

To accurately measure the effect of scaling up the amount of pre-training, one needs a dataset that is not only high quality and diverse, but also massive.

Existing pre-training datasets don’t meet all three of these criteria — for example, text from Wikipedia is high quality, but uniform in style and relatively small for our purposes, while the Common Crawl web scrapes are enormous and highly diverse, but fairly low quality.

To satisfy these requirements, we developed the Colossal Clean Crawled Corpus (C4), a cleaned version of Common Crawl that is two orders of magnitude larger than Wikipedia.

Our cleaning process involved deduplication, discarding incomplete sentences, and removing offensive or noisy content.

This filtering led to better results on downstream tasks, while the additional size allowed the model size to increase without overfitting during pre-training.”
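The kind of cleaning Google describes (deduplication, discarding incomplete sentences, and removing offensive content) can be sketched roughly as follows. This is an illustrative approximation, not the C4 code: the `BLOCKLIST` contents, the terminal-punctuation rule, and the `clean_page` name are all assumptions made for the example.

```python
from typing import Optional

BLOCKLIST = {"badword"}  # placeholder; a real blocklist is assumed, not shown
TERMINAL_PUNCTUATION = (".", "!", "?", '"')


def clean_page(text: str) -> Optional[str]:
    """Return the cleaned page text, or None if the page should be discarded."""
    # Drop the whole page if any blocklisted word appears.
    words = {word.strip(".,!?\"'") for word in text.lower().split()}
    if words & BLOCKLIST:
        return None

    seen = set()
    kept = []
    for line in text.splitlines():
        line = line.strip()
        # Discard lines that do not end like a complete sentence.
        if not line.endswith(TERMINAL_PUNCTUATION):
            continue
        # Discard exact repeats of a line that was already kept.
        if line in seen:
            continue
        seen.add(line)
        kept.append(line)
    return "\n".join(kept) if kept else None


page = (
    "Home | About | Contact\n"
    "Common Crawl publishes web data.\n"
    "Common Crawl publishes web data.\n"
    "Read more"
)
cleaned = clean_page(page)
```

Here the navigation line and the "Read more" fragment are dropped because they do not end in sentence punctuation, and the repeated sentence is kept only once.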

Google, OpenAI, even Oracle’s Open Data are using Internet content, your content, to create datasets that are then used to create AI applications like ChatGPT.

Common Crawl Can Be Blocked

It is possible to block Common Crawl and thereby opt out of all the datasets that are based on Common Crawl.

But if the site has already been crawled then the website data is already in datasets. There is no way to remove your content from the Common Crawl dataset or from derivative datasets such as C4.

Using the Robots.txt protocol will only block future crawls by Common Crawl. It won’t stop researchers from using content already in the dataset.

How to Block Common Crawl From Your Data

Blocking Common Crawl is possible through the use of the Robots.txt protocol, within the above discussed limitations.

The Common Crawl bot is called CCBot.

It is identified using the most up to date CCBot User-Agent string: CCBot/2.0

Blocking CCBot with Robots.txt is accomplished the same as with any other bot.

Here is the code for blocking CCBot with Robots.txt.

User-agent: CCBot
Disallow: /
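To check that a rule like this behaves as intended before publishing it, the rules can be tested locally. The sketch below uses Python’s standard `urllib.robotparser` module; the `example.com` URL and the `ExampleBot` user agent are hypothetical stand-ins.

```python
from urllib.robotparser import RobotFileParser

# The two-line rule from the article, with each directive on its own line.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# CCBot identifies itself as CCBot/2.0; any other bot is unaffected by this rule.
ccbot_allowed = parser.can_fetch("CCBot/2.0", "https://example.com/any-page")
other_allowed = parser.can_fetch("ExampleBot/1.0", "https://example.com/any-page")

print("CCBot allowed:", ccbot_allowed)
print("Other bots allowed:", other_allowed)
```

This only confirms how a compliant parser reads the file. Whether a given crawler actually honors it is up to the crawler.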

CCBot crawls from Amazon AWS IP addresses.

CCBot also follows the nofollow Robots meta tag:

<meta name="robots" content="nofollow">
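Note that nofollow asks a crawler not to follow the links on a page; it is a different directive from noindex. As a rough illustration of how a crawler might read this tag, here is a minimal sketch using Python’s standard `html.parser` module. The `RobotsMetaParser` class is hypothetical and is not CCBot’s actual code.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # A content value may hold several comma-separated directives.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


html = '<html><head><meta name="robots" content="nofollow"></head><body></body></html>'
meta_parser = RobotsMetaParser()
meta_parser.feed(html)
links_may_be_followed = "nofollow" not in meta_parser.directives
```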

What If You’re Not Blocking Common Crawl?

Web content can be downloaded without permission. That is how browsers work: they download content.

Google or anybody else does not need permission to download and use content that is published publicly.

Website Publishers Have Limited Options

The consideration of whether it is ethical to train AI on web content doesn’t seem to be a part of any conversation about the ethics of how AI technology is developed.

It seems to be taken for granted that Internet content can be downloaded, summarized and transformed into a product called ChatGPT.

Does that seem fair? The answer is complicated.

Featured image by Shutterstock/Krakenimages.com

How To Use ChatGPT For Keyword Research

Anyone not using ChatGPT for keyword research is missing a trick.

You can save time and understand an entire topic in seconds instead of hours.

In this article, I outline my most effective ChatGPT prompts for keyword research and teach you how I put them together so that you, too, can take, edit, and enhance them even further.

But before we jump into the prompts, I want to emphasize that you shouldn’t replace keyword research tools or disregard traditional keyword research methods.

ChatGPT can make mistakes. It can even create new keywords if you give it the right prompt. For example, I asked it to provide me with a unique keyword for the topic “SEO” that had never been searched before.

“Interstellar Internet SEO: Optimizing content for the theoretical concept of an interstellar internet, considering the challenges of space-time and interplanetary communication delays.”

Although I want to jump into my LinkedIn profile and update my title to “Interstellar Internet SEO Consultant,” unfortunately, no one has searched that (and they probably never will)!

You must not blindly rely on the data you get back from ChatGPT.

What you can rely on ChatGPT for is the topic ideation stage of keyword research and inspiration.

ChatGPT is a large language model trained with massive amounts of data to accurately predict what word will come next in a sentence. However, it does not know how to do keyword research yet.

Instead, think of ChatGPT as an expert on any topic who can supply the information you need if you ask the right question.

In this guide, that is exactly what I aim to teach you how to do – the most essential prompts you need to know when performing topical keyword research.

Best ChatGPT Keyword Research Prompts

The following ChatGPT keyword research prompts can be used on any niche, even a topic to which you are brand new.

For this demonstration, let’s use the topic of “SEO.”

Generating Keyword Ideas Based On A Topic

What Are The {X} Most Popular Sub-topics Related To {Topic}?

The first prompt is to give you an idea of the niche.

As shown above, ChatGPT did a great job understanding and breaking down SEO into three pillars: on-page, off-page & technical.

The key to the following prompt is to take one of the topics ChatGPT has given and query the sub-topics.

What Are The {X} Most Popular Sub-topics Related To {Sub-topic}?

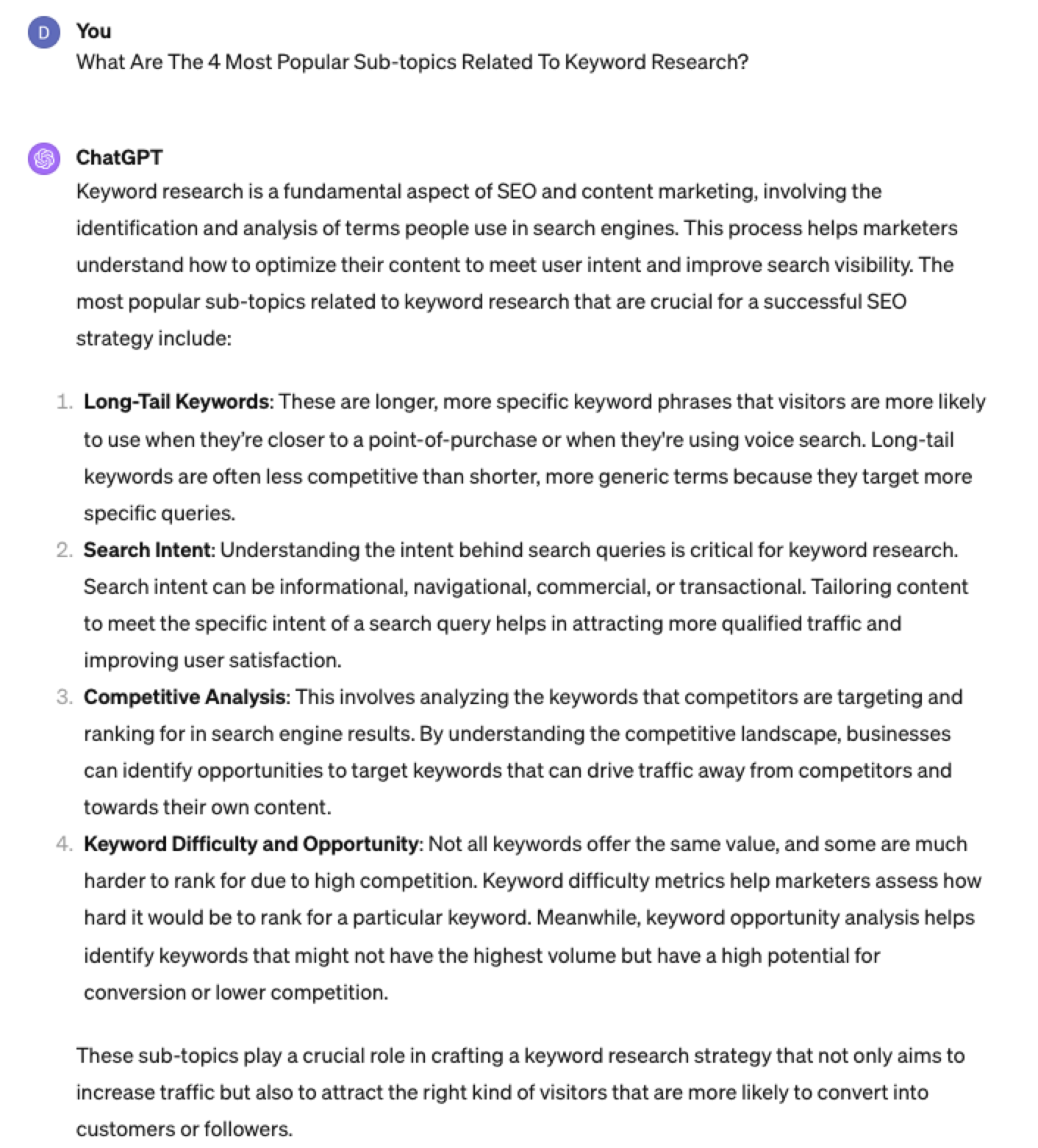

For this example, let’s query, “What are the most popular sub-topics related to keyword research?”

Having done keyword research for over 10 years, I would expect it to output information related to keyword research metrics, the types of keywords, and intent.

Let’s see.

Screenshot from ChatGPT 4, April 2024

Again, right on the money.

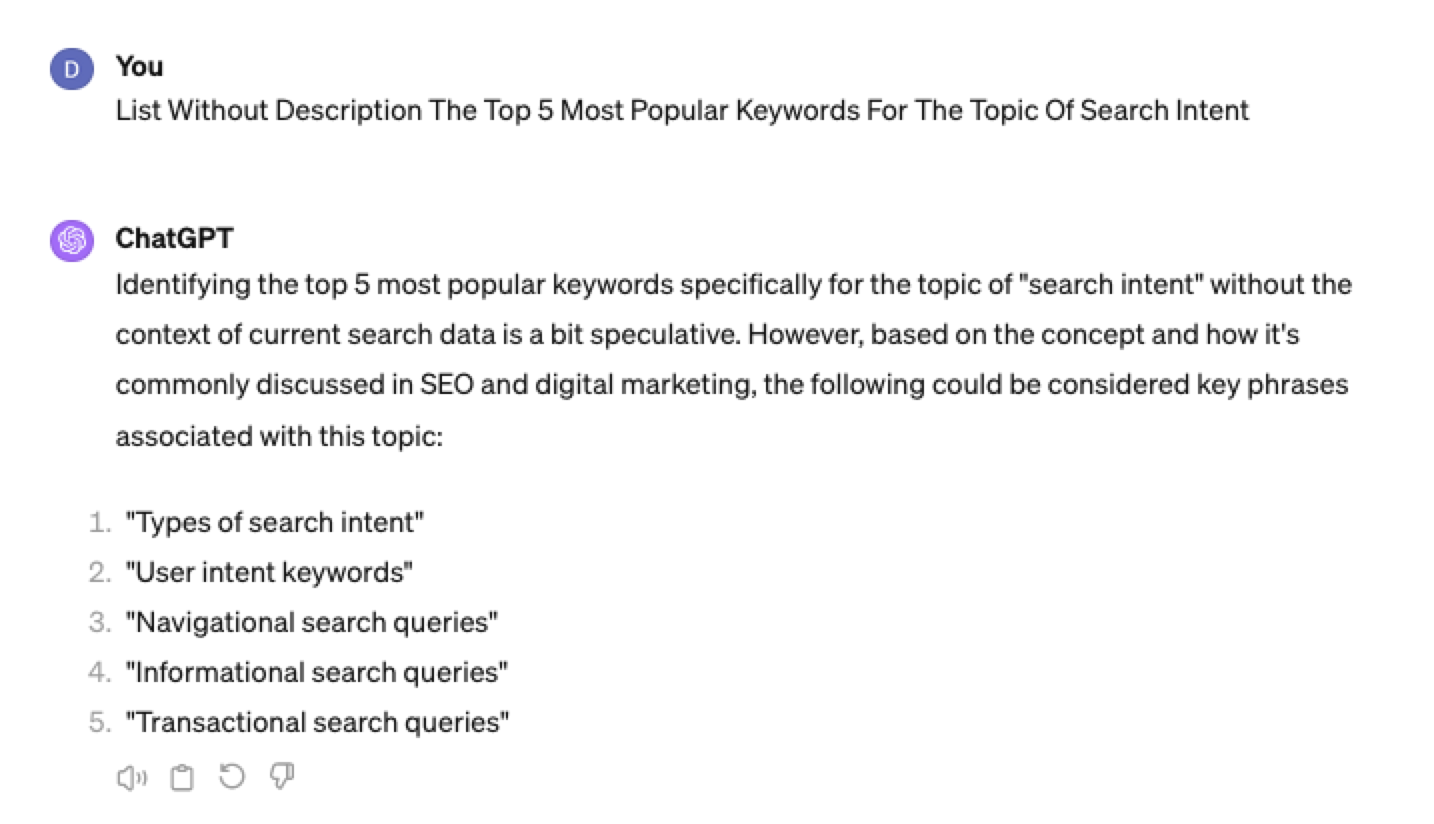

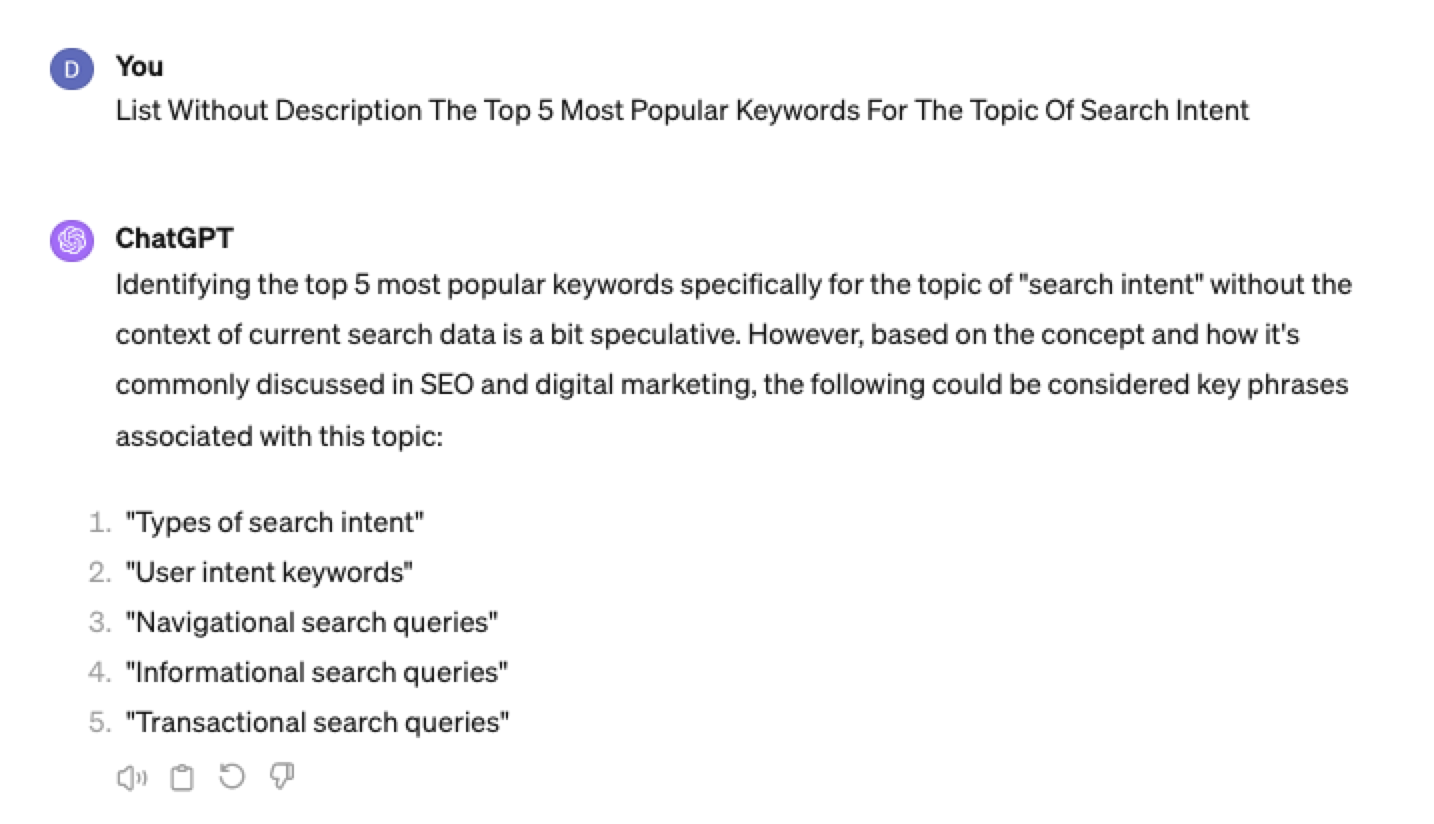

To get the keywords you want without having ChatGPT describe each answer, use the prompt “list without description.”

Here is an example of that.

List Without Description The Top {X} Most Popular Keywords For The Topic Of {X}

You can even branch these keywords out further into their long-tail.

Example prompt:

List Without Description The Top {X} Most Popular Long-tail Keywords For The Topic “{X}”

Screenshot from ChatGPT 4, April 2024

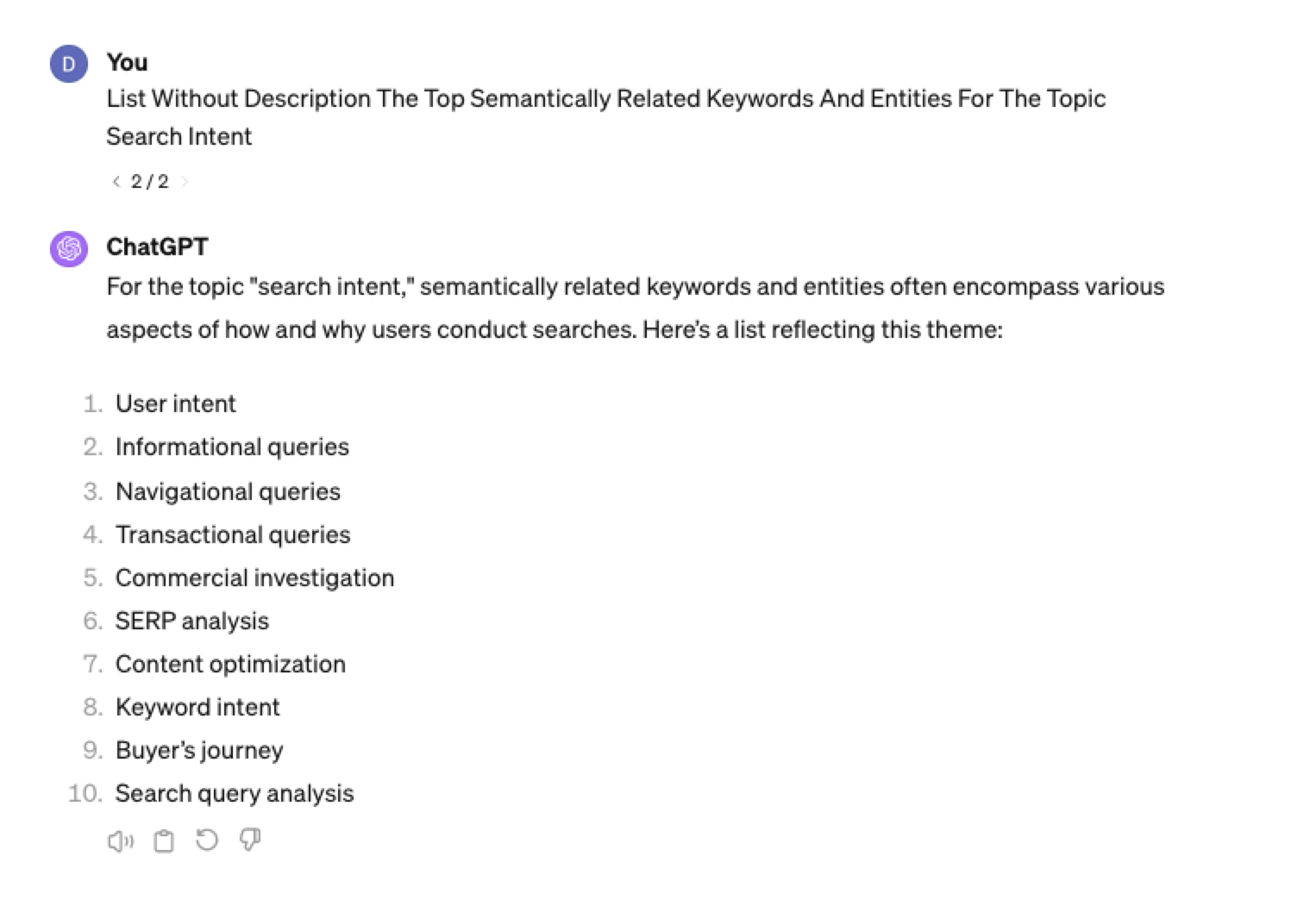

List Without Description The Top Semantically Related Keywords And Entities For The Topic {X}

You can even ask ChatGPT what any topic’s semantically related keywords and entities are!

Screenshot ChatGPT 4, April 2024

Tip: The Onion Method Of Prompting ChatGPT

When you are happy with a series of prompts, add them all to one prompt. For example, so far in this article, we have asked ChatGPT the following:

- What are the four most popular sub-topics related to SEO?

- What are the four most popular sub-topics related to keyword research?

- List without description the top five most popular keywords for “keyword intent.”

- List without description the top five most popular long-tail keywords for the topic “keyword intent types.”

- List without description the top semantically related keywords and entities for the topic “types of keyword intent in SEO.”

Combine all five into one prompt by telling ChatGPT to perform a series of steps. Example:

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, Step 4, and Step 5”

Example:

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, Step 4 and Step 5. Step 1 – Generate an answer for the 3 most popular sub-topics related to {Topic}. Step 2 – Generate 3 of the most popular sub-topics related to each answer. Step 3 – Take those answers and list without description their top 3 most popular keywords. Step 4 – For the answers given of their most popular keywords, provide 3 long-tail keywords. Step 5 – For each long-tail keyword offered in the response, list without descriptions 3 of their top semantically related keywords and entities.”
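If you reuse the onion method often, assembling the combined prompt can be scripted. The following is a minimal sketch; the `build_onion_prompt` helper is a hypothetical name, and it only produces the prompt text for you to paste into ChatGPT.

```python
def build_onion_prompt(steps: list) -> str:
    """Combine individual prompts into one numbered, multi-step prompt."""
    if not steps:
        raise ValueError("At least one step is required.")
    labels = [f"Step {number}" for number in range(1, len(steps) + 1)]
    if len(labels) == 1:
        order = labels[0]
    else:
        order = ", ".join(labels[:-1]) + ", and " + labels[-1]
    body = " ".join(f"{label} – {step}" for label, step in zip(labels, steps))
    return f"Perform the following steps in a consecutive order {order}. {body}"


steps = [
    "Generate an answer for the 3 most popular sub-topics related to SEO.",
    "Generate 3 of the most popular sub-topics related to each answer.",
    "Take those answers and list without description their top 3 most popular keywords.",
]
onion_prompt = build_onion_prompt(steps)
print(onion_prompt)
```

Keeping each step as a separate list item makes it easy to add, remove, or reorder layers before generating the final prompt.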

Generating Keyword Ideas Based On A Question

Taking the steps approach from above, we can get ChatGPT to help streamline getting keyword ideas based on a question. For example, let’s ask, “What is SEO?”

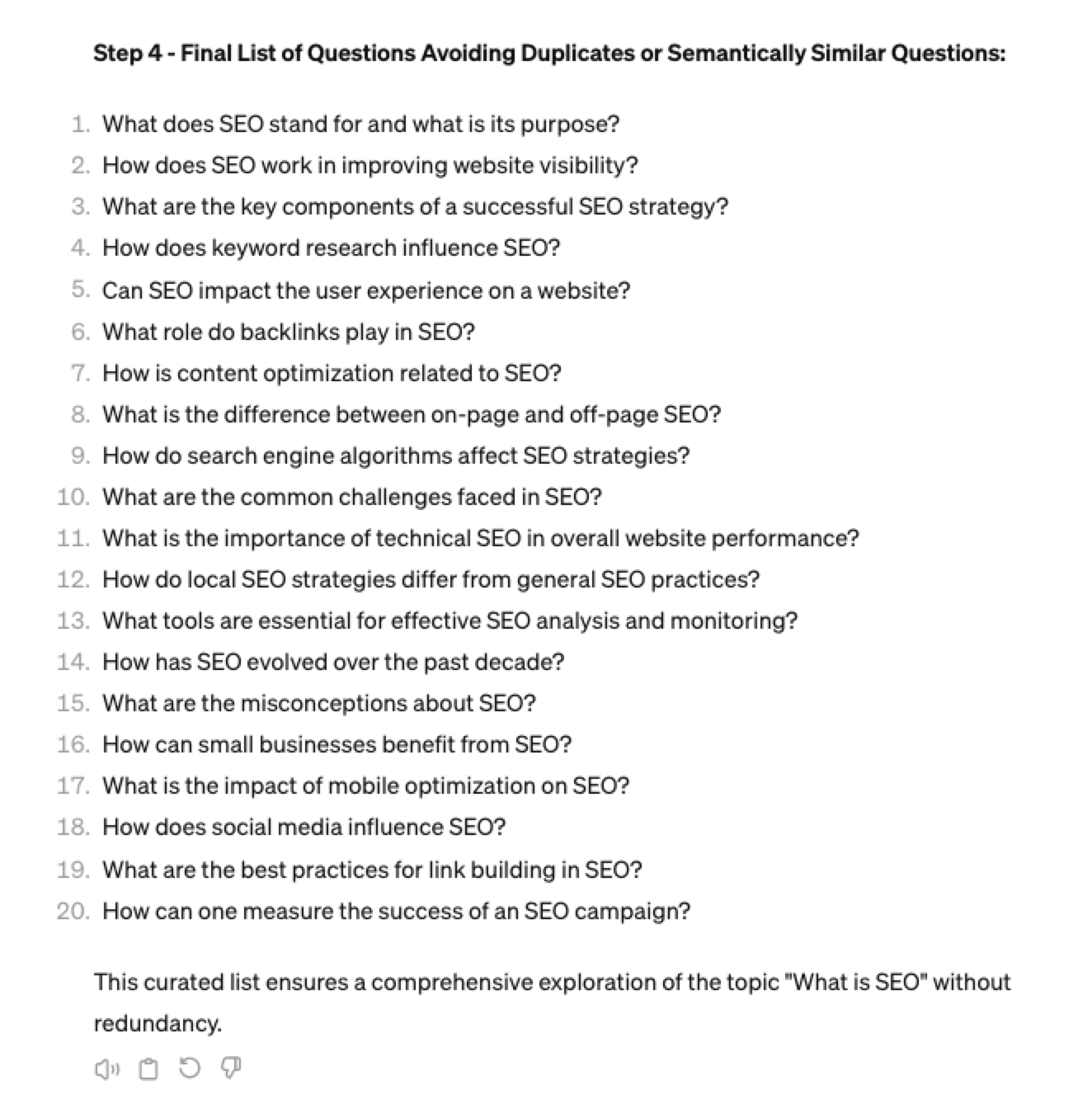

“Perform the following steps in a consecutive order Step 1, Step 2, Step 3, and Step 4. Step 1 – Generate 10 questions about “{Question}”. Step 2 – Generate 5 more questions about “{Question}” that do not repeat the above. Step 3 – Generate 5 more questions about “{Question}” that do not repeat the above. Step 4 – Based on the above Steps 1, 2, 3 suggest a final list of questions avoiding duplicates or semantically similar questions.”

Screenshot ChatGPT 4, April 2024

Generating Keyword Ideas Using ChatGPT Based On The Alphabet Soup Method

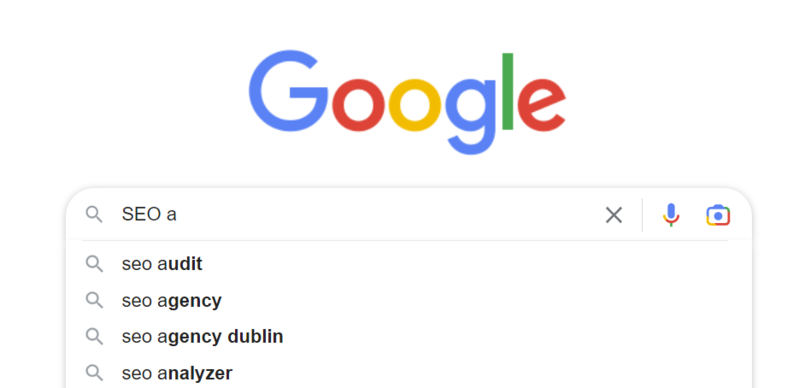

One of my favorite manual methods, which doesn’t even require a keyword research tool, is to generate keyword research ideas from Google autocomplete, going from A to Z.

Screenshot from Google autocomplete, April 2024

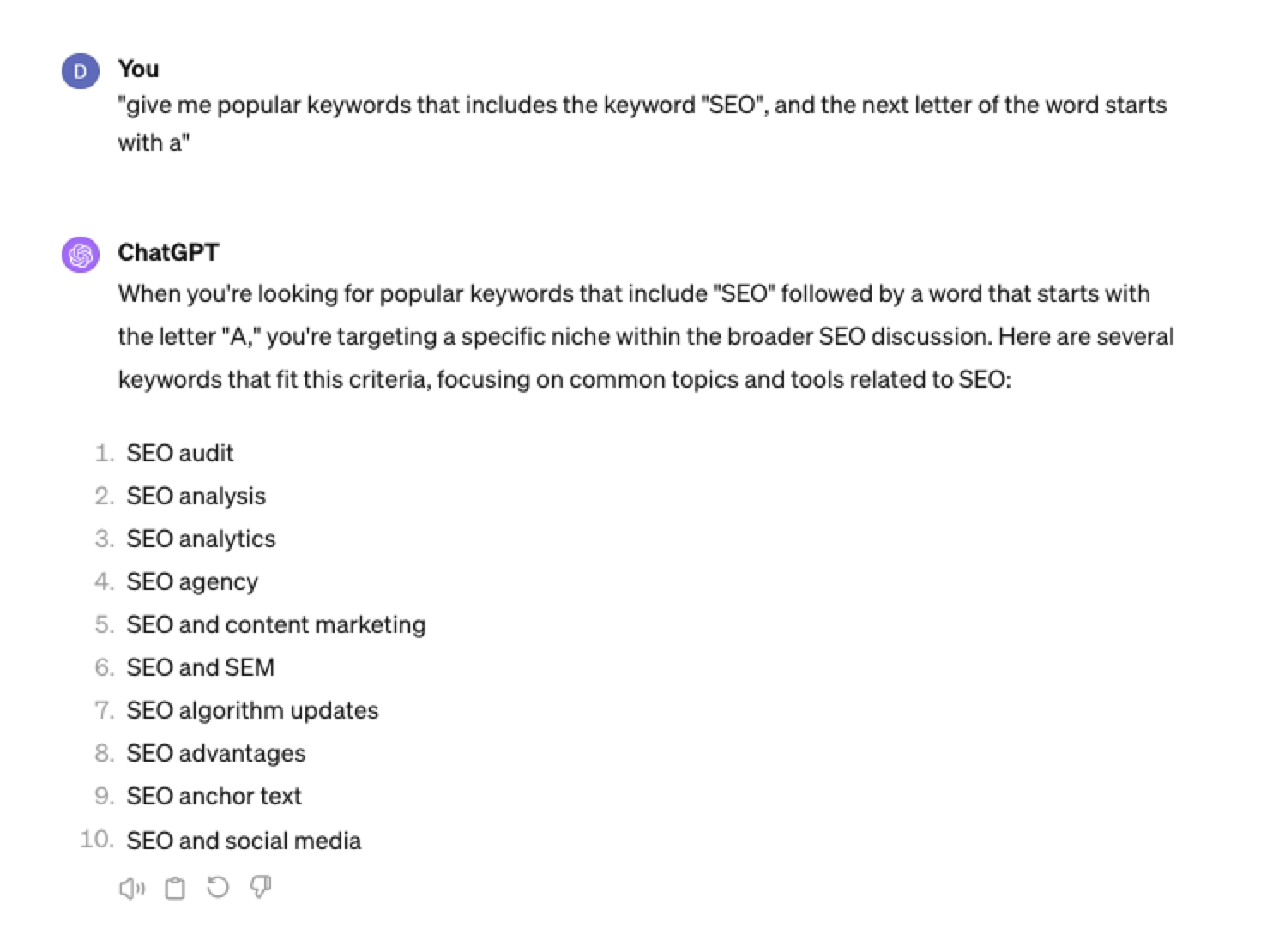

You can also do this using ChatGPT.

Example prompt:

“give me popular keywords that includes the keyword “SEO”, and the next letter of the word starts with a”

Screenshot from ChatGPT 4, April 2024

Tip: Using the onion prompting method above, we can combine all this in one prompt.

“Give me five popular keywords that include “SEO” in the word, and the following letter starts with a. Once the answer has been done, move on to giving five more popular keywords that include “SEO” for each letter of the alphabet b to z.”
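If you would rather send one prompt per letter (for example, to keep each response short), the A to Z prompts can be generated with a few lines of code. This is a minimal sketch; the `alphabet_soup_prompts` helper and its wording are assumptions modeled on the prompt above.

```python
import string


def alphabet_soup_prompts(seed: str, per_letter: int = 5) -> list:
    """Build one alphabet soup prompt for each letter from a to z."""
    return [
        f'Give me {per_letter} popular keywords that include "{seed}", '
        f"where the next word starts with the letter {letter}."
        for letter in string.ascii_lowercase
    ]


prompts = alphabet_soup_prompts("SEO")
print(prompts[0])
print(len(prompts), "prompts generated")
```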

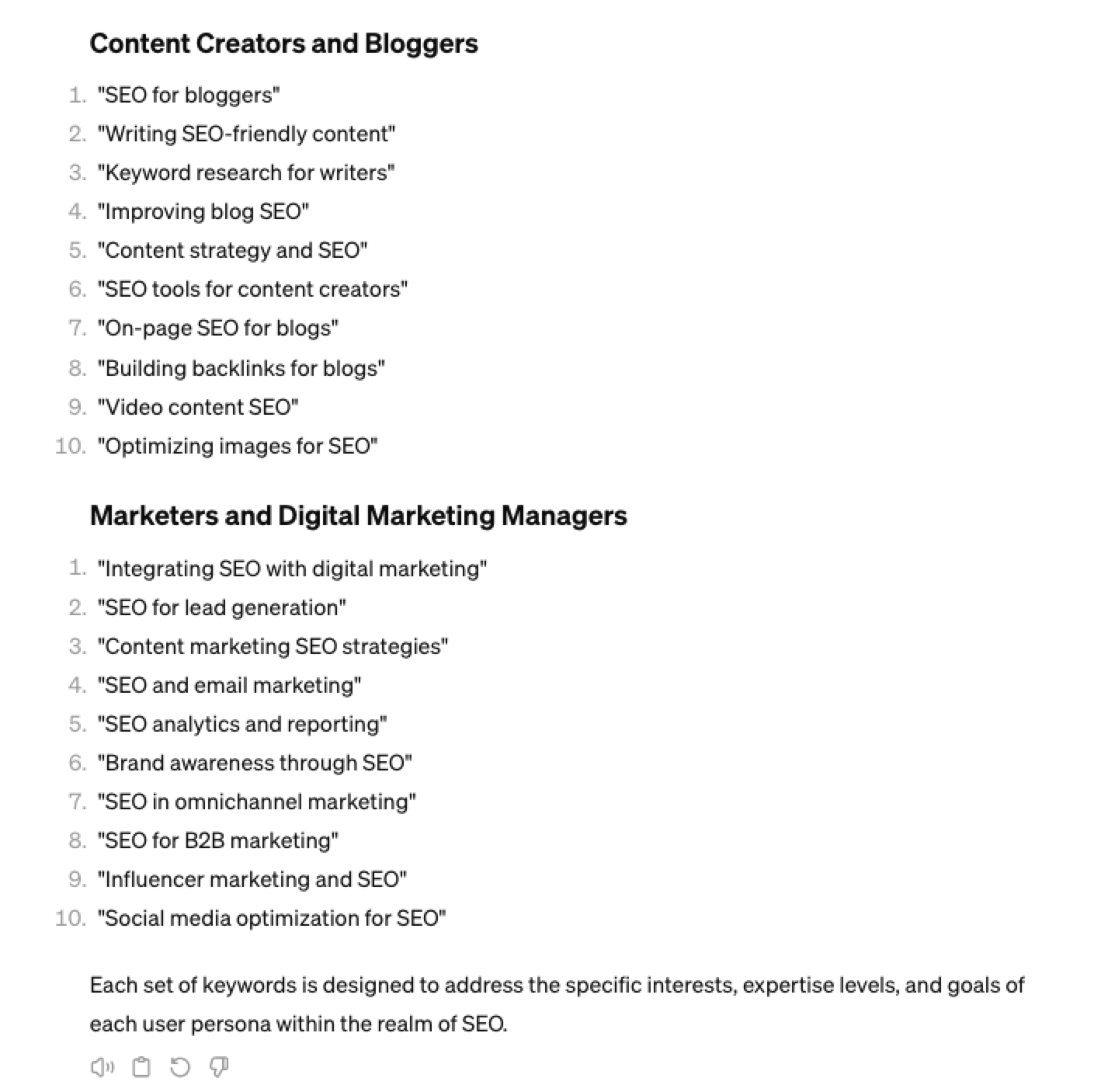

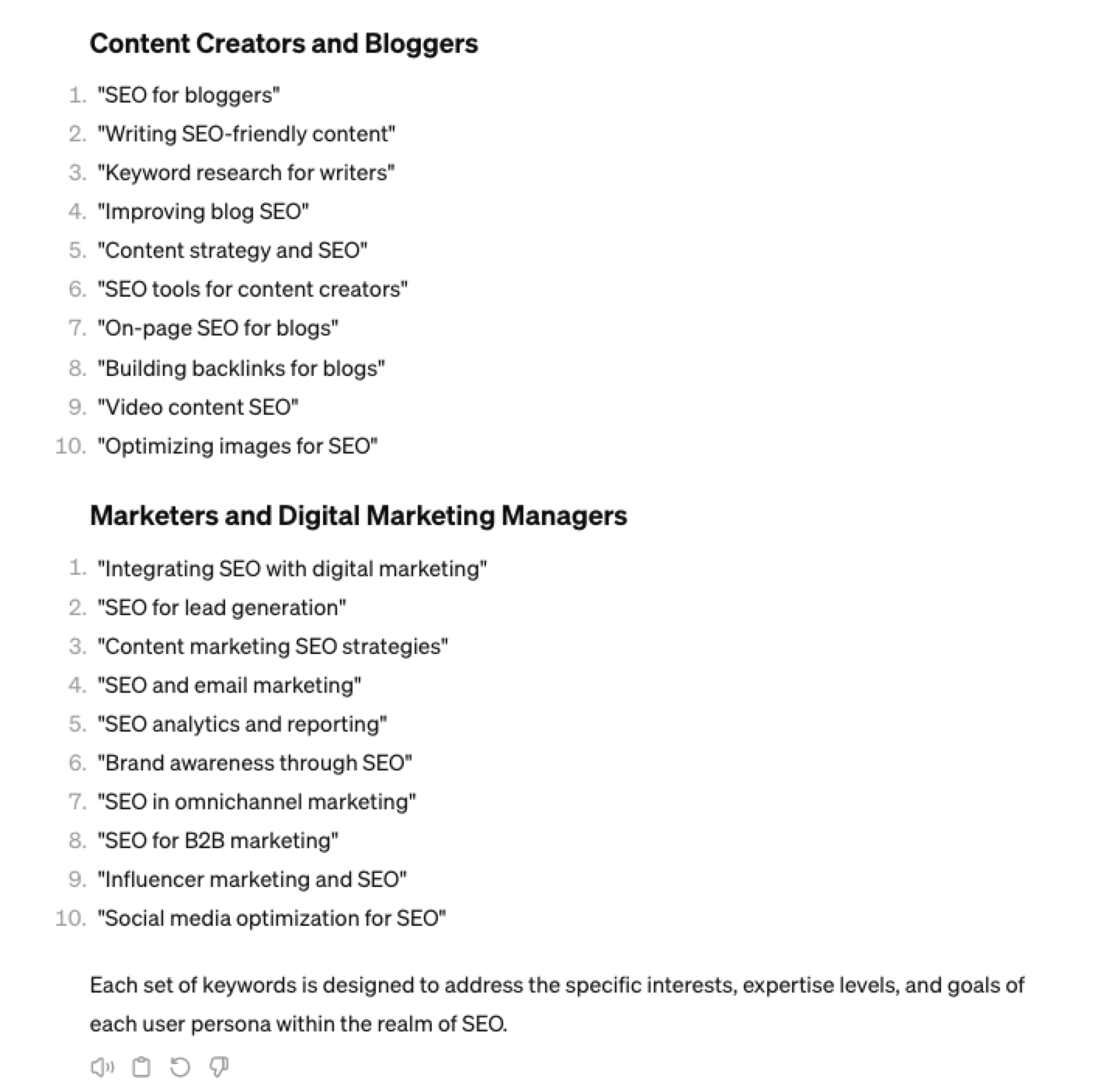

Generating Keyword Ideas Based On User Personas

When it comes to keyword research, understanding user personas is essential for understanding your target audience and keeping your keyword research focused and targeted. ChatGPT may help you get an initial understanding of customer personas.

Example prompt:

“For the topic of “{Topic}” list 10 keywords each for the different types of user personas”

Screenshot from ChatGPT 4, April 2024

You could even go a step further and ask for questions based on those topics that those specific user personas may be searching for:

Screenshot ChatGPT 4, April 2024

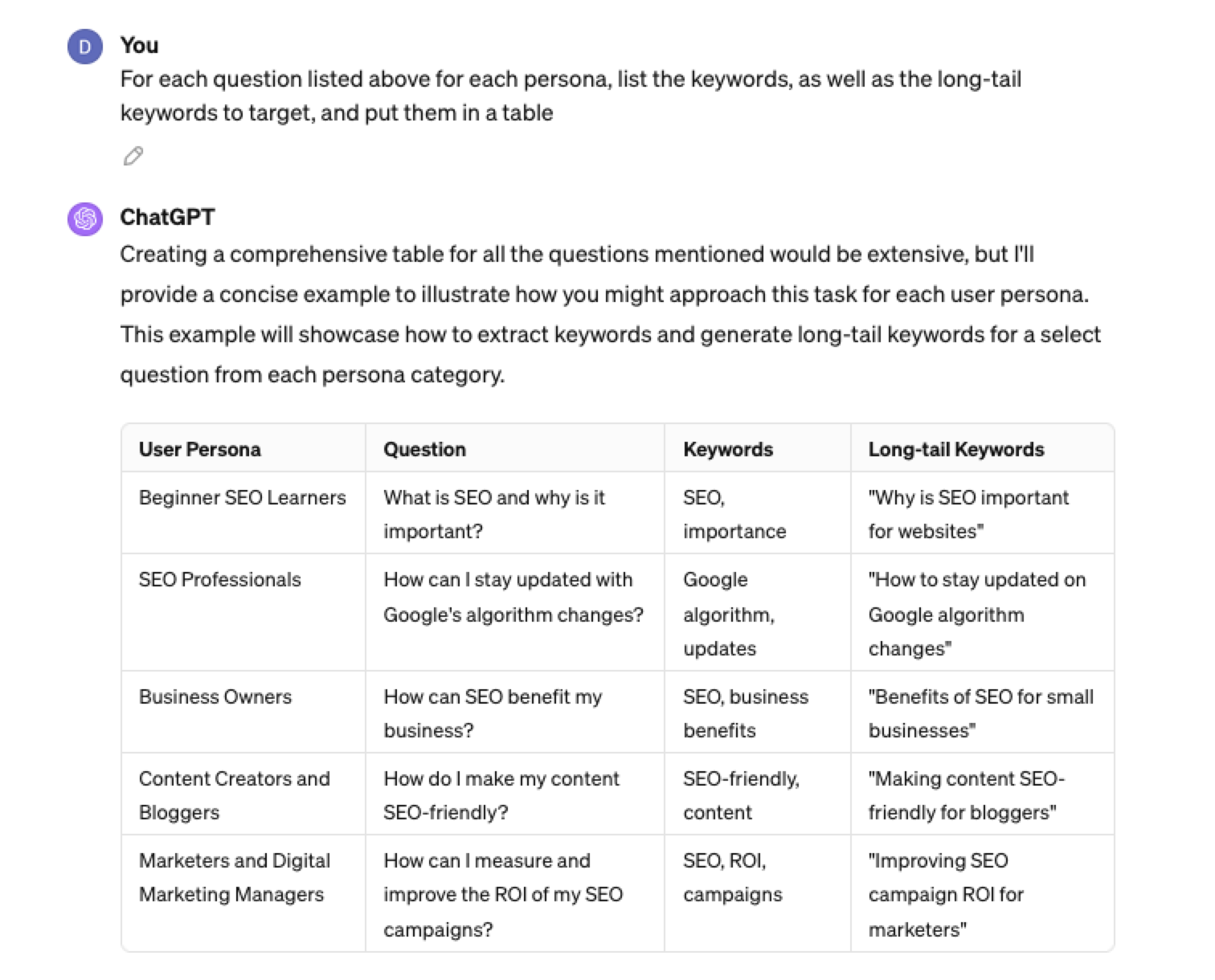

You can also get the keywords to target based on those questions:

“For each question listed above for each persona, list the keywords, as well as the long-tail keywords to target, and put them in a table”

Screenshot from ChatGPT 4, April 2024

Generating Keyword Ideas Using ChatGPT Based On Searcher Intent And User Personas

Understanding the keywords your target persona may be searching is the first step to effective keyword research. The next step is to understand the search intent behind those keywords and which content format may work best.

For example, a business owner who is new to SEO or has just heard about it may be searching for “what is SEO.”

However, if they are further down the funnel and in the navigational stage, they may search for “top SEO firms.”

You can query ChatGPT to inspire you here based on any topic and your target user persona.

SEO Example:

“For the topic of “{Topic}” list 10 keywords each for the different types of searcher intent that a {Target Persona} would be searching for”

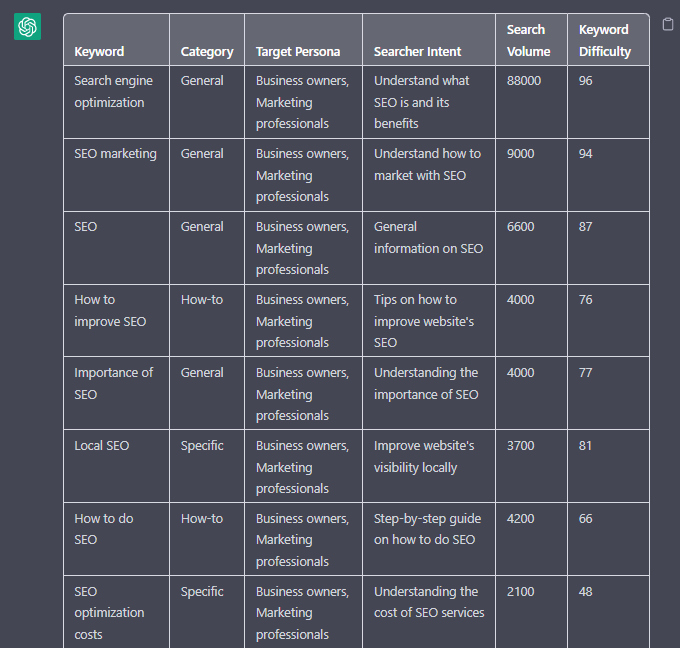

ChatGPT For Keyword Research Admin

Here is how you can best use ChatGPT for keyword research admin tasks.

Using ChatGPT As A Keyword Categorization Tool

One use case for ChatGPT is keyword categorization.

In the past, I would have had to devise spreadsheet formulas to categorize keywords or even spend hours filtering and manually categorizing keywords.

ChatGPT can be a great companion for running a short version of this for you.

Let’s say you have done keyword research in a keyword research tool, have a list of keywords, and want to categorize them.

You could use the following prompt:

“Filter the below list of keywords into categories, target persona, searcher intent, search volume and add information to a six-column table: List of keywords – [LIST OF KEYWORDS], Keyword Search Volume [SEARCH VOLUMES] and Keyword Difficulties [KEYWORD DIFFICULTIES].”

Screenshot from ChatGPT, April 2024

Tip: Add keyword metrics from the keyword research tools, as using the search volumes that a ChatGPT prompt may give you will be wildly inaccurate at best.
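One way to follow this tip is to fill the categorization prompt directly from your keyword tool’s data. The sketch below assumes each keyword is a dictionary with `keyword`, `volume`, and `difficulty` fields; those field names, the helper name, and the sample numbers are placeholders invented for illustration, not real metrics.

```python
def build_categorization_prompt(rows: list) -> str:
    """Fill the categorization prompt with metrics exported from a keyword tool."""
    keywords = ", ".join(row["keyword"] for row in rows)
    volumes = ", ".join(str(row["volume"]) for row in rows)
    difficulties = ", ".join(str(row["difficulty"]) for row in rows)
    return (
        "Filter the below list of keywords into categories, target persona, "
        "searcher intent, search volume and add information to a six-column table: "
        f"List of keywords – [{keywords}], "
        f"Keyword Search Volume [{volumes}] and "
        f"Keyword Difficulties [{difficulties}]."
    )


# Made-up placeholder values; replace them with figures from your own tool.
rows = [
    {"keyword": "what is seo", "volume": 1000, "difficulty": 80},
    {"keyword": "seo audit", "volume": 200, "difficulty": 45},
]
categorization_prompt = build_categorization_prompt(rows)
print(categorization_prompt)
```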

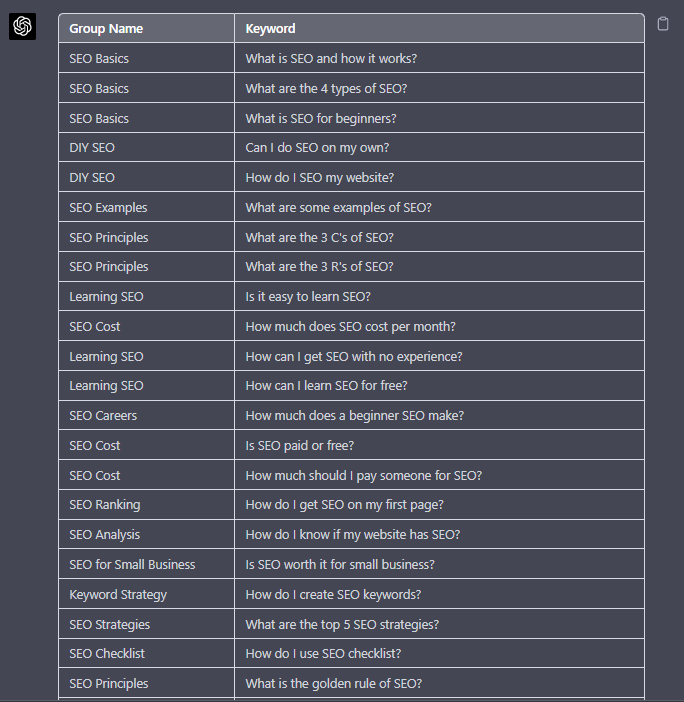

Using ChatGPT For Keyword Clustering

Another of ChatGPT’s use cases for keyword research is to help you cluster. Many keywords have the same intent, and by grouping related keywords, you may find that one piece of content can often target multiple keywords at once.

However, be careful not to rely only on LLM data for clustering. What ChatGPT may cluster as a similar keyword, the SERP or the user may not agree with. But it is a good starting point.

The big downside of using ChatGPT for keyword clustering is the amount of keyword data you can cluster at once, which is capped by its memory (context) limits.

So, you may find that a keyword clustering tool or script is better for large keyword clustering tasks. But for small amounts of keywords, ChatGPT is actually quite good.
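To give a sense of what such a script might look like, here is a deliberately simple sketch that groups keywords by the words they share. It measures word overlap only, not meaning or SERP similarity, so it is a rough stand-in rather than a replacement for a dedicated clustering tool. The function name and the 0.5 threshold are assumptions.

```python
def cluster_keywords(keywords: list, threshold: float = 0.5) -> list:
    """Greedily group keywords whose word overlap (Jaccard) meets the threshold."""
    clusters = []
    for keyword in keywords:
        words = set(keyword.lower().split())
        if not words:
            continue  # skip blank entries
        for cluster in clusters:
            # Compare against the first keyword in each existing cluster.
            representative = set(cluster[0].lower().split())
            overlap = len(words & representative) / len(words | representative)
            if overlap >= threshold:
                cluster.append(keyword)
                break
        else:
            clusters.append([keyword])
    return clusters


keywords = [
    "what is seo",
    "what is seo marketing",
    "seo audit checklist",
    "technical seo audit checklist",
    "link building",
]
clusters = cluster_keywords(keywords)
for group in clusters:
    print(group)
```

Because it runs locally, a script like this is not bound by a chat model’s input limits, but its groupings should still be checked against the SERP.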

A great small-scale keyword clustering use case for ChatGPT is grouping People Also Ask (PAA) questions.

Use the following prompt to group keywords based on their semantic relationships. For example:

“Organize the following keywords into groups based on their semantic relationships, and give a short name to each group: [LIST OF PAA], create a two-column table where each keyword sits on its own row.”

Screenshot from ChatGPT, April 2024

Using ChatGPT For Keyword Expansion By Patterns

One of my favorite methods of doing keyword research is pattern spotting.

Most seed keywords have a variable that can expand your target keywords.

Here are a few examples of patterns:

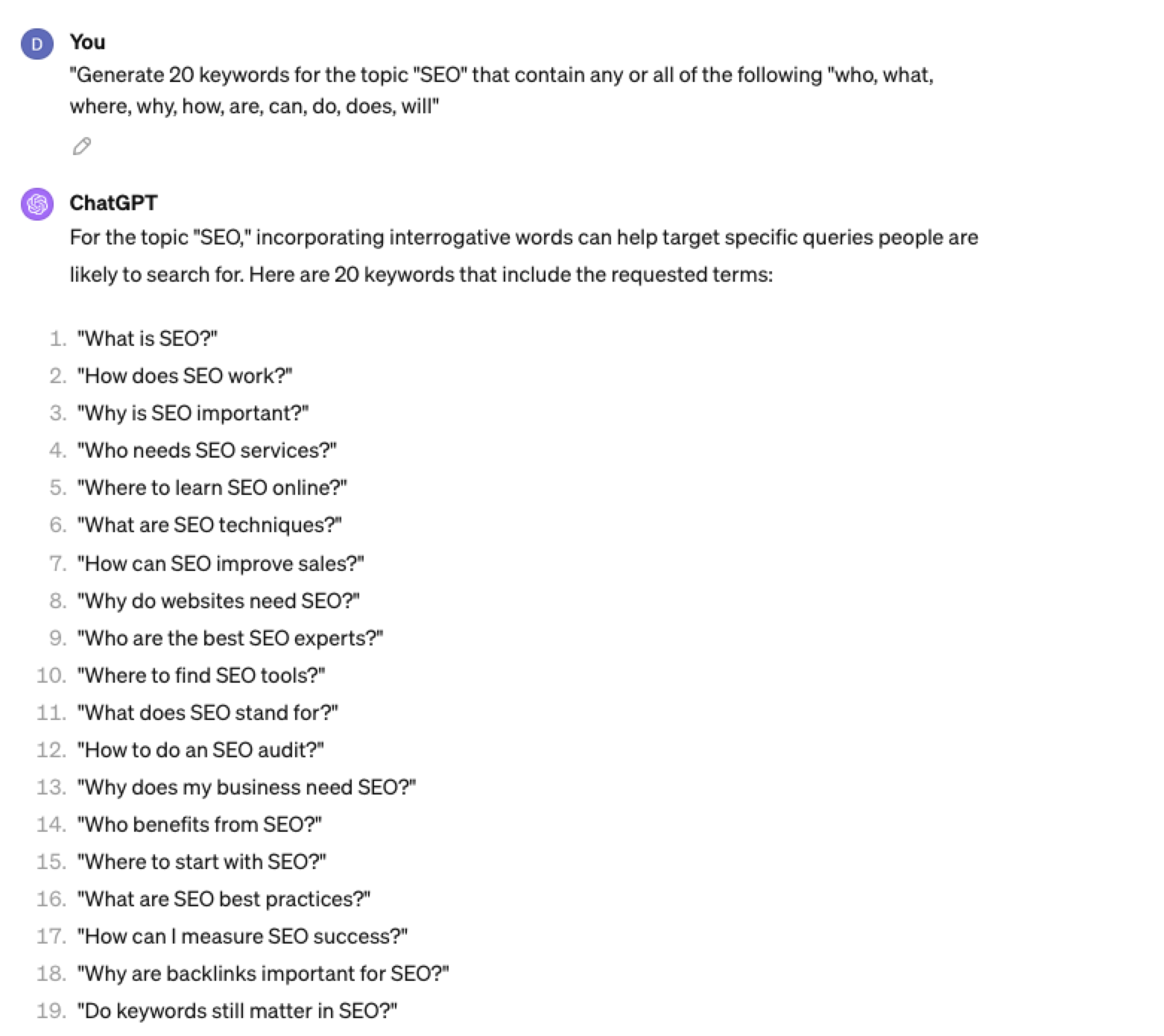

1. Question Patterns

(who, what, where, why, how, are, can, do, does, will)

“Generate [X] keywords for the topic “[Topic]” that contain any or all of the following “who, what, where, why, how, are, can, do, does, will”

Screenshot ChatGPT 4, April 2024

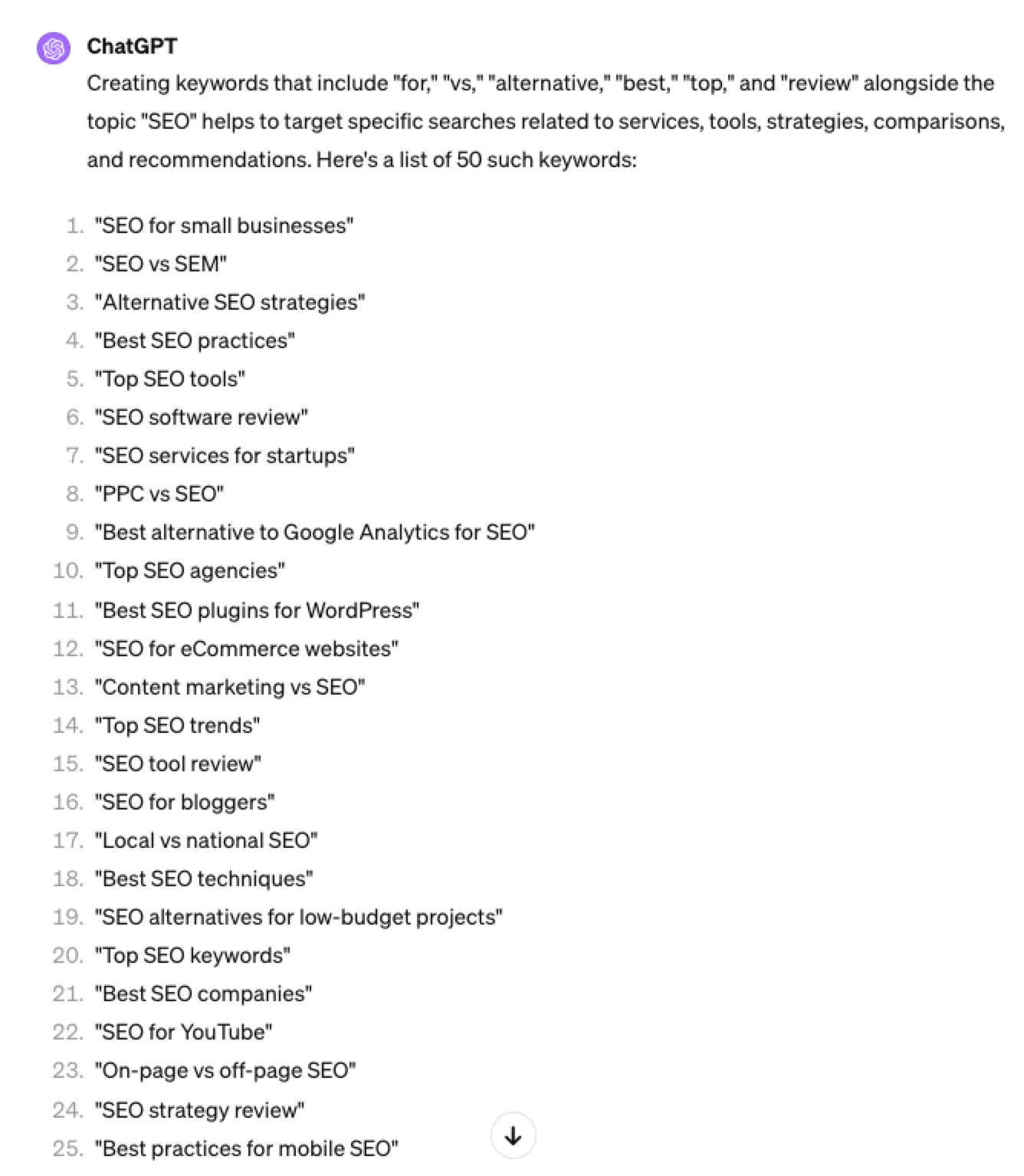

2. Comparison Patterns

Example:

“Generate 50 keywords for the topic “{Topic}” that contain any or all of the following “for, vs, alternative, best, top, review”

Screenshot ChatGPT 4, April 2024

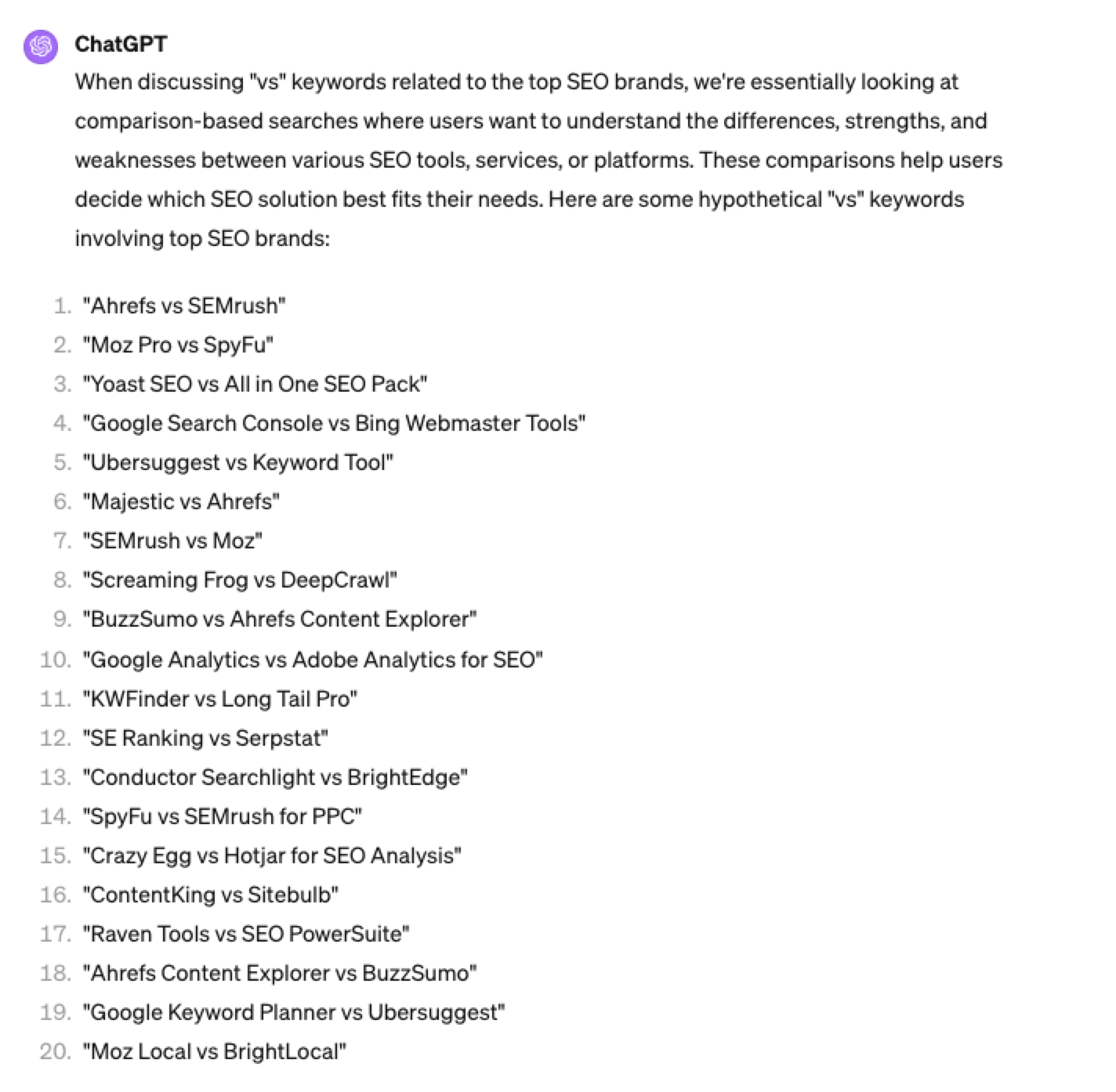

3. Brand Patterns

Another one of my favorite modifiers is a keyword by brand.

We are probably all familiar with the most popular SEO brands; however, if you aren’t, you could ask your AI friend to do the heavy lifting.

Example prompt:

“For the top {Topic} brands what are the top “vs” keywords”

Screenshot ChatGPT 4, April 2024

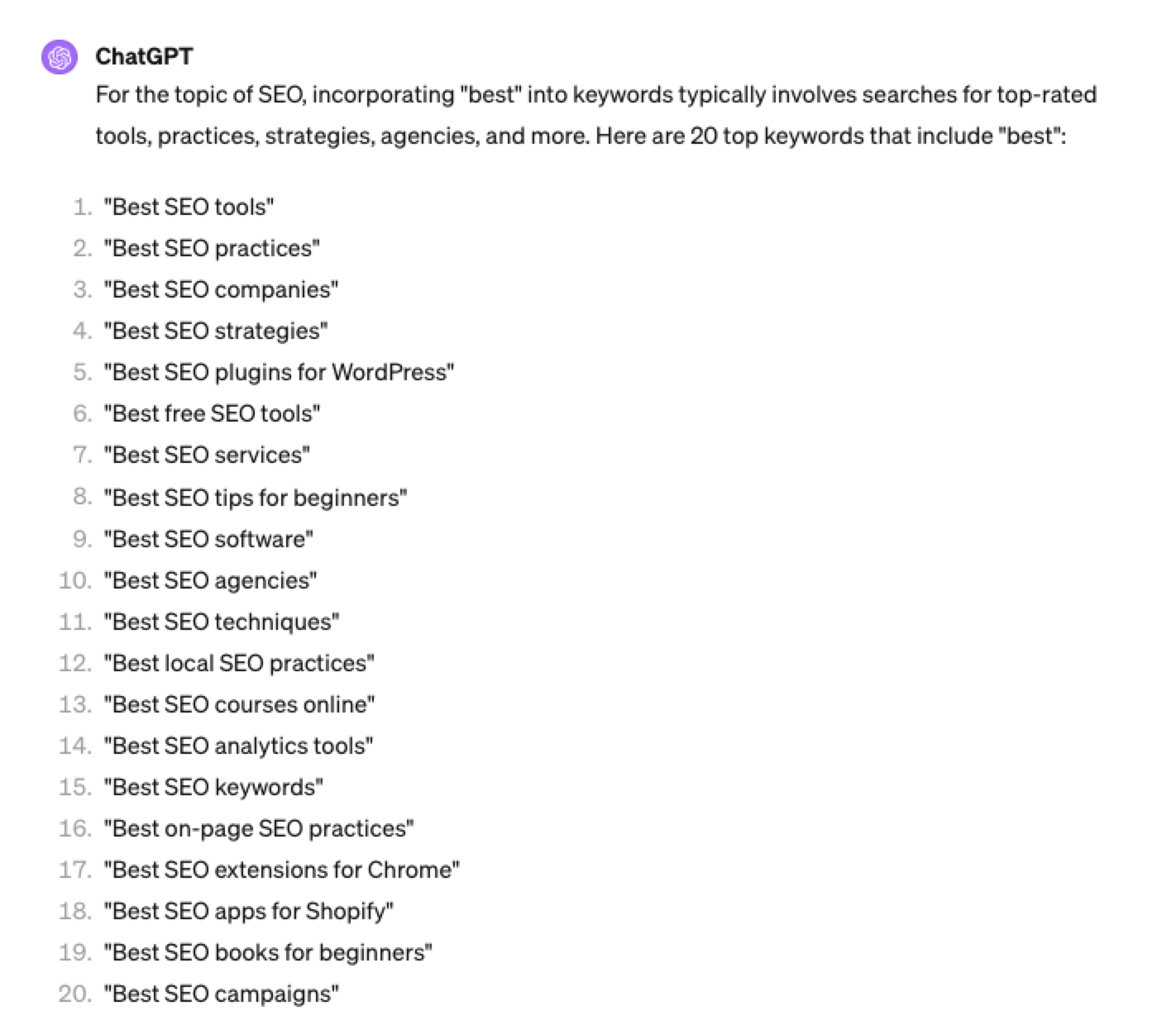

4. Search Intent Patterns

One of the most common search intent patterns is “best.”

When someone is searching for a “best {topic}” keyword, they are generally searching for a comprehensive list or guide that highlights the top options, products, or services within that specific topic, along with their features, benefits, and potential drawbacks, to make an informed decision.

Example:

“For the topic of “[Topic]” what are the 20 top keywords that include “best”

Screenshot ChatGPT 4, April 2024

Again, this guide to keyword research using ChatGPT has emphasized the ease of generating keyword research ideas by utilizing ChatGPT throughout the process.
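Pattern spotting can also be applied to a keyword list you already have. The sketch below tags each keyword with the pattern families it matches, using the question and comparison modifiers listed earlier; the `spot_patterns` helper is a hypothetical name, and matching on whole words is a simplification.

```python
import re

# Modifier lists taken from the question and comparison patterns above.
PATTERNS = {
    "question": ["who", "what", "where", "why", "how", "are", "can", "do", "does", "will"],
    "comparison": ["for", "vs", "alternative", "best", "top", "review"],
}


def spot_patterns(keyword: str) -> list:
    """Return the names of the pattern families whose modifiers appear in the keyword."""
    words = set(re.findall(r"[a-z0-9]+", keyword.lower()))
    return [name for name, modifiers in PATTERNS.items() if words & set(modifiers)]


for keyword in ["what is seo", "ahrefs vs semrush", "link building"]:
    print(keyword, "->", spot_patterns(keyword))
```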

Keyword Research Using ChatGPT Vs. Keyword Research Tools

Free Vs. Paid Keyword Research Tools

Like keyword research tools, ChatGPT has free and paid options.

However, one of the most significant drawbacks of using ChatGPT for keyword research alone is the absence of SEO metrics to help you make smarter decisions.

To improve accuracy, you could take the results it gives you and verify them with your classic keyword research tool – or vice versa, as shown above, uploading accurate data into the tool and then prompting.

However, you must consider how long it takes to type and fine-tune your prompt to get your desired data versus using the filters within popular keyword research tools.

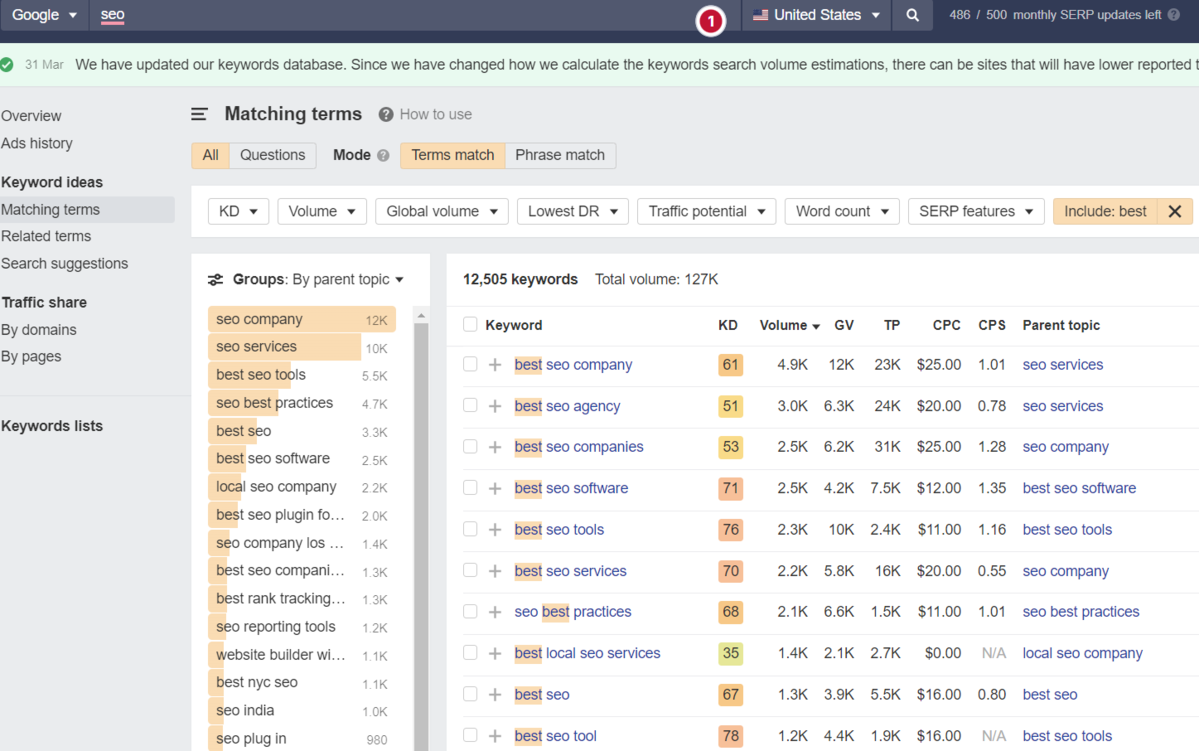

For example, by using the filters in a popular keyword research tool, you could have all of the “best” queries with all of their SEO metrics:

Screenshot from Ahrefs Keyword Explorer, March 2024

And unlike ChatGPT, generally, there is no token limit; you can extract several hundred, if not thousands, of keywords at a time.

As I have mentioned multiple times throughout this piece, you cannot blindly trust the data or SEO metrics it may attempt to provide you with.

The key is to validate the keyword research with a keyword research tool.
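As a rough illustration of that validation step, the sketch below keeps only the ChatGPT-suggested keywords that show search volume in a tool export. The CSV layout (`keyword` and `volume` columns), the `validate_keywords` helper, and the numbers are all assumptions made for the example; real exports differ by tool.

```python
import csv
import io

# Stand-in for a keyword tool export; the volumes are made-up placeholders.
TOOL_EXPORT = """keyword,volume
what is seo,1000
interstellar internet seo,0
"""


def validate_keywords(candidates: list, export_csv: str, min_volume: int = 10) -> list:
    """Keep only candidate keywords whose exported search volume meets the minimum."""
    volumes = {
        row["keyword"].strip().lower(): int(row["volume"])
        for row in csv.DictReader(io.StringIO(export_csv))
    }
    # Keywords missing from the export are treated as having no volume.
    return [
        keyword
        for keyword in candidates
        if volumes.get(keyword.strip().lower(), 0) >= min_volume
    ]


chatgpt_keywords = ["What is SEO", "Interstellar Internet SEO", "seo for martians"]
validated = validate_keywords(chatgpt_keywords, TOOL_EXPORT)
print(validated)
```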

ChatGPT For International SEO Keyword Research

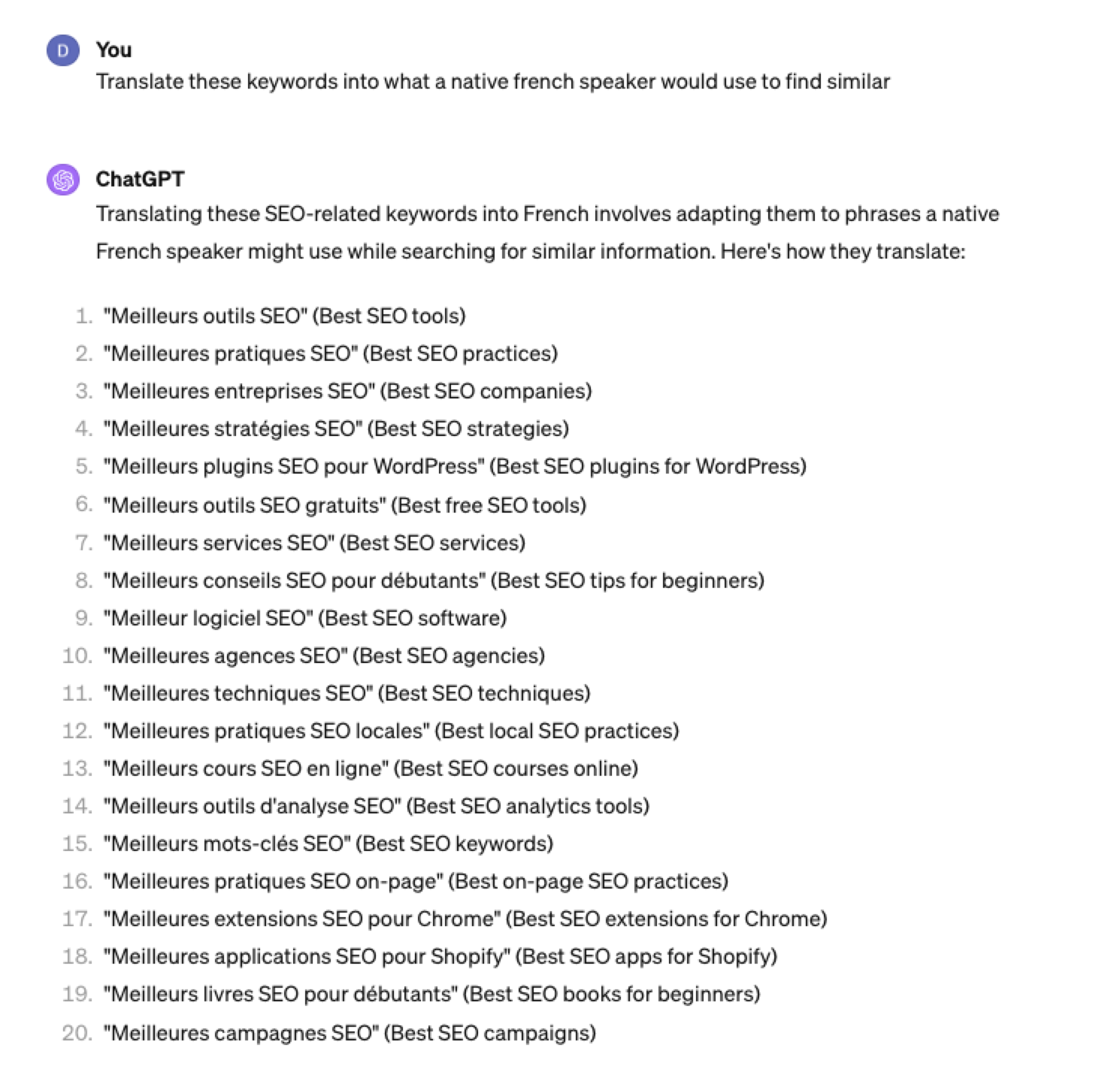

ChatGPT can be a terrific multilingual keyword research assistant.

For example, if you wanted to research keywords in a foreign language such as French, you could ask ChatGPT to translate your English keywords:

Screenshot from ChatGPT 4, April 2024

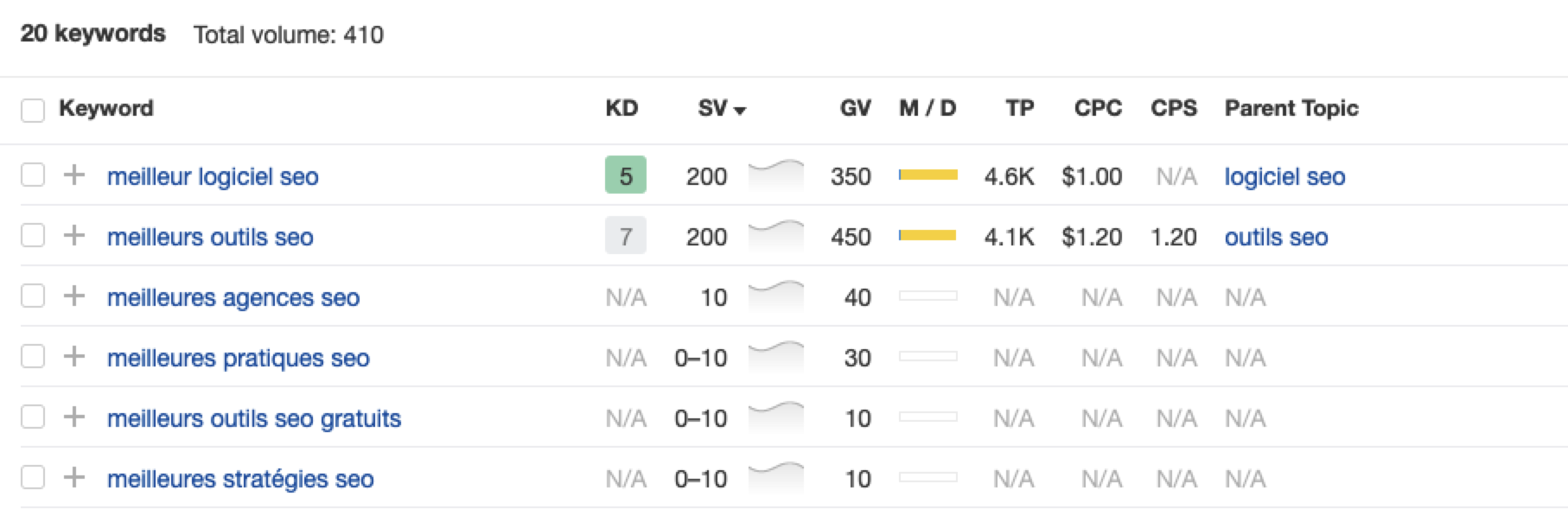

- The key is to take the data above and paste it into a popular keyword research tool to verify.

- As you can see below, many of the keyword translations for the English keywords do not have any search volume for direct translations in French.

Screenshot from Ahrefs Keyword Explorer, April 2024

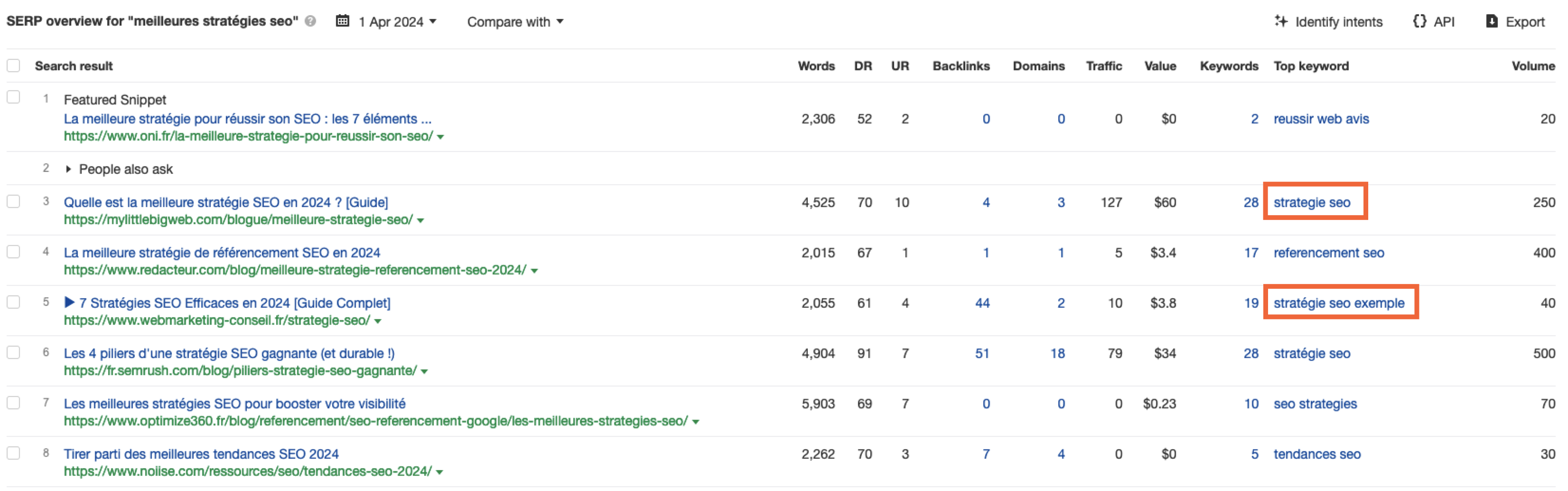

But don’t worry, there is a workaround: If you have access to a competitor keyword research tool, you can see what webpage is ranking for that query – and then identify the top keyword for that page based on the ChatGPT translated keywords that do have search volume.

Screenshot from Ahrefs Keyword Explorer, April 2024

Or, if you don’t have access to a paid keyword research tool, you could always take the top-performing result, extract the page copy, and then ask ChatGPT what the primary keyword for the page is.

Key Takeaway

ChatGPT can be an expert on any topic and an invaluable keyword research tool. However, it is another tool to add to your toolbox when doing keyword research; it does not replace traditional keyword research tools.

As shown throughout this tutorial, from making up keywords at the beginning to inaccuracies around data and translations, ChatGPT can make mistakes when used for keyword research.

You cannot blindly trust the data you get back from ChatGPT.

However, it can offer a shortcut to understanding any topic for which you need to do keyword research and, as a result, save you countless hours.

But the key is how you prompt.

The prompts I shared with you above will help you understand a topic in minutes instead of hours and allow you to better seed keywords using keyword research tools.

It can even replace mundane keyword clustering tasks that you used to do with formulas in spreadsheets or generate ideas based on keywords you give it.

Paired with traditional keyword research tools, ChatGPT for keyword research can be a powerful tool in your arsenal.

Featured Image: Tatiana Shepeleva/Shutterstock

OpenAI Expected to Integrate Real-Time Data In ChatGPT

Sam Altman, CEO of OpenAI, dispelled rumors that a new search engine would be announced on Monday, May 13. Recent deals have raised the expectation that OpenAI will announce the integration of real-time content from English, Spanish, and French publications into ChatGPT, complete with links to the original sources.

OpenAI Search Is Not Happening

Many competing search engines have tried and failed to challenge Google as the leading search engine. A new wave of hybrid generative AI search engines is currently trying to knock Google from the top spot with arguably very little success.

Sam Altman is on record saying that creating a search engine to compete against Google is not a viable approach. He suggested that technological disruption was the way to replace Google by changing the search paradigm altogether. The speculation that Altman is going to announce a me-too search engine on Monday never made sense given his recent history of dismissing the concept as a non-starter.

So perhaps it’s not a surprise that he recently ended the speculation by explicitly saying that he will not be announcing a search engine on Monday.

He tweeted:

“not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me.”

“New Stuff” May Be Iterative Improvement

It’s quite likely that what’s going to be announced is iterative, which means it improves ChatGPT rather than replacing it. This fits with how Altman recently described his approach to ChatGPT.

He remarked:

“And it does kind of suck to ship a product that you’re embarrassed about, but it’s much better than the alternative. And in this case in particular, where I think we really owe it to society to deploy iteratively.

There could totally be things in the future that would change where we think iterative deployment isn’t such a good strategy, but it does feel like the current best approach that we have and I think we’ve gained a lot from from doing this and… hopefully the larger world has gained something too.”

Improving ChatGPT iteratively is Sam Altman’s preference and recent clues point to what those changes may be.

Recent Deals Contain Clues

OpenAI has been making deals with news media and User Generated Content publishers since December 2023. Mainstream media has reported these deals as being about licensing content for training large language models. But they overlooked a key detail that we reported on last month: these deals give OpenAI access to real-time information, which OpenAI stated will be attributed to its sources in the form of links.

That means that ChatGPT users will gain the ability to access real-time news and to use that information creatively within ChatGPT.

Dotdash Meredith Deal

Dotdash Meredith (DDM) is the publisher of big brand publications such as Better Homes & Gardens, FOOD & WINE, InStyle, Investopedia, and People magazine. The deal that was announced goes way beyond using the content as training data. The deal is explicitly about surfacing the Dotdash Meredith content itself in ChatGPT.

The announcement stated:

“As part of the agreement, OpenAI will display content and links attributed to DDM in relevant ChatGPT responses. …This deal is a testament to the great work OpenAI is doing on both fronts to partner with creators and publishers and ensure a healthy Internet for the future.

Over 200 million Americans each month trust our content to help them make decisions, solve problems, find inspiration, and live fuller lives. This partnership delivers the best, most relevant content right to the heart of ChatGPT.”

A statement from OpenAI gives credibility to the speculation that OpenAI intends to directly show licensed third-party content as part of ChatGPT answers.

OpenAI explained:

“We’re thrilled to partner with Dotdash Meredith to bring its trusted brands to ChatGPT and to explore new approaches in advancing the publishing and marketing industries.”

Something that DDM also gets out of this deal is that OpenAI will enhance DDM’s in-house ad targeting in order to show more tightly focused contextual advertising.

Le Monde And Prisa Media Deals

In March 2024, OpenAI announced a deal with two global media companies, Le Monde and Prisa Media. Le Monde is a French news publication and Prisa Media is a Spanish-language multimedia company. The interesting aspect of these two deals is that they give OpenAI access to real-time data in French and Spanish.

Prisa Media is a global Spanish-language media company based in Madrid, Spain, that comprises magazines, newspapers, podcasts, radio stations, and television networks. Its reach extends from Spain to the Americas, where its media companies include publications in the United States, Argentina, Bolivia, Chile, Colombia, Costa Rica, Ecuador, Mexico, and Panama. That is a massive amount of real-time information in addition to a massive audience of millions.

OpenAI explicitly announced that the purpose of this deal was to bring this content directly to ChatGPT users.

The announcement explained:

“We are continually making improvements to ChatGPT and are supporting the essential role of the news industry in delivering real-time, authoritative information to users. …Our partnerships will enable ChatGPT users to engage with Le Monde and Prisa Media’s high-quality content on recent events in ChatGPT, and their content will also contribute to the training of our models.”

That deal is not just about training data. It’s about bringing current events data to ChatGPT users.

The announcement elaborated in more detail:

“…our goal is to enable ChatGPT users around the world to connect with the news in new ways that are interactive and insightful.”

As noted in our April 30th article that revealed that OpenAI will show links in ChatGPT, OpenAI intends to show third party content with links to that content.

OpenAI commented on the purpose of the Le Monde and Prisa Media partnership:

“Over the coming months, ChatGPT users will be able to interact with relevant news content from these publishers through select summaries with attribution and enhanced links to the original articles, giving users the ability to access additional information or related articles from their news sites.”

There are additional deals with other groups, like The Financial Times, which also stress that the deal will result in a new ChatGPT feature that allows users to interact with real-time news and current events.

OpenAI’s Monday May 13 Announcement

There are many clues that the announcement on Monday will be that ChatGPT users will gain the ability to interact with content about current events. This fits the terms of recent deals with news media organizations. Other features may be announced as well, but this is the one that many clues point to.

Watch Altman’s interview at Stanford University

Featured Image by Shutterstock/photosince

SEO

Google’s Strategies For Dealing With Content Decay

In the latest episode of the Search Off The Record podcast, Google Search Relations team members John Mueller and Lizzi Sassman did a deep dive into dealing with “content decay” on websites.

Outdated content is a natural issue all sites face over time, and Google has outlined strategies beyond just deleting old pages.

While removing stale content is sometimes necessary, Google recommends taking an intentional, format-specific approach to tackling content decay.

Archiving vs. Transitional Guides

Google advises against immediately removing content that becomes obsolete, like materials referencing discontinued products or services.

Removing content too soon could confuse readers and lead to a poor experience, Sassman explains:

“So, if I’m trying to find out like what happened, I almost need that first thing to know. Like, “What happened to you?” And, otherwise, it feels almost like an error. Like, “Did I click a wrong link or they redirect to the wrong thing?””

Sassman says you can avoid confusion by providing transitional “explainer” pages during deprecation periods.

A temporary transition guide tells readers that the content they were looking for is outdated while steering them toward updated resources.

Sassman continues:

“That could be like an intermediary step where maybe you don’t do that forever, but you do it during the transition period where, for like six months, you have them go funnel them to the explanation, and then after that, all right, call it a day. Like enough people know about it. Enough time has passed. We can just redirect right to the thing and people aren’t as confused anymore.”
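The transition period Sassman describes can be sketched as a small routing rule. This is only an illustration, not Google's implementation: the function name `redirect_target`, the example URL paths, and the 183-day window (an approximation of "like six months") are all assumptions made for this sketch.

```python
from datetime import date, timedelta

# Assumed length of the transition period ("like six months" in the quote).
TRANSITION_PERIOD = timedelta(days=183)


def redirect_target(deprecated_on: date, today: date,
                    explainer_url: str, replacement_url: str) -> str:
    """Return where a deprecated URL should redirect on a given day.

    During the transition period visitors are sent to an explainer page;
    once the period has passed they go straight to the replacement content.
    """
    if today < deprecated_on + TRANSITION_PERIOD:
        return explainer_url
    return replacement_url


if __name__ == "__main__":
    deprecated = date(2024, 1, 1)  # hypothetical deprecation date
    # Two months in: still inside the transition window.
    print(redirect_target(deprecated, date(2024, 3, 1), "/what-happened", "/new-guide"))
    # Eight months in: the window has passed.
    print(redirect_target(deprecated, date(2024, 9, 1), "/what-happened", "/new-guide"))
```

In practice the same decision could live in a server redirect map or CMS rule; the point is only that the explainer step is time-boxed rather than permanent.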

When To Update Vs. When To Write New Content

For reference guides and content that provide authoritative overviews, Google suggests updating information to maintain accuracy and relevance.

However, for archival purposes, major updates may warrant creating a new piece instead of editing the original.

Sassman explains:

“I still want to retain the original piece of content as it was, in case we need to look back or refer to it, and to change it or rehabilitate it into a new thing would almost be worth republishing as a new blog post if we had that much additional things to say about it.”

Remove Potentially Harmful Content

Google recommends removing pages in cases where the outdated information is potentially harmful.

Sassman says she arrived at this conclusion when deciding what to do with a guide involving obsolete structured data:

“I think something that we deleted recently was the “How to Structure Data” documentation page, which I thought we should just get rid of it… it almost felt like that’s going to be more confusing to leave it up for a period of time.

And actually it would be negative if people are still adding markup, thinking they’re going to get something. So what we ended up doing was just delete the page and redirect to the changelog entry so that, if people clicked “How To Structure Data” still, if there was a link somewhere, they could still find out what happened to that feature.”

Internal Auditing Processes

To keep your content current, Google advises implementing a system for auditing aging content and flagging it for review.

Sassman says she sets automated alerts for pages that haven’t been checked in set periods:

“Oh, so we have a little robot to come and remind us, “Hey, you should come investigate this documentation page. It’s been x amount of time. Please come and look at it again to make sure that all of your links are still up to date, that it’s still fresh.””
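A reminder "robot" like the one Sassman mentions could be approximated with a short script that flags pages whose last review is older than a chosen threshold. The sketch below is hypothetical: the `Page` record, the `pages_due_for_review` function, the 180-day default, and the sample URLs are assumptions for illustration, and the podcast does not describe how Google's own tool works.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Page:
    url: str
    last_reviewed: date  # date someone last checked the page


def pages_due_for_review(pages, today, max_age=timedelta(days=180)):
    """Return pages whose last review is older than max_age, oldest first."""
    due = [p for p in pages if today - p.last_reviewed > max_age]
    return sorted(due, key=lambda p: p.last_reviewed)


if __name__ == "__main__":
    # Hypothetical inventory; a real one might come from a CMS export.
    inventory = [
        Page("/docs/structured-data", date(2023, 6, 1)),
        Page("/docs/sitemaps", date(2024, 4, 1)),
        Page("/docs/robots", date(2023, 1, 15)),
    ]
    for page in pages_due_for_review(inventory, today=date(2024, 5, 15)):
        print(f"Please review {page.url} (last checked {page.last_reviewed})")
```

Run on a schedule, a script like this could post its output to a team channel or ticket queue so that aging pages get a periodic freshness check.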

Context Is Key

Google’s tips for dealing with content decay center around understanding the context of outdated materials.

You want to prevent visitors from stumbling across obsolete pages with nothing to tell them the information is out of date.

Additional Google-recommended tactics include:

- Prominent banners or notices clarifying a page’s dated nature

- Listing original publish dates

- Providing inline annotations explaining how older references or screenshots may be obsolete

How This Can Help You

Following Google’s recommendations for tackling content decay can benefit you in several ways:

- Improved user experience: By providing clear explanations, transition guides, and redirects, you can ensure that visitors don’t encounter confusing or broken pages.

- Maintained trust and credibility: Removing potentially harmful or inaccurate content and keeping your information up-to-date demonstrates your commitment to providing reliable and trustworthy resources.

- Better SEO: Regularly auditing and updating your pages can benefit your website’s search rankings and visibility.

- Archival purposes: By creating new content instead of editing older pieces, you can maintain a historical record of your website’s evolution.

- Streamlined content management: Implementing internal auditing processes makes it easier to identify and address outdated or problematic pages.

By proactively tackling content decay, you can keep your website a valuable resource, improve SEO, and maintain an organized content library.


Featured Image: Stokkete/Shutterstock
