SEO

Google Tag Manager Contains Hidden Data Leaks & Vulnerabilities

Researchers uncover data leaks in Google Tag Manager (GTM) as well as security vulnerabilities, arbitrary script injections and instances of consent for data collection enabled by default. A legal analysis identifies potential violations of EU data protection law.

There are many troubling revelations including that server-side GTM “obstructs compliance auditing endeavors from regulators, data protection officers, and researchers…”

GTM, developed by Google in 2012 to assist publishers in implementing third-party JavaScript scripts, is currently used on as many as 28 million websites. The research study evaluates both versions of GTM, the Client-side and the newer Server-side GTM that was introduced in 2020.

The analysis, undertaken by researchers and legal experts, revealed a number of issues inherent to the GTM architecture.

An examination of 78 Client-side Tags, 8 Server-side Tags, and two Consent Management Platforms (CMPs), revealed hidden data leaks, instances of Tags bypassing GTM permission systems in order to inject scripts, and consent set to enabled by default without any user interaction.

A significant finding pertains to the Server-side GTM. Server-side GTM works by loading and executing tags on a remote server, which creates the perception of the absence of third parties on the website.

However, the study showed that this architecture allows tags running on the server to clandestinely share users’ data with third parties, circumventing browser restrictions and security measures like the Content-Security-Policy (CSP).
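To make the CSP point concrete, here is a minimal sketch of a toy connect-src check. It is not how a browser implements CSP: it only handles 'self' and exact origins, and it ignores wildcards, paths, and the fallback to default-src. The hostnames gtm.example.com and tracker.example.net are hypothetical.

```python
from urllib.parse import urlparse


def allowed_by_connect_src(csp: str, url: str, page_origin: str) -> bool:
    """Toy check of a URL against the connect-src directive of a CSP string.

    Simplified on purpose: supports only 'self' and exact scheme://host
    sources, and does not fall back to default-src.
    """
    directives = {}
    for part in csp.split(";"):
        tokens = part.split()
        if tokens:
            directives[tokens[0].lower()] = tokens[1:]

    parsed = urlparse(url)
    origin = f"{parsed.scheme}://{parsed.netloc}"
    for source in directives.get("connect-src", []):
        if source == "'self'" and origin == page_origin:
            return True
        if source == origin:
            return True
    return False


# Hypothetical publisher page and policy (assumptions for illustration).
PAGE = "https://example.com"
CSP = "default-src 'self'; connect-src 'self' https://gtm.example.com"

# Browser -> the publisher's own server-side GTM endpoint: permitted.
first_party = allowed_by_connect_src(CSP, "https://gtm.example.com/collect", PAGE)
# Browser -> a third party directly: not permitted by this policy.
third_party = allowed_by_connect_src(CSP, "https://tracker.example.net/pixel", PAGE)

print(first_party, third_party)
```

The policy only governs requests the browser makes. Whatever the server at the first-party endpoint forwards onward happens outside the browser, so a check like this never sees it.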

Methodology Used In Research On GTM Data Leaks

The researchers are from Centre Inria de l’Université, Centre Inria d’Université Côte d’Azur, Centre Inria de l’Université, and Utrecht University.

The methodology used by the researchers was to buy a domain and install GTM on a live website.

The research paper explains in detail:

“To conduct experiments and set up the GTM infrastructure, we bought a domain – we call it example.com here – and created a public website containing one basic webpage with a paragraph of text and an HTML login form. We have included a login form since Senol et al. …have recently found that user input is often leaked from the forms, so we decided to test whether Tags may be responsible for such leakage.

The website and the Server-side GTM infrastructure were hosted on a virtual machine we rented on the Microsoft Azure cloud computing platform located in a data center in the EU.

…We used the ‘profiles’ functionality of the browser to start every experiment in a fresh environment, devoid from cookies, local storage and other technologies than maintain a state.

The browser, visiting the website, was run on a computer connected to the Internet through an institutional network in the EU.

To create Client- and Server-side GTM installations, we created a new Google account, logged into it and followed the suggested steps in the official GTM documentation.”

The results of the analysis contain multiple critical findings, including that the “Google Tag” facilitates collecting multiple types of users’ data without consent and at the time of analysis it presented a security vulnerability.

Data Collection Is Hidden From Publishers

Another discovery was the extent of data collection by the “Pinterest Tag,” which garnered a significant amount of user data without disclosing it to the Publisher.

What some may find disturbing is that publishers who deploy these tags may be unaware of the data leaks, and that the tools they rely on to monitor data collection don’t notify them of these issues.

The researchers documented their findings:

“We observe that the data sent by the Pinterest Tag is not visible to the Publisher on the Pinterest website, where we logged in to observe Pinterest’s disclosure about collected data.

Moreover, we find that the data collected by the Google Tag about form interaction is not shown in the Google Analytics dashboard.

This finding demonstrates that for such Tags, Publishers are not aware of the data collected by the Tags that they select.”

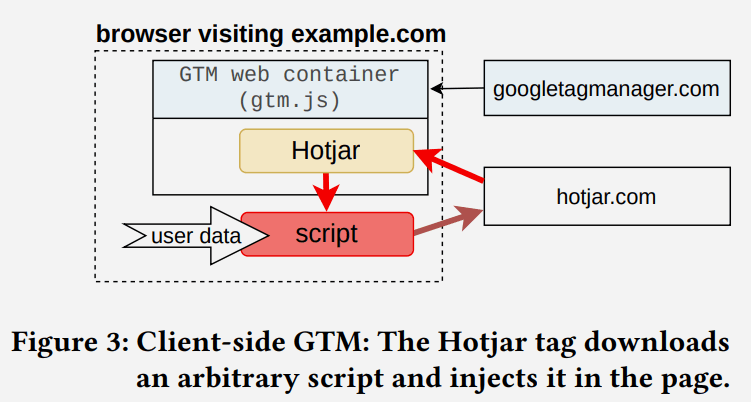

Injections of Third Party Scripts

Google Tag Manager has a feature for controlling tags, including third party tags, called Web Containers. The tags can run inside a sandbox that limits their functionalities. The sandbox also uses a permission system with one permission called inject_script that allows a script to download and run any (arbitrary) script outside of the Web Container.

The inject_script permission allows the tag to bypass the GTM permission system to gain access to all browser APIs and DOM.

Screenshot Illustrating Script Injection

The researchers analyzed 78 officially supported Client-side tags and discovered 11 tags that don’t have the inject_script permission but can inject arbitrary scripts. Seven of those eleven tags were provided by Google.

They write:

“11 out of 78 official Client-side tags inject a third-party script into the DOM bypassing the GTM permission system; and GTM “Consent Mode” enables some of the consent purposes by default, even before the user has interacted with the consent banner.”

The situation is even worse because it’s not just a privacy vulnerability, it’s also a security vulnerability.

The research paper explains the meaning of what they uncovered:

“This finding shows that the GTM permission system implemented in the Web Container sandbox allows Tags to insert arbitrary, uncontrolled scripts, thus opening potential security and privacy vulnerabilities to the website. We have disclosed this finding to Google via their Bug Bounty online system.”

Consent Management Platforms (CMP)

Consent Management Platforms (CMP) are a technology for managing what consent users have granted in terms of their privacy. This is a way to manage ad personalization, user data storage, analytics data storage and so on.

Google’s documentation for CMP usage states that setting the consent mode defaults is the responsibility of the marketers and publishers who use the GTM.

The defaults can be set to deny ad personalization by default, for example.

The documentation states:

“Set consent defaults

We recommend setting a default value for each consent type you are using. The consent state values in this article are only examples. You are responsible for making sure that default consent mode is set for each of your measurement products to match your organization’s policy.”

What the researchers discovered is that the consent variables for Client-side GTM start in an undefined state on the webpage, which becomes problematic when a CMP does not set a default value for every variable (the ones left unset are referred to as undefined variables).

The problem is that GTM considers undefined variables to mean that users have given their consent to all of the undefined variables, even though the user has not consented in any way.

The researchers explained what’s happening:

“Surprisingly, in this case, GTM considers all such undefined variables to be accepted by the end user, even though the end user has not interacted with the consent banner of the CMP yet.

Among two CMPs tested (see §3.1.1), we detected this behavior for the Consentmanager CMP.

This CMP sets a default value to only two consent variables – analytics_storage and ad_storage – leaving three GTM consent variables – security_storage, personalization_storage, functionality_storage – and consent variables specific to this CMP – e.g., cmp_purpose_c56 which corresponds to the “Social Media” purpose – in undefined state.

These extra variables are hence considered granted by GTM. As a result, all the Tags that depend on these four consent variables get executed even without user consent.”
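The behavior the researchers describe can be illustrated with a toy model. This is a sketch of the logic only, not GTM's actual code: the tag names are hypothetical, and setting the two CMP defaults to "denied" is an assumption, since the paper only says the CMP gives those two variables a default value.

```python
GRANTED, DENIED = "granted", "denied"


def tag_may_fire(required, consent_state, undefined_means_granted):
    """Return True if every required consent variable permits the tag.

    `undefined_means_granted` switches between the behavior the paper
    reports (unset variables count as granted) and a stricter alternative
    (unset variables count as denied).
    """
    for name in required:
        value = consent_state.get(name)
        if value is None:
            if not undefined_means_granted:
                return False
        elif value != GRANTED:
            return False
    return True


# Assumed defaults: the CMP sets only these two variables.
cmp_defaults = {"analytics_storage": DENIED, "ad_storage": DENIED}

# Hypothetical tags and the consent variables they depend on.
tag_requirements = {
    "analytics_tag": ["analytics_storage"],
    "personalization_tag": ["personalization_storage"],
    "social_media_tag": ["cmp_purpose_c56"],
}

reported = {
    tag: tag_may_fire(req, cmp_defaults, undefined_means_granted=True)
    for tag, req in tag_requirements.items()
}
strict = {
    tag: tag_may_fire(req, cmp_defaults, undefined_means_granted=False)
    for tag, req in tag_requirements.items()
}

print("undefined treated as granted:", reported)
print("undefined treated as denied: ", strict)
```

Under the reported behavior, the two tags that depend on unset variables fire before the user has touched the consent banner. Under the stricter reading, none of the three tags fire.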

Legal Implications

The research paper notes that European Union privacy laws like the General Data Protection Regulation (GDPR) and the ePrivacy Directive (ePD) regulate the processing of user data and the use of tracking technologies, for example by requiring consent for the storage of cookies and other tracking technologies, and impose significant fines for violations.

A legal analysis of the Client-Side GTM flagged a total of seven potential violations.

Seven Potential Violations Of Data Protection Laws

- Potential violation 1. CMP scanners often miss purposes

- Potential violation 2. Mapping CMP purposes to GTM consent variables is not compliant.

- Potential violation 3. GTM purposes are limited to client-side storage.

- Potential violation 4. GTM purposes are not specific nor explicit.

- Potential violation 5. Defaulting consent variables to “accepted” means that Tags run without consent.

- Potential violation 6. Google Tag sends data independently of user’s consent decisions.

- Potential violation 7. GTM allows Tag Providers to inject scripts exposing end users to security risks.

Legal analysis of Server-Side GTM

The researchers write that the findings raise legal concerns about GTM in its current state. They assert that the system introduces more legal challenges than resolutions, complicating compliance efforts and posing a challenge for regulators to monitor effectively.

These are some of the factors that caused concern about the ability to comply with regulations:

- Complying with data subject rights is hard for the Publisher

For both Client- and Server-Side GTM there is no easy way for a publisher to comply with a request for access to collected data as required by Article 15 of the GDPR. The publisher would have to manually track down every Data Collector to comply with that legal request.

- Built-in consent raises trust issues

When using tags with built-in consent, publishers are forced to trust that Tag Providers actually implement the built-in consent within the code. There’s no easy way for a publisher to review the code to verify whether the Tag Provider is honoring the consent or ignoring it and collecting user information. Reviewing the code is effectively impossible for official tags that are sandboxed within the gtm.js script. The researchers state that reviewing the code for compliance “requires heavy reverse engineering.”

- Server-side GTM is invisible for regulatory monitoring and auditing

The researchers write that Server-side GTM obstructs compliance auditing because the data collection occurs remotely on a server.

- Consent is hard to configure on GTM Server Containers

Consent management tools are missing in GTM Server Containers, which prevents CMPs from displaying the purposes and the Data Collectors as required by regulations.

Auditing is described as highly difficult:

“Moreover, auditing and monitoring is exclusively attainable by only contacting the Publisher to grant access to the configuration of the GTM Server Container.

Furthermore, the Publisher is able to change the configuration of the GTM Server Container at any point in time (e.g., before any regulatory investigation), masking any compliance check.”

Conclusion: GTM Has Pitfalls And Flaws

The researchers gave GTM poor marks for security and for its non-compliant defaults, stating that it introduces more legal issues than solutions while complicating compliance with regulations and making it hard for regulators to monitor for compliance.

Read the research paper:

Google Tag Manager: Hidden Data Leaks and its Potential Violations under EU Data Protection Law

Download the PDF of the research paper here.

Featured Image by Shutterstock/Praneat

SEO

Big Update To Google’s Ranking Drop Documentation

Google updated their guidance with five changes on how to debug ranking drops. The new version contains over 400 more words that address small and large ranking drops. There’s room to quibble about some of the changes but overall the revised version is a step up from what it replaced.

Change #1: Downplays Fixing Traffic Drops

The opening sentence was changed so that it offers less hope for bouncing back from an algorithmic traffic drop. Google also joined two sentences into one sentence in the revised version of the documentation.

The documentation previously said that most traffic drops can be reversed and that identifying the reasons for a drop isn’t straightforward. The part saying that most of them can be reversed was completely removed.

Here are the original two sentences:

“A drop in organic Search traffic can happen for several reasons, and most of them can be reversed. It may not be straightforward to understand what exactly happened to your site”

Now the reassurance that “most of them can be reversed” is gone, and the emphasis falls on the fact that understanding what happened is not straightforward.

This is the new guidance:

“A drop in organic Search traffic can happen for several reasons, and it may not be straightforward to understand what exactly happened to your site.”

Change #2 Security Or Spam Issues

Google updated the traffic graph illustrations so that they precisely align with the causes for each kind of traffic decline.

The previous version of the graph was labeled:

“Site-level technical issue (Manual Action, strong algorithmic changes)”

The problem with the previous label is that manual actions and strong algorithmic changes are not technical issues and the new version fixes that issue.

The updated version now reads:

“Large drop from an algorithmic update, site-wide security or spam issue”

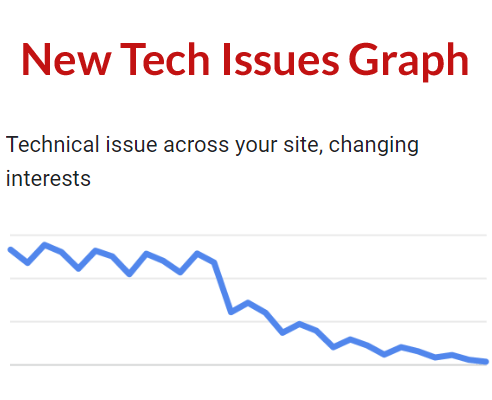

Change #3 Technical Issues

There’s one more change to a graph label, also to make it more accurate.

This is how the previous graph was labeled:

“Page-level technical issue (algorithmic changes, market disruption)”

The updated graph is now labeled:

“Technical issue across your site, changing interests”

Now the graph and label are more specific as a sitewide change and “changing interests” is more general and covers a wider range of changes than market disruption. Changing interests includes market disruption (where a new product makes a previous one obsolete or less desirable) but it also includes products that go out of style or lose their trendiness.

Change #4 Google Adds New Guidance For Algorithmic Changes

The biggest change by far is their brand new section for algorithmic changes which replaces two smaller sections, one about policy violations and manual actions and a second one about algorithm changes.

The old version of this one section had 108 words. The updated version contains 443 words.

A section that’s particularly helpful is where the guidance splits algorithmic update damage into two categories.

Two New Categories:

- Small drop in position? For example, dropping from position 2 to 4.

- Large drop in position? For example, dropping from position 4 to 29.

The two new categories are perfect and align with what I’ve seen in the search results for sites that have lost rankings. The reasons for moving up and down within the top ten are different from the reasons why a site drops completely out of the top ten.
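As a rough illustration of that split, here is a minimal sketch that labels a position change. The cutoff of 10 is an assumption taken from the "top ten" framing above, not a threshold Google publishes, and the function name is hypothetical.

```python
def classify_drop(before: int, after: int, top_n: int = 10) -> str:
    """Label a ranking change using an assumed top-N cutoff.

    Positions are 1-based, so a larger number means a worse ranking.
    """
    if after <= before:
        return "no drop"
    if after <= top_n:
        # Moved down but still inside the top results.
        return "small drop"
    if before <= top_n:
        # Fell from inside the top results to outside them.
        return "large drop"
    return "already outside top results"


# The two examples from Google's guidance.
print(classify_drop(2, 4))
print(classify_drop(4, 29))
```

With the default cutoff, the move from position 2 to 4 is labeled a small drop and the move from 4 to 29 a large drop, matching the two examples in the guidance.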

I don’t agree with the guidance for large drops. They recommend reviewing your site for large drops, which is good advice for some sites that have lost rankings. But in other cases there’s nothing wrong with the site and this is where less experienced SEOs tend to be unable to fix the problems because there’s nothing wrong with the site. Recommendations for improving EEAT, adding author bios or filing link disavows do not solve what’s going on because there’s nothing wrong with the site. The problem is something else in some of the cases.

Here is the new guidance for debugging search position drops:

“Algorithmic update

Google is always improving how it assesses content and updating its search ranking and serving algorithms accordingly; core updates and other smaller updates may change how some pages perform in Google Search results. We post about notable improvements to our systems on our list of ranking updates page; check it to see if there’s anything that’s applicable to your site.

If you suspect a drop in traffic is due to an algorithmic update, it’s important to understand that there might not be anything fundamentally wrong with your content. To determine whether you need to make a change, review your top pages in Search Console and assess how they were ranking:

Small drop in position? For example, dropping from position 2 to 4.

Large drop in position? For example, dropping from position 4 to 29.

Keep in mind that positions aren’t static or fixed in place. Google’s search results are dynamic in nature because the open web itself is constantly changing with new and updated content. This constant change can cause both gains and drops in organic Search traffic.

Small drop in position

A small drop in position is when there’s a small shift in position in the top results (for example, dropping from position 2 to 4 for a search query). In Search Console, you might see a noticeable drop in traffic without a big change in impressions.

Small fluctuations in position can happen at any time (including moving back up in position, without you needing to do anything). In fact, we recommend avoiding making radical changes if your page is already performing well.

Large drop in position

A large drop in position is when you see a notable drop out of the top results for a wide range of terms (for example, dropping from the top 10 results to position 29).

In cases like this, self-assess your whole website overall (not just individual pages) to make sure it’s helpful, reliable and people-first. If you’ve made changes to your site, it may take time to see an effect: some changes can take effect in a few days, while others could take several months. For example, it may take months before our systems determine that a site is now producing helpful content in the long term. In general, you’ll likely want to wait a few weeks to analyze your site in Search Console again to see if your efforts had a beneficial effect on ranking position.

Keep in mind that there’s no guarantee that changes you make to your website will result in noticeable impact in search results. If there’s more deserving content, it will continue to rank well with our systems.”

Change #5 Trivial Changes

The rest of the changes are relatively trivial but nonetheless make the documentation more precise.

For example, one of the headings was changed from this:

You recently moved your site

To this new heading:

Site moves and migrations

Google’s Updated Ranking Drops Documentation

Google’s updated documentation is well thought out but I think that the recommendations for large algorithmic drops are helpful for some cases and not helpful for other cases. I have 25 years of SEO experience and have experienced every single Google algorithm update. There are certain updates where the problem is not solved by trying to fix things and Google’s guidance used to be that sometimes there’s nothing to fix. The documentation is better but in my opinion it can be improved even further.

Read the new documentation here:

Debugging drops in Google Search traffic

Review the previous documentation:

Internet Archive Wayback Machine: Debugging drops in Google Search traffic

Featured Image by Shutterstock/Tomacco

SEO

Google March 2024 Core Update Officially Completed A Week Ago

Google has officially completed its March 2024 Core Update, ending over a month of ranking volatility across the web.

However, Google didn’t confirm the rollout’s conclusion on its data anomaly page until April 26—a whole week after the update was completed on April 19.

Many in the SEO community had been speculating for days about whether the turbulent update had wrapped up.

The delayed transparency exemplifies Google’s communication issues with publishers and the need for clarity during core updates.

Google March 2024 Core Update Timeline & Status

First announced on March 5, the core algorithm update is complete as of April 19. It took 45 days to complete.
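The day counts can be checked with a short date calculation. This sketch uses only the dates stated in this article; the variable names are illustrative.

```python
from datetime import date

announced = date(2024, 3, 5)    # update first announced
completed = date(2024, 4, 19)   # rollout completed
confirmed = date(2024, 4, 26)   # completion confirmed by Google

rollout_days = (completed - announced).days
confirmation_lag_days = (confirmed - completed).days

print(rollout_days)           # 45
print(confirmation_lag_days)  # 7
```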

Unlike more routine core refreshes, Google warned this one was more complex.

Google’s documentation reads:

“As this is a complex update, the rollout may take up to a month. It’s likely there will be more fluctuations in rankings than with a regular core update, as different systems get fully updated and reinforce each other.”

The aftershocks were tangible, with some websites reporting losses of over 60% of their organic search traffic, according to data from industry observers.

The ripple effects also led to the deindexing of hundreds of sites that were allegedly violating Google’s guidelines.

Addressing Manipulation Attempts

In its official guidance, Google highlighted the criteria it looks for when targeting link spam and manipulation attempts:

- Creating “low-value content” purely to garner manipulative links and inflate rankings.

- Links intended to boost sites’ rankings artificially, including manipulative outgoing links.

- The “repurposing” of expired domains with radically different content to game search visibility.

The updated guidelines warn:

“Any links that are intended to manipulate rankings in Google Search results may be considered link spam. This includes any behavior that manipulates links to your site or outgoing links from your site.”

John Mueller, a Search Advocate at Google, responded to the turbulence by advising publishers not to make rash changes while the core update was ongoing.

However, he suggested sites could proactively fix issues like unnatural paid links.

“If you have noticed things that are worth improving on your site, I’d go ahead and get things done. The idea is not to make changes just for search engines, right? Your users will be happy if you can make things better even if search engines haven’t updated their view of your site yet.”

Emphasizing Quality Over Links

The core update made notable changes to how Google ranks websites.

Most significantly, Google reduced the importance of links in determining a website’s ranking.

Where Google’s spam policies previously described links as “an important factor in determining relevancy,” the updated policies stripped away the “important” designation, simply calling links “a factor.”

This change aligns with Google’s Gary Illyes’ statements that links aren’t among the top three most influential ranking signals.

Instead, Google is giving more weight to quality, credibility, and substantive content.

Consequently, long-running campaigns favoring low-quality link acquisition and keyword optimizations have been demoted.

With the update complete, SEOs and publishers are left to audit their strategies and websites to ensure alignment with Google’s new perspective on ranking.

Core Update Feedback

Google has opened a ranking feedback form related to this core update.

You can use this form until May 31 to provide feedback to Google’s Search team about any issues noticed after the core update.

While the feedback provided won’t be used to make changes for specific queries or websites, Google says it may help inform general improvements to its search ranking systems for future updates.

Google also updated its help documentation on “Debugging drops in Google Search traffic” to help people understand ranking changes after a core update.

Featured Image: Rohit-Tripathi/Shutterstock

FAQ

After the update, what steps should websites take to align with Google’s new ranking criteria?

After Google’s March 2024 Core Update, websites should:

- Improve the quality, trustworthiness, and depth of their website content.

- Stop heavily focusing on getting as many links as possible and prioritize relevant, high-quality links instead.

- Fix any shady or spam-like SEO tactics on their sites.

- Carefully review their SEO strategies to ensure they follow Google’s new guidelines.

SEO

Google Declares It The “Gemini Era” As Revenue Grows 15%

Alphabet Inc., Google’s parent company, announced its first quarter 2024 financial results today.

While Google reported double-digit growth in key revenue areas, the focus was on its AI developments, dubbed the “Gemini era” by CEO Sundar Pichai.

The Numbers: 15% Revenue Growth, Operating Margins Expand

Alphabet reported Q1 revenues of $80.5 billion, a 15% increase year-over-year, exceeding Wall Street’s projections.

Net income was $23.7 billion, with diluted earnings per share of $1.89. Operating margins expanded to 32%, up from 25% in the prior year.

Ruth Porat, Alphabet’s President and CFO, stated:

“Our strong financial results reflect revenue strength across the company and ongoing efforts to durably reengineer our cost base.”

Google’s core advertising units, such as Search and YouTube, drove growth. Google advertising revenues hit $61.7 billion for the quarter.

The Cloud division also maintained momentum, with revenues of $9.6 billion, up 28% year-over-year.

Pichai highlighted that YouTube and Cloud are expected to exit 2024 at a combined $100 billion annual revenue run rate.

Generative AI Integration in Search

Google experimented with AI-powered features in Search Labs before recently introducing AI overviews into the main search results page.

Regarding the gradual rollout, Pichai states:

“We are being measured in how we do this, focusing on areas where gen AI can improve the Search experience, while also prioritizing traffic to websites and merchants.”

Pichai reports that Google’s generative AI features have already served billions of queries:

“We’ve already served billions of queries with our generative AI features. It’s enabling people to access new information, to ask questions in new ways, and to ask more complex questions.”

Google reports increased Search usage and user satisfaction among those interacting with the new AI overview results.

The company also highlighted its “Circle to Search” feature on Android, which allows users to circle objects on their screen or in videos to get instant AI-powered answers via Google Lens.

Reorganizing For The “Gemini Era”

As part of the AI roadmap, Alphabet is consolidating all teams building AI models under the Google DeepMind umbrella.

Pichai revealed that, through hardware and software improvements, the company has reduced machine costs associated with its generative AI search results by 80% over the past year.

He states:

“Our data centers are some of the most high-performing, secure, reliable and efficient in the world. We’ve developed new AI models and algorithms that are more than one hundred times more efficient than they were 18 months ago.”

How Will Google Make Money With AI?

Alphabet sees opportunities to monetize AI through its advertising products, Cloud offerings, and subscription services.

Google is integrating Gemini into ad products like Performance Max. The company’s Cloud division is bringing “the best of Google AI” to enterprise customers worldwide.

Google One, the company’s subscription service, surpassed 100 million paid subscribers in Q1 and introduced a new premium plan featuring advanced generative AI capabilities powered by Gemini models.

Future Outlook

Pichai outlined six key advantages positioning Alphabet to lead the “next wave of AI innovation”:

- Research leadership in AI breakthroughs like the multimodal Gemini model

- Robust AI infrastructure and custom TPU chips

- Integrating generative AI into Search to enhance the user experience

- A global product footprint reaching billions

- Streamlined teams and improved execution velocity

- Multiple revenue streams to monetize AI through advertising and cloud

With upcoming events like Google I/O and Google Marketing Live, the company is expected to share further updates on its AI initiatives and product roadmap.

Featured Image: Sergei Elagin/Shutterstock
