SEO

What It Is & Why It Matters For SEO

You may have run across the W3C in your web development and SEO travels.

The W3C is the World Wide Web Consortium, and it was founded by the creator of the World Wide Web, Tim Berners-Lee.

This web standards body creates coding specifications for web standards worldwide.

It also offers a validator service to ensure that your HTML (among other code) is valid and error-free.

Making sure that your page validates is one of the most important things one can do to achieve cross-browser and cross-platform compatibility and provide an accessible online experience to all.

Invalid code can result in glitches, rendering errors, and long processing or loading times.

Simply put, if your code doesn’t do what it was intended to do across all major web browsers, this can negatively impact user experience and SEO.

W3C Validation: How It Works & Supports SEO

Web standards are important because they give web developers a standard set of rules for writing code.

If all code used by your company is created using the same protocols, it will be much easier for you to maintain and update this code in the future.

This is especially important when working with other people’s code.

If your pages adhere to web standards, they will validate correctly against W3C validation tools.

When you use web standards as the basis for your code creation, you ensure that your code is user-friendly with built-in accessibility.

When it comes to SEO, validated code is always better than poorly written code.

According to John Mueller, Google doesn’t care how your code is written. That means a W3C validation error won’t cause your rankings to drop.

You won’t rank better with validated code, either.

But there are indirect SEO benefits to well-formatted markup:

- Eliminates Code Bloat: Validating code means that you tend to avoid code bloat. Validated code is generally leaner, better, and more compact than its counterpart.

- Faster Rendering Times: This could potentially translate to better render times as the browser needs less processing, and we know that page speed is a ranking factor.

- Indirect Contributions to Core Web Vitals Scores: When you pay attention to coding standards, such as adding the width and height attribute to your images, you eliminate steps that the browser must take in order to render the page. Faster rendering times can contribute to your Core Web Vitals scores, improving these important metrics overall.
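As a rough illustration of the width and height point above, here is a minimal Python sketch that scans a page for images missing explicit dimensions. It uses only the standard library's html.parser. The class name, function name, and sample markup are hypothetical, and it is a quick spot check rather than a replacement for the W3C validator.

```python
from html.parser import HTMLParser


class ImageSizeChecker(HTMLParser):
    """Collects <img> tags that lack an explicit width or height attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # list of (src, [names of absent attributes])

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        absent = [name for name in ("width", "height") if name not in attr_map]
        if absent:
            self.missing.append((attr_map.get("src", ""), absent))


def images_missing_dimensions(html):
    """Return every image in the markup that is missing width and/or height."""
    checker = ImageSizeChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


# Hypothetical sample markup: the second image has no dimensions.
sample = """
<img src="hero.jpg" width="1200" height="600" alt="Hero">
<img src="logo.png" alt="Logo">
"""
print(images_missing_dimensions(sample))
```

Any image this reports is one where the browser has to wait for the file itself before it can reserve space in the layout.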

Roger Montti compiled these six reasons Google still recommends code validation, because it:

- Could affect crawl rate.

- Affects browser compatibility.

- Encourages a good user experience.

- Ensures that pages function everywhere.

- Useful for Google Shopping Ads.

- Invalid HTML in head section breaks Hreflang.

Multiple Device Accessibility

Valid code also helps translate into better cross-browser and cross-platform compatibility because it conforms to the latest in W3C standards, and the browser will know better how to process that code.

This leads to an improved user experience for people who access your sites from different devices.

If you have a site that’s been validated, it is much more likely to render correctly regardless of the device or platform being used to view it.

That is not to say that unvalidated code never works across multiple browsers and platforms, but there can be deviations in rendering across various applications.

Common Reasons Code Doesn’t Validate

Of course, validating your web pages won’t solve all problems with rendering your site as desired across all platforms and all browsing options. But it does go a long way toward solving those problems.

In the event that something does go wrong with validation on your part, you now have a baseline from which to begin troubleshooting.

You can go into your code and see what is making it fail.

It will be easier to find these problems and troubleshoot them with a validated site because you know where to start looking.

Having said that, there are several reasons pages may not validate.

Browser Specific Issues

It may be that something in your code will only work on one browser or platform, but not another.

This problem would then need to be addressed by the developer of the offending script.

This would mean having to actually edit the code itself in order for it to validate on all platforms/browsers instead of just some of them.

You Are Using Outdated Code

The W3C has only offered validation tests over the course of the past couple of decades.

If your page was created to validate in a browser that predates this time (IE 6 or earlier, for example), it will not pass these new standards because it was written with older technologies and formats in mind.

While this is a relatively rare issue, it still happens.

This problem can be fixed by reworking code to make it W3C compliant, but if you want to maintain compatibility with older browsers, you may need to continue using code that works, and thus forgo passing validation 100%.

Both problems could potentially be solved with a little trial and error.

With some work and effort, both types of sites can validate across multiple devices and platforms without issue – hopefully!

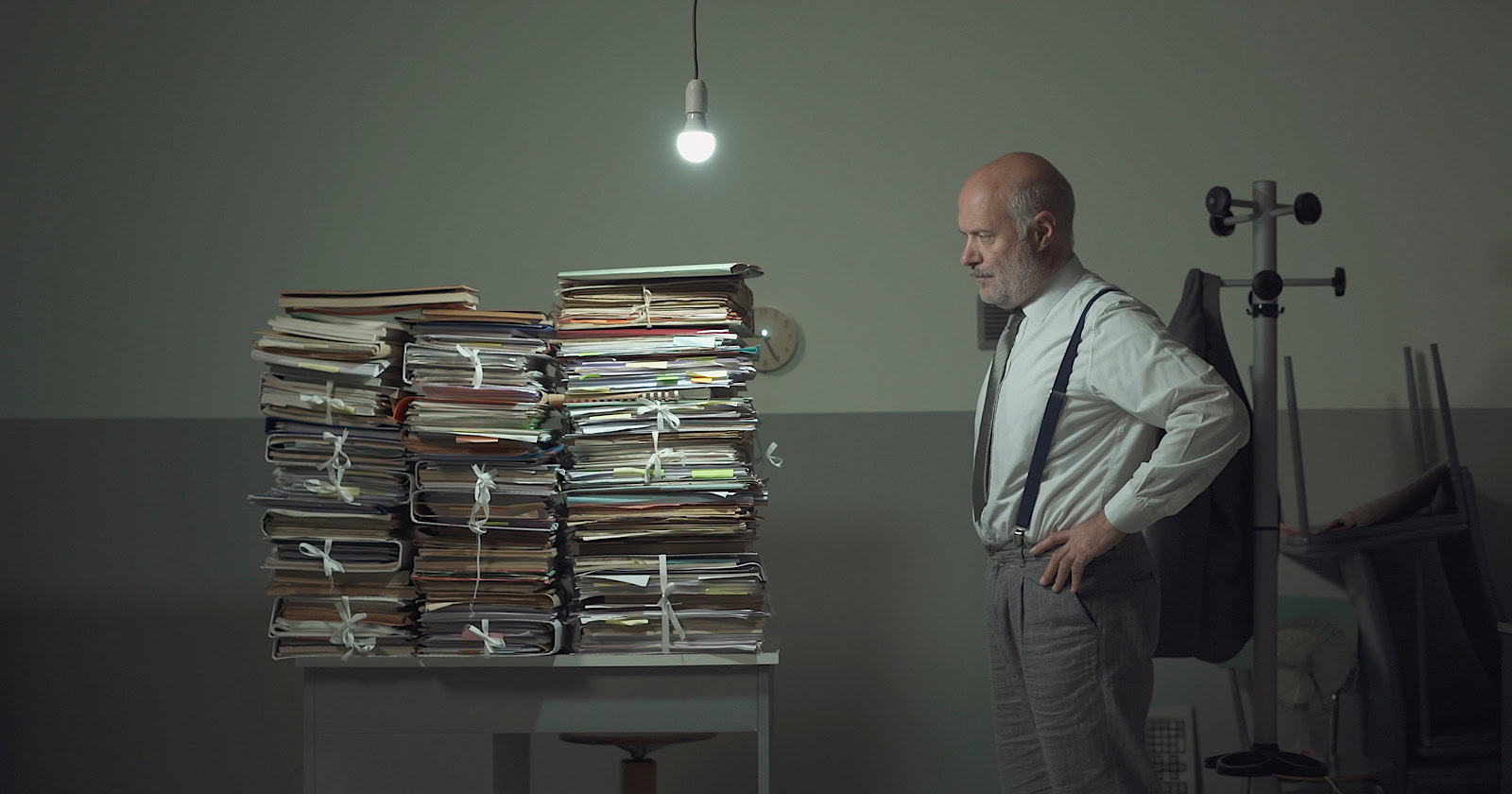

Polyglot Documents

Polyglot documents include any document that may have been transferred from an older version of code, and never re-worked to be compatible with the new version.

In other words, it’s a combination of documents with a different code type than what the current document was coded for (say an HTML 4.01 transitional document type compared to an XHTML document type).

Make no mistake: Even though both may be “HTML” per se, they are very different languages and need to be treated as such.

You can’t copy and paste one over and expect things to be all fine and dandy.

What does this mean?

For example, you may have seen situations where you may validate code, but nearly every single line of a document has something wrong with it on the W3C validator.

This could be due to somebody transferring over code from another version of the site, and not updating it to reflect new coding standards.

Either way, the only way to repair this is to rework the code line by line (an extraordinarily tedious process).

How W3C Validation Works

The W3C validator is this author’s validator of choice for making sure that your code validates across a wide variety of platforms and systems.

The W3C validator is free to use, and you can access it at validator.w3.org.

With the W3C validator, it’s possible to validate your pages by page URL, file upload, and Direct Input.

- Validate Your Pages by URL: This is relatively simple. Just copy and paste the URL into the Address field, and you can click on the check button in order to validate your code.

- Validate Your Pages by File Upload: When you validate by file upload, you will upload the HTML files of your choice one file at a time. Caution: if you’re using Internet Explorer or certain versions of Windows XP, this option may not work for you.

- Validate Your Pages by Direct Input: With this option, all you have to do is copy and paste the code you want to validate into the editor, and the W3C validator will do the rest.
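If you would rather script the direct input option than paste code by hand, the sketch below shows one way it might look in Python. It assumes the W3C-hosted Nu HTML Checker endpoint at validator.w3.org/nu/ with JSON output, and the function names and user agent string are hypothetical. The request is only built here, not sent, so check the checker's own documentation and usage policy before running it against a live service.

```python
import json
import urllib.request

# Assumed endpoint: the W3C-hosted Nu HTML Checker, asked for JSON output.
CHECKER_URL = "https://validator.w3.org/nu/?out=json"


def build_validation_request(html):
    """Prepare (but do not send) a POST request carrying the markup to check."""
    return urllib.request.Request(
        CHECKER_URL,
        data=html.encode("utf-8"),
        headers={
            "Content-Type": "text/html; charset=utf-8",
            "User-Agent": "validation-sketch/0.1",  # hypothetical client name
        },
        method="POST",
    )


def summarize_messages(response_body):
    """Count messages by type in a JSON body shaped like {"messages": [...]}."""
    counts = {}
    for message in json.loads(response_body).get("messages", []):
        kind = message.get("type", "unknown")
        counts[kind] = counts.get(kind, 0) + 1
    return counts


request = build_validation_request("<!DOCTYPE html><title>Test</title><p>Hello</p>")

# To actually send it (network access required):
# with urllib.request.urlopen(request) as response:
#     print(summarize_messages(response.read()))
```

Counting messages by type gives a quick sense of whether a page has a handful of issues or a problem on nearly every line.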

While some professionals claim that some W3C errors have no rhyme or reason, in 99.9% of cases, there is a rhyme and reason.

If there isn’t a rhyme and reason throughout the entire document, then you may want to refer to our section on polyglot documents above as a potential problem.

HTML Syntax

Let’s start at the top with HTML syntax. Because it’s the backbone of the World Wide Web, this is the most common coding that you will run into as an SEO professional.

The W3C has created a specification for HTML 5 called “the HTML5 Standard”.

This document explains how HTML should be written on an ideal level for processing by popular browsers.

If you go to their site, you can utilize their validator to make sure that your code is valid according to this spec.

They even give examples of some of the rules that they look for when it comes to standards compliance.

This makes it easier than ever to check your work before you publish it!

Validators For Other Languages

Now let’s move on to some of the other languages that you may be using online.

For example, you may have heard of CSS3.

The W3C has standards documentation for CSS 3 as well called “the CSS3 Standard.”

This means that there is even more opportunity for validation!

You can validate your HTML against the HTML standard and then validate your CSS against the CSS standard to ensure conformity across platforms.

While it may seem like overkill to validate your code against so many different standards at once, remember that this means that there are more chances than ever to ensure conformity across platforms.

And for those of you who only work in one language, you now have the opportunity to expand your horizons!

It can be incredibly difficult if not impossible to align everything perfectly, so you will need to pick your battles.

You may also just need something checked quickly online without having the time or resources available locally.

Common Validation Errors

You will need to be aware of the most common validation errors as you go through the validation process, and it’s also a good idea to know what those errors mean.

This way, if your page does not validate, you will know exactly where to start looking for possible problems.

Some of the most common validation errors (and their meanings) include:

- Type Mismatch: When your code is trying to make one kind of data object appear like another data object (e.g., submitting a number as text), you run the risk of getting this message. This error usually signals that some kind of coding mistake has been made. The solution would be to figure out exactly where that mistake was made and fix it so that the code validates successfully.

- Parse Error: This error tells you that there was a mistake in the coding somewhere, but it does not tell you where that mistake is. If this happens, you will have to do some serious sleuthing in order to find where your code went wrong.

- Syntax Errors: These types of errors involve (mostly) careless mistakes in coding syntax. Either the syntax is typed incorrectly, or its context is incorrect. Either way, these errors will show up in the W3C validator.

The above are just some examples of errors that you may see when you’re validating your page.

Unfortunately, the list goes on and on – as does the time spent trying to fix these problems!

More Specific Errors (And Their Solutions)

You may find more specific errors that apply to your site. They may include errors that reference “type attribute used in tag.”

This refers to some tags like JavaScript declaration tags, such as the following: <script type="text/javascript">.

The type attribute of this tag is not needed anymore and is now considered legacy coding.

If you use that kind of coding now, you may end up unintentionally throwing validation errors all over the place in certain validators.
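To find these legacy declarations before a validator does, something like the following Python sketch could help. It relies only on the standard library, the class and function names are hypothetical, and it looks solely for the text/javascript value mentioned above.

```python
from html.parser import HTMLParser


class LegacyScriptTypeFinder(HTMLParser):
    """Records <script> tags that still declare type="text/javascript"."""

    def __init__(self):
        super().__init__()
        self.flagged = []  # src of each flagged script, or "<inline>"

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attr_map = dict(attrs)
        type_value = (attr_map.get("type") or "").strip().lower()
        if type_value == "text/javascript":
            self.flagged.append(attr_map.get("src", "<inline>"))


def legacy_script_types(html):
    """Return the scripts whose type attribute can simply be dropped."""
    finder = LegacyScriptTypeFinder()
    finder.feed(html)
    finder.close()
    return finder.flagged


# Hypothetical sample: only the first tag uses the legacy declaration.
sample = (
    '<script type="text/javascript" src="a.js"></script>\n'
    '<script src="b.js"></script>\n'
    '<script type="module" src="c.js"></script>'
)
print(legacy_script_types(sample))
```

Scripts that use other type values, such as module, are left alone because those values still change how the browser treats the script.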

Did you know that not using alternative text (alt text) – also called alt tags by some – is a W3C issue? It does not conform to the W3C rules for accessibility.

Alternative text is the text that is coded into images.

It is primarily used by screen readers for the blind.

If a blind person visits your site, and you do not have alternative text (or meaningful alternative text) in your images, then they will be unable to use your site effectively.

The way these screen readers work is that they speak aloud the words that are coded into images, so the blind can use their sense of hearing to understand what’s on your web page.

If your page is not very accessible in this regard, this could potentially lead to another sticky issue: that of accessibility lawsuits.

This is why it pays to pay attention to your accessibility standards and validate your code against these standards.
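A quick way to spot images without alternative text is sketched below in Python. It uses the standard library's html.parser, and the names and sample markup are hypothetical. Note that an intentionally empty alt attribute can be legitimate for purely decorative images, so the sketch reports those separately for a human to review.

```python
from html.parser import HTMLParser


class AltTextChecker(HTMLParser):
    """Separates images with no alt attribute from images with an empty one."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []  # no alt attribute at all
        self.empty_alt = []    # alt present but blank; may be decorative

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src", "")
        if "alt" not in attr_map:
            self.missing_alt.append(src)
        elif not (attr_map["alt"] or "").strip():
            self.empty_alt.append(src)


def audit_alt_text(html):
    """Return (images missing alt, images with empty alt) for the markup."""
    checker = AltTextChecker()
    checker.feed(html)
    checker.close()
    return checker.missing_alt, checker.empty_alt


# Hypothetical sample markup covering all three cases.
sample = """
<img src="team.jpg" alt="The support team at their desks">
<img src="divider.png" alt="">
<img src="chart.png">
"""
missing, empty = audit_alt_text(sample)
print("Missing alt:", missing)
print("Empty alt (check whether decorative):", empty)
```

A script can only tell you that alt text exists, not that it is meaningful, so the descriptions themselves still need a human read.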

Other types of common errors include using tags out of context.

For code context errors, you will need to make sure they are repaired according to the W3C documentation so these errors are no longer thrown by the validator.

Preventing Errors From Impacting Your Site Experience

The best way to prevent validation errors from happening is by making sure your site validates before launch.

It’s also useful to validate your pages regularly after they’re launched so that new errors do not crop up unexpectedly over time.

If you think about it, validation errors are the equivalent of spelling mistakes in an essay – once they’re there, they’re difficult (if not impossible) to erase, and they need to be fixed as soon as humanly possible.

If you adopt the habit of always using the W3C validator in order to validate your code, then you can, in essence, stop these coding mistakes from ever happening in the first place.

Heads Up: There Is More Than One Way To Do It

Sometimes validation won’t go as planned according to all standards.

And there is more than one way to accomplish the same goal.

For example, if you use <button> to create a button and then nest an <a> element with an href attribute inside it, this doesn’t seem to be possible according to W3C standards.

But it is perfectly acceptable in JavaScript because there are actually ways to do this within the language itself.
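To make the nesting issue concrete, the Python sketch below flags links placed inside buttons. The sample markup is hypothetical and is not the code from the original test, and the class and function names are illustrative only. It reflects the HTML rule that a button may not contain interactive content such as a link with an href attribute.

```python
from html.parser import HTMLParser


class ButtonNestingChecker(HTMLParser):
    """Flags <a href> elements that appear inside a <button>."""

    def __init__(self):
        super().__init__()
        self.button_depth = 0
        self.problems = []  # href of each link found inside a button

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self.button_depth += 1
        elif tag == "a" and self.button_depth > 0:
            attr_map = dict(attrs)
            if "href" in attr_map:
                self.problems.append(attr_map["href"])

    def handle_endtag(self, tag):
        if tag == "button" and self.button_depth > 0:
            self.button_depth -= 1


def links_inside_buttons(html):
    """Return the href of every link nested inside a button."""
    checker = ButtonNestingChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


# Hypothetical sample: the first link is nested in a button, the second is not.
sample = (
    '<button><a href="/signup">Sign up</a></button>\n'
    '<a href="/pricing">Pricing</a>'
)
print(links_inside_buttons(sample))
```

A common alternative is to style the link itself to look like a button, which keeps the markup valid without any script.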

This is an example of how we create this particular code and insert it into the direct input of the W3C validator:

In the next step, during validation, as discussed above we find that there are at least 4 errors just within this particular code alone, indicating that this is not exactly a particularly well-coded line:

Screenshot from W3C validator, February 2022

While validation, on the whole, can help you immensely, it is not always going to be 100% complete.

This is why it’s important to familiarize yourself by coding with the validator as much as you can.

Some adaptation will be needed. But it takes experience to achieve the best possible cross-platform compatibility while also remaining compliant with today’s browsers.

The ultimate goal here is improving accessibility and achieving compatibility with all browsers, operating systems, and devices.

Not all browsers and devices are created equal, and validation achieves a cohesive set of instructions and standards that can accomplish the goal of making your page equal enough for all browsers and devices.

When in doubt, always err on the side of proper code validation.

By making sure that you work to include the absolute best practices in your coding, you can ensure that your code is as accessible as it possibly can be for all types of users.

On top of that, validating your HTML against W3C standards helps you achieve cross-platform compatibility between different browsers and devices.

By working to always ensure that your code validates, you are on your way to making sure that your site is as safe, accessible, and efficient as possible.

Featured Image: graphicwithart/Shutterstock

OpenAI Expected to Integrate Real-Time Data In ChatGPT

Sam Altman, CEO of OpenAI, dispelled rumors that a new search engine would be announced on Monday, May 13. Recent deals have raised the expectation that OpenAI will announce the integration of real-time content from English, Spanish, and French publications into ChatGPT, complete with links to the original sources.

OpenAI Search Is Not Happening

Many competing search engines have tried and failed to challenge Google as the leading search engine. A new wave of hybrid generative AI search engines is currently trying to knock Google from the top spot with arguably very little success.

Sam Altman is on record saying that creating a search engine to compete against Google is not a viable approach. He suggested that technological disruption was the way to replace Google by changing the search paradigm altogether. The speculation that Altman is going to announce a me-too search engine on Monday never made sense given his recent history of dismissing the concept as a non-starter.

So perhaps it’s not a surprise that he recently ended the speculation by explicitly saying that he will not be announcing a search engine on Monday.

He tweeted:

“not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me.”

“New Stuff” May Be Iterative Improvement

It’s quite likely that what’s going to be announced is iterative, which means it improves ChatGPT rather than replacing it. This fits with how Altman recently described his approach to ChatGPT.

He remarked:

“And it does kind of suck to ship a product that you’re embarrassed about, but it’s much better than the alternative. And in this case in particular, where I think we really owe it to society to deploy iteratively.

There could totally be things in the future that would change where we think iterative deployment isn’t such a good strategy, but it does feel like the current best approach that we have and I think we’ve gained a lot from from doing this and… hopefully the larger world has gained something too.”

Improving ChatGPT iteratively is Sam Altman’s preference and recent clues point to what those changes may be.

Recent Deals Contain Clues

OpenAI has been making deals with news media and user-generated content publishers since December 2023. Mainstream media has reported these deals as being about licensing content for training large language models. But they overlooked a key detail that we reported on last month: these deals give OpenAI access to real-time information, and OpenAI stated that it will attribute that real-time data in the form of links.

That means that ChatGPT users will gain the ability to access real-time news and to use that information creatively within ChatGPT.

Dotdash Meredith Deal

Dotdash Meredith (DDM) is the publisher of big brand publications such as Better Homes & Gardens, FOOD & WINE, InStyle, Investopedia, and People magazine. The deal that was announced goes way beyond using the content as training data. The deal is explicitly about surfacing the Dotdash Meredith content itself in ChatGPT.

The announcement stated:

“As part of the agreement, OpenAI will display content and links attributed to DDM in relevant ChatGPT responses. …This deal is a testament to the great work OpenAI is doing on both fronts to partner with creators and publishers and ensure a healthy Internet for the future.

Over 200 million Americans each month trust our content to help them make decisions, solve problems, find inspiration, and live fuller lives. This partnership delivers the best, most relevant content right to the heart of ChatGPT.”

A statement from OpenAI gives credibility to the speculation that OpenAI intends to directly show licensed third-party content as part of ChatGPT answers.

OpenAI explained:

“We’re thrilled to partner with Dotdash Meredith to bring its trusted brands to ChatGPT and to explore new approaches in advancing the publishing and marketing industries.”

Something that DDM also gets out of this deal is that OpenAI will enhance DDM’s in-house ad targeting in order to show more tightly focused contextual advertising.

Le Monde And Prisa Media Deals

In March 2024, OpenAI announced a deal with two global media companies, Le Monde and Prisa Media. Le Monde is a French news publication and Prisa Media is a Spanish-language multimedia company. The interesting aspect of these two deals is that they give OpenAI access to real-time data in French and Spanish.

Prisa Media is a global Spanish-language media company based in Madrid, Spain, that comprises magazines, newspapers, podcasts, radio stations, and television networks. Its reach extends from Spain to the Americas. American media companies include publications in the United States, Argentina, Bolivia, Chile, Colombia, Costa Rica, Ecuador, Mexico, and Panama. That is a massive amount of real-time information in addition to a massive audience of millions.

OpenAI explicitly announced that the purpose of this deal was to bring this content directly to ChatGPT users.

The announcement explained:

“We are continually making improvements to ChatGPT and are supporting the essential role of the news industry in delivering real-time, authoritative information to users. …Our partnerships will enable ChatGPT users to engage with Le Monde and Prisa Media’s high-quality content on recent events in ChatGPT, and their content will also contribute to the training of our models.”

That deal is not just about training data. It’s about bringing current events data to ChatGPT users.

The announcement elaborated in more detail:

“…our goal is to enable ChatGPT users around the world to connect with the news in new ways that are interactive and insightful.”

As noted in our April 30th article that revealed that OpenAI will show links in ChatGPT, OpenAI intends to show third party content with links to that content.

OpenAI commented on the purpose of the Le Monde and Prisa Media partnership:

“Over the coming months, ChatGPT users will be able to interact with relevant news content from these publishers through select summaries with attribution and enhanced links to the original articles, giving users the ability to access additional information or related articles from their news sites.”

There are additional deals with other groups, like The Financial Times, which also stress that they will result in a new ChatGPT feature that will allow users to interact with real-time news and current events.

OpenAI’s Monday May 13 Announcement

There are many clues that the announcement on Monday will be that ChatGPT users will gain the ability to interact with content about current events. This fits into the terms of recent deals with news media organizations. There may be other features announced as well but this part is something that there are many clues pointing to.

Watch Altman’s interview at Stanford University

Featured Image by Shutterstock/photosince

Google’s Strategies For Dealing With Content Decay

In the latest episode of the Search Off The Record podcast, Google Search Relations team members John Mueller and Lizzi Sassman did a deep dive into dealing with “content decay” on websites.

Outdated content is a natural issue all sites face over time, and Google has outlined strategies beyond just deleting old pages.

While removing stale content is sometimes necessary, Google recommends taking an intentional, format-specific approach to tackling content decay.

Archiving vs. Transitional Guides

Google advises against immediately removing content that becomes obsolete, like materials referencing discontinued products or services.

Removing content too soon could confuse readers and lead to a poor experience, Sassman explains:

“So, if I’m trying to find out like what happened, I almost need that first thing to know. Like, “What happened to you?” And, otherwise, it feels almost like an error. Like, “Did I click a wrong link or they redirect to the wrong thing?””

Sassman says you can avoid confusion by providing transitional “explainer” pages during deprecation periods.

A temporary transition guide informs readers of the outdated content while steering them toward updated resources.

Sassman continues:

“That could be like an intermediary step where maybe you don’t do that forever, but you do it during the transition period where, for like six months, you have them go funnel them to the explanation, and then after that, all right, call it a day. Like enough people know about it. Enough time has passed. We can just redirect right to the thing and people aren’t as confused anymore.”

When To Update Vs. When To Write New Content

For reference guides and content that provide authoritative overviews, Google suggests updating information to maintain accuracy and relevance.

However, for archival purposes, major updates may warrant creating a new piece instead of editing the original.

Sassman explains:

“I still want to retain the original piece of content as it was, in case we need to look back or refer to it, and to change it or rehabilitate it into a new thing would almost be worth republishing as a new blog post if we had that much additional things to say about it.”

Remove Potentially Harmful Content

Google recommends removing pages in cases where the outdated information is potentially harmful.

Sassman says she arrived at this conclusion when deciding what to do with a guide involving obsolete structured data:

“I think something that we deleted recently was the “How to Structure Data” documentation page, which I thought we should just get rid of it… it almost felt like that’s going to be more confusing to leave it up for a period of time.

And actually it would be negative if people are still adding markup, thinking they’re going to get something. So what we ended up doing was just delete the page and redirect to the changelog entry so that, if people clicked “How To Structure Data” still, if there was a link somewhere, they could still find out what happened to that feature.”

Internal Auditing Processes

To keep your content current, Google advises implementing a system for auditing aging content and flagging it for review.

Sassman says she sets automated alerts for pages that haven’t been checked in set periods:

“Oh, so we have a little robot to come and remind us, “Hey, you should come investigate this documentation page. It’s been x amount of time. Please come and look at it again to make sure that all of your links are still up to date, that it’s still fresh.””
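A reminder system like the one Sassman describes could be approximated with a few lines of Python. This is a minimal sketch under stated assumptions, not Google's actual tooling: the function name, the 180-day threshold, and the page list are all hypothetical, and a real setup would pull review dates from a CMS or spreadsheet.

```python
from datetime import date, timedelta


def pages_due_for_review(last_reviewed, today, max_age_days=180):
    """Return (url, last review date) pairs older than the cutoff, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    due = [(url, reviewed) for url, reviewed in last_reviewed.items() if reviewed < cutoff]
    return sorted(due, key=lambda item: item[1])


# Hypothetical review log: page path mapped to the date it was last checked.
pages = {
    "/docs/structured-data": date(2023, 1, 15),
    "/docs/sitemaps": date(2024, 3, 1),
    "/blog/launch-post": date(2022, 6, 30),
}

for url, reviewed in pages_due_for_review(pages, today=date(2024, 5, 1)):
    print(f"Review {url}: last checked {reviewed.isoformat()}")
```

Passing today in as a parameter, rather than reading the clock inside the function, keeps the check easy to test and to run on a schedule.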

Context Is Key

Google’s tips for dealing with content decay center around understanding the context of outdated materials.

You want to prevent visitors from stumbling across obsolete pages without clarity.

Additional Google-recommended tactics include:

- Prominent banners or notices clarifying a page’s dated nature

- Listing original publish dates

- Providing inline annotations explaining how older references or screenshots may be obsolete

How This Can Help You

Following Google’s recommendations for tackling content decay can benefit you in several ways:

- Improved user experience: By providing clear explanations, transition guides, and redirects, you can ensure that visitors don’t encounter confusing or broken pages.

- Maintained trust and credibility: Removing potentially harmful or inaccurate content and keeping your information up-to-date demonstrates your commitment to providing reliable and trustworthy resources.

- Better SEO: Regularly auditing and updating your pages can benefit your website’s search rankings and visibility.

- Archival purposes: By creating new content instead of editing older pieces, you can maintain a historical record of your website’s evolution.

- Streamlined content management: Implementing internal auditing processes makes it easier to identify and address outdated or problematic pages.

By proactively tackling content decay, you can keep your website a valuable resource, improve SEO, and maintain an organized content library.

Featured Image: Stokkete/Shutterstock

25 Snapchat Statistics & Facts For 2024

Snapchat, known for its ephemeral content, innovative augmented reality (AR) features, and fiercely loyal user base, is a vital player in the social media landscape.

While it sometimes flies under the radar – as other platforms like TikTok, YouTube, and Instagram tend to dominate the cultural conversation – Snapchat is an incredibly powerful marketing tool that holds a unique place in the hearts and minds of its users.

In this article, we’ll explore what you need to know about Snapchat, with insights that shed light on what audiences think of the app and where its strengths lie.

From user growth trends to advertising effectiveness, let’s look at the state of Snapchat right now.

What Is Snapchat?

Snapchat is a social media app that allows users to share photos and videos with friends and followers online.

Unlike other social platforms like Facebook, Instagram, and TikTok – where much of the content is stored permanently – Snapchat prioritizes ephemeral content only.

Once viewed, Snapchat content disappears, which adds a layer of spontaneity and privacy to digital interactions.

Snapchat leverages the power of augmented reality to entertain its audience by creating interactive and immersive experiences through features like AR lenses.

Users can also explore a variety of stickers, drawing tools, and emojis to add a personal touch to everything they post.

What started as a small collection of tools in 2011 has now expanded to a massive library of innovative features, such as a personalized 3D Snap Map, gesture recognition, audio recommendations for lenses, generative AI capabilities, and much more.

Creating an account on Snapchat is easy. Simply download the app on Google Play or the App Store. Install it on your device, and you’re ready!

Screenshot from Google Play, December 2023

25 Surprising Facts You Didn’t Know About Snapchat

Let’s dive in!

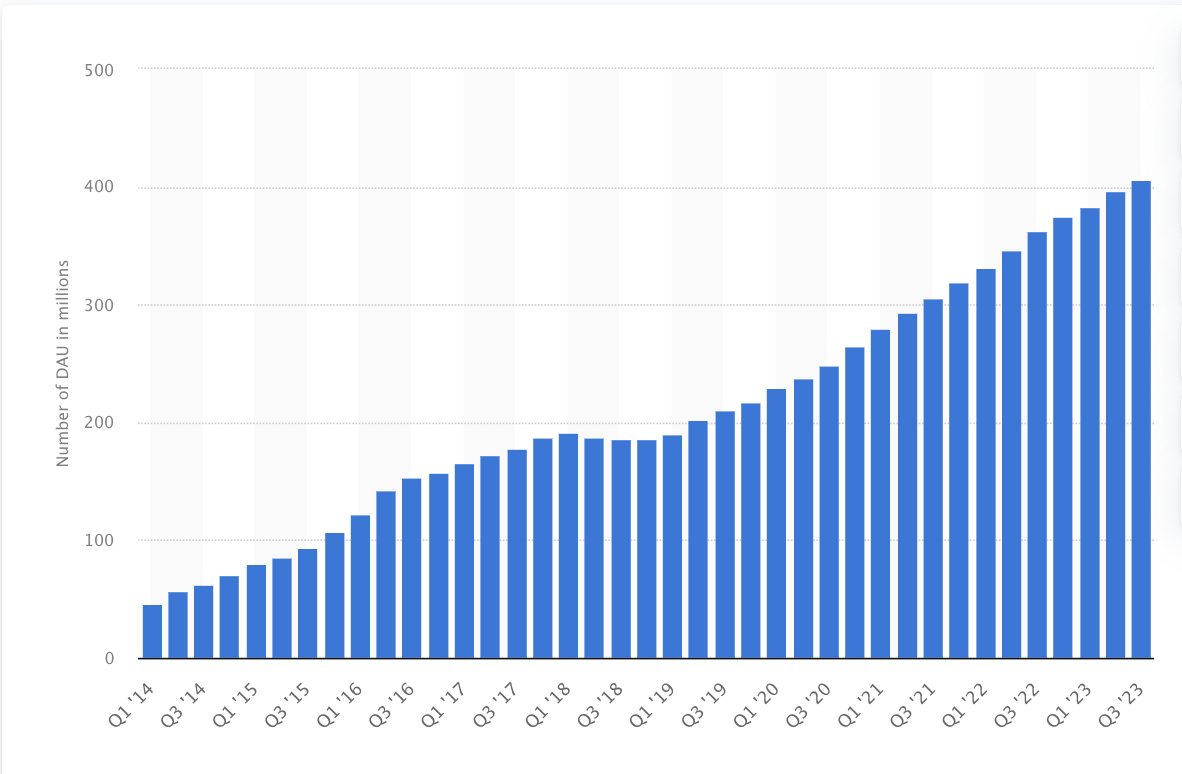

1. Snapchat Has 406 Million Daily Active Users

That number, released by the company in October 2023, represents a year-over-year increase of 43 million, or 12%.

Here’s a chart from Statista showing Snapchat’s user growth from 2014 to 2023:

Screenshot from Statista.com, December 2023

And with 750 million monthly active users (MAUs), Snapchat is the fifth-biggest social media network in the world.

2. Users 18-24 Years Old Account For The Biggest Chunk Of Snapchat’s Audience

According to Snapchat’s own advertising data, the platform has 243.5 million users aged 18 to 24 – representing 38.6% of its total ad audience.

The second largest group of users are between the ages of 25 and 34, followed by 13-17-year-olds – proving that Snapchat is reaching young people around the world.

On the flip side, the platform isn’t huge with older users; people aged 50 and over account for only 3.8% of Snapchat’s total ad audience.

As a marketer, you can take a hint on what your campaign should focus on if you use Snapchat. As Snapchat’s own report puts it:

“From its inception, Snapchat has inherently created a frictionless space where Gen Z creatives can experiment with their identities, yet not have to feel like they’re ‘on brand’ in communicating to their close friend groups.”

3. Snapchat Reaches 90% Of The 13 To 24-Year-Old Population

It also reaches 75% of people between the ages of 13 and 34 in over 25 countries, according to Snapchat’s estimates.

In the US, 59% of teenagers (between the ages of 13 and 17) report using Snapchat, which amounts to roughly six in 10.

4. Snapchat Users Open The App Nearly 40 Times A Day

According to the company, this means people interact with their social circles on Snapchat more than any other social network.

In the US, about half of teenagers (51%) report using Snapchat at least once a day – making it slightly more popular than Instagram, but not quite as popular as YouTube or TikTok.

5. Taco Bell Paid $75,000 For 24 Hours Of The Taco Filter/Ad

To boost sales, Taco Bell launched the taco filter on Snapchat. Here’s what it looked like.

Today only: turn yourself into a taco using our @Snapchat lens. Because Cinco de Mayo. pic.twitter.com/P4KwLdFNFZ

— Taco Bell (@tacobell) May 5, 2016

The filter is humorous, relevant, and unique. Users adored it, and it got 224 million views.

That’s great, considering Taco Bell paid $75,000 for the ad – which actually proved to be a great investment for the exposure the brand received.

6. More Than Half (50.6%) Of Snapchat Users Are Female

In contrast, 48.7% of the platform’s global users are male.

While there is not a huge discrepancy between the demographics here, it’s helpful information for any marketers looking to put together Snapchat campaigns.

7. Snapchat Is The No. 1 App People Use To Share What They Bought

Is your brand looking to reach young social media users around the world? Snapchat could be the perfect platform for you.

People are 45% more likely to recommend brands to friends on Snapchat compared to other platforms.

They’re also 2X more likely to post about a gift after receiving it – making Snapchat a powerful tool for influencer marketing and brand partnerships.

8. Snapchat Pioneered Vertical Video Ads

Once a novelty in the social media industry, vertical video ads have become one of the most popular ways to advertise on social media and reach global audiences.

What are vertical video ads? It’s self-explanatory: They’re ads that can be viewed with your phone held vertically. The ad format is optimized for how we use our mobile devices and designed to create a non-disruptive experience for users.

You’ve definitely seen countless vertical video ads by now, but did you know Snapchat pioneered them?

9. You Can Follow Rock Star Business Experts On Snapchat

Who knew Snapchat could be a powerful business tool? Here are the top three experts you should follow right now:

10. More Than 250 Million Snapchatters Engage With AR Every Day, On Average

Snapchat was the first social media app to really prioritize the development of AR features, and it’s paid off.

Over 70% of users engage with AR on the first day that they download the app – and, to date, there have been more than 3 million lenses launched on Snapchat.

11. People Are 34% More Likely To Purchase Products They See Advertised On Snapchat

Compared with seeing the same ad on other social media platforms, people are more likely to buy after seeing it on Snapchat, making the platform an effective way to reach and convert audiences.

12. Snapchat Is The King Of Ephemeral Content Marketing

Ephemeral content marketing uses video, photos, and media that are only accessible for a limited time.

Here are three reasons it works:

- It creates a sense of urgency.

- It appeals to buyers who don’t want to feel “sold.”

- It’s more personalized than traditional sales funnel marketing.

Guess who’s one of the kings of ephemeral content marketing? That’s right: Snapchat.

Consider that if it weren’t for Snapchat, Instagram Stories would likely not exist right now.

13. More Than 5 Million People Subscribe To Snapchat+

Snapchat+ is the platform’s paid subscription service that gives users access to exclusive and pre-release features on the platform.

Subscribers also receive a range of other perks, including options to customize their app experience and the ability to see how many times their content has been rewatched.

The fact that millions of users are willing to pay for special access and features on Snapchat should signal to brands and marketers everywhere that the platform has a strong pull with its audience.

Beyond that, the fact that Snapchat+ drew 5 million subscribers within just a year or so of launching is impressive on its own.

14. Snapchat Reaches Nearly Half Of US Smartphone Users

According to Statista, approximately 309 million American adults use smartphones today.

Snapchat’s ability to reach such a considerable portion of US smartphone users is notable.

15. Snapchat Users Spend An Average Of 19 Minutes Per Day On The App

That’s 19 minutes brands can use to connect with people, grow brand awareness, and convey their message.

16. Snapchat’s Original Name Was Picaboo

In fact, Snapchat did run as Picaboo for about a year.

17. Snapchat Was Created After 34 Failures

Snapchat creators Evan Spiegel, Bobby Murphy, and Frank Reginald Brown worked on the Snapchat project while they were studying at Stanford University.

After 34 failures, they finally developed the app as we know it today.

18. Snapchat’s Creators Had A Major Falling-Out Before The App Was Released

Frank Reginald Brown was ousted from the Snapchat project by his friends.

Although no one knows the real story, Brown claims Spiegel and Murphy changed the server passwords and ceased communication with him a month before Snapchat was launched.

19. Snapchat Downloads Doubled After The Launch Of The Toddler & Gender Swap Filters

Users downloaded Snapchat 41.5 million times in a month after the release of these filters!

20. Mark Zuckerberg Tried To Buy Snapchat

Snapchat’s owners refused to sell Snapchat to Zuckerberg (even though the offer went as high as $3 billion!).

21. Snapchat’s Mascot Is Called Ghostface Chillah

The mascot was inspired by Ghostface Killah of the Wu-Tang Clan – and when you consider that the app was once called “Picaboo,” the ghost logo makes more sense.

Apparently, Snapchat co-founder and CEO Evan Spiegel has said that he developed the mascot himself and chose a ghost based on the ephemeral nature of Snapchat content.

22. Facebook And Instagram Borrowed Ephemeral Content From Snapchat

As we mentioned above, we have Snapchat to thank for Facebook and Instagram Stories, which have since become integral to the social media experience.

Snapchat also pioneered the use of AR filters, which were adopted by Instagram and paved the way for the filters that dominate the world of TikTok today.

23. 75% Of Gen Z And Millennials Say Snapchat Is The No. 1 Platform For Sharing Real-Life Experiences

Social media is all about authentic moments and human connection – and social media marketing is no different.

With such a large number of young people preferring Snapchat over other platforms for sharing their life experiences, marketers should follow suit.

Find ways to share behind-the-scenes moments with your team and company, and emphasize the humans behind the brand.

24. Snapchat Users Have Over $4.4 Trillion In Global Spending Power

That’s nothing to sneeze at.

25. In 2022, Snapchat Generated $4.6 Billion In Revenue

It is currently valued at over $20 billion.

Looking Ahead With Snapchat

Snapchat’s ephemeral content, intimacy, and spontaneity are strong points for everyday users, content creators, and businesses alike.

Marketers should keep a keen eye on emerging trends within the platform, such as new AR advancements and evolving user demographics.

Those looking to reach younger audiences or show an authentic, human side of their brand should consider wading into the waters of Snapchat.

By harnessing the power of ephemeral content and engaging features, brands can effectively use Snapchat to grow their brand awareness, engage with audiences on a more personal level, and stay relevant in the fast-paced world of digital marketing.

Featured Image: Trismegist san/Shutterstock