SEO

First Input Delay – A Simple Explanation via @sejournal, @martinibuster

First Input Delay (FID) is a user experience metric that Google uses as a small ranking factor.

This article offers an easy-to-understand overview of FID to help make sense of the topic.

Improving First Input Delay is about more than pleasing Google. Improvements to site performance generally lead to increased sales, ad revenue, and leads.

What is First Input Delay?

FID measures the time from a site visitor’s first interaction with a page, while the page is loading, to the moment the browser is able to begin responding to that interaction. This is sometimes called Input Latency.

An interaction can be a tap or click on a button or link, or a keypress. Text input areas, dropdowns, and checkboxes are other interaction points that FID measures.

Scrolling or zooming do not count as interactions because there’s no response expected from the site itself.

The goal for FID is to measure how responsive a site is while it’s loading.
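The definition boils down to one subtraction. The sketch below assumes a plain object shaped like the browser’s `PerformanceEventTiming` entry (its `startTime` and `processingStart` fields), so it runs outside a browser. The `DISCRETE_EVENTS` list is a hypothetical simplification, not the exhaustive set of event types the browser uses.

```typescript
// Minimal shape of a first-input timing entry (mirrors PerformanceEventTiming fields).
interface FirstInputEntry {
  name: string;            // event type, e.g. "pointerdown" or "keydown"
  startTime: number;       // ms: when the visitor interacted
  processingStart: number; // ms: when the browser could start running event handlers
}

// FID is the gap between the interaction and the moment handlers could start.
function firstInputDelay(entry: FirstInputEntry): number {
  return entry.processingStart - entry.startTime;
}

// Hypothetical allow-list: discrete interactions count, scrolling and zooming do not.
const DISCRETE_EVENTS: string[] = ["pointerdown", "mousedown", "keydown", "click"];

function isCountedInteraction(eventType: string): boolean {
  return DISCRETE_EVENTS.indexOf(eventType) !== -1;
}

const tap: FirstInputEntry = { name: "pointerdown", startTime: 1200, processingStart: 1290 };
console.log(firstInputDelay(tap));           // 90
console.log(isCountedInteraction("scroll")); // false
```

In a real page, the browser delivers this entry to a `PerformanceObserver` that observes the `first-input` entry type. The subtraction is the same.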

The Cause of First Input Delay

First Input Delay is generally caused by images and scripts that download in a disorderly manner.

This disorder makes the page download pause, start, and pause again, which leaves the page unresponsive for site visitors who are trying to interact with it.

It’s like a traffic jam caused by a free-for-all where there are no traffic signals. Fixing it is about bringing order to the traffic.

Google describes the cause of input latency like this:

“In general, input delay (a.k.a. input latency) happens because the browser’s main thread is busy doing something else, so it can’t (yet) respond to the user.

One common reason this might happen is the browser is busy parsing and executing a large JavaScript file loaded by your app.

While it’s doing that, it can’t run any event listeners because the JavaScript it’s loading might tell it to do something else.”
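Google’s point is that an input has to wait while the main thread is busy. A toy timeline makes this concrete. This is a simplified single-thread model under assumed timings: the task labels and durations are made up, and an input arriving mid-task waits exactly until that task ends.

```typescript
// A task occupying the main thread from `start` for `duration` ms.
interface MainThreadTask {
  label: string;
  start: number;
  duration: number;
}

// Simplified model: one task runs at a time; an input that arrives
// mid-task waits until that task finishes before handlers can run.
function inputDelayAt(inputTime: number, tasks: MainThreadTask[]): number {
  for (const task of tasks) {
    const end = task.start + task.duration;
    if (inputTime >= task.start && inputTime < end) {
      return end - inputTime;
    }
  }
  return 0; // main thread idle: handlers can start immediately
}

const timeline: MainThreadTask[] = [
  { label: "parse HTML", start: 0, duration: 40 },
  { label: "execute large bundle.js", start: 50, duration: 400 },
];

console.log(inputDelayAt(100, timeline)); // 350: the tap waits for the big script
console.log(inputDelayAt(460, timeline)); // 0: the thread is idle
```

The same tap costs 350 ms or nothing depending only on when it happens. This is also why FID scores vary from visitor to visitor.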

How to Fix Input Latency

Since the root cause of First Input Delay is the disorganized download of scripts and images, the way to fix the problem is to thoughtfully bring order to how those scripts and images are presented to the browser for download.

Solving the problem of FID generally consists of using HTML attributes to control how scripts download, optimizing images (the HTML and the images), and thoughtfully omitting unnecessary scripts.

The goal is to optimize what is downloaded to eliminate the typical pause-and-start downloading of unorganized web pages.

Why Browsers Become Unresponsive

Browsers are software that complete tasks to show a web page. The tasks consist of downloading code, images, fonts, style information, and scripts, and then running (executing) the scripts and building the web page according to the HTML instructions.

This process is called rendering. The word render means “to make,” and that’s what a browser does by assembling the code and images to render a web page.

These rendering tasks run on threads, short for “thread of execution.” A thread is an individual sequence of actions (in this case, the many individual tasks done to render a web page).

In a browser, there is one thread called the Main Thread and it is responsible for creating (rendering) the web page that a site visitor sees.

The main thread can be visualized as a highway in which cars are symbolic of the images and scripts that are downloading and executing when a person visits a website.

Some code is large and slow. This causes the other tasks to stop and wait for the big and slow code to get off the highway (finish downloading and executing).

The goal is to code the web page so that the browser downloads and executes code in an orderly sequence, with the most important code first, so that the page loads as fast as possible.

Don’t Lose Sleep Over Third-Party Code

When it comes to Core Web Vitals, and especially First Input Delay, you’ll find there is some code you just can’t do much about. However, this is likely to be the case for your competitors as well.

For example, if your business depends on Google AdSense (a big render-blocking script), competitors who use it face the same problem. Solutions like lazy loading ads with Google Ad Manager can help.

In some cases, it may be enough to do the best you can because your competitors may not do any better either.

In those cases, it’s best to take your wins where you can find them. Don’t sweat the losses where you can’t make a change.

JavaScript Impact on First Input Delay

JavaScript is like a little engine that makes things happen. When a name is entered on a form, JavaScript might be there to make sure both the first and last names are entered.

When a button is pressed, JavaScript may be there to tell the browser to spawn a thank you message in a popup.

The problem with JavaScript is that it not only has to download but also has to run (execute). So those are two things that contribute to input latency.

If a big JavaScript file is located near the top of the page, that file is going to block the rest of the page beneath it from rendering (becoming visible and interactive) until that script is finished downloading and executing.

This is called blocking the page.

The obvious solution is to relocate these kinds of scripts from the top of the page and put them at the bottom so they don’t interfere with all the other page elements that are waiting to render.

But this can be a problem if, for example, it’s placed at the end of a very long web page.

This is because once the large page is loaded and the user is ready to interact with it, the browser will still be signaling that it is downloading (because the big JavaScript file is lagging at the end). The page may download faster but then stall while waiting for the JavaScript to execute.

There’s a solution for that!

Defer and Async Attributes

The Defer and Async HTML attributes are like traffic signals that control the start and stop of how JavaScript downloads and executes.

An HTML attribute is something that transforms an HTML element, kind of like extending the purpose or behavior of the element.

It’s like when you learn a skill; that skill becomes an attribute of who you are.

In this case, the Defer and Async attributes tell the browser to not block HTML parsing while downloading. These attributes tell the browser to keep the main thread going while the JavaScript is downloading.

Async Attribute

A JavaScript file with the Async attribute downloads in parallel with the rest of the page and executes as soon as its download finishes. Execution is the point at which the file blocks the main thread.

Normally, the file would block the main thread when it begins to download. But not with the async (or defer) attribute.

This is called an asynchronous download, where it downloads independently of the main thread and in parallel with it.

The async attribute is useful for third-party JavaScript files like advertising and social sharing — files where the order of execution doesn’t matter.

Defer Attribute

JavaScript files with the “defer” attribute will also download asynchronously.

But a deferred JavaScript file will not execute until the browser has finished parsing the entire HTML document. Deferred scripts also execute in the order in which they appear on the web page.

Scripts with the defer attribute are useful for JavaScript files that depend on page elements being loaded, or when the order in which they execute matters.

In general, use the defer attribute for scripts that aren’t essential to the rendering of the page itself.
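The difference between the two attributes is easiest to see as an execution order. The small TypeScript model below estimates when `<script async>` and `<script defer>` files would run. It is a sketch under simplifying assumptions, not how a browser is implemented: execution takes zero time, async scripts are assumed not to pause the parser, and the file names and download times are made up.

```typescript
type LoadMode = "async" | "defer";

interface ScriptTag {
  src: string;
  mode: LoadMode;
  downloadedAt: number; // ms when the download finishes (hypothetical timing)
}

interface Execution {
  src: string;
  at: number;    // ms when the script runs in this model
  order: number; // position in the document, used to break ties
}

function executionOrder(scripts: ScriptTag[], parseEnd: number): string[] {
  const runs: Execution[] = [];
  let lastDeferRun = parseEnd; // deferred scripts wait for HTML parsing to finish
  scripts.forEach((script, index) => {
    if (script.mode === "async") {
      // async: runs as soon as its own download completes
      runs.push({ src: script.src, at: script.downloadedAt, order: index });
    } else {
      // defer: runs after parsing, and never before an earlier deferred script
      lastDeferRun = Math.max(lastDeferRun, script.downloadedAt);
      runs.push({ src: script.src, at: lastDeferRun, order: index });
    }
  });
  runs.sort((a, b) => a.at - b.at || a.order - b.order);
  return runs.map((r) => r.src);
}

const scriptsOnPage: ScriptTag[] = [
  { src: "analytics.js", mode: "async", downloadedAt: 300 },
  { src: "ads.js", mode: "async", downloadedAt: 120 },
  { src: "app.js", mode: "defer", downloadedAt: 450 },
  { src: "widgets.js", mode: "defer", downloadedAt: 200 },
];

console.log(executionOrder(scriptsOnPage, 400).join(", "));
// ads.js, analytics.js, app.js, widgets.js
```

The async files run in the order their downloads finish, which is why ads.js runs before analytics.js. The deferred app.js still runs before widgets.js, even though widgets.js finished downloading first, because deferred scripts keep their document order.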

Input Latency is Different for All Users

It’s important to be aware that First Input Delay scores are variable and inconsistent. The scores vary from visitor to visitor.

This variance in scores is unavoidable because the score depends on interactions that are particular to the individual visiting a site.

Some visitors might be distracted and not interact until a moment where all the assets are loaded and ready to be interacted with.

This is how Google describes it:

“Not all users will interact with your site every time they visit. And not all interactions are relevant to FID…

In addition, some users’ first interactions will be at bad times (when the main thread is busy for an extended period of time), and some user’s first interactions will be at good times (when the main thread is completely idle).

This means some users will have no FID values, some users will have low FID values, and some users will probably have high FID values.”
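Because of this spread, FID is judged across many visits rather than one. Google’s Core Web Vitals assessments use the 75th percentile of page loads. The sketch below uses a simple nearest-rank percentile and skips visits with no FID value. The sample data is hypothetical, and real field-data tools may compute percentiles differently.

```typescript
// One entry per page visit; null means the visitor never interacted, so no FID was recorded.
type FidSample = number | null;

// Simple nearest-rank percentile over the visits that have an FID value.
function fidPercentile(samples: FidSample[], p: number): number | null {
  const values = samples
    .filter((v): v is number => v !== null)
    .sort((a, b) => a - b);
  if (values.length === 0) return null;
  const rank = Math.min(values.length, Math.max(1, Math.ceil((p / 100) * values.length)));
  return values[rank - 1];
}

// Hypothetical field data: two visitors never interacted, one hit a busy main thread.
const visits: FidSample[] = [12, null, 8, 250, null, 40, 16, 95];
console.log(fidPercentile(visits, 50)); // 16
console.log(fidPercentile(visits, 75)); // 95
```

The median visitor barely notices a delay here, but the 75th percentile is much worse. This is why a few visitors who tap at bad moments can decide the score.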

Why Most Sites Fail FID

Unfortunately, many content management systems, themes, and plugins were not built to comply with this relatively new metric.

This is the reason why so many publishers are dismayed to discover that their sites don’t pass the First Input Delay test.

But that’s changing as the web software development community responds to demands for different coding standards from the publishing community.

And it’s not that the software developers making content management systems are at fault for producing products that don’t measure up against these metrics.

For example, WordPress addressed a shortcoming in the Gutenberg website editor that was causing it to score less well than it could.

Gutenberg is a visual way to build sites using the interface, or metaphor, of blocks. There’s a widgets block, a contact form block, a footer block, and so on.

So the process of creating a web page is more visual and done through the metaphor of building blocks, literally building a page with different blocks.

There are different kinds of blocks that look and behave in different ways. Each individual block has a corresponding style code (CSS), with much of it being specific and unique to that individual block.

The standard way of coding these styles is to create one style sheet containing the styles that are unique to each block. It makes sense to do it this way because you have a central location where all the code specific to blocks exists.

The result is that on a page that might consist of (let’s say) twenty blocks, WordPress would load the styles for those blocks plus all the other blocks that aren’t being used.

Before Core Web Vitals (CWV), that was considered the standard way to package up CSS.

Since the introduction of Core Web Vitals, that practice is considered code bloat.

This is not meant as a slight against the WordPress developers. They did a fantastic job.

This is just a reflection of how rapidly changing standards can hit a bottleneck at the software development stage before being integrated into the coding ecosystem.

We went through the same thing with the transition to mobile-first web design.

Gutenberg 10.1 Improved Performance

WordPress Gutenberg 10.1 introduced an improved way to load the styles by only loading the styles that were needed and not loading the block styles that weren’t going to be used.

This is a huge win for WordPress, the publishers who rely on WordPress, and of course, the users who visit sites created with WordPress.
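The Gutenberg 10.1 change can be pictured as swapping one function for another. The TypeScript below is not WordPress’s own code. It is a hypothetical sketch with made-up CSS strings, using Gutenberg-style block names such as `core/paragraph`. It contrasts loading every block’s CSS with loading only the CSS for blocks that appear on the page.

```typescript
// Hypothetical style map: block name -> the CSS that block needs.
const BLOCK_STYLES: Record<string, string> = {
  "core/paragraph": "/* paragraph styles */",
  "core/image": "/* image styles */",
  "core/gallery": "/* gallery styles */",
  "core/table": "/* table styles */",
  "core/buttons": "/* buttons styles */",
};

// Old approach: ship every block's CSS, used or not.
function allBlockStyles(styles: Record<string, string>): string[] {
  return Object.keys(styles).map((blockName) => styles[blockName]);
}

// Newer approach: ship CSS only for block types that appear on the page, once each.
function stylesForPage(blocksOnPage: string[], styles: Record<string, string>): string[] {
  const loaded: string[] = [];
  const needed: string[] = [];
  for (const block of blocksOnPage) {
    const known = Object.prototype.hasOwnProperty.call(styles, block);
    if (known && loaded.indexOf(block) === -1) {
      loaded.push(block);
      needed.push(styles[block]);
    }
  }
  return needed;
}

const blocksUsed = ["core/paragraph", "core/image", "core/paragraph"];
console.log(allBlockStyles(BLOCK_STYLES).length);           // 5
console.log(stylesForPage(blocksUsed, BLOCK_STYLES).length); // 2
```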

Time to Fix First Input Delay is Now

Moving forward, we can expect that more and more software developers responsible for the CMS, themes, and plugins will transition to First Input Delay-friendly coding practices.

But until that happens, the burden is on the publisher to take steps to improve First Input Delay. Understanding it is the first step.

Citations

Google Search Console (Core Web Vitals report)

User-centric Performance Metrics

GitHub Script for Measuring Core Web Vitals

Google Defends Lack Of Communication Around Search Updates

While Google informs the public about broad core algorithm updates, it doesn’t announce every minor change or tweak, according to Google’s Search Liaison Danny Sullivan.

The comments were in response to Glenn Gabe’s question about why Google doesn’t provide information about volatility following the March core update.

OK, I love that Google informs us about broad core updates rolling out, but why not also explain when huge changes roll out that seem like an extension of the broad core update? I mean, it’s cool that Google can decouple algorithms from broad core updates and run them separately… https://t.co/2Oan7X6FTk

— Glenn Gabe (@glenngabe) May 9, 2024

Gabe wrote:

“… when site owners think a major update is done, they are not expecting crazy volatility that sometimes completely reverses what happened with the major update.

The impact from whatever rolled out on 5/3 and now 5/8 into 5/9 has been strong.”

Sullivan explained that Google continuously updates its search ranking systems, with around 5,000 updates per year across different algorithms and components.

Many of these are minor adjustments that would go unnoticed, Sullivan says:

“If we were giving notice about all the ranking system updates we do, it would be like this:

Hi. It’s 1:14pm — we just did an update to system 112!

Hi. It’s 2:26pm — we just did an update to system 34!

That’s because we do around 5,000 updates per year.”

This is covered on our long-standing page about core updates: https://t.co/Jsq1P236ff

“We’re constantly making updates to our search algorithms, including smaller core updates. We don’t announce all of these because they’re generally not widely noticeable. Still, when released,…

— Google SearchLiaison (@searchliaison) May 9, 2024

While Google may consider these minor changes, combining thousands of those tweaks can lead to significant shifts in rankings and traffic that sites need help understanding.

More open communication from Google could go a long way.

Ongoing Shifts From Web Changes

Beyond algorithm adjustments, Sullivan noted that search results can fluctuate due to the nature of web content.

Google’s ranking systems continually process new information, Sullivan explains:

“… already launched and existing systems aren’t themselves being updated in how they operate, but the information they’re processing isn’t static but instead is constantly changing.”

Google focuses communications on major updates versus a never-ending stream of notifications about minor changes.

Sullivan continues:

“This type of constant ‘hey, we did an update’ notification stuff probably isn’t really that useful to creators. There’s nothing to ‘do’ with those types of updates.”

Why SEJ Cares

Understanding that Google Search is an ever-evolving platform is vital for businesses and publishers that rely on search traffic.

It reiterates the need for a long-term SEO strategy focused on delivering high-quality, relevant content rather than reacting to individual algorithm updates.

However, we realize Google’s approach to announcing updates can leave businesses scrambling to keep up with ranking movements.

More insight into these changes would be valuable for many.

How This Can Help You

Knowing that Google processes new information in addition to making algorithm changes, you can set more realistic expectations after core updates.

Instead of assuming stability after a major update, anticipate fluctuations as Google’s systems adapt to new web data.

Featured Image: Aerial Film Studio/Shutterstock

How to Use Keywords for SEO: The Complete Beginner’s Guide

In this guide, I’ll cover in detail how to make the best use of keywords in three steps:

- Finding good keywords: keywords that are rankable and bring value to your site.

- Using keywords in content and meta tags: how to use the target keyword to structure and write content that will satisfy readers and send relevance signals to search engines.

- Tracking keywords: monitoring your (and your competitors’) performance.

There’s really a lot you can do with just a single keyword, so at the end of the article, you’ll find a few advanced SEO tips.

Once you know how to find one good keyword, you will be able to create an entire list of keywords.

1. Pick relevant seed keywords to generate keyword ideas

Seed keywords are words or phrases that you can use as the starting point in a keyword research process to unlock more keywords. For example, for our site, these could be general terms like “SEO,” “organic traffic,” “digital marketing,” “keywords,” “backlinks,” etc.

There are many good sources of seed keywords, and it’s not a bad idea to try them all:

- Brainstorming. This involves gathering a team or working solo to think deeply about the terms your potential customers might use when searching for your products or services.

- Your competitors’ website navigation. The labels they use in their navigation menus, headers, and footers often highlight critical industry terms and popular products or services that you might also want to target.

- Your competitors’ keywords. Tools like Ahrefs can help you discover which keywords your competitors are targeting in their SEO and paid ad campaigns. I’ll cover that in a bit.

- Your website and promo materials. Review your website’s text, especially high-performing pages, as well as any promotional materials like brochures, ads, and press releases. These sources can reveal the terms that already resonate with your audience.

- Generative AI. AI tools can generate keyword ideas based on brief descriptions of your business, products, or industry (example below).

Here’s what you can ask any generative AI for, whether that’s Copilot, ChatGPT, Perplexity, etc.

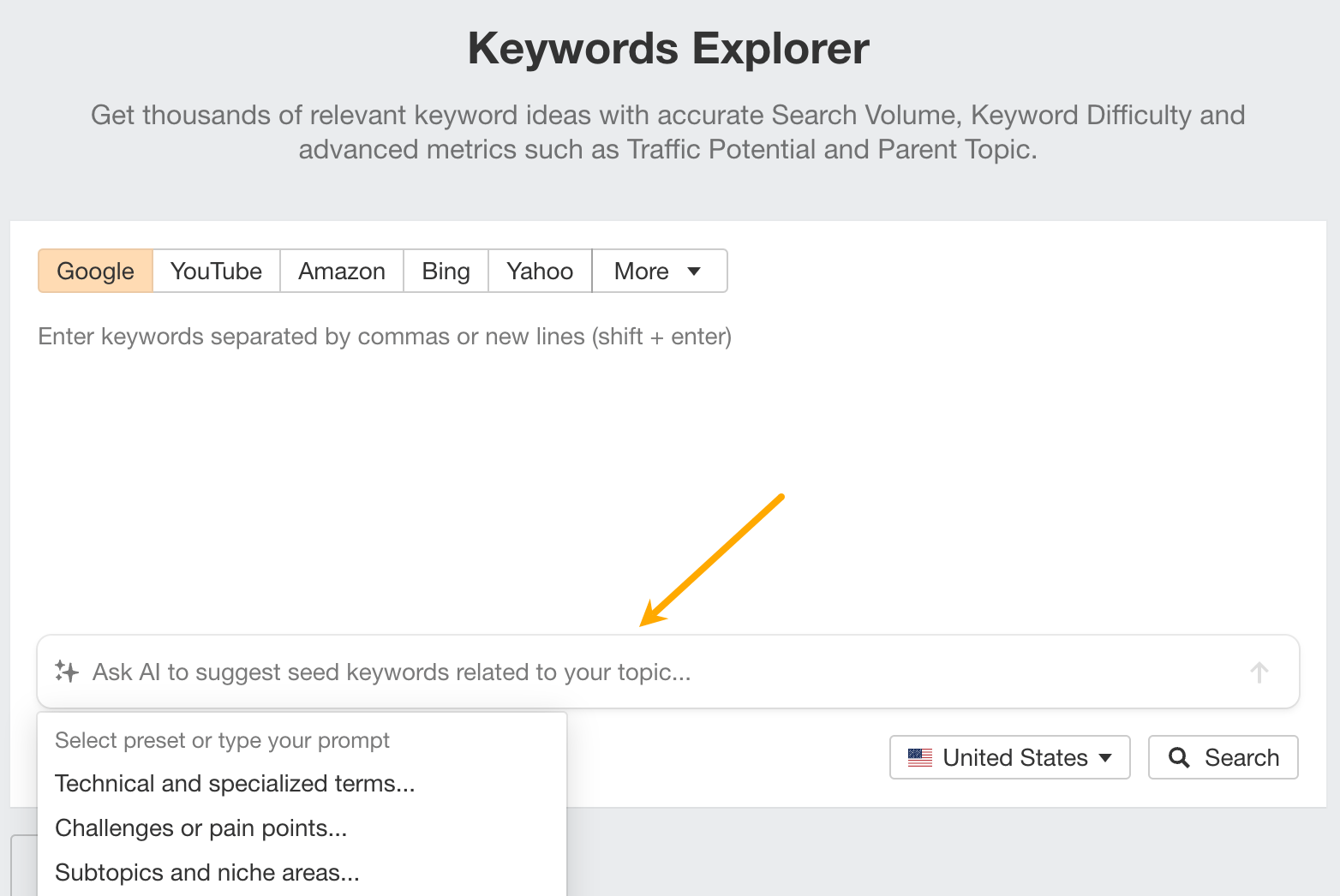

Next, paste your seed keywords into a tool like Ahrefs Keywords Explorer to generate keyword ideas. If you’re using Ahrefs, you can go straight into Keywords Explorer, get AI suggestions there, and start researching right away.

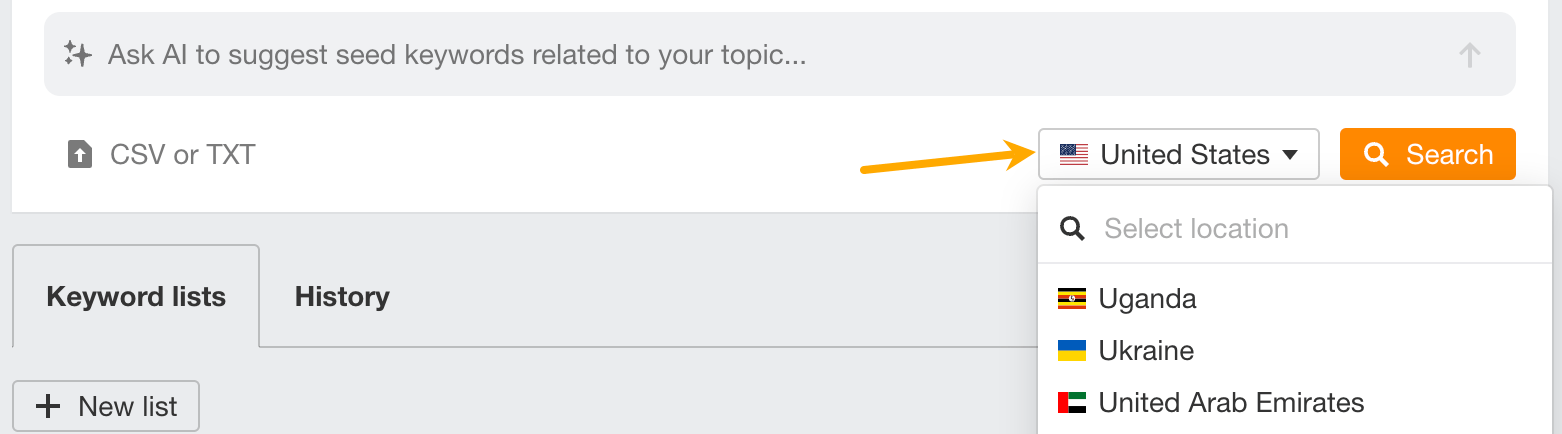

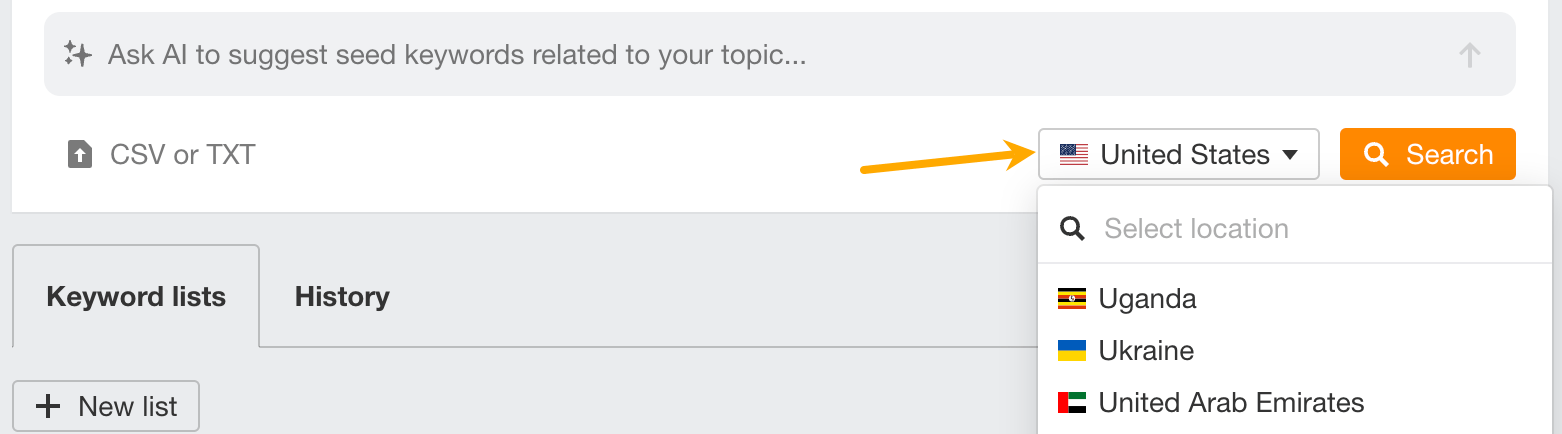

Next, make sure you’ve set the country in which you want to rank, and hit “Search”.

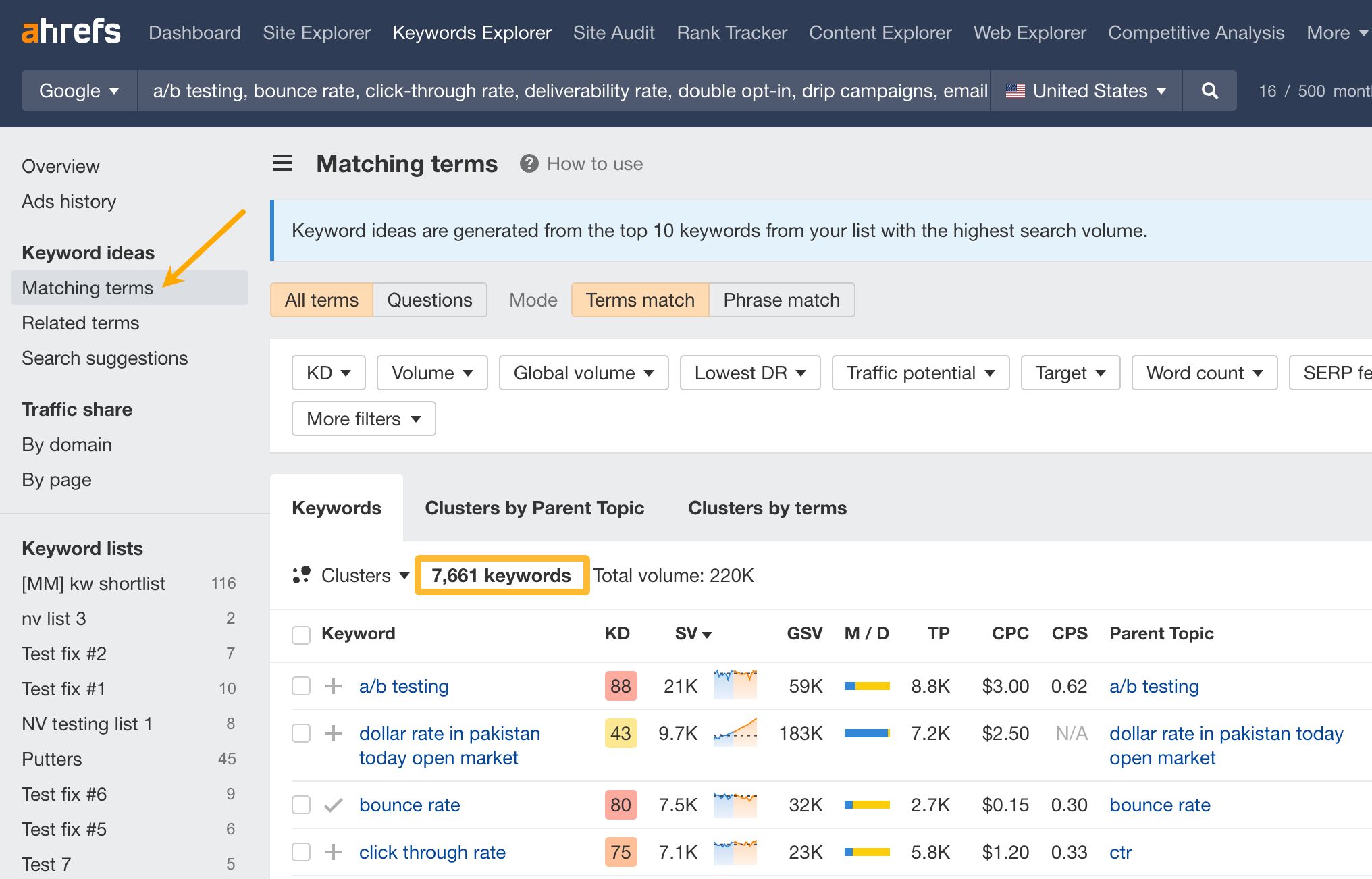

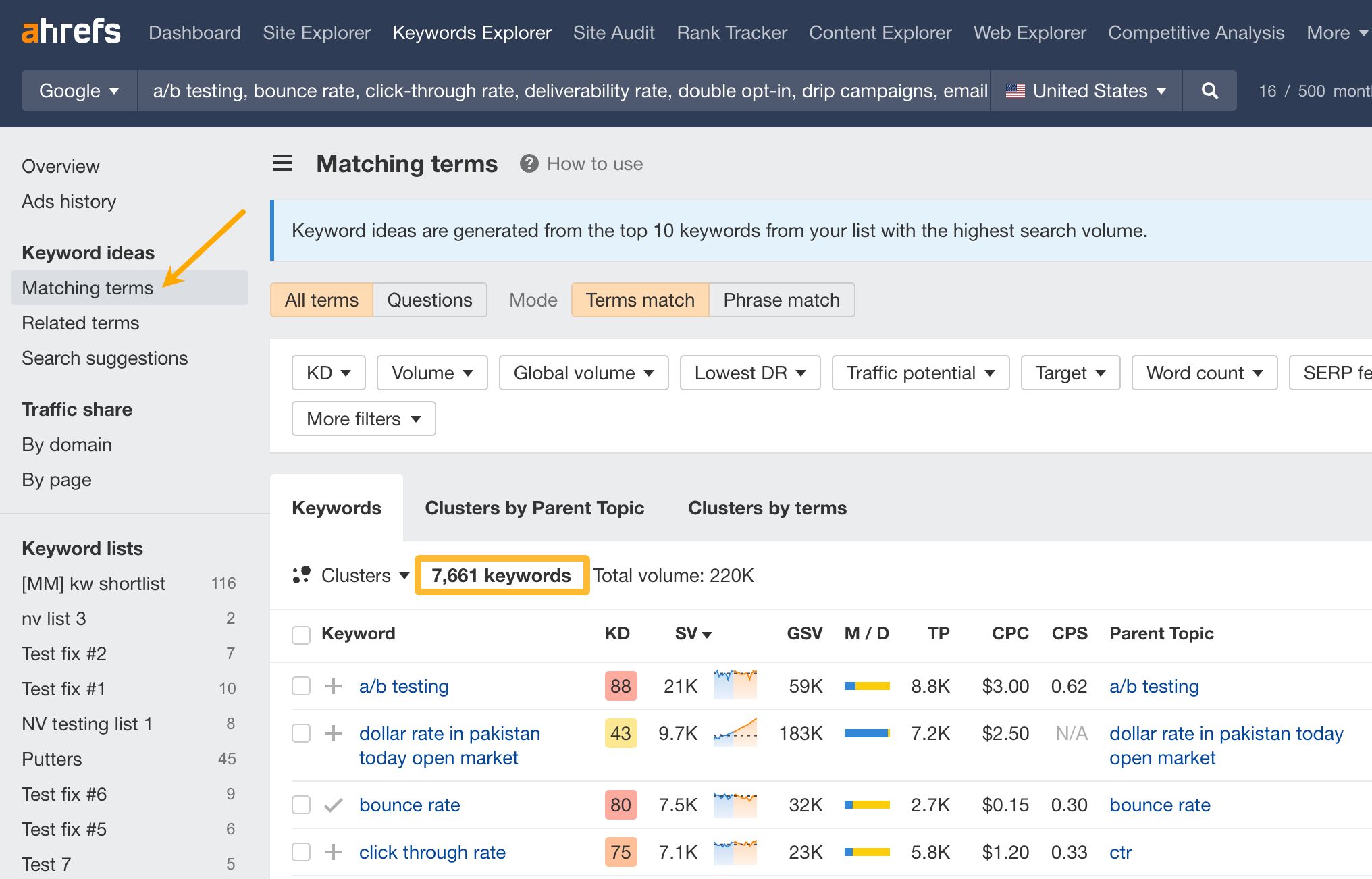

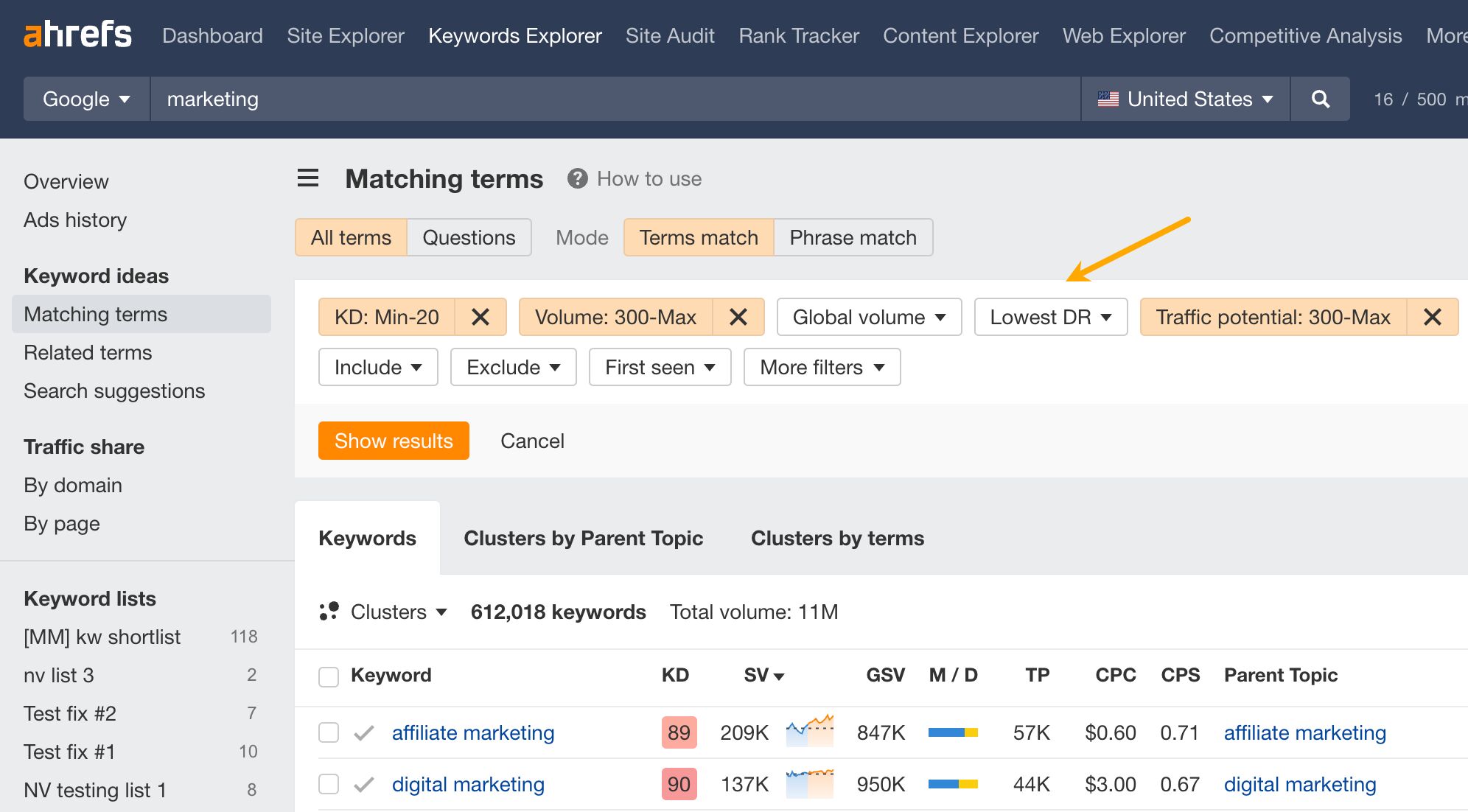

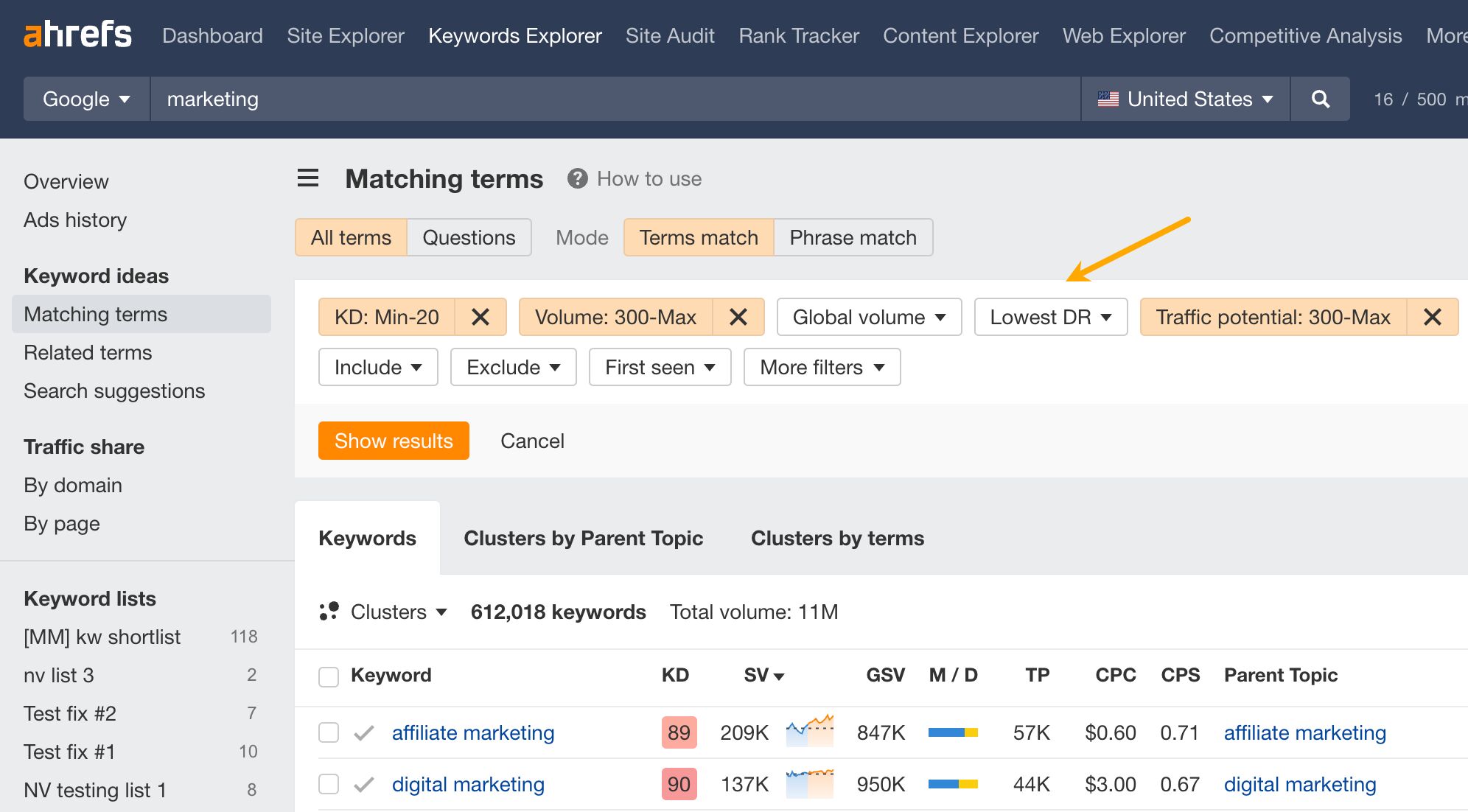

After hitting the “Search” button, go to the Matching terms report. You will see a big list of keywords.

The list you’ll get will be quite raw: not all keywords will be equally good, and the list will likely be too big to manage. The next steps are all about refining the list, because we’re looking for target keywords, the keywords that will become the topics of your content.

2. Refine the list and cluster

The next step is to refine your list using filters.

Some useful basic filters are:

- KD (Keyword Difficulty): how difficult it would be to rank on the first page of Google for a given keyword.

- Traffic potential: traffic you can get for ranking #1 for that keyword and other relevant keywords (based on the page that currently ranks #1).

- Lowest DR (Domain Rating): plug in the DR of your site to see keywords where another site with the same DR already ranks in the top 10. In other words, it helps to find “rankable” keywords.

- Target: one of the main use cases is excluding keywords you already rank for.

- Include/Exclude: see keywords that contain specific words to increase relevancy/hide keywords with irrelevant words.

For example, here’s how to find potentially rankable keywords with traffic potential above 300 monthly visits. Go to the Matching terms report in Keywords Explorer and set the filters: Keyword Difficulty (KD) to a maximum of your site’s Domain Rating, and Traffic potential and Volume to a minimum of 300.

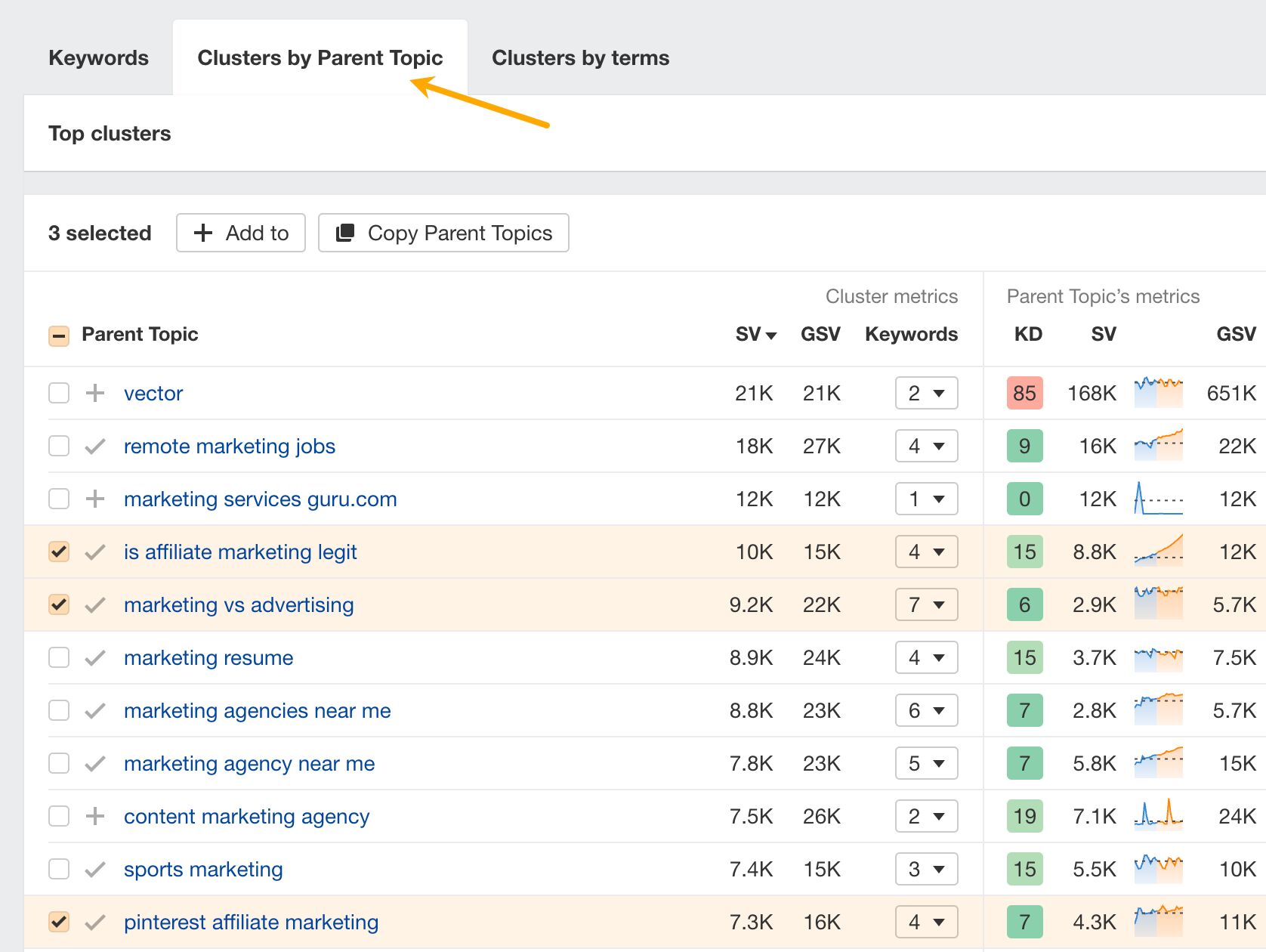

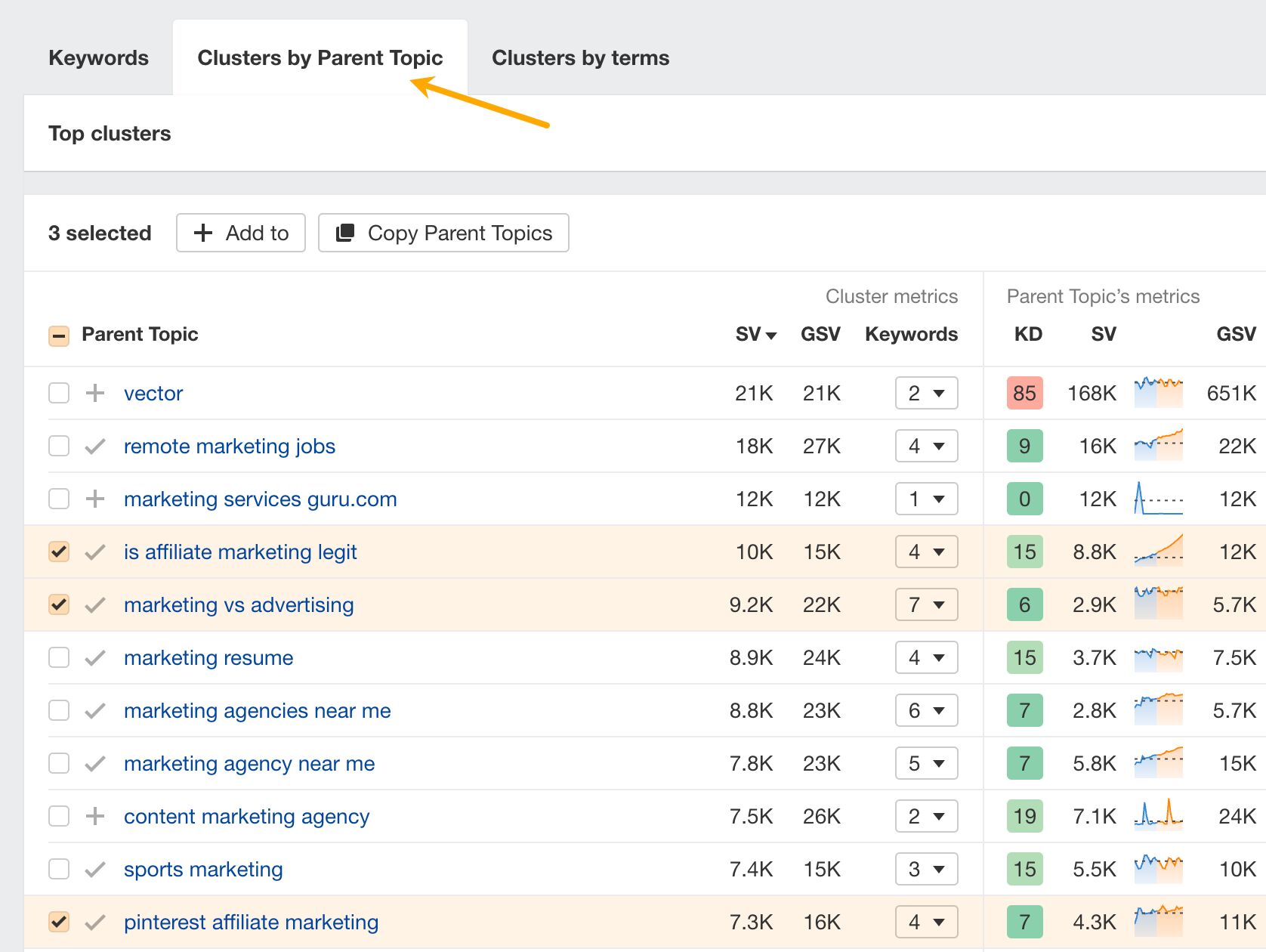

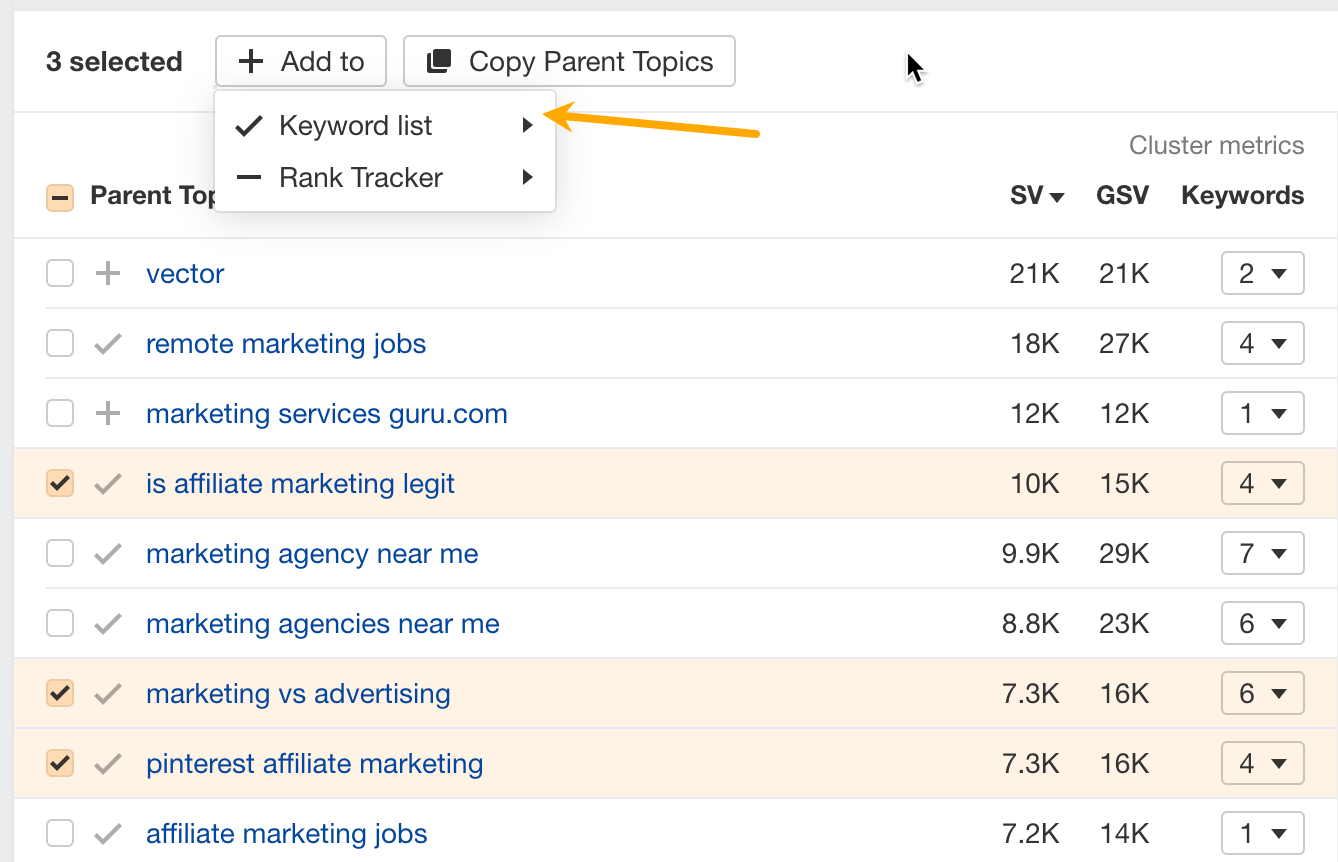

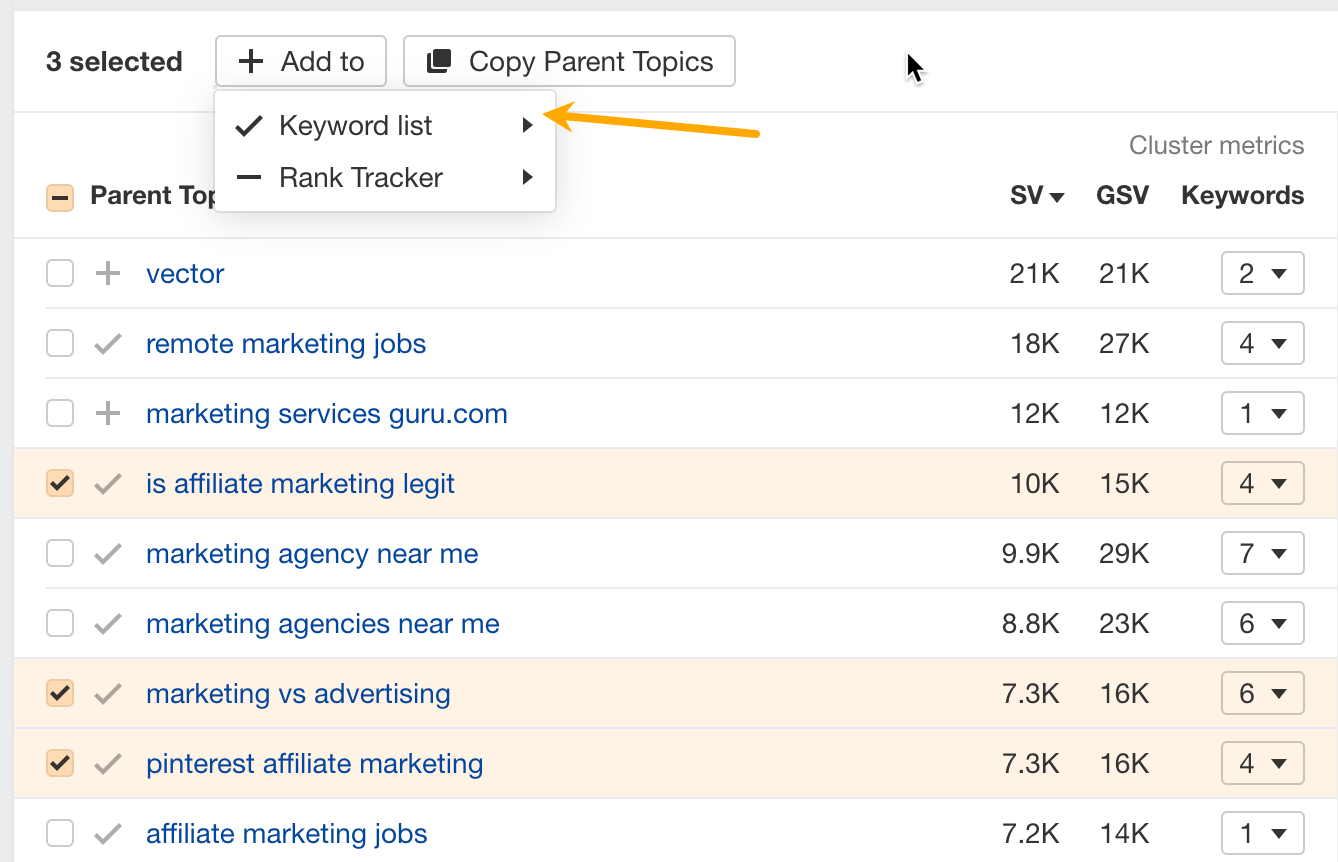
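If you export keyword ideas with these metrics, the same filters take only a few lines to apply. This is a sketch, not Ahrefs’ API. The field names, the site DR of 40, and the four sample keywords are all hypothetical.

```typescript
interface KeywordIdea {
  keyword: string;
  kd: number;               // Keyword Difficulty
  volume: number;           // monthly search volume
  trafficPotential: number; // estimated traffic for ranking #1
  lowestDr: number;         // lowest DR among sites in the current top 10
}

interface KeywordFilters {
  maxKd: number;
  maxLowestDr: number;      // your own site's DR
  minVolume: number;
  minTrafficPotential: number;
  excludeWords: string[];
}

function refineKeywords(ideas: KeywordIdea[], f: KeywordFilters): KeywordIdea[] {
  return ideas.filter((idea) => {
    const text = idea.keyword.toLowerCase();
    const excluded = f.excludeWords.some((w) => text.indexOf(w.toLowerCase()) !== -1);
    return (
      !excluded &&
      idea.kd <= f.maxKd &&
      idea.lowestDr <= f.maxLowestDr &&
      idea.volume >= f.minVolume &&
      idea.trafficPotential >= f.minTrafficPotential
    );
  });
}

const siteDr = 40; // hypothetical Domain Rating
const shortlist = refineKeywords(
  [
    { keyword: "seo tips", kd: 60, volume: 2000, trafficPotential: 5000, lowestDr: 70 },
    { keyword: "seo checklist for beginners", kd: 20, volume: 500, trafficPotential: 1200, lowestDr: 30 },
    { keyword: "free seo tool download", kd: 10, volume: 800, trafficPotential: 900, lowestDr: 20 },
    { keyword: "seo for bakeries", kd: 5, volume: 150, trafficPotential: 400, lowestDr: 10 },
  ],
  { maxKd: siteDr, maxLowestDr: siteDr, minVolume: 300, minTrafficPotential: 300, excludeWords: ["download"] }
);
console.log(shortlist.map((k) => k.keyword)); // only "seo checklist for beginners" survives
```

Each filter removes a different candidate: one is too hard, one contains an excluded word, and one has too little volume.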

Clustering is another step to refine your list. It shows you if there is another keyword you could target to get more traffic (aka parent topic). At the same time, it shows which keywords most likely belong on the same page.

For example, here are some clusters distilled from low-competition topics about marketing.
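At its simplest, clustering means grouping keywords that share a parent topic, where each group becomes a candidate page. In this sketch, the `parentTopic` values are assumed to come from your keyword tool, and the sample keywords are made up.

```typescript
interface TopicKeyword {
  keyword: string;
  parentTopic: string; // assumed: the broader topic a keyword tool reports for this keyword
}

function clusterByParentTopic(keywords: TopicKeyword[]): Record<string, string[]> {
  // Prototype-free object so a topic like "constructor" can't collide with Object methods.
  const clusters: Record<string, string[]> = Object.create(null);
  for (const k of keywords) {
    if (clusters[k.parentTopic] === undefined) {
      clusters[k.parentTopic] = [];
    }
    clusters[k.parentTopic].push(k.keyword);
  }
  return clusters;
}

const grouped = clusterByParentTopic([
  { keyword: "marketing funnel", parentTopic: "marketing funnel" },
  { keyword: "what is a marketing funnel", parentTopic: "marketing funnel" },
  { keyword: "marketing funnel stages", parentTopic: "marketing funnel" },
  { keyword: "email marketing tips", parentTopic: "email marketing" },
]);
console.log(Object.keys(grouped)); // two clusters: "marketing funnel" and "email marketing"
```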

Pro tip

Take keyword trends into account when choosing keywords.

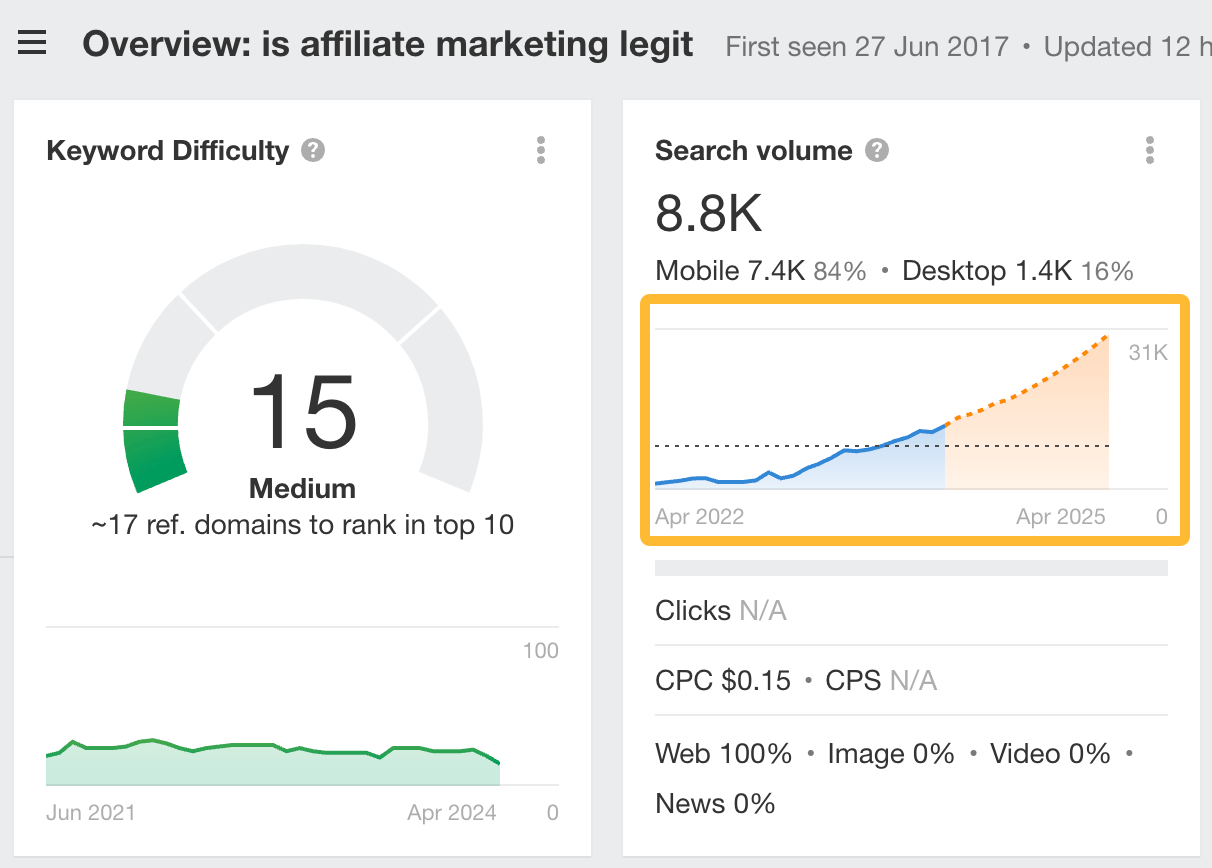

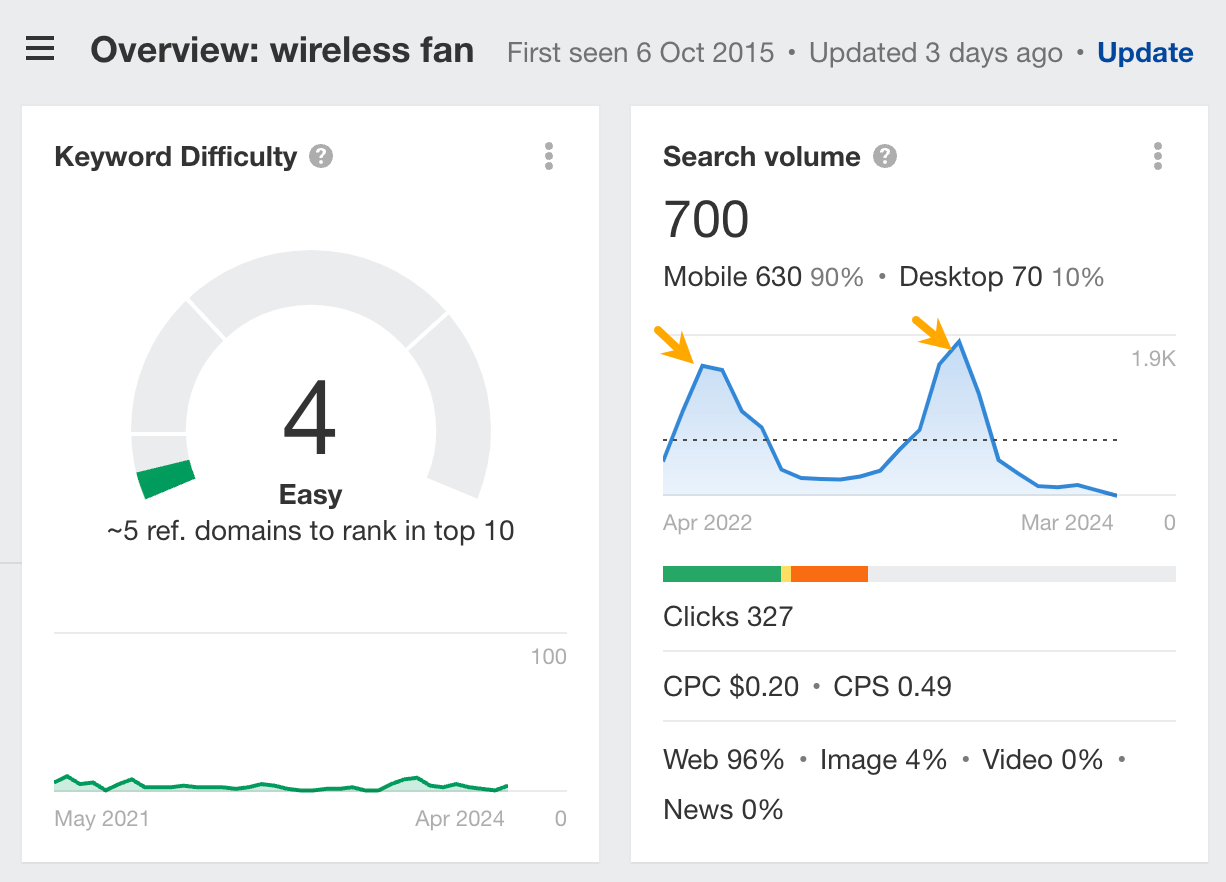

For example, the keyword “is affiliate marketing legit” is at 8.8k monthly search volume right now, but based on our forecast in Keywords Explorer (the orange part of the chart), if it continues its current growth rate it should more than triple next year.

The graphs will also show you if the search volume is affected by seasonality (fluctuations in search volume throughout different times of the year).

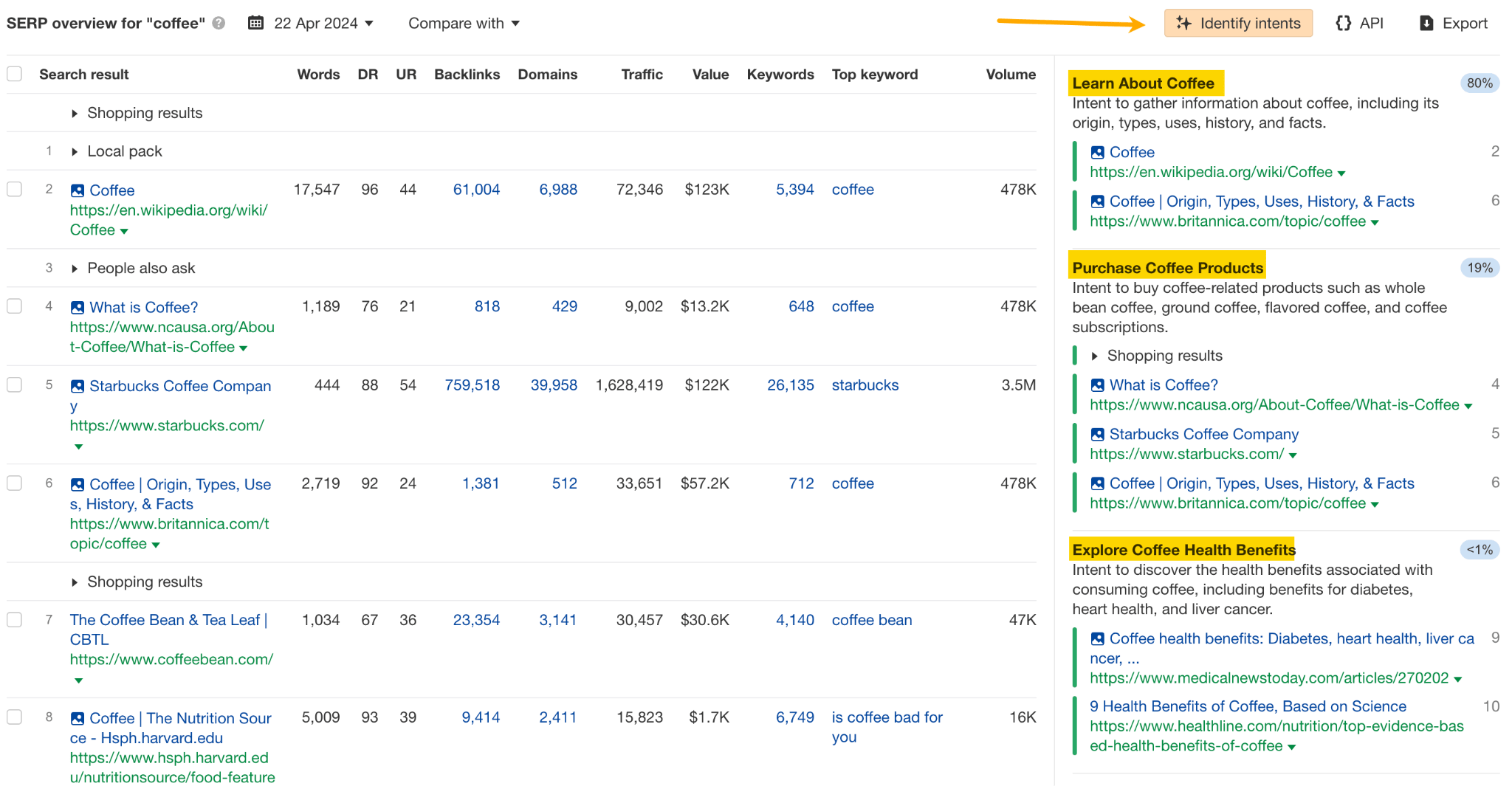

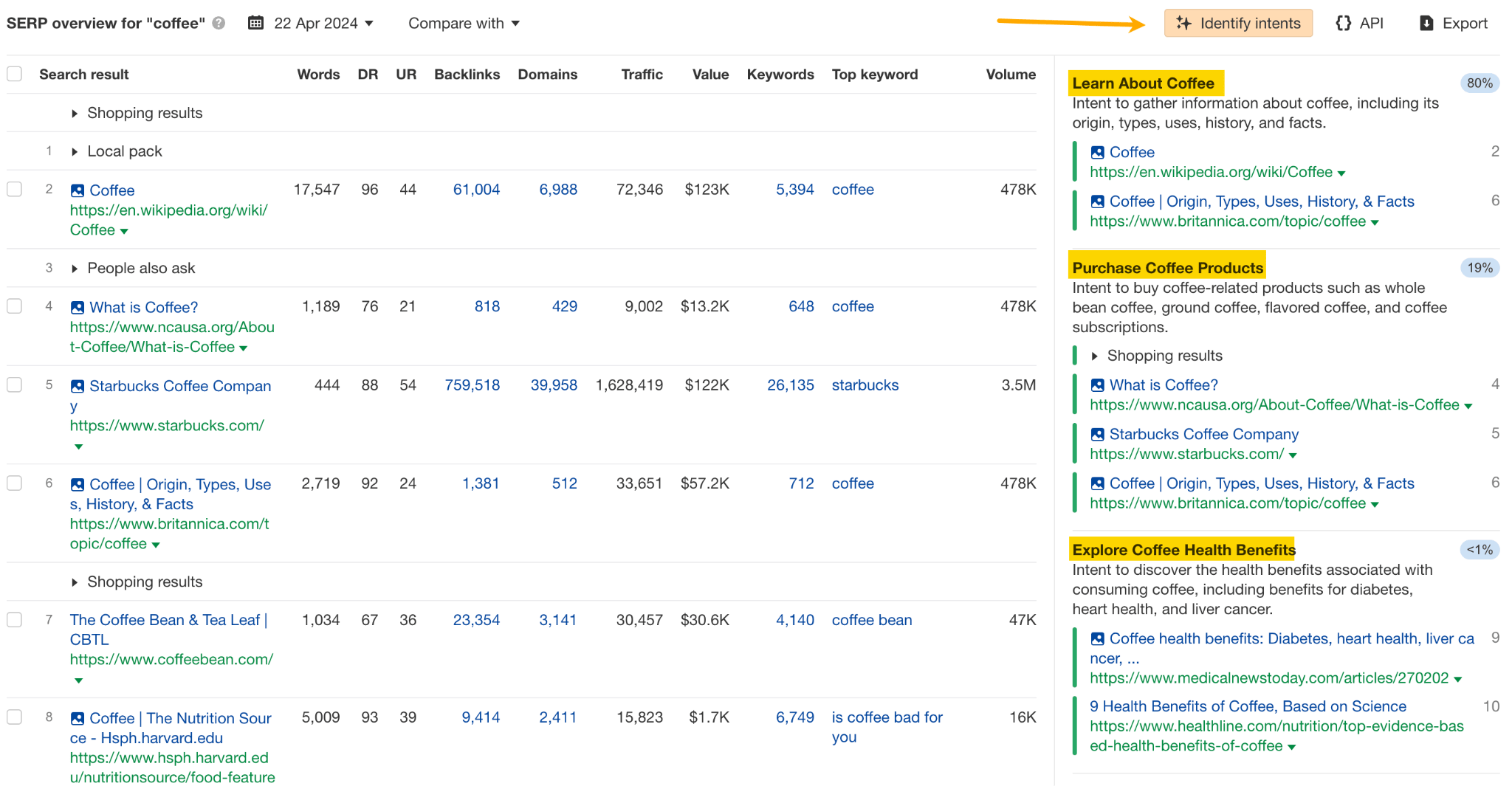

4. Identify search intent and determine value for your site

Before investing time in content, make sure you can give searchers what they want and that the keywords will attract the right kind of audience.

To identify the type of page you need to create to satisfy searchers, look at the top-ranking pages to see what purpose they serve (are they more informational or commercial), or simply use the Identify intents AI feature in Keywords Explorer.

So, for example, if the top-ranking pages are ecommerce pages and you’re not offering products on your site, it’s going to be very hard to rank.

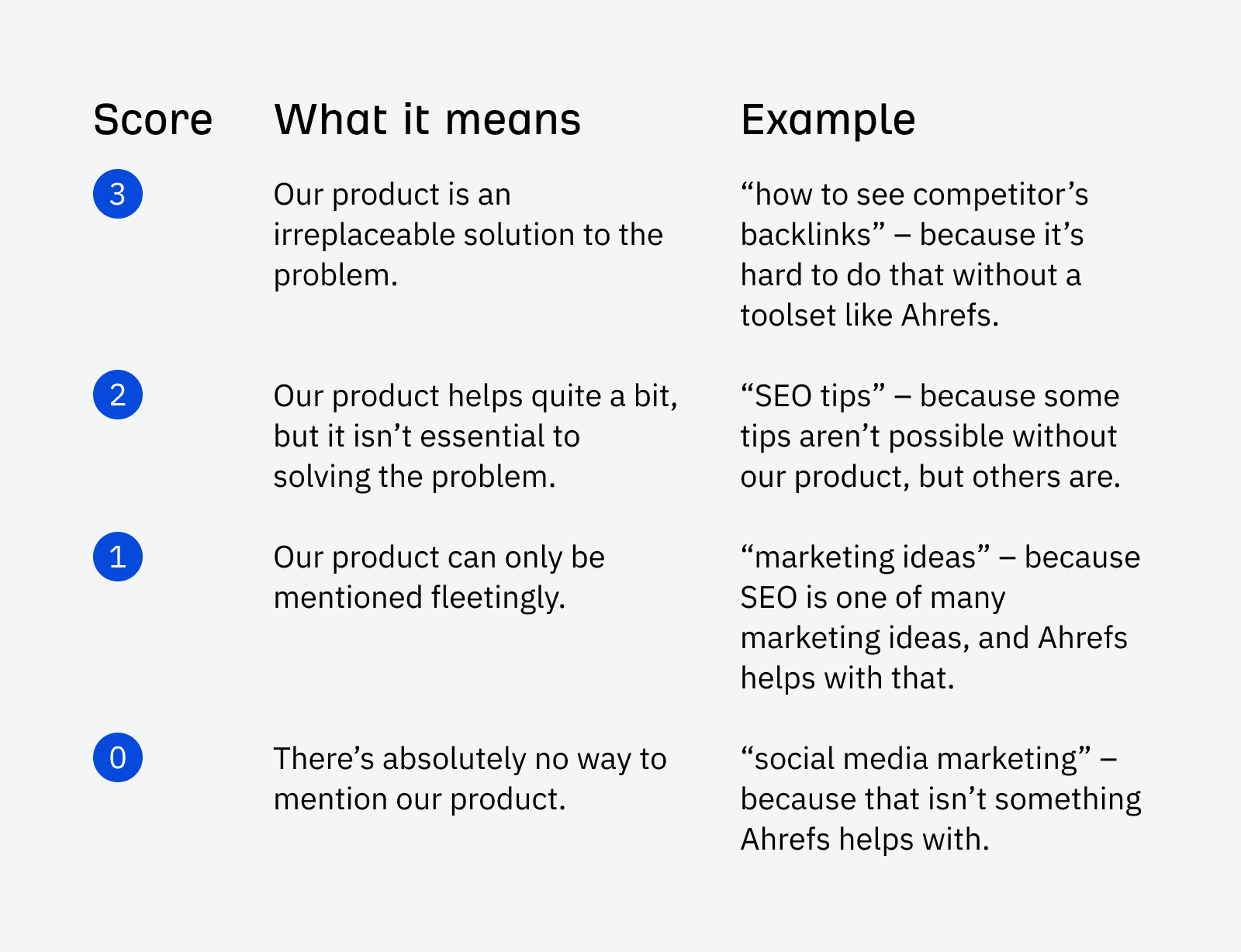

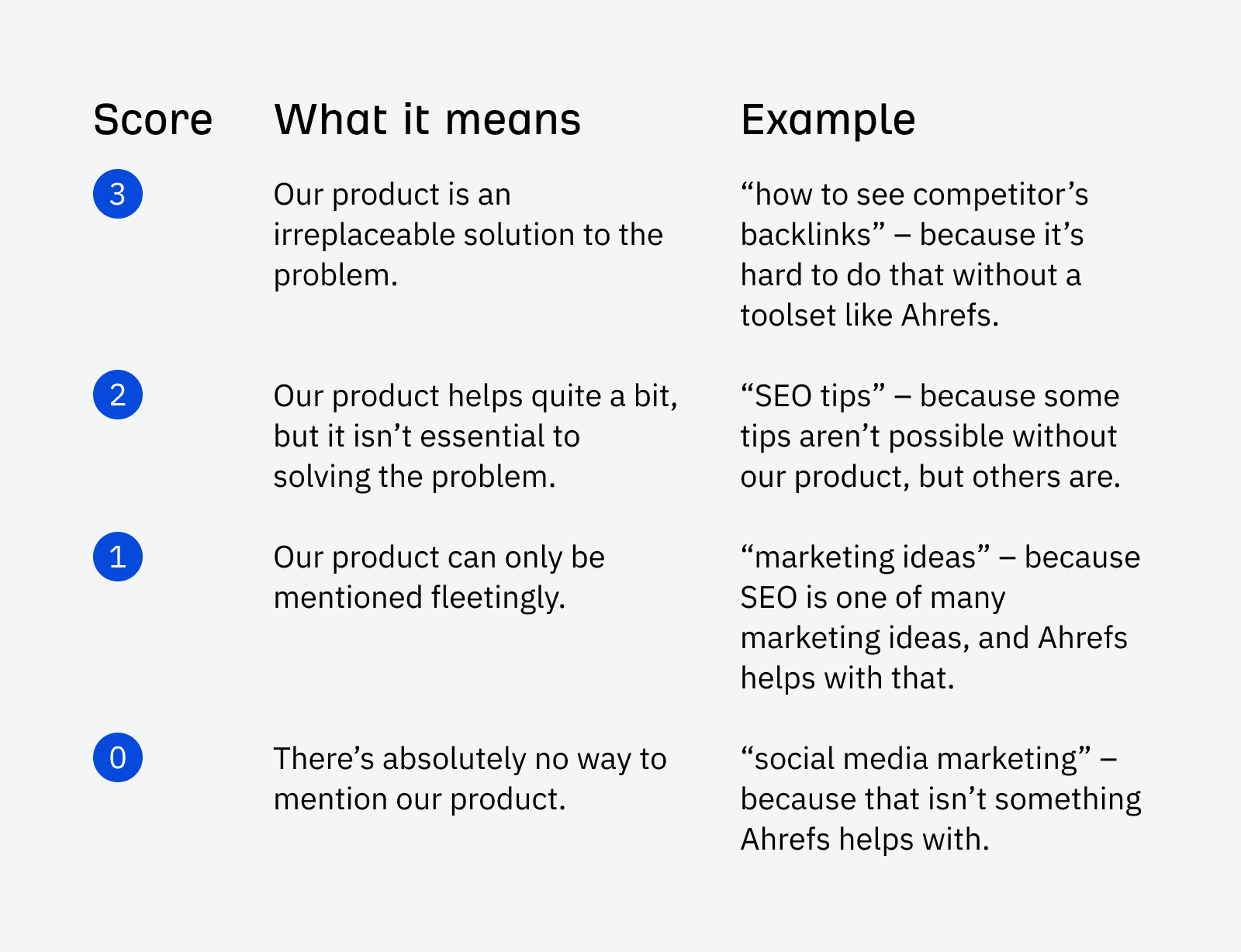

Then, ask yourself if visitors attracted by a keyword will be valuable to your business — whether they’re likely to subscribe to your newsletter or make a purchase. At Ahrefs, we use a business potential score to evaluate this.

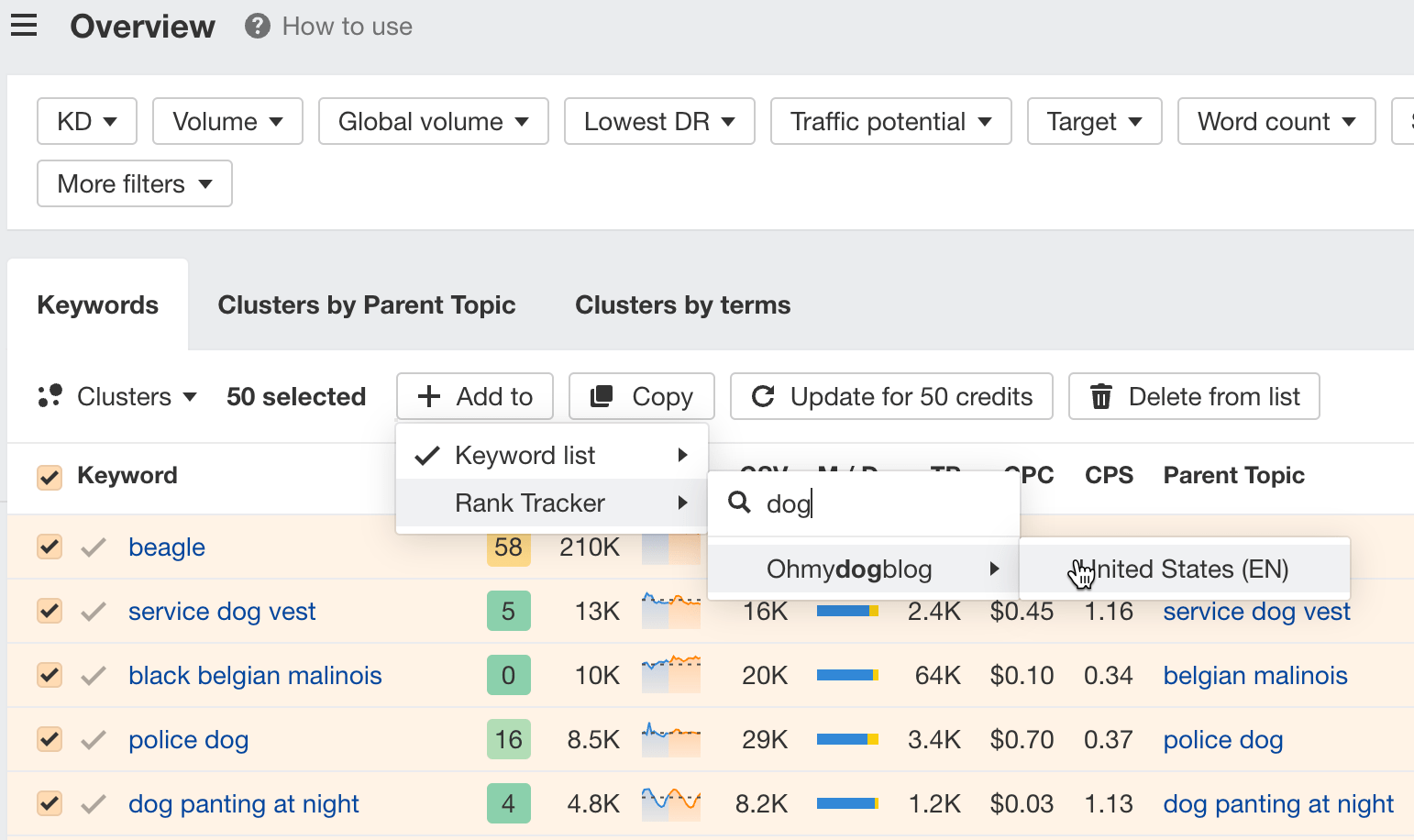

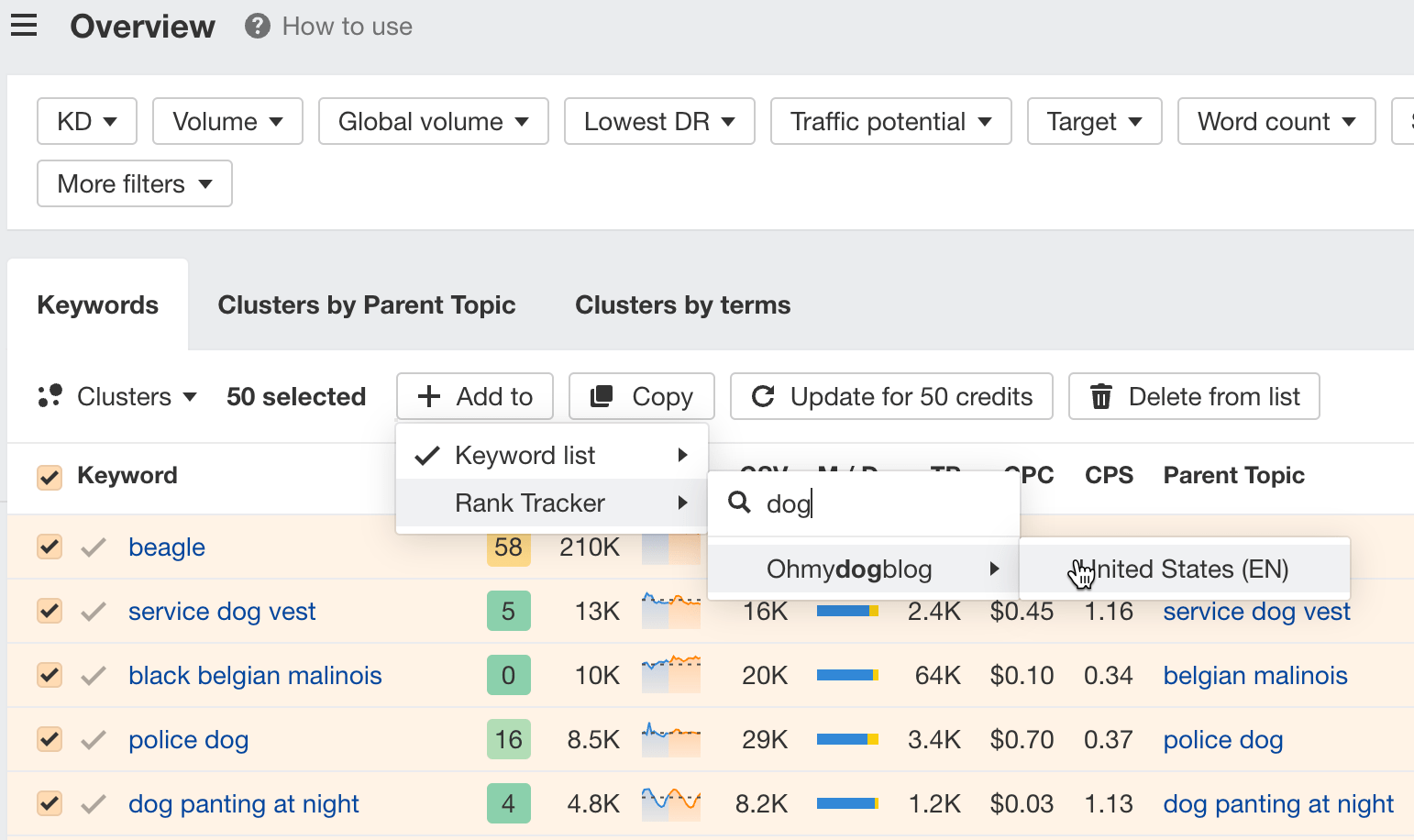

Finally, if a keyword checks all boxes, add it to a keyword list.

Now you’ve got a list of relevant, valuable target keywords with traffic potential ready for content creation. You can repeat the process as many times as you like with different seed keywords or different filters and find new ideas.
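The two checks in this step, intent fit and business value, can be written as one yes/no rule. This sketch assumes a 0 to 3 business potential scale and a minimum score of 2. The page types, keywords, and threshold are illustrative choices, not fixed rules.

```typescript
type PageType = "blog post" | "product page" | "category page" | "video";

interface Candidate {
  keyword: string;
  dominantPageType: PageType; // what most top-ranking pages are
  businessPotential: number;  // assumed 0-3 scale: 0 = product irrelevant, 3 = product essential
}

// A keyword qualifies only if you can create the page type searchers want
// and its business potential meets your (hypothetical) minimum.
function shouldTarget(c: Candidate, canCreate: PageType[], minPotential: number): boolean {
  const intentFits = canCreate.indexOf(c.dominantPageType) !== -1;
  return intentFits && c.businessPotential >= minPotential;
}

const blogOnly: PageType[] = ["blog post"];
console.log(shouldTarget({ keyword: "how to start a blog", dominantPageType: "blog post", businessPotential: 3 }, blogOnly, 2)); // true
console.log(shouldTarget({ keyword: "buy running shoes", dominantPageType: "category page", businessPotential: 3 }, blogOnly, 2)); // false
```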

There’s one more great source of keywords — competitors.

5. Enrich the list with your competitors’ keywords

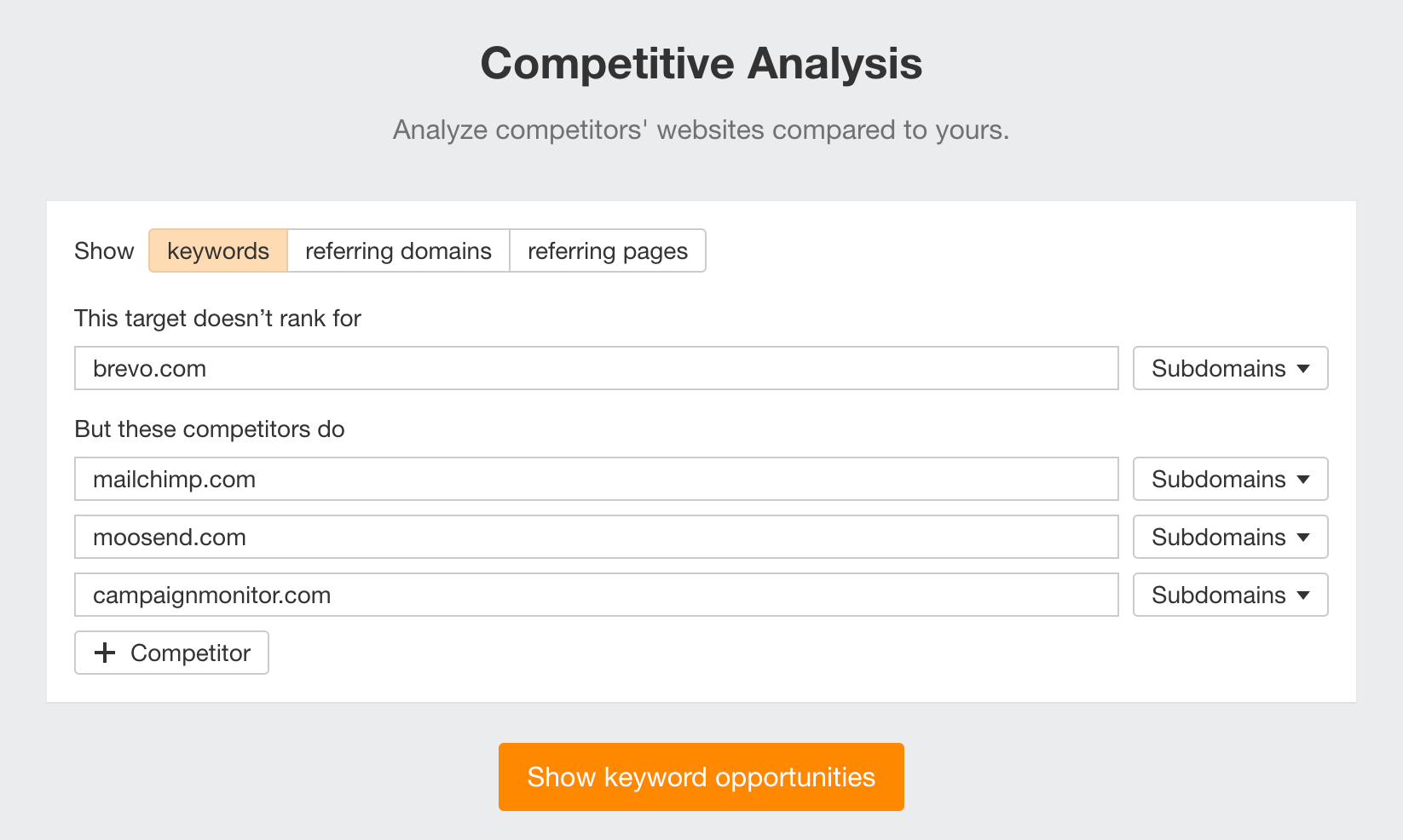

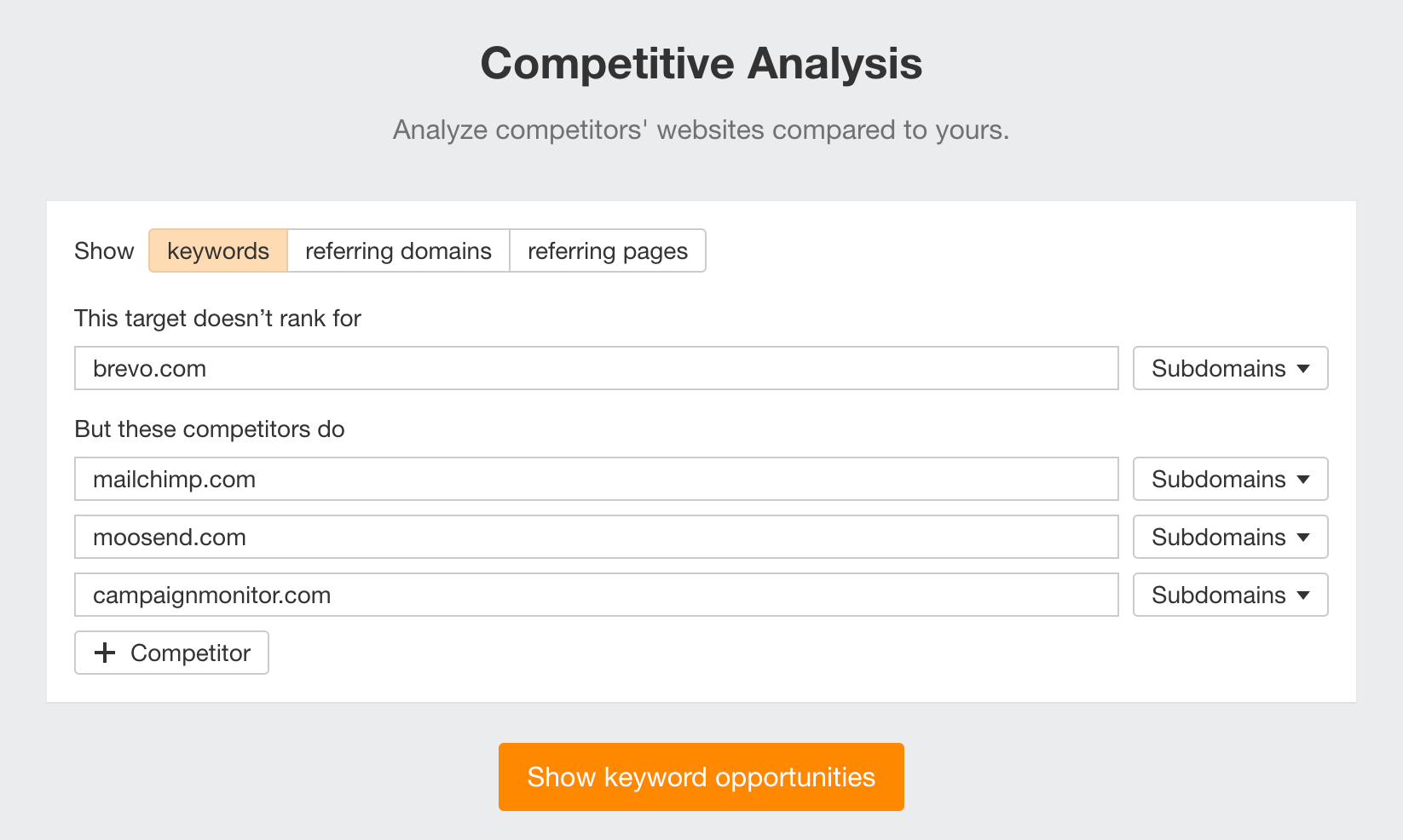

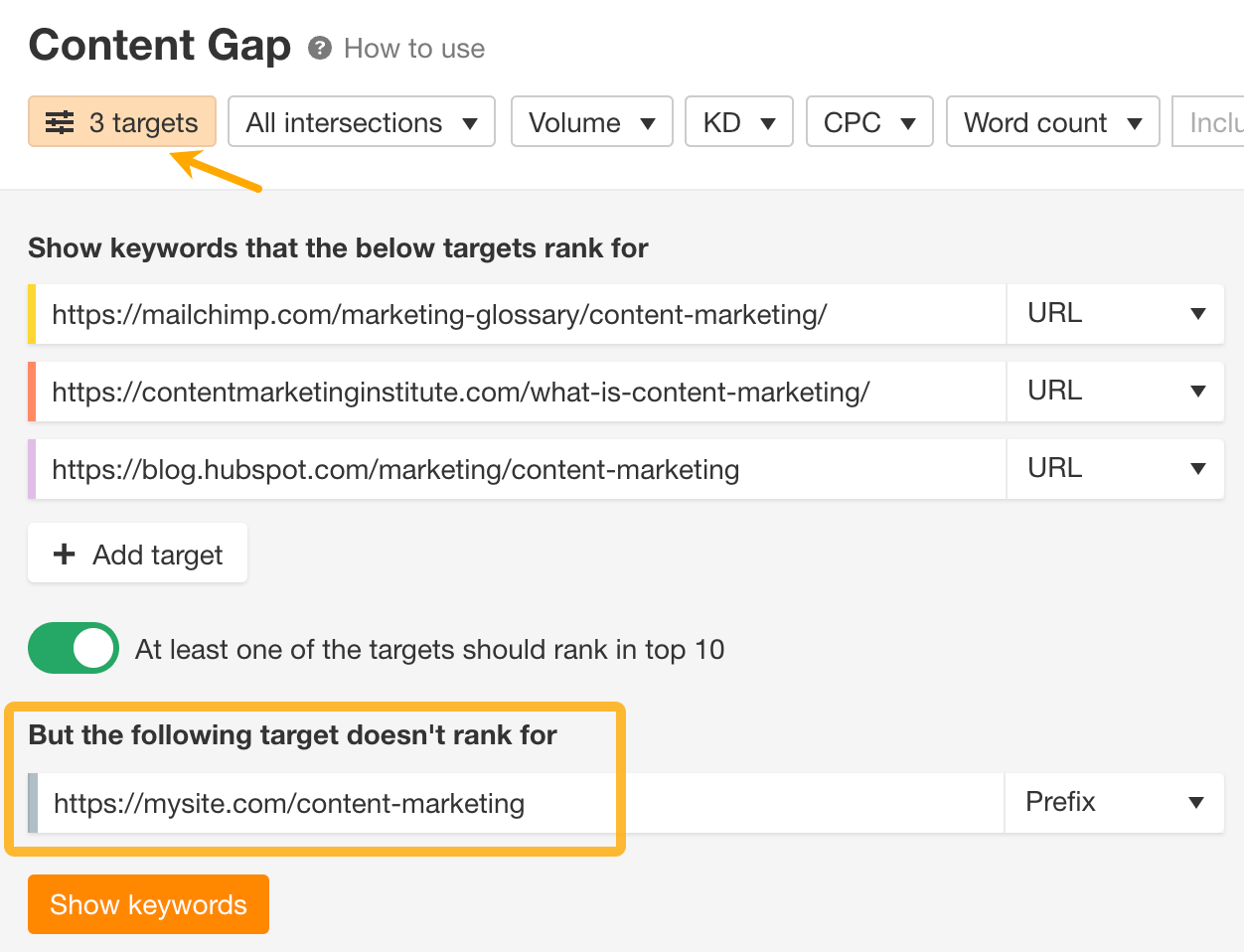

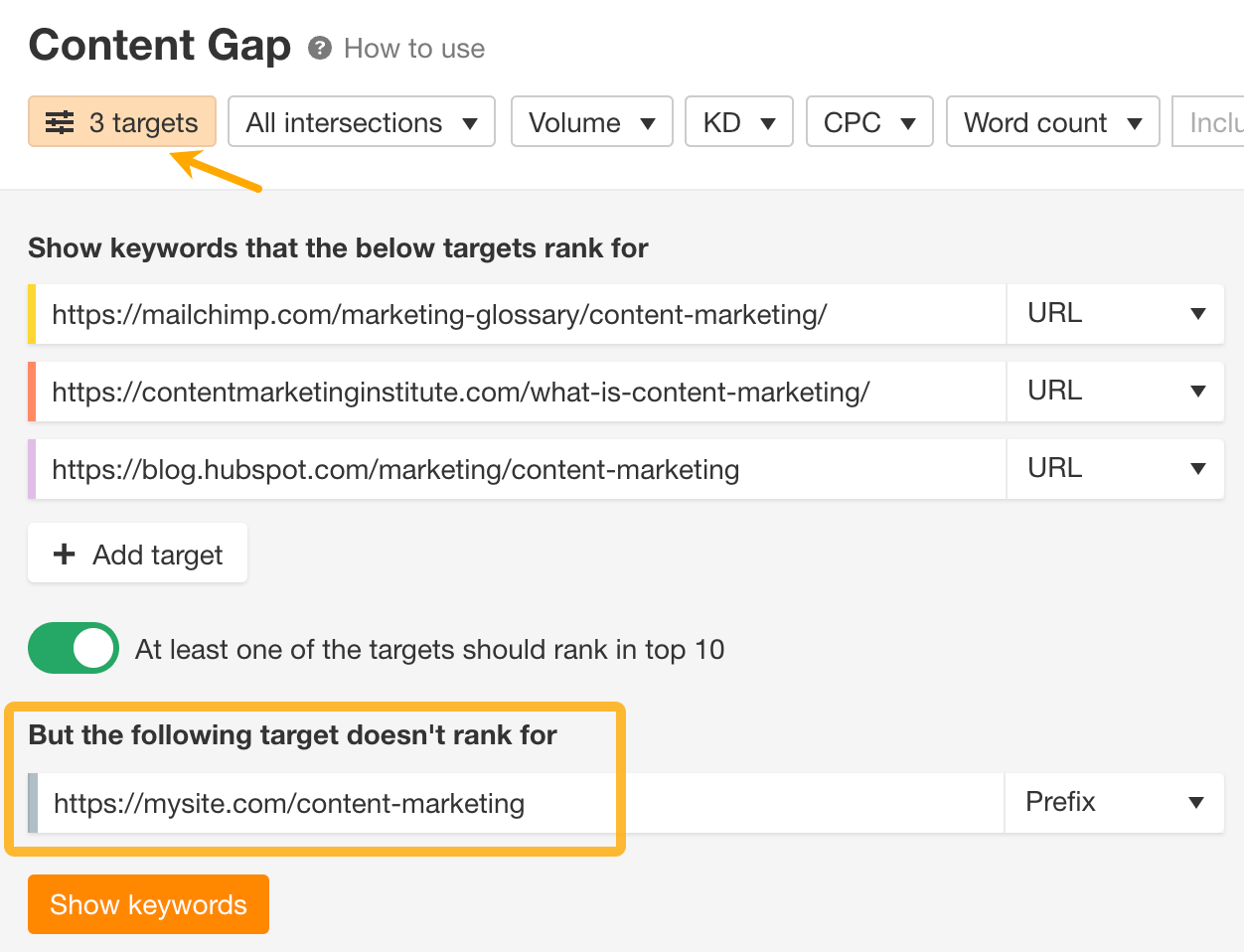

In this step, we’ll do a content gap analysis to find keywords your competitors already rank for, but you don’t.

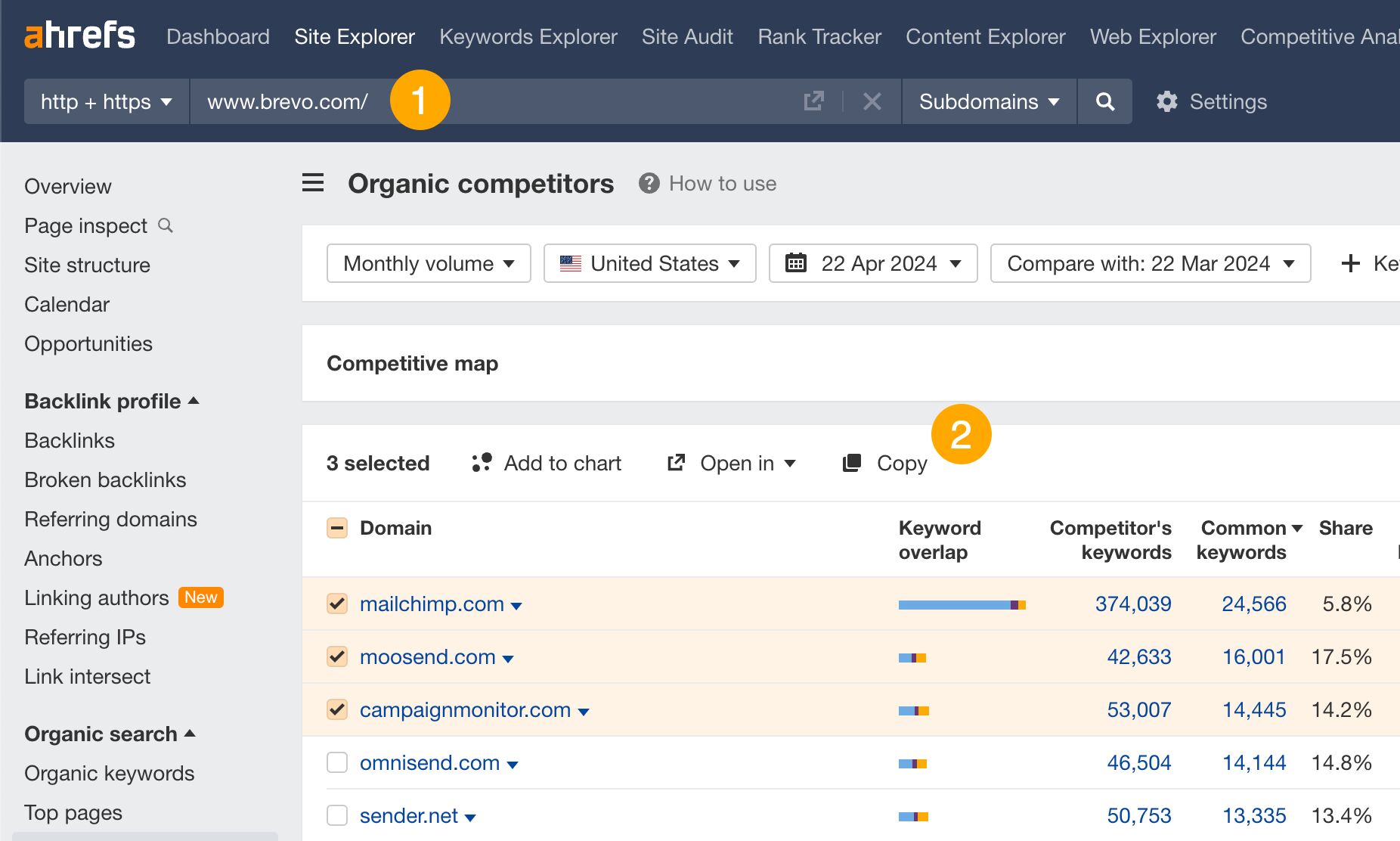

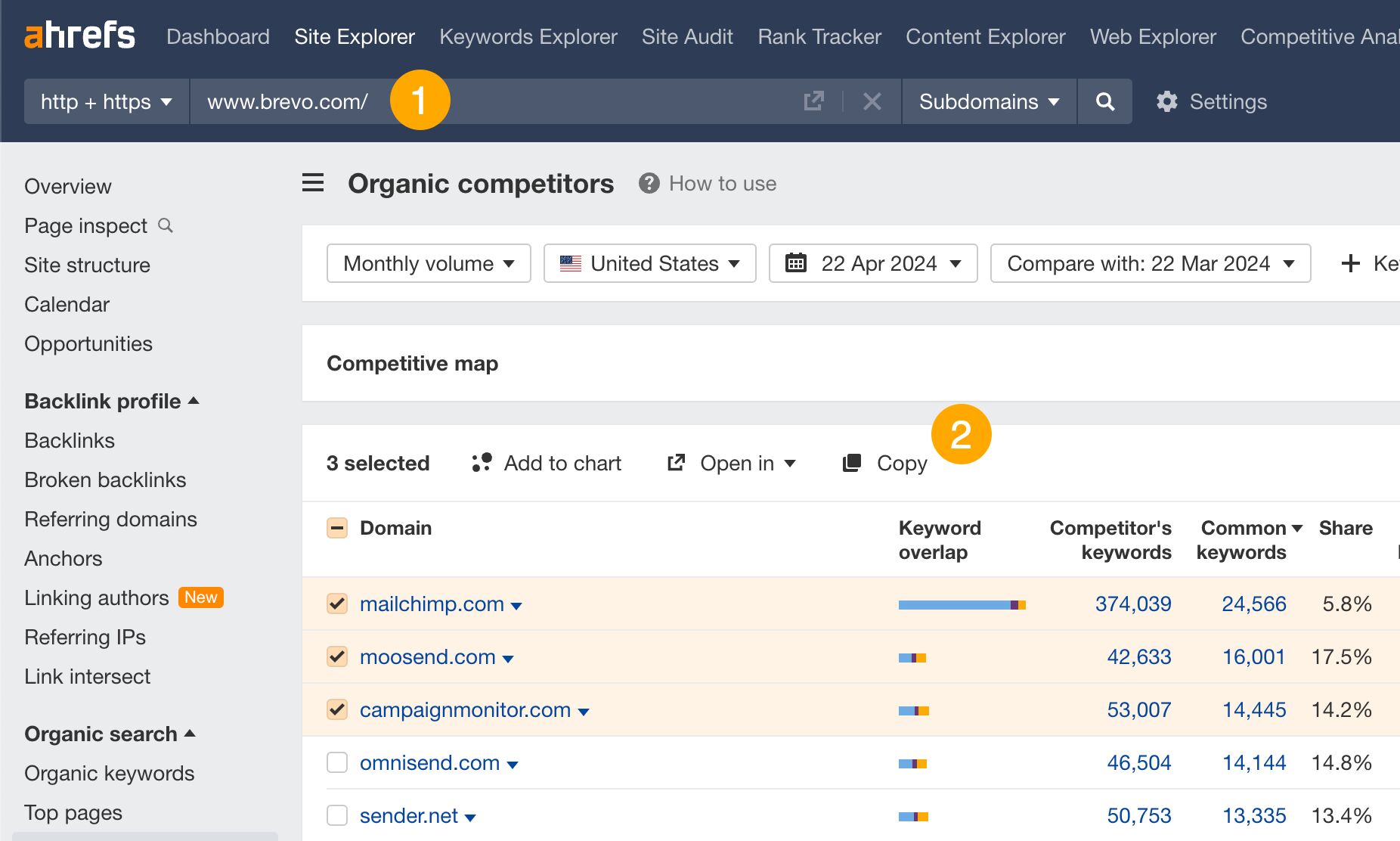

First, let’s find your competitors.

- Enter your domain in Ahrefs’ Site Explorer and go to the Organic competitors report.

- Select the most relevant competitors and click on Copy (this copies URLs — we’ll use them in another tool).

Next, we’ll see which keywords you’re missing.

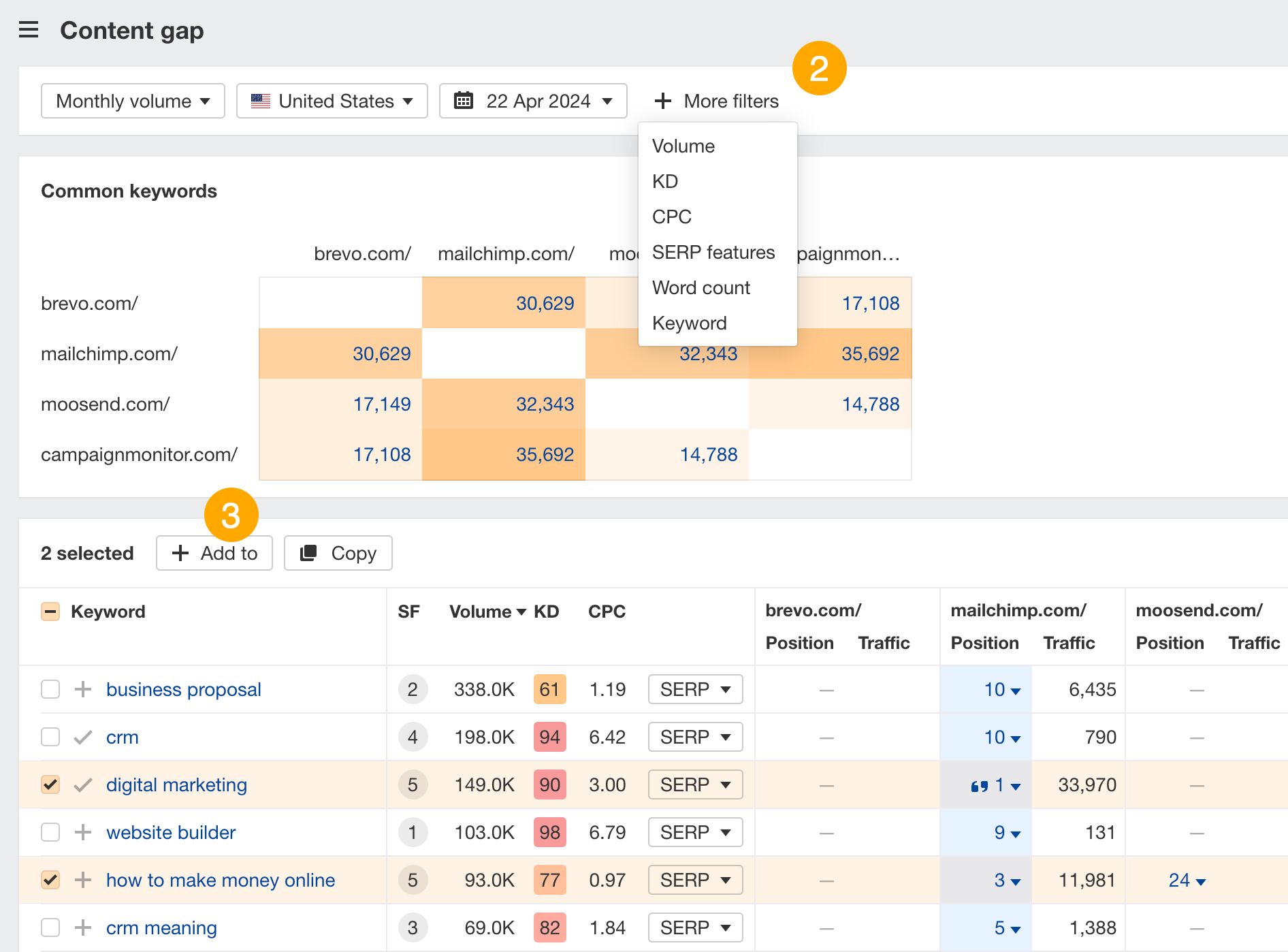

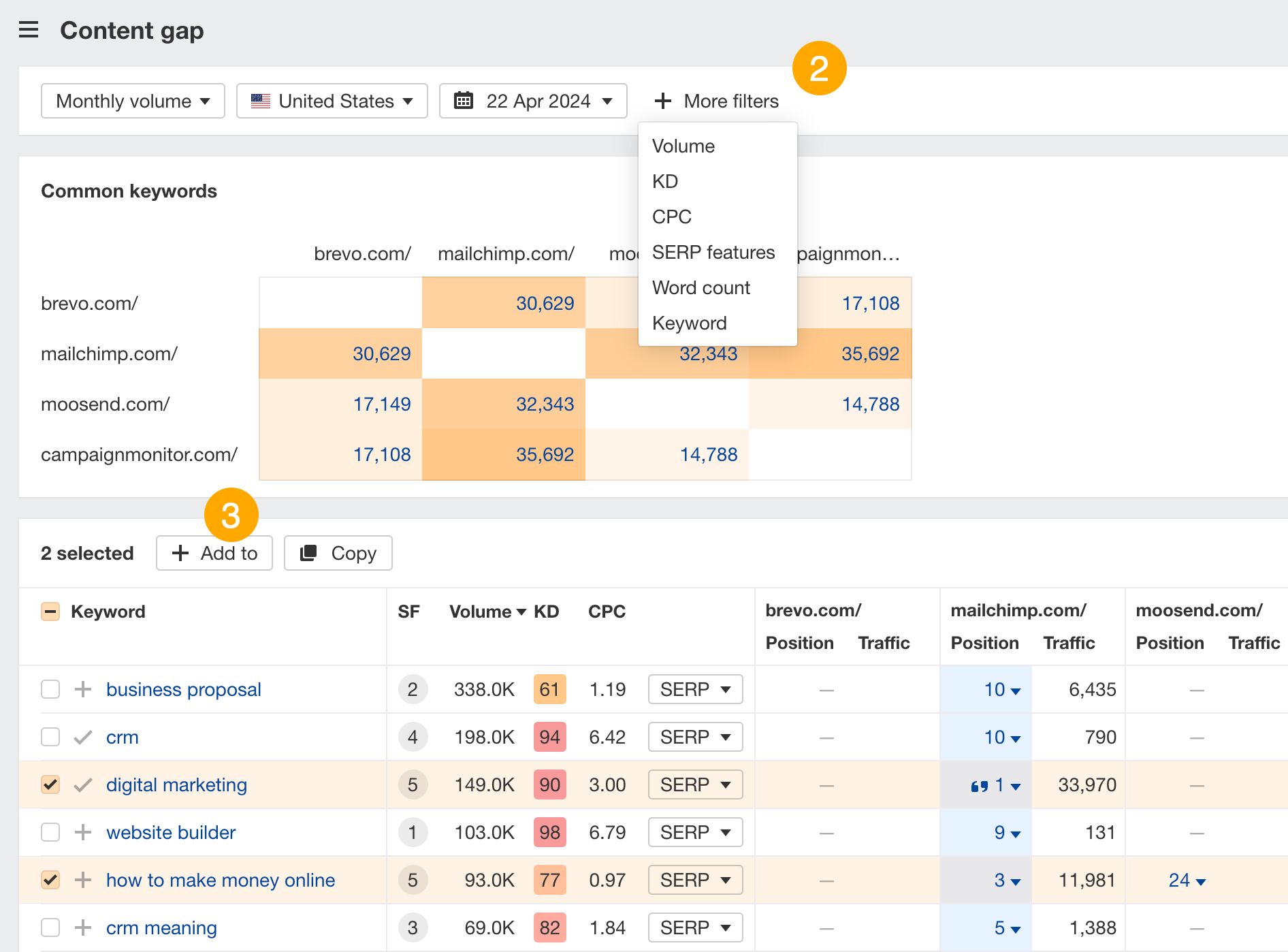

- Go to Ahrefs’ Competitive Analysis tool, paste the previously copied URLs, enter your domain on top and hit Show keyword opportunities.

- In the Content gap report, use filters to refine the report.

- Select keywords and add them to your list.
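Under the hood, a content gap is a set difference: keywords your competitors rank for minus the keywords you rank for. This sketch adds a hypothetical `minCompetitors` threshold so only keywords shared by several competitors surface. The domains and keywords are made up.

```typescript
function contentGap(
  yourKeywords: string[],
  competitors: Record<string, string[]>,
  minCompetitors: number
): string[] {
  // Prototype-free objects so a keyword such as "constructor" can't collide with Object methods.
  const counts: Record<string, number> = Object.create(null);
  const firstSeenOrder: string[] = [];
  for (const domain of Object.keys(competitors)) {
    const seenForDomain: Record<string, boolean> = Object.create(null);
    for (const kw of competitors[domain]) {
      if (seenForDomain[kw]) continue; // count each competitor at most once per keyword
      seenForDomain[kw] = true;
      if (counts[kw] === undefined) {
        counts[kw] = 0;
        firstSeenOrder.push(kw);
      }
      counts[kw] += 1;
    }
  }
  // Keep keywords enough competitors rank for that you don't rank for yet.
  return firstSeenOrder.filter(
    (kw) => counts[kw] >= minCompetitors && yourKeywords.indexOf(kw) === -1
  );
}

const gap = contentGap(
  ["seo tips", "keyword research"],
  {
    "a.example": ["seo tips", "link building", "anchor text"],
    "b.example": ["link building", "keyword research", "local seo"],
    "c.example": ["link building", "anchor text"],
  },
  2
);
console.log(gap); // "link building" and "anchor text"
```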

Pro tip

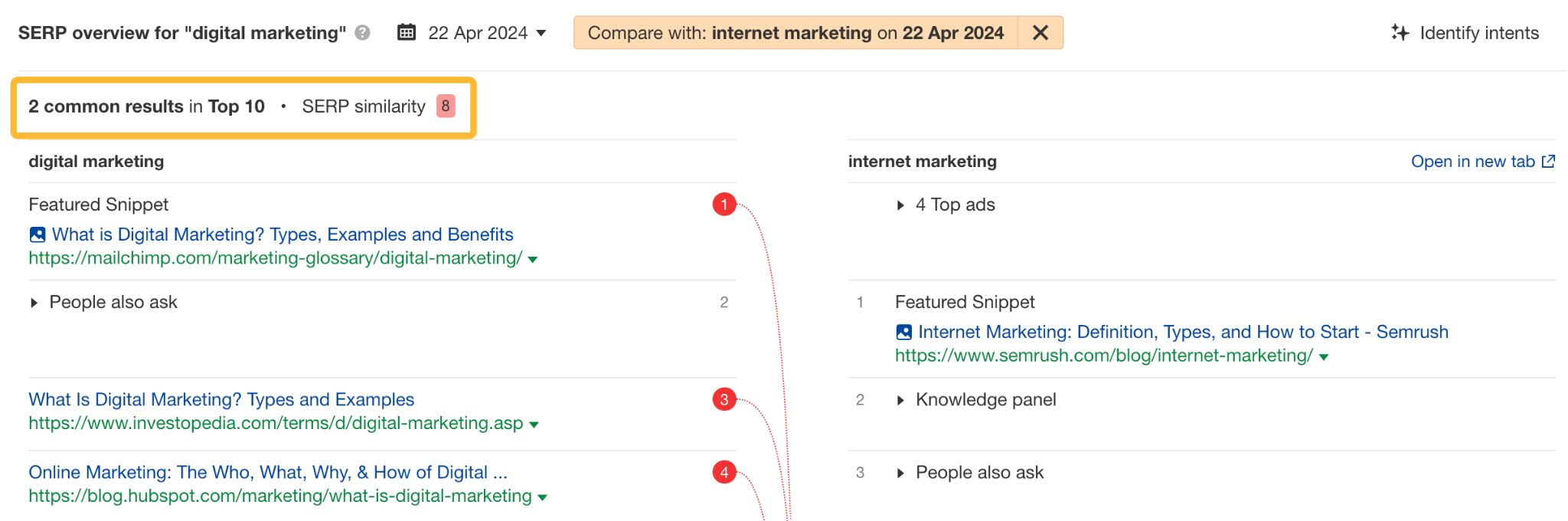

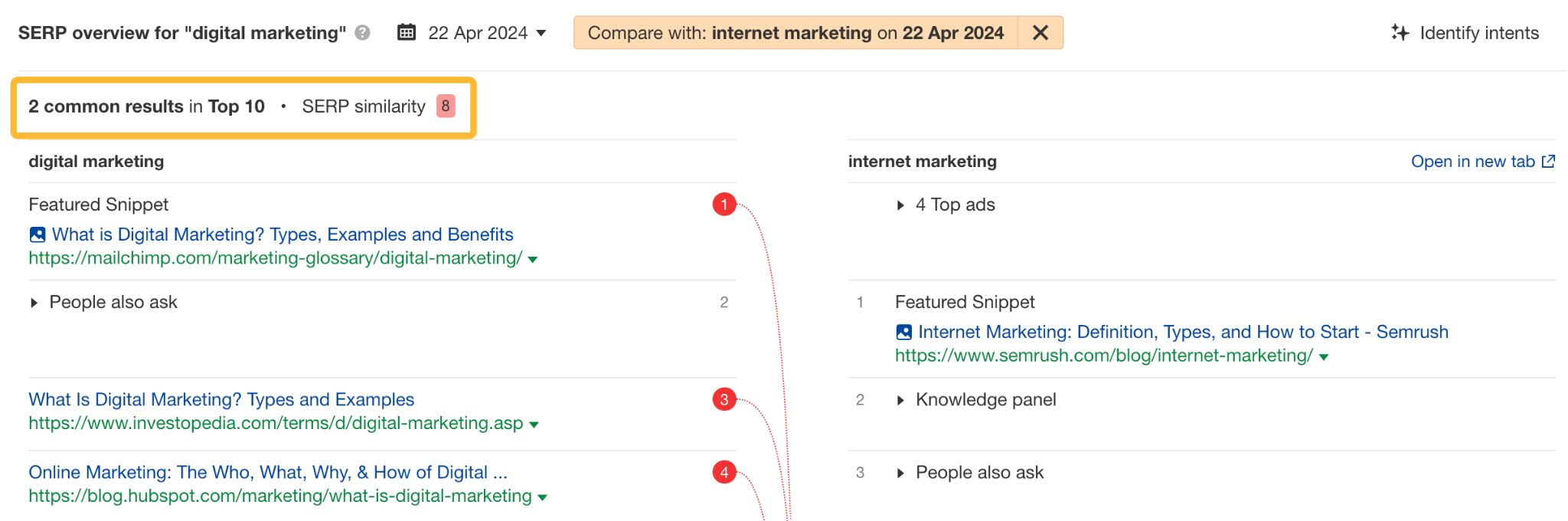

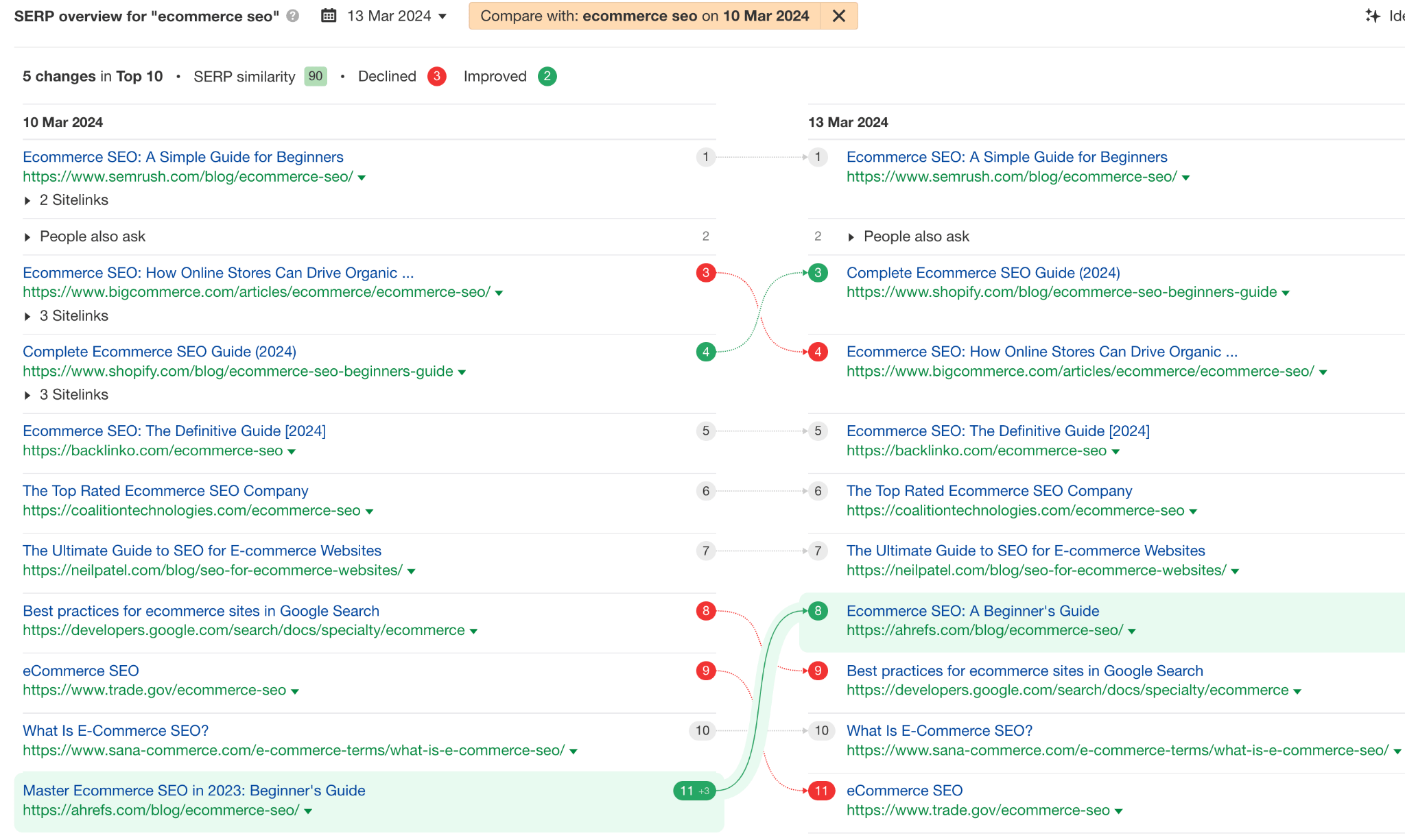

If you stumble across two similar keywords, there’s an easy way to determine whether they belong on the same page.

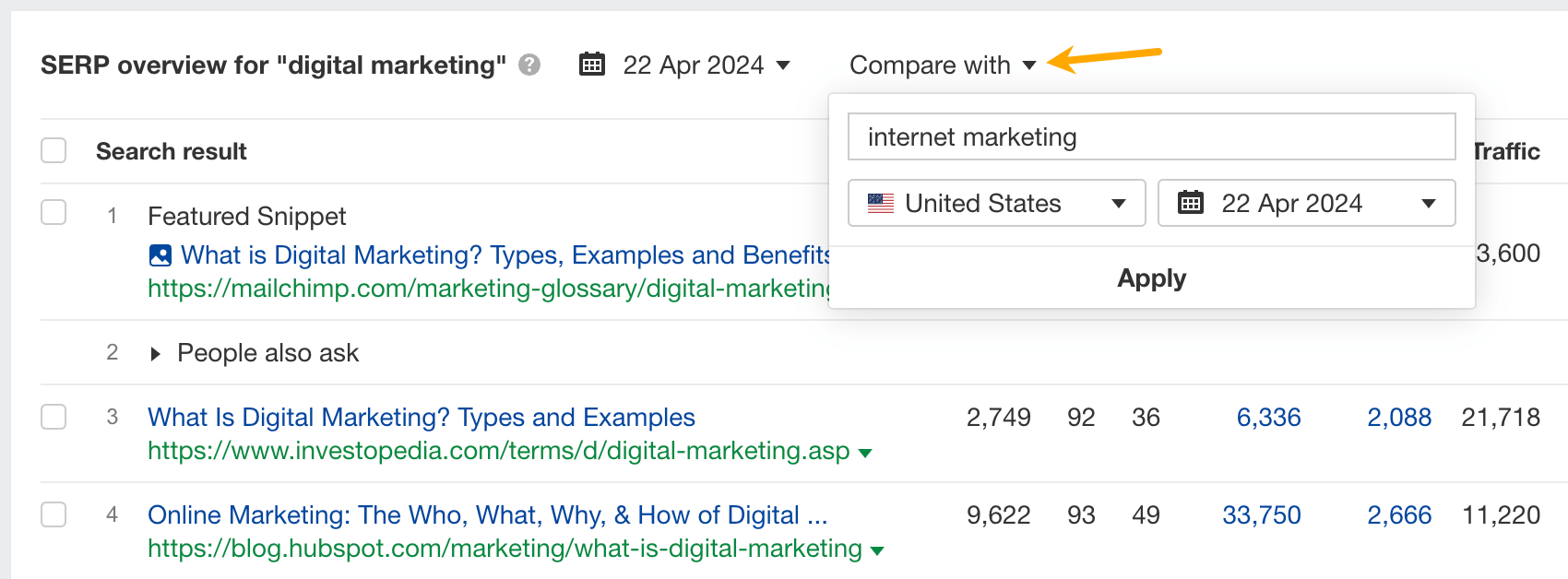

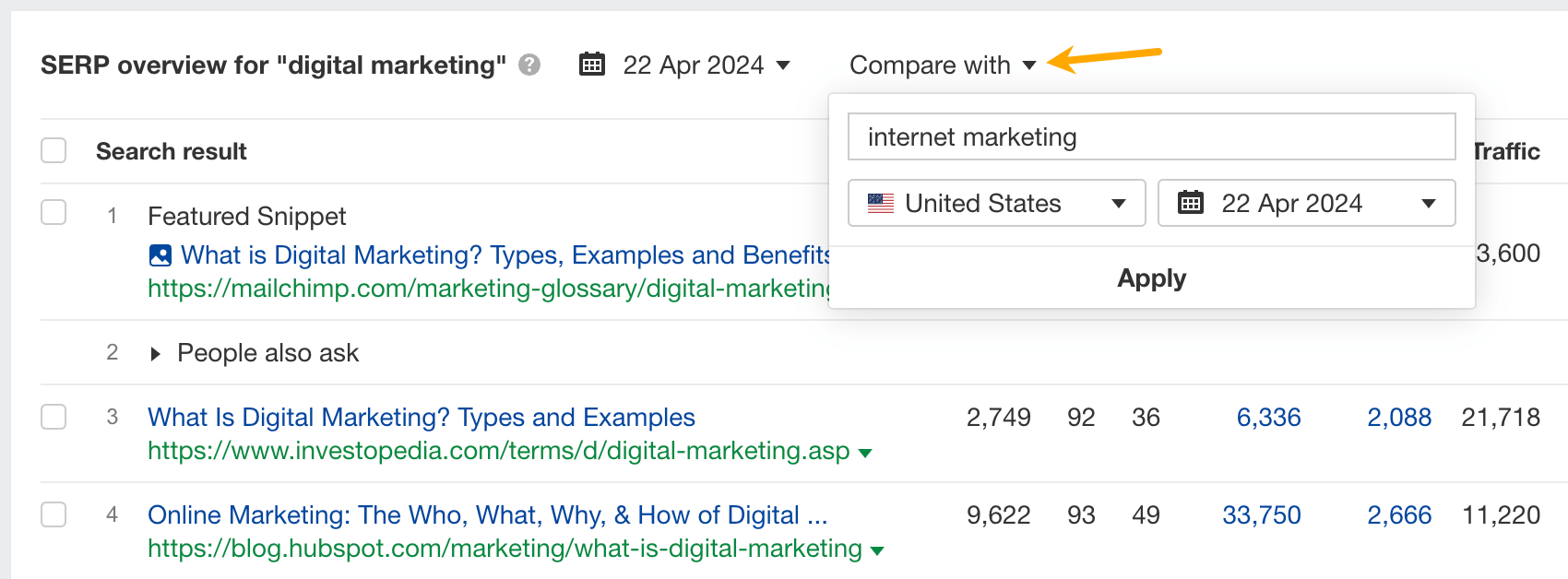

- Enter the keyword in Keywords Explorer.

- Scroll to SERP overview, click Compare with, and enter the keyword to compare with.

- Fewer common results and low SERP (Search Engine Result Page) similarity mean separate pages should target the two keywords.
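The idea behind this comparison can be approximated by counting the URLs two results pages share. This is not how Ahrefs computes SERP similarity. The `minShared` threshold is a hypothetical rule of thumb, and the lists use five made-up results each for brevity, where a real check would use the top 10.

```typescript
// Counts URLs that appear in both result lists (each URL counted once).
function commonResults(serpA: string[], serpB: string[]): number {
  return serpA.filter((url, i) => serpA.indexOf(url) === i && serpB.indexOf(url) !== -1).length;
}

// Hypothetical rule: enough shared results suggests one page can target both keywords.
function belongOnSamePage(serpA: string[], serpB: string[], minShared: number): boolean {
  return commonResults(serpA, serpB) >= minShared;
}

const kwResearch = ["a.example/kr", "b.example/guide", "c.example/tools", "d.example/x", "e.example/y"];
const howToKwResearch = ["b.example/guide", "a.example/kr", "f.example/z", "c.example/tools", "g.example/w"];
const kwTools = ["h.example/1", "i.example/2", "j.example/3", "k.example/4", "l.example/5"];

console.log(belongOnSamePage(kwResearch, howToKwResearch, 3)); // true: 3 shared results
console.log(belongOnSamePage(kwResearch, kwTools, 3));         // false: no shared results
```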

Once you have your target keyword, you can include it in relevant places in your on-page content, including:

- Key elements of search intent (content type, format, and angle).

- URL slug.

- Title and H1.

- Meta description.

- Subheadings (H2 – H6).

- Main content.

- Anchor text for links.
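For a quick audit of the checklist above, a few substring tests are enough. This is a hypothetical helper, and its exact-phrase matching is deliberately crude: Google understands close variations, so a `false` flags a place to look rather than a definite problem. The sample page mirrors this article’s title and slug, and its meta description and subheadings are made up.

```typescript
interface PageElements {
  slug: string;
  title: string;
  h1: string;
  metaDescription: string;
  subheadings: string[];
}

// Reports which on-page elements contain the exact target keyword.
function keywordPlacement(page: PageElements, keyword: string): Record<string, boolean> {
  const kw = keyword.toLowerCase();
  const has = (text: string) => text.toLowerCase().indexOf(kw) !== -1;
  return {
    slug: page.slug.toLowerCase().indexOf(kw.replace(/\s+/g, "-")) !== -1,
    title: has(page.title),
    h1: has(page.h1),
    titleMatchesH1: page.title.trim() === page.h1.trim(),
    metaDescription: has(page.metaDescription),
    subheadings: page.subheadings.some(has),
  };
}

const report = keywordPlacement(
  {
    slug: "how-to-use-keywords-seo",
    title: "How to Use Keywords for SEO: A-Z Guide For Beginners",
    h1: "How to Use Keywords for SEO: A-Z Guide For Beginners",
    metaDescription: "Learn how to use keywords to rank higher in search.",
    subheadings: ["Pick relevant seed keywords", "Use the target keyword in the URL slug"],
  },
  "use keywords"
);
console.log(report); // everything true except subheadings
```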

And, just so we’re on the same page, the target keyword is the topic of the content and the main keyword you’ll be optimizing for and tracking later on.

Use the target keyword to determine the search intent

Search intent is the reason behind the search. Understanding it tells you what users are looking for and what you need to deliver in your content.

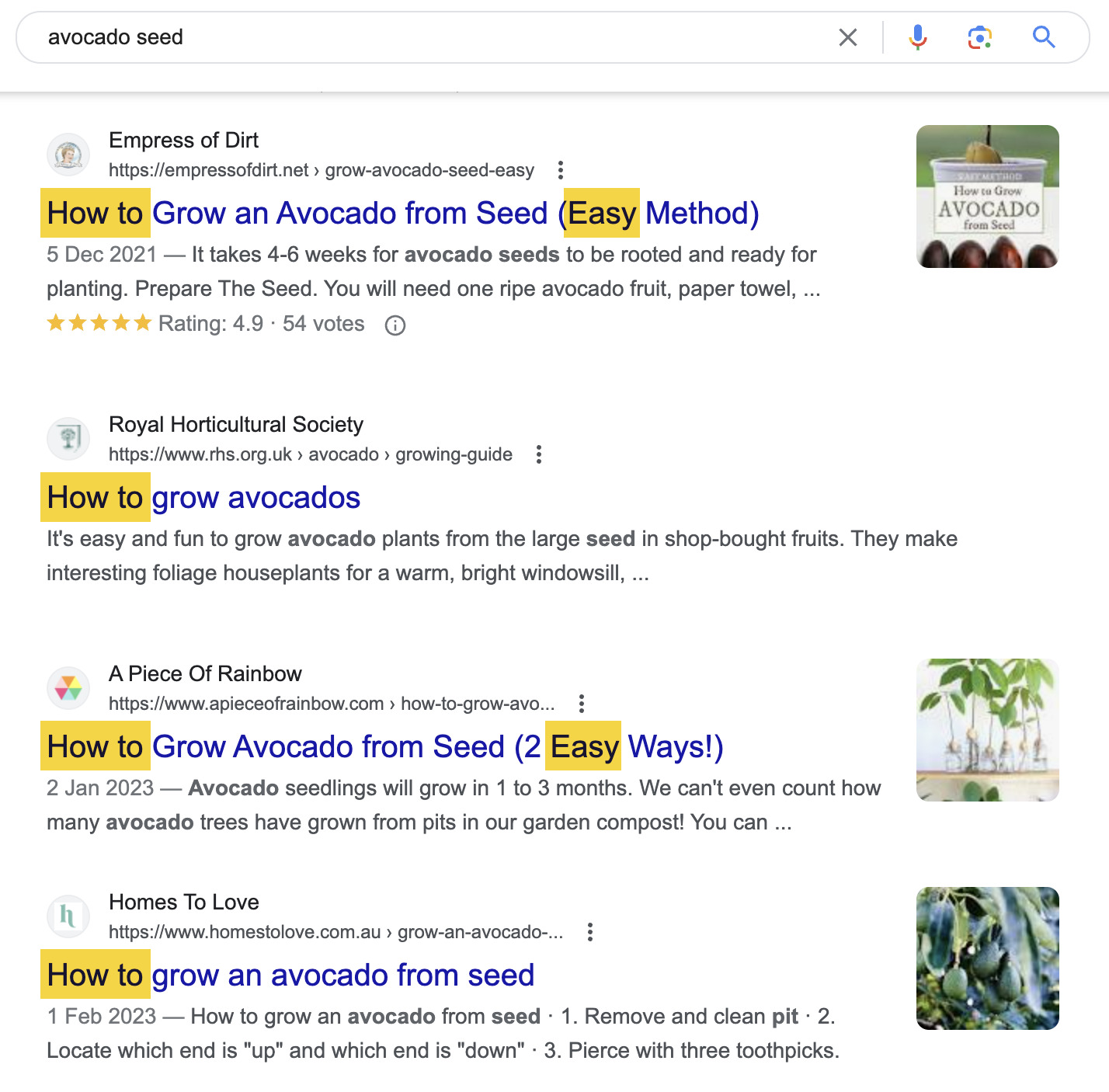

To identify search intent, look at the top-ranking results for your target keyword on Google and identify the three Cs of search intent:

- Content type – What is the dominating type of content? Is it a blog post, product page, video, or something else? If you’ve done that during the keyword research phase (highly recommended), you have only two elements to go.

- Content format – Some common formats include how-to guides, list posts, reviews, comparisons, etc.

- Content angle – The unique selling point of the top-ranking pages, e.g., “best,” “cheapest,” “for beginners,” etc. It provides insight into what searchers value in a particular search.

For example, most top-ranking pages for “avocado seed” are blog posts serving as how-to guides for planting the seed. The use of easy and simple angles indicates that searchers are beginners looking for straightforward advice.

Use the target keyword in the URL slug

A URL slug is the part of the URL that identifies a specific page on a website in a form readable by both users and search engines.

If you look at the URL of the page you’re on, that will be the last part, “how-to-use-keywords-seo”.

https://ahrefs.com/blog/how-to-use-keywords-seo

Google says to use words that are relevant to your content inside page URLs (source). Usually, the easiest way to do that is to set your target keyword in the slug part of the URL.
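Turning a keyword into a slug is mostly lowercasing and replacing spaces with hyphens. The function below is a minimal sketch that assumes ASCII-only keywords, so accented letters would be dropped rather than transliterated. Shortening a slug, as this article’s own slug does by dropping “for,” remains an editorial choice.

```typescript
// Minimal slug builder, assuming ASCII-only input.
function slugify(keyword: string): string {
  return keyword
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9\s-]/g, "") // drop punctuation such as ":" and "'"
    .replace(/[\s-]+/g, "-")      // collapse runs of spaces/hyphens into one hyphen
    .replace(/^-+|-+$/g, "");     // trim leading/trailing hyphens
}

console.log(slugify("How to Use Keywords: SEO")); // how-to-use-keywords-seo
console.log(slugify("  SEO: The Complete  Beginner's Guide ")); // seo-the-complete-beginners-guide
```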

Use the target keyword in the title and match it with the H1 tag

A title tag is a bit of HTML code used to specify the title of a webpage.

<title>How to Use Keywords for SEO: A-Z Guide For Beginners</title>

The H1 tag is an HTML heading that’s most commonly used to mark up a web page title.

<h1>How to Use Keywords for SEO: A-Z Guide For Beginners</h1>

Both are very important to Google and searchers. Since they both indicate what the page is about, you can just match them, like I did in this article.

Titles help Google understand the context of a page. What’s more, even a slight improvement to your title can improve your rankings.

Google advises focusing on creating good titles, which should be “unique to the page, clear and concise, and accurately describe the contents of the page” (source). It’s hard to think of a better way to accurately describe the contents other than using the target keyword.

If it makes sense for the title, aim for an exact match of the keyword. But if you need to insert a preposition or break the phrase, this won’t make Google think your page is less relevant. Google understands close variations of the keyword really well, so there’s no need to stuff in similar keywords, misspellings, etc.

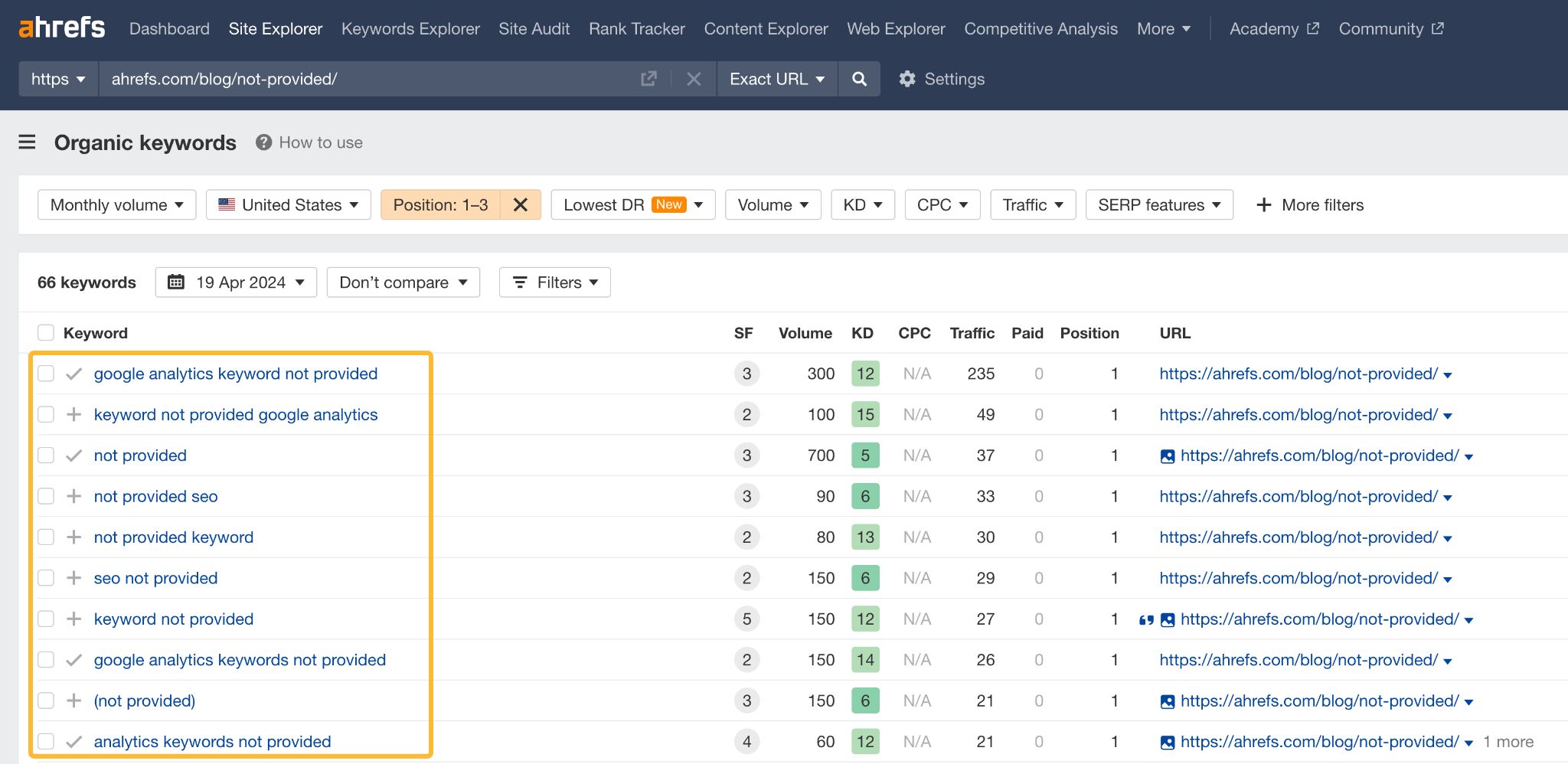

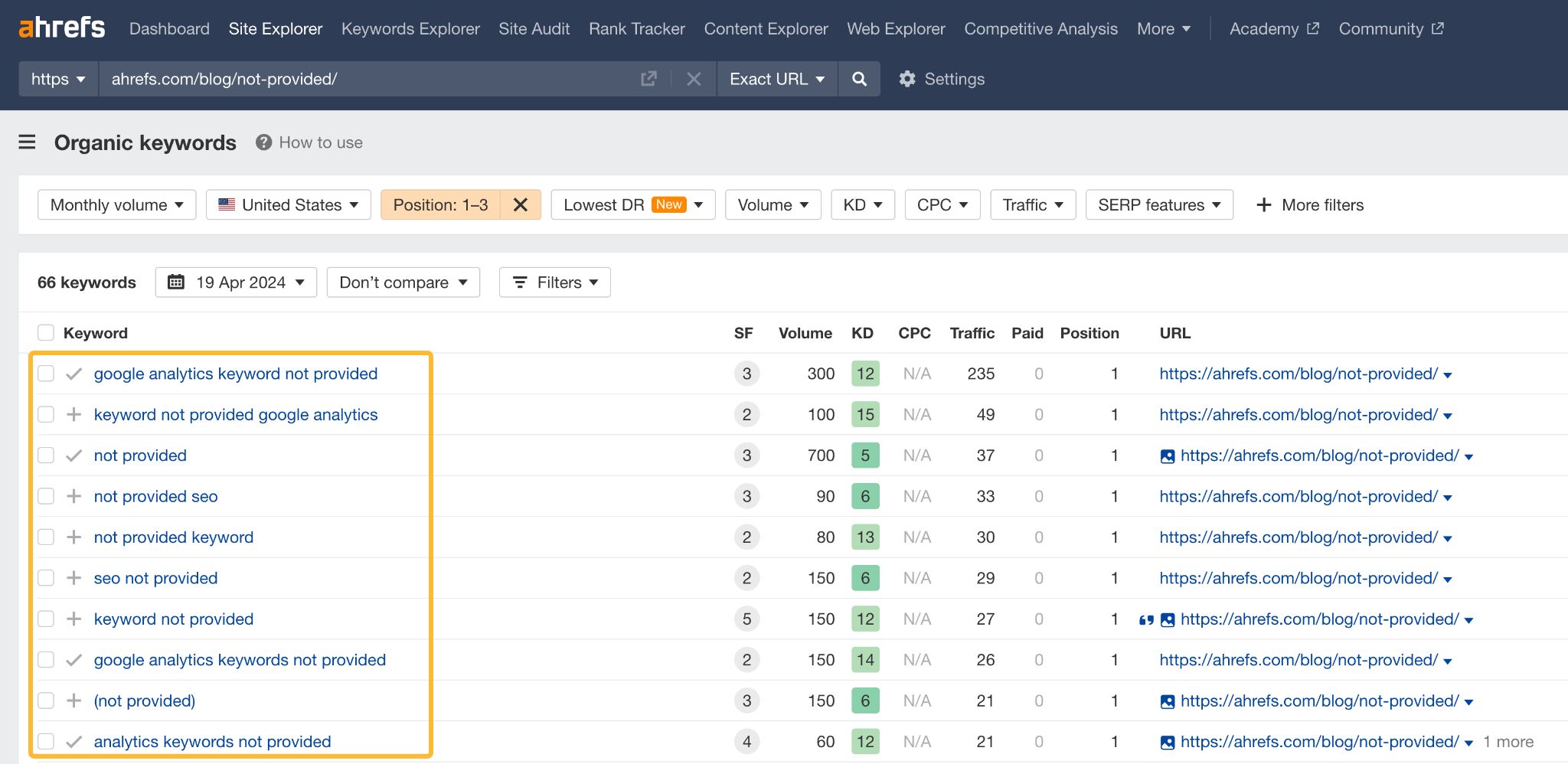

To illustrate, my old article on how to see keywords that Google Analytics won’t show ranks #1 for many variations of the phrase in the title.

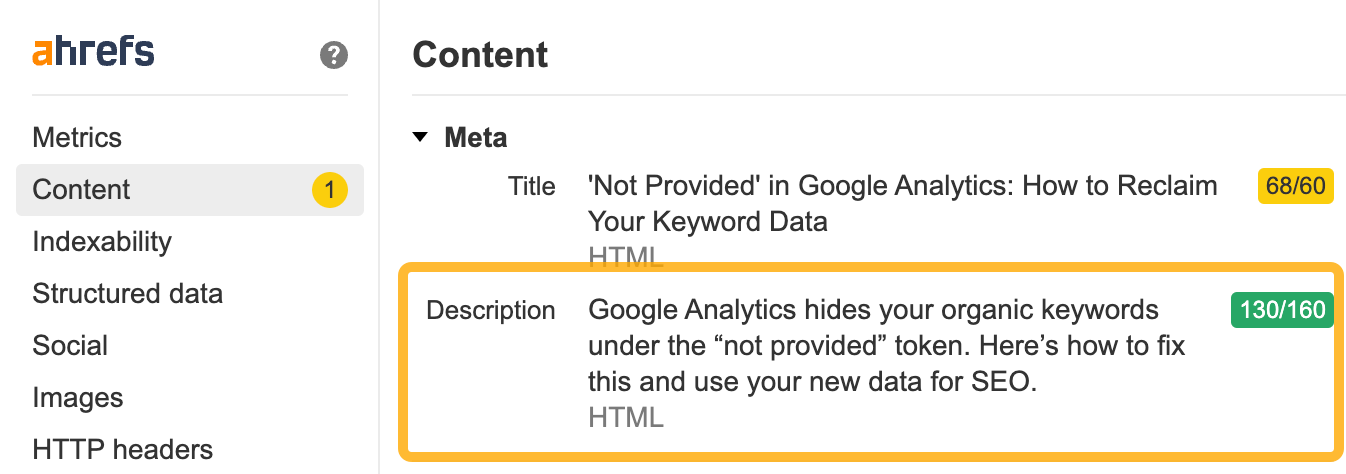

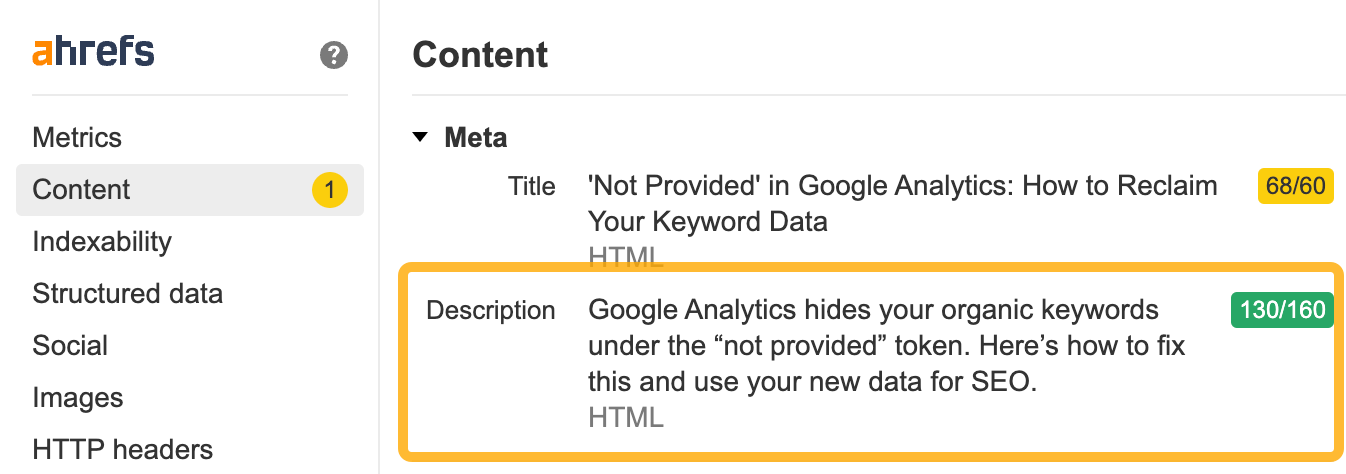

Use the keyword in meta description

Don’t write meta descriptions solely for Google, though. Google rewrites them more than half of the time (study) and doesn’t use them for ranking purposes. Instead, focus on crafting meta descriptions for searchers.

These descriptions appear in the SERPs, where users can read them. If your description is relevant and compelling, it can increase the likelihood of users clicking on your link.

Including your target keyword in the meta description is usually natural. For instance, consider the description of the article mentioned earlier. Incorporating the keyword into the sentences simply provides a comprehensive way to describe the issue.

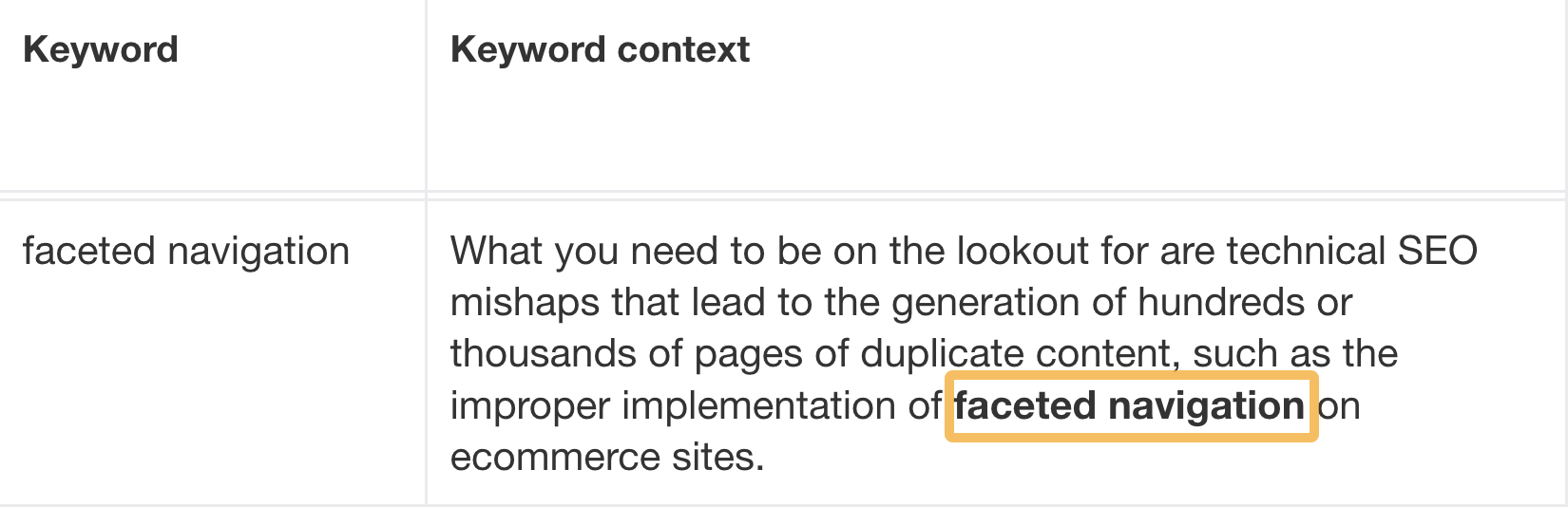

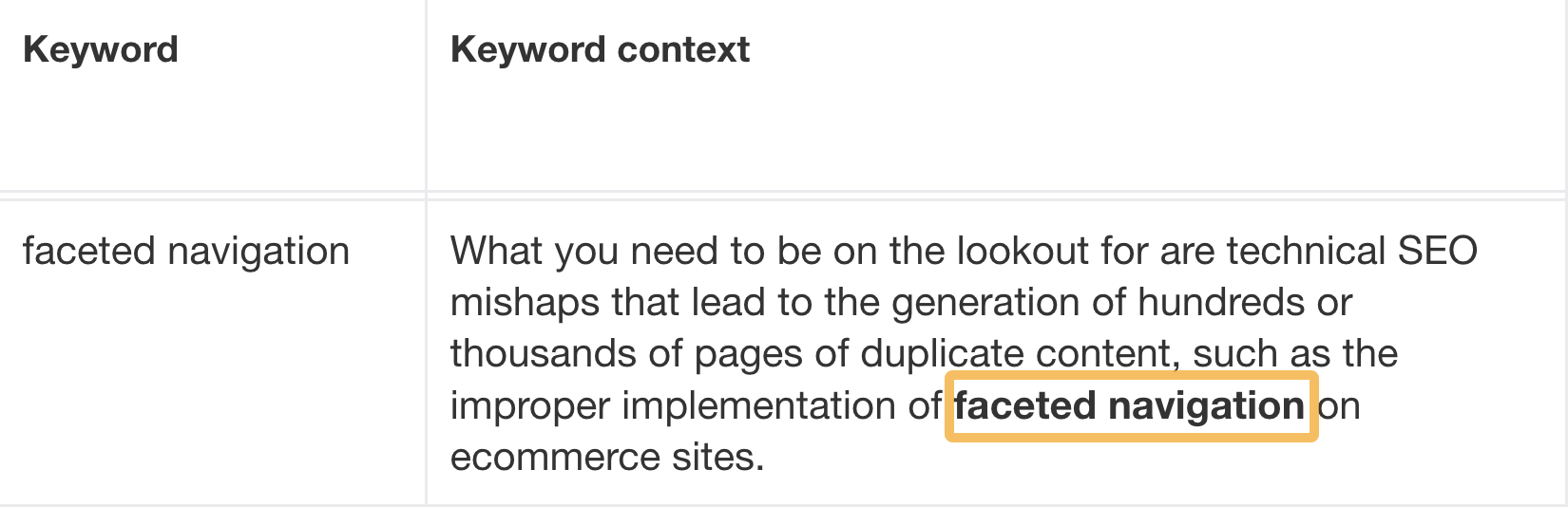

Use the target keyword to find secondary keywords

Secondary keywords are any keywords closely related to the primary keyword that you’re targeting with your page.

You can find them through your primary keyword and use them as subheadings (H2 to H6 tags) and talking points throughout the content. Here’s how.

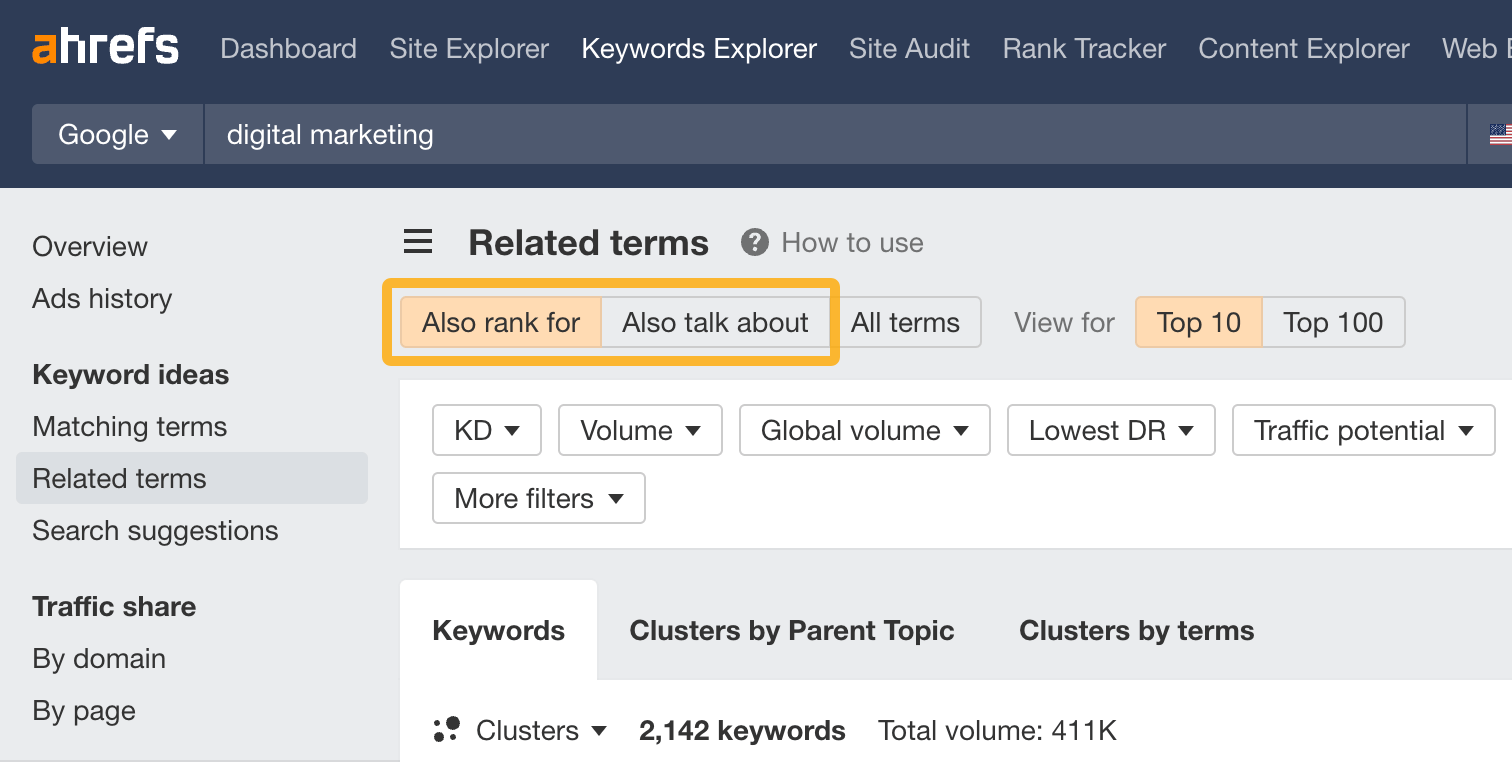

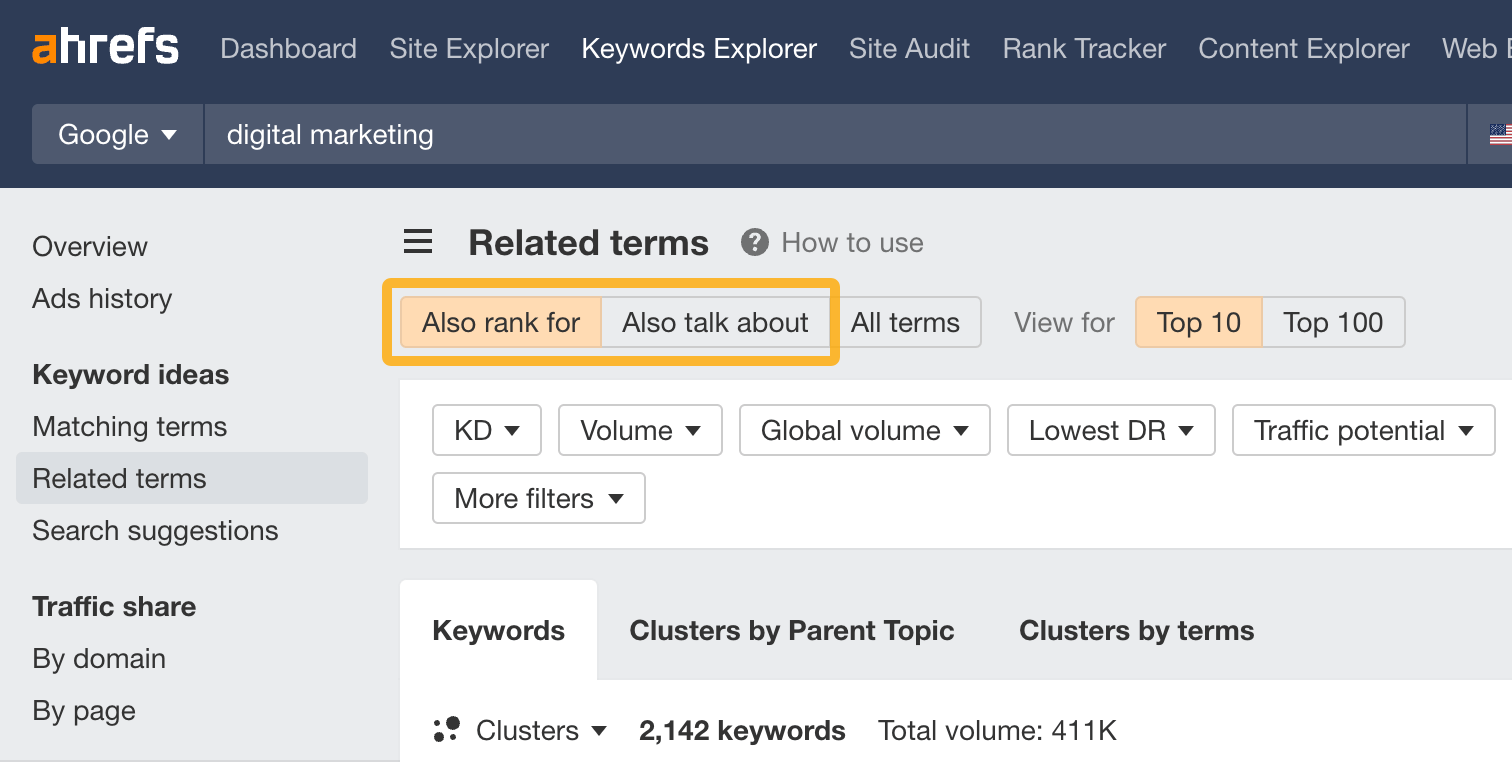

Go to Keywords Explorer and plug in your target keyword. From there, head to the Related terms report and toggle between:

- Also rank for: keywords that the top 10 ranking pages also rank for.

- Also talk about: keywords frequently mentioned by top-ranking articles.

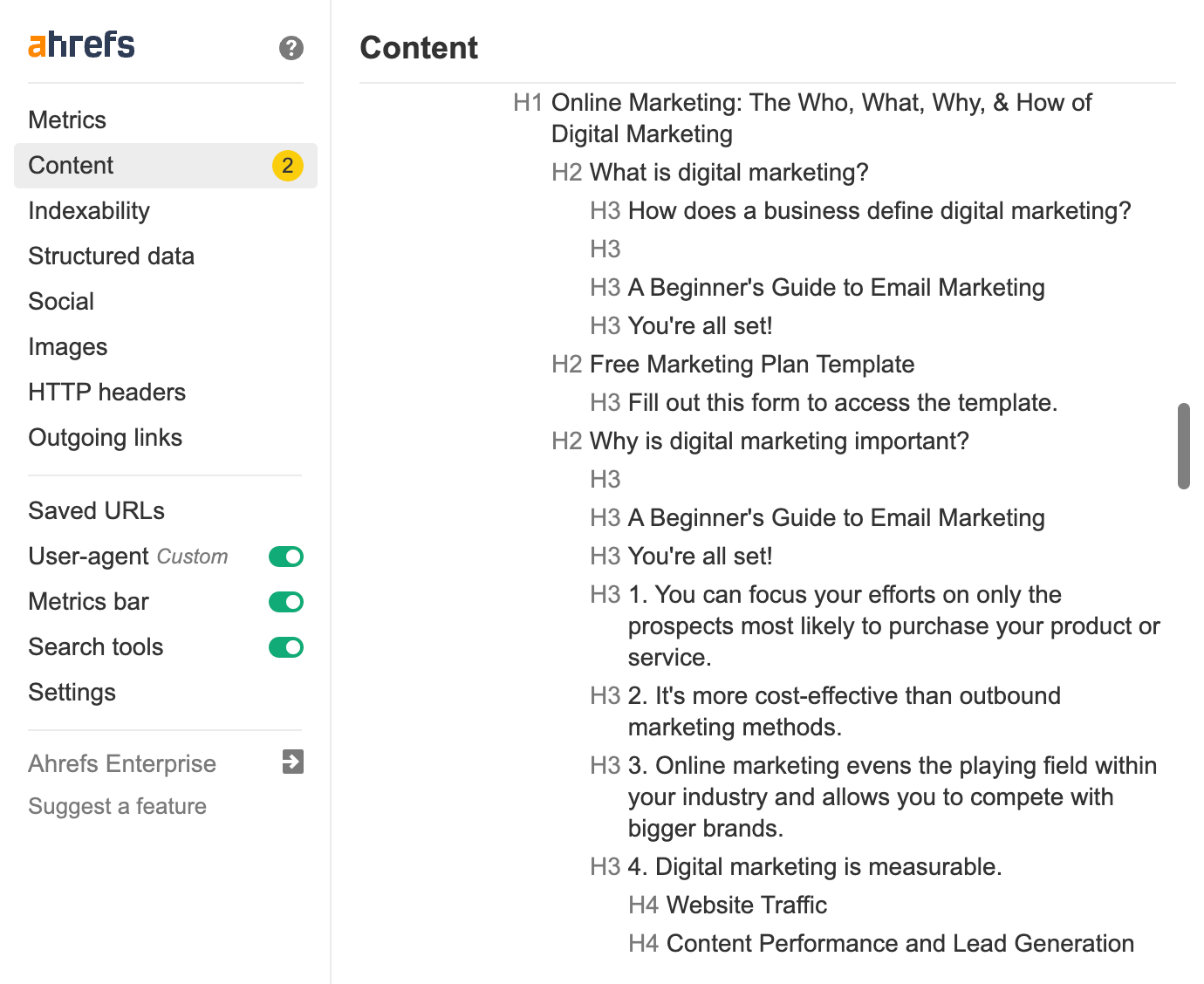

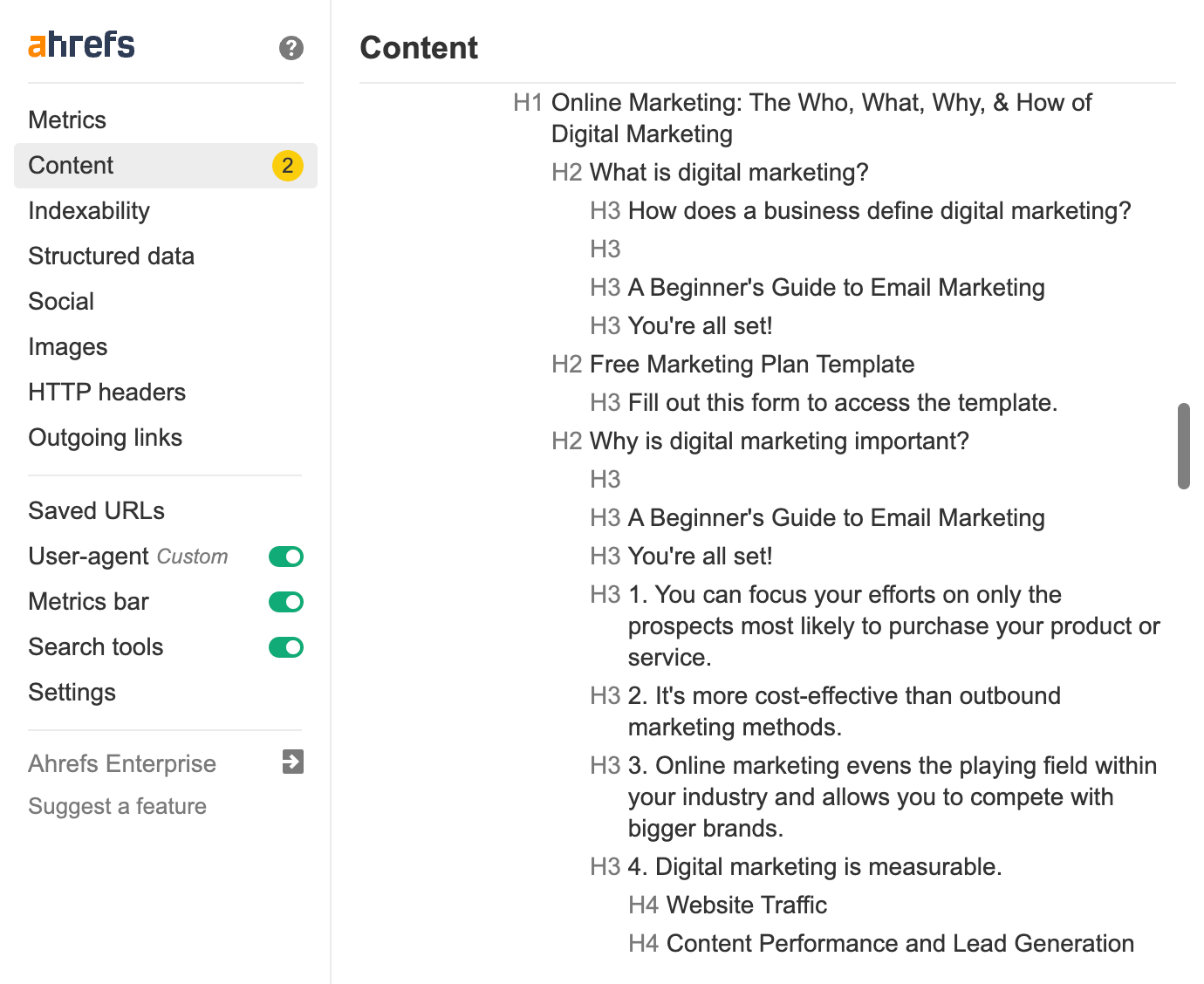

Now, to know how to use these keywords in your text, just manually look at the top ranking pages and see how and where they cover the keywords.

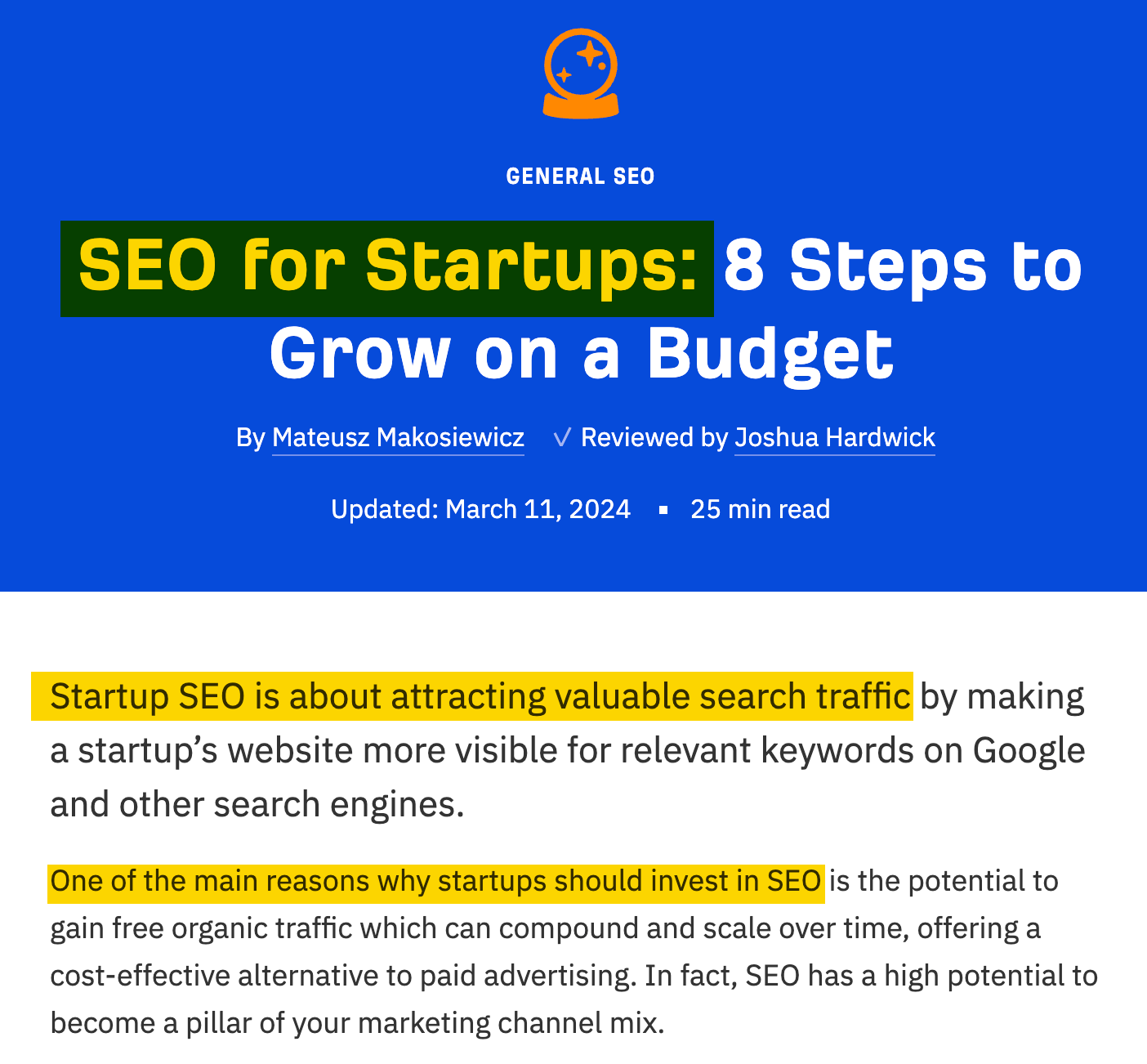

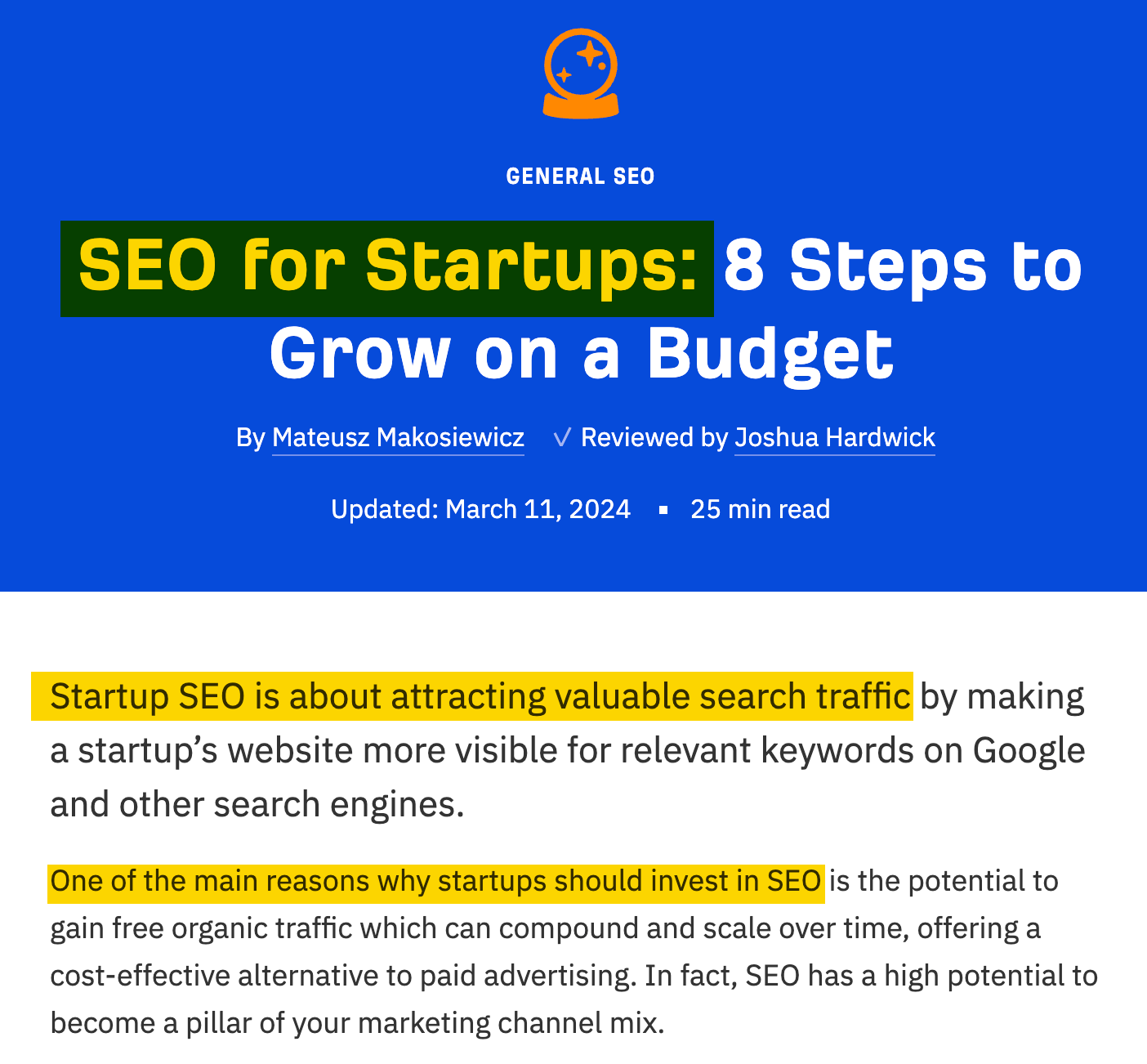

For example, looking at one of the top articles for “digital marketing”, we can see right away that some of the most important aspects are the definition, a template and importance. You can use the free Ahrefs SEO Toolbar to break down the structure of any page instantly.

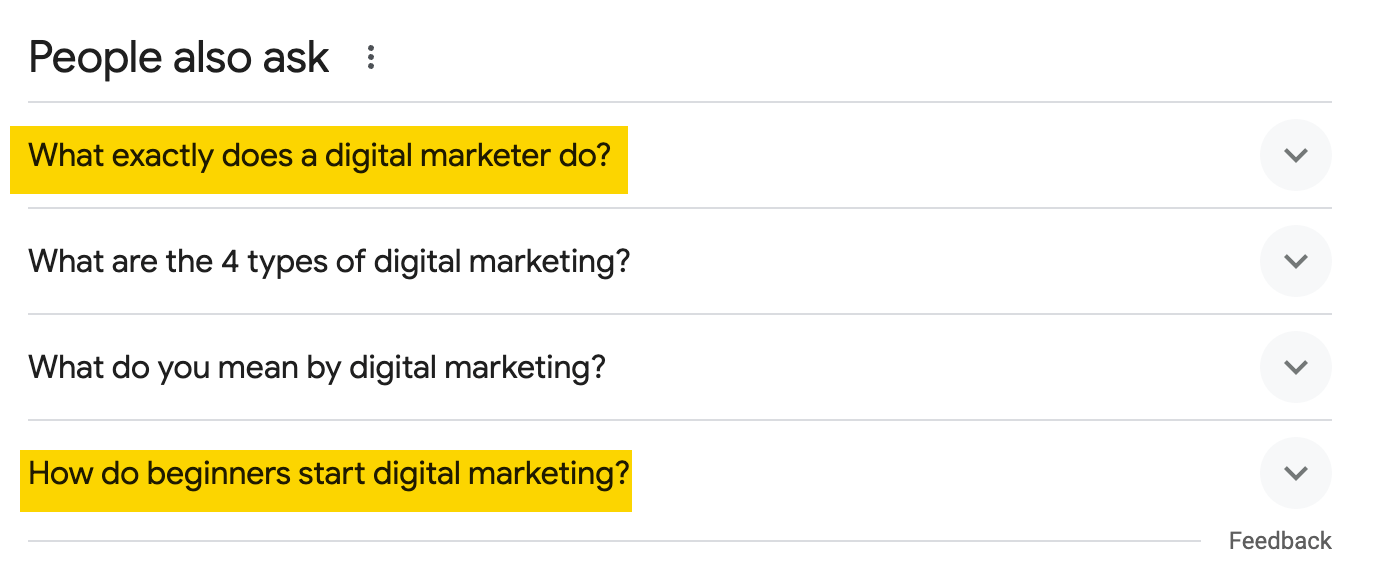

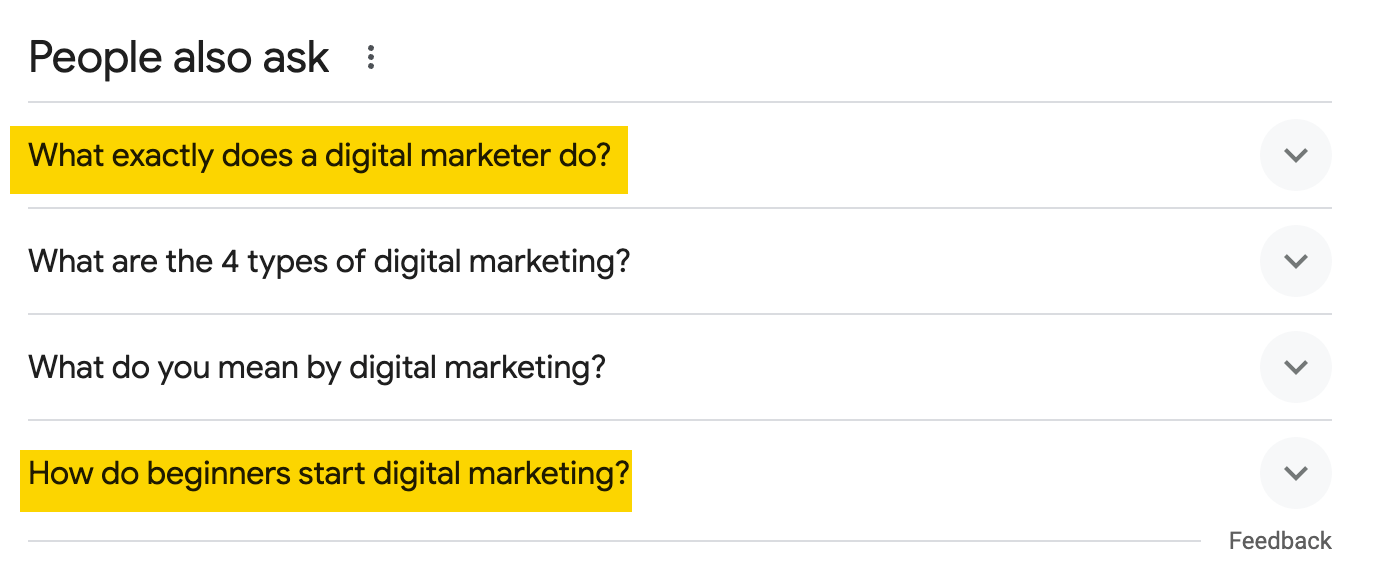

Another place you can look for inspiration is the People Also Ask Box in the SERPs. Use it to find words and subtopics that may be worth adding to the article.

Pro tip

Optimizing an existing article?

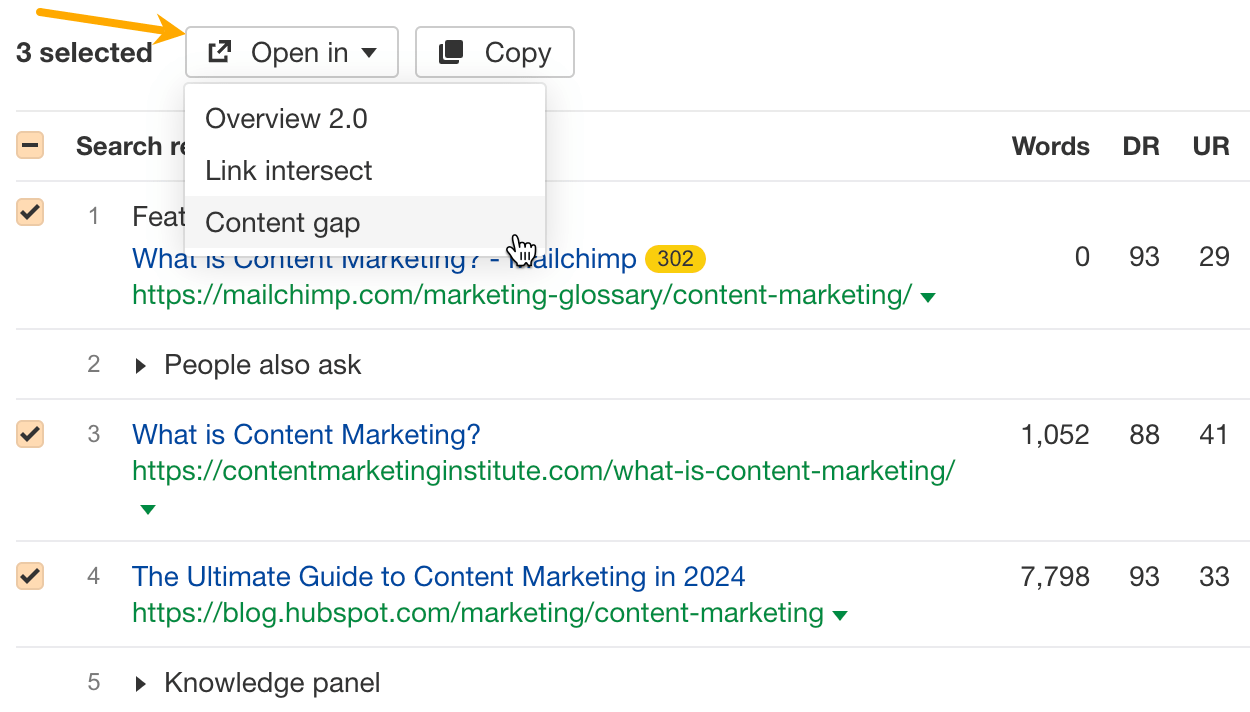

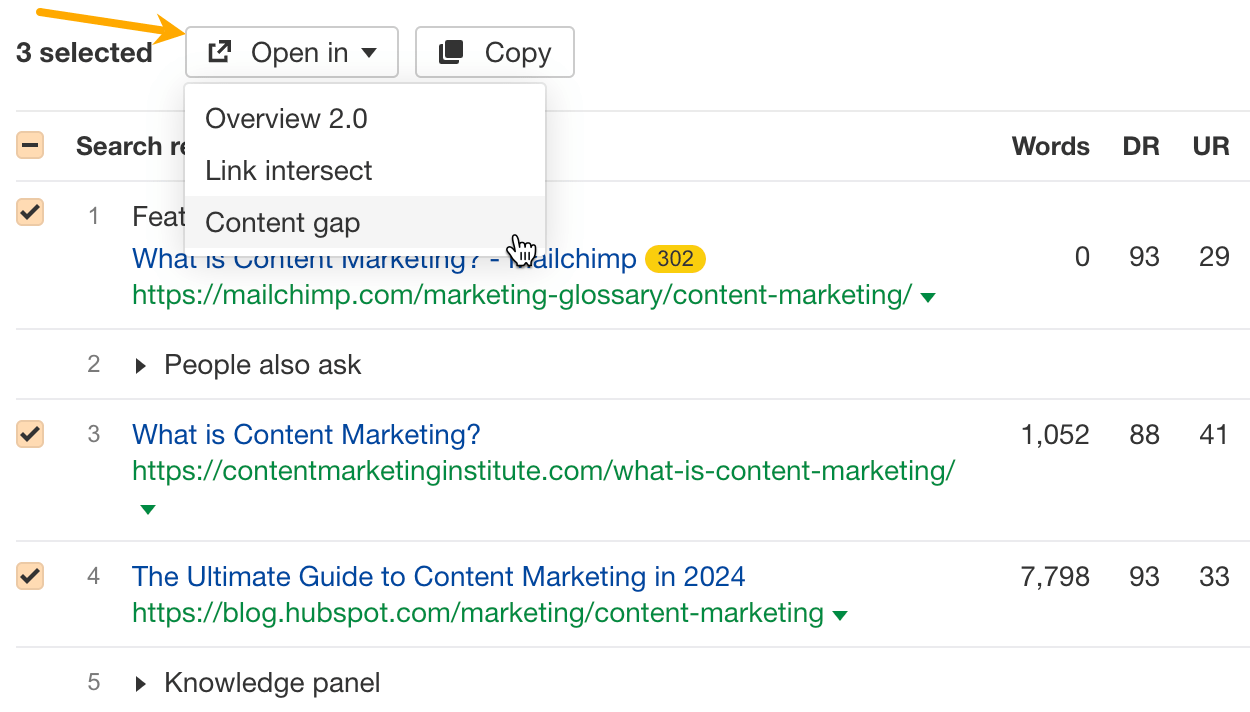

Use the Content Gap tool to find subtopics you may be missing. The tool shows keywords that your competitors’ pages rank for, and your page doesn’t.

- Go to Keywords Explorer and enter your target keyword.

- Scroll down to the SERP overview, select a few top pages, and click Open in Content Gap.

- In Content Gap, click on Targets and add the page you’re optimizing in the last field.

Use primary and secondary keywords in the main content

To rank high on search engines, it’s important to include your keywords in your text. Even though Google is good at understanding similar words and variations, it still helps to use the specific keywords people might search for. Google explains that in their short guide to how search works:

The most basic signal that information is relevant is when content contains the same keywords as your search query. For example, with webpages, if those keywords appear on the page, or if they appear in the headings or body of the text, the information might be more relevant.

When writing, it’s important to incorporate keywords naturally. Start your content with the most relevant information that people are likely to search for. This ensures that key points are immediately visible to your readers and search engines.

If you have a secondary keyword that’s less critical but still relevant, consider giving it a dedicated section. This approach allows you to explore the topic in detail, rather than briefly mentioning it at the end of your content.

However, avoid overemphasizing the frequency of your keywords. Effective SEO involves more than just repeating keywords. If SEO were simply about keyword density, it would be straightforward, but such strategies don’t lead to long-term success and can make your content feel spammy.

For instance, if ‘content strategy’ is a central theme of your discussion and you mention it only once, Google might perceive your content as incomplete. On the other hand, stuffing your article with the term ‘content strategy’ more than necessary won’t outperform your competitors and could potentially lead to your site being flagged as spam.
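Counting how often a phrase appears is a quick sanity check for both extremes: a central phrase mentioned only once, or one repeated far more than the text needs. The sketch below counts whole-word phrase matches. It says nothing about what the right count is, and the sample sentence is made up.

```typescript
// Counts whole-word, case-insensitive occurrences of a phrase in a text.
function countPhrase(text: string, phrase: string): number {
  const words = text.toLowerCase().match(/[a-z0-9']+/g) || [];
  const target = phrase.toLowerCase().match(/[a-z0-9']+/g) || [];
  if (target.length === 0) return 0;
  let count = 0;
  for (let i = 0; i + target.length <= words.length; i++) {
    let matched = true;
    for (let j = 0; j < target.length; j++) {
      if (words[i + j] !== target[j]) {
        matched = false;
        break;
      }
    }
    if (matched) count++;
  }
  return count;
}

const sample = "Content strategy matters. A content strategy without goals is not a content strategy.";
console.log(countPhrase(sample, "content strategy")); // 3
```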

Use the target keyword in link anchor text and/or surrounding text

The anchor text or link text is the clickable text of an HTML hyperlink.

Google uses anchors to understand the page’s context. There even seems to be a consensus that anchor text is a ranking factor, although, according to our study, it is likely a weak one.

In situations like these, it’s just best to stick with Google’s advice:

Good anchor text is descriptive, reasonably concise, and relevant to the page that it’s on and to the page it links to. It provides context for the link, and sets the expectation for your readers. (…)

Remember to give context to your links: the words before and after links matter, so pay attention to the sentence as a whole.

So use the target keyword in the anchor text and/or the surrounding text, but keep it natural: add links only on pages related to the page you’re linking to, and use text that helps readers understand where and why you’re linking.

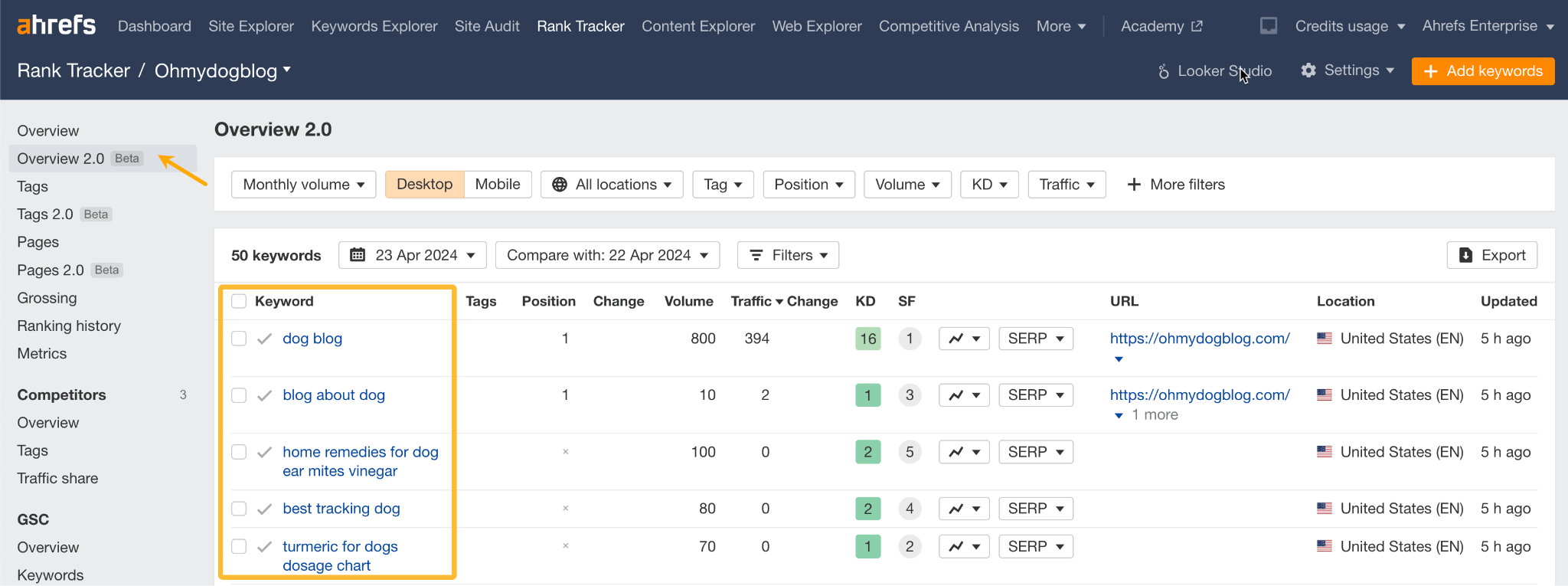

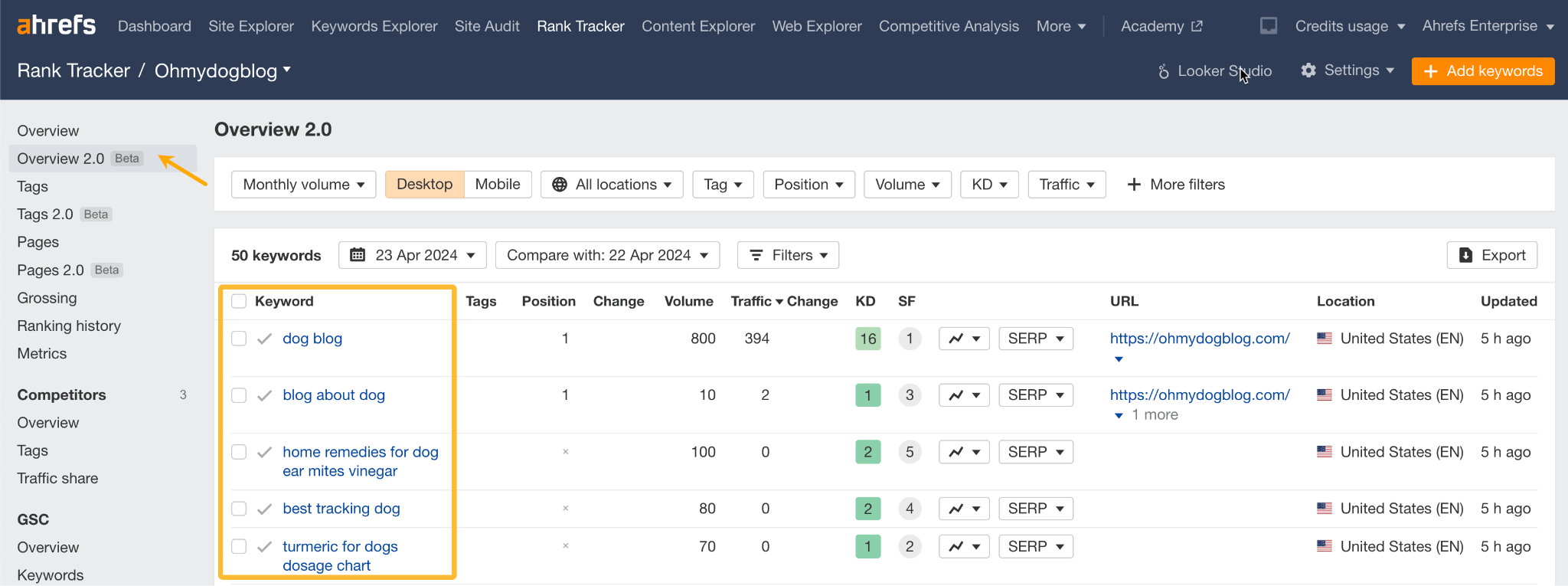

Rank tracking refers to monitoring the positions of a website’s pages in search engine results for specific keywords.

It’s pretty much an automated process; everything can be handled by a tool like Ahrefs’ Rank Tracker. No need to check rankings manually and note them down in a spreadsheet.

If you have a keyword list ready, all you need to do is add that list to Rank Tracker.

The keywords will appear in Rank Tracker’s Overview report.

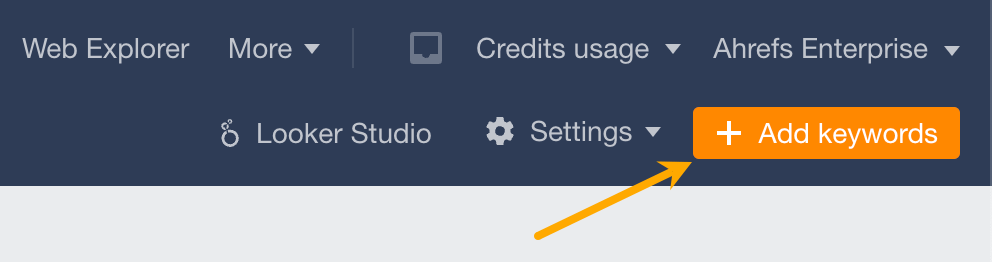

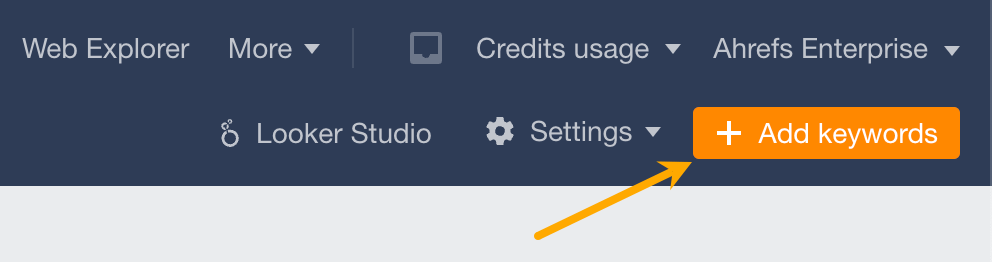

Another way to add keywords is to hit Add keywords in the top right corner (best for adding single keywords or importing a list from a document).

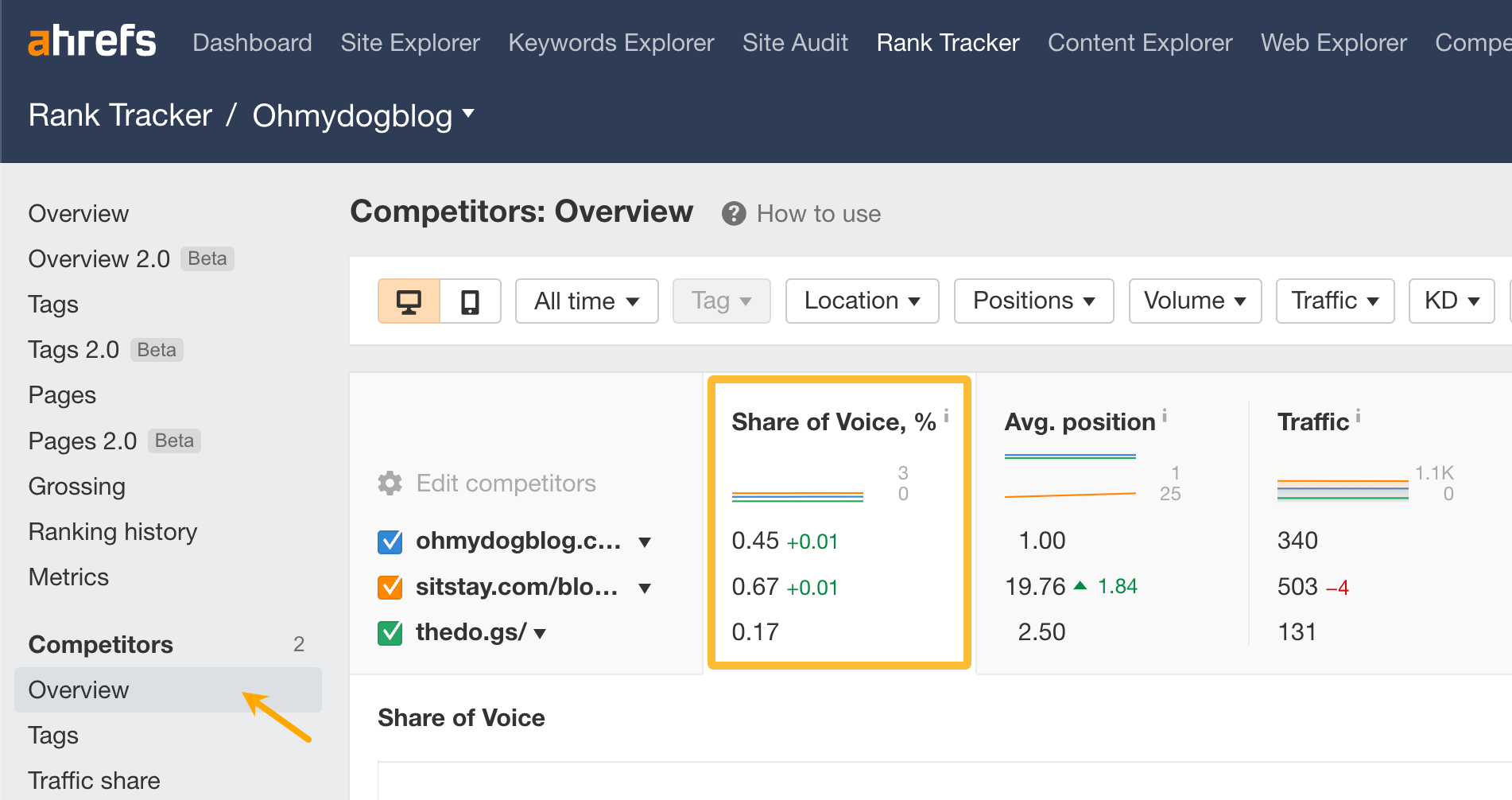

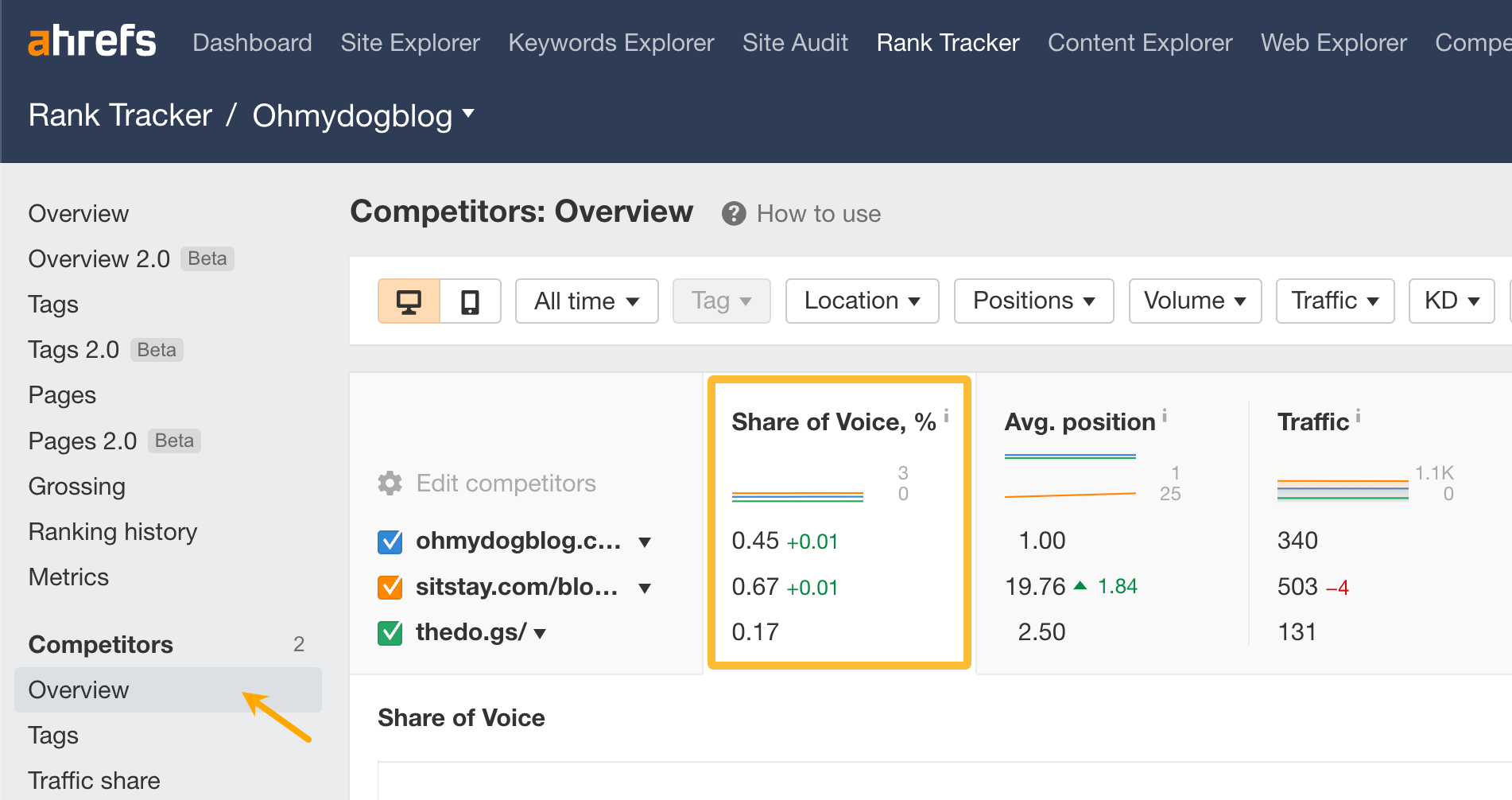

Now to compare your performance against competitors, just go to the Competitors report. The metric I recommend tracking is SOV (Share of Voice). It shows how many clicks go to your pages compared to competitors.

One of the key advantages of SOV is that it accounts for fluctuations in search volume. If your traffic drops while your SOV holds steady, the drop is most likely due to lower overall demand for those keywords rather than a decline in your SEO performance.
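A small worked example shows why SOV separates demand changes from ranking changes. This Python sketch assumes SOV is your estimated clicks divided by the total estimated clicks on the same tracked keywords; Rank Tracker's exact formula may differ, and the `share_of_voice` function and monthly click figures are hypothetical.

```python
def share_of_voice(your_clicks: float, total_clicks: float) -> float:
    """Percentage of all estimated clicks on the tracked keywords that go to you."""
    if total_clicks <= 0:
        return 0.0
    return 100.0 * your_clicks / total_clicks


# Hypothetical monthly estimates for the same tracked keyword set.
months = {
    "March": {"yours": 2_500, "total": 10_000},
    "April": {"yours": 1_500, "total": 6_000},  # overall search demand fell
}

for month, m in months.items():
    sov = share_of_voice(m["yours"], m["total"])
    print(f"{month}: {m['yours']} clicks, SOV {sov:.1f}%")
```

Here your clicks fall by 40%, but SOV stays at 25%, which points to lower search demand rather than lost rankings. If SOV had dropped too, competitors would likely be taking clicks from you.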

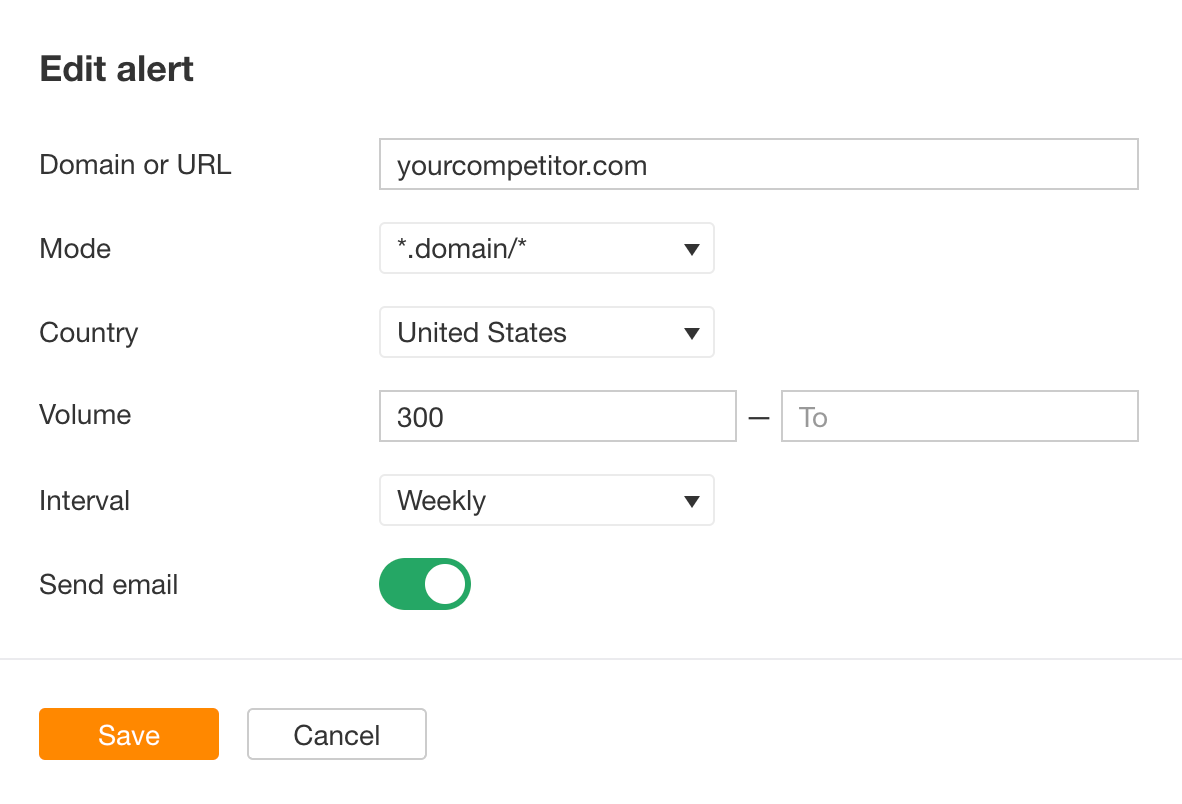

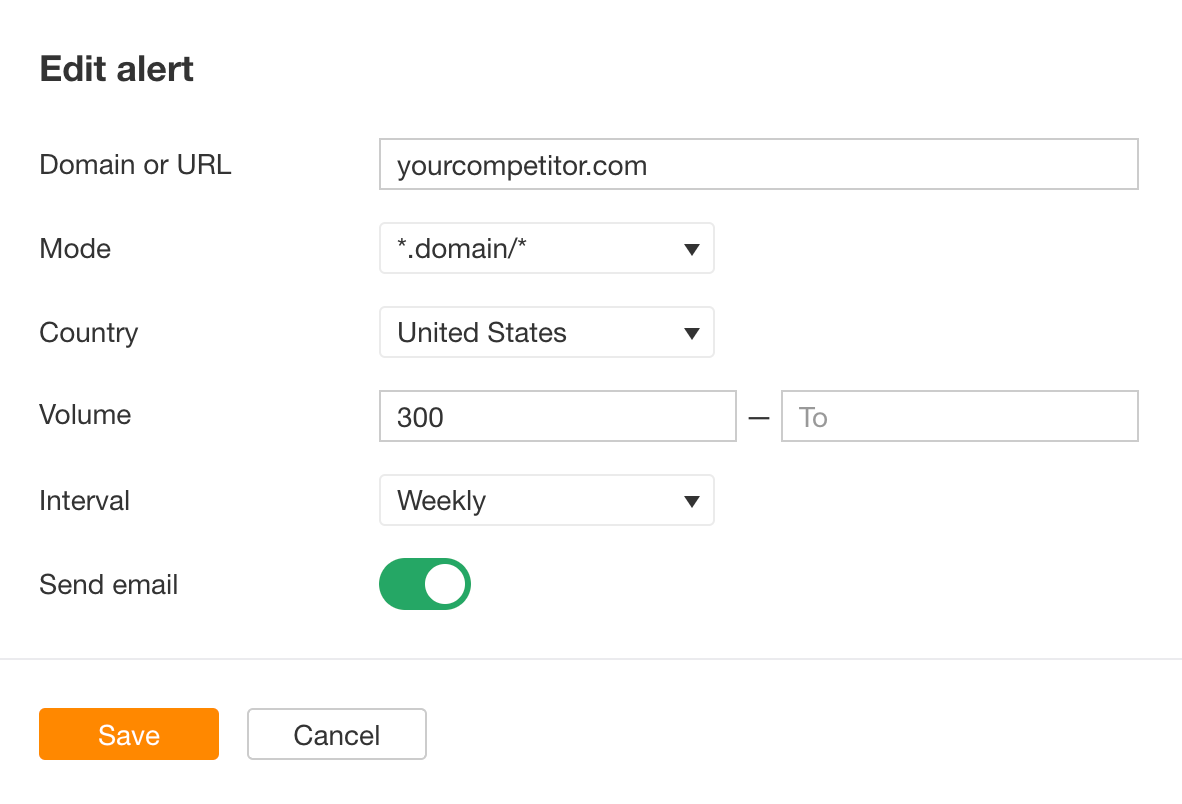

Beyond tracking rankings, you can also monitor your competitors’ keywords. Use a tool like Ahrefs Alerts to get notified whenever a competitor starts ranking for a new keyword.

Just go to the Alerts tool in Ahrefs and fill in the details.

There’s even more you can do with keywords and a bit more you should know to avoid some common mistakes.

1. Use keywords to find guest blogging opportunities

Guest blogging is the practice of writing and publishing a blog post on another person’s or company’s website.

It’s one of the most popular link building tactics, and it has other benefits, such as exposure to a new, targeted audience.

Here’s how to find relevant, high-quality sites to pitch:

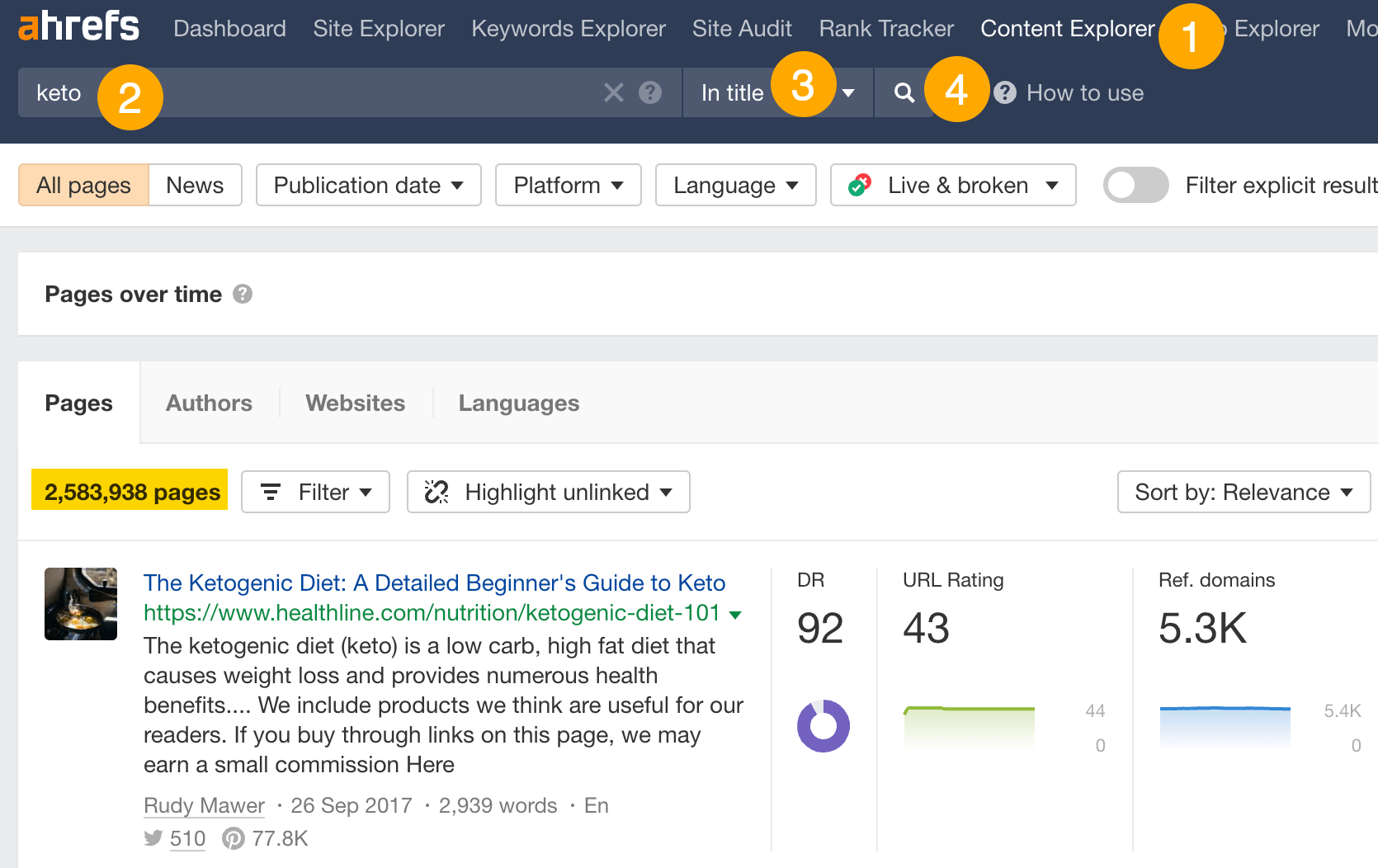

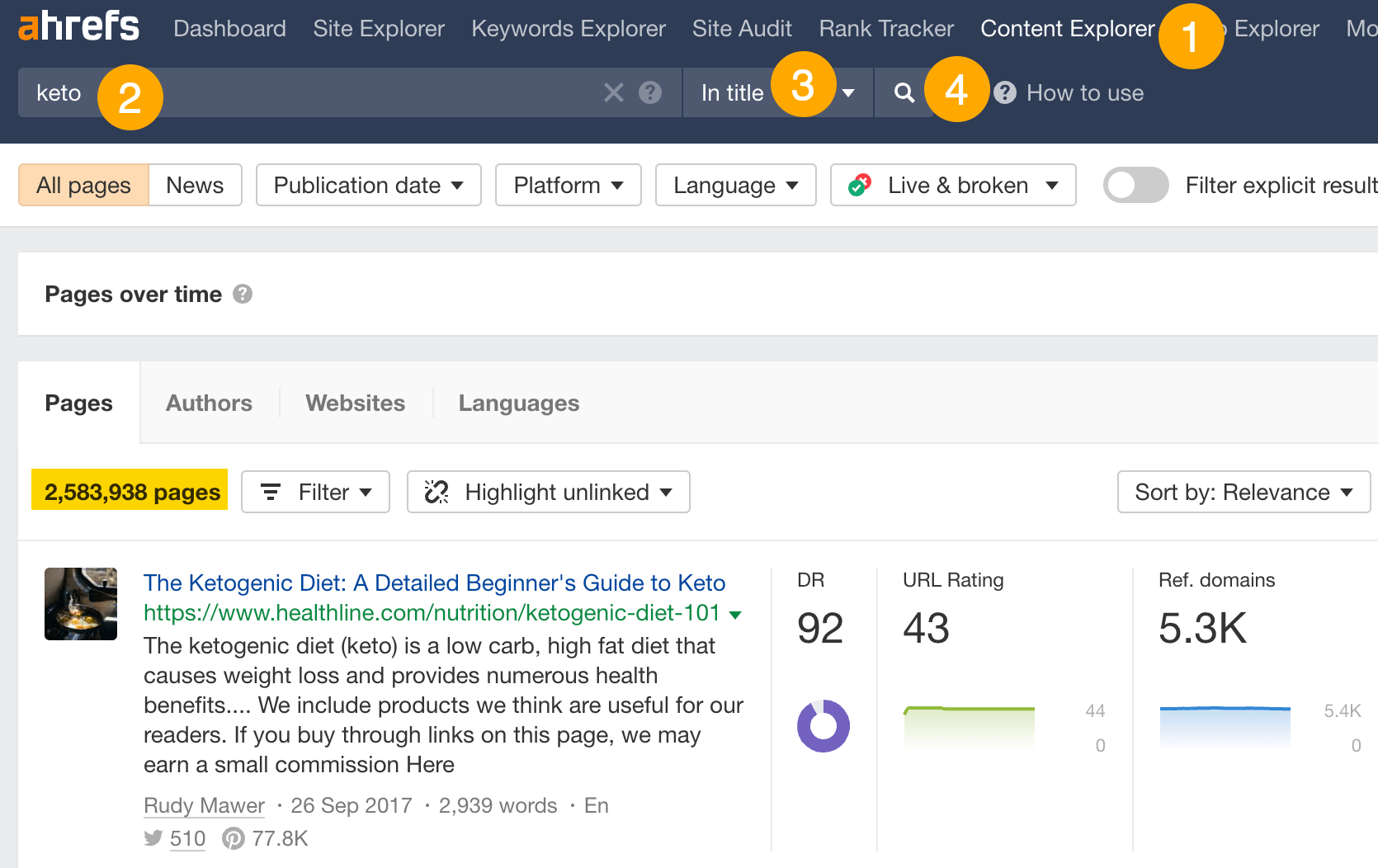

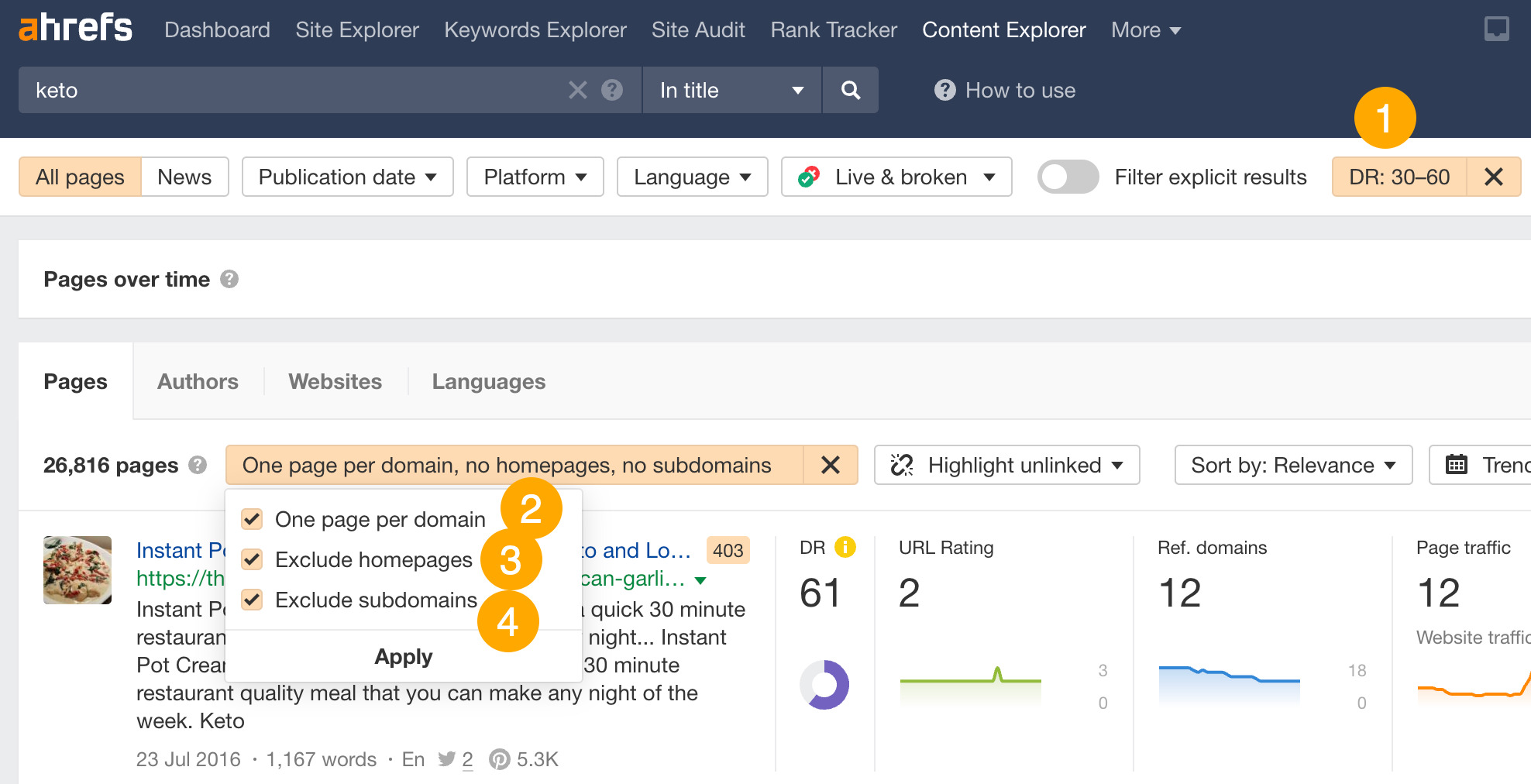

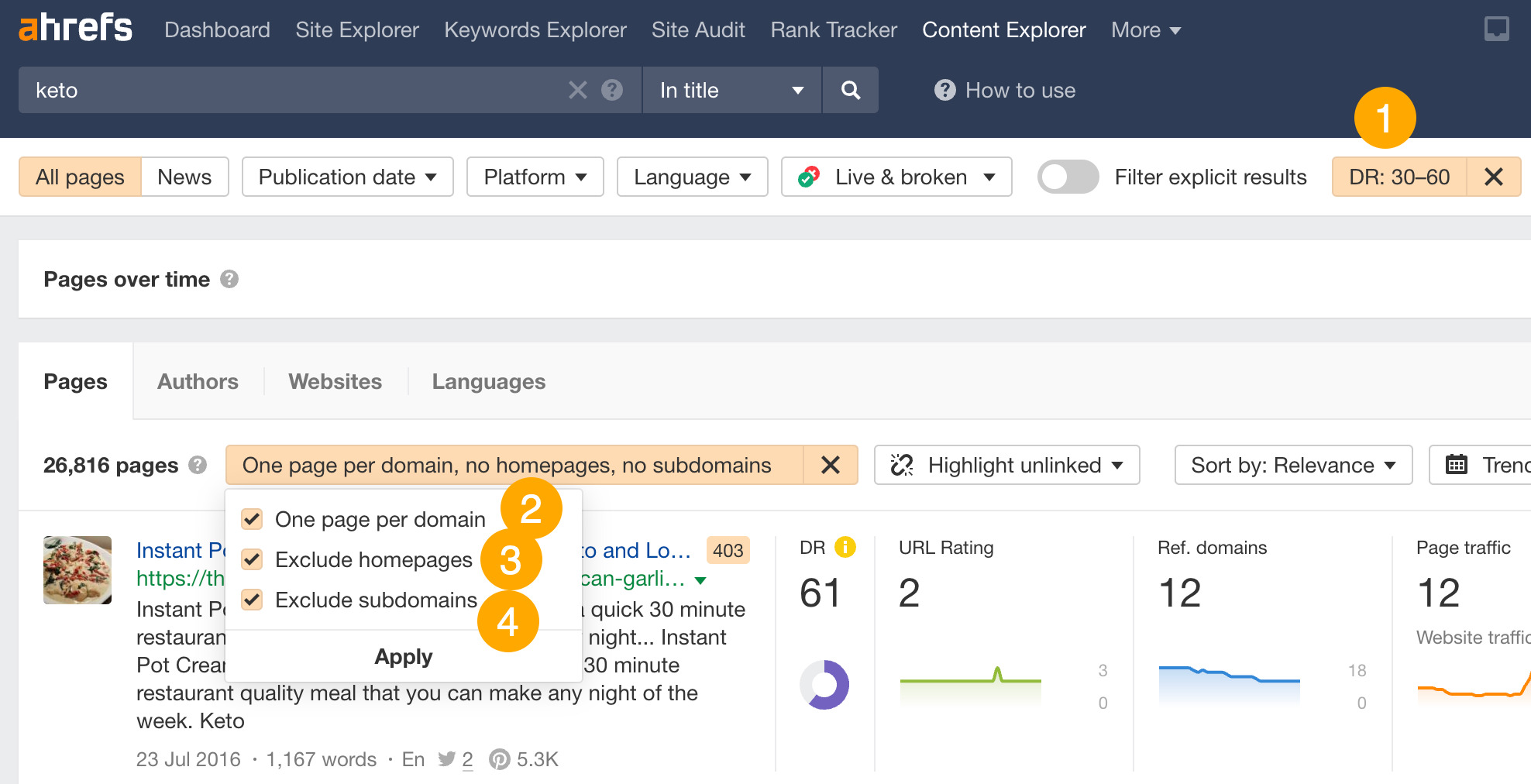

- Go to Ahrefs’ Content Explorer.

- Enter a broad keyword or phrase related to your niche.

- Select In title from the drop-down menu.

- Run the search.

Next, refine the list by applying these filters:

- Set Domain Rating (DR) from 30 to 60.

- Click the One page per domain filter.

- Click the Exclude homepages filter.

- Click the Exclude subdomains filter.

Finally, click on the Websites tab to see potential websites you could guest blog for.
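If you export the search results, the same refinement steps can be reproduced offline. This Python sketch assumes each result is a record with a URL and the site's DR; the `Result` type, helper functions, and sample URLs are hypothetical, and this is not Ahrefs' API. It applies the DR range, one-page-per-domain, homepage, and subdomain filters in plain code.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Result:
    url: str
    dr: int  # Domain Rating of the site hosting the page


def host(url: str) -> str:
    h = urlparse(url).hostname or ""
    return h[4:] if h.startswith("www.") else h


def is_homepage(url: str) -> bool:
    return urlparse(url).path in ("", "/")


def is_subdomain(url: str) -> bool:
    # Naive check: more than two labels (blog.site.example). Wrong for
    # suffixes like .co.uk; a public-suffix list would be more accurate.
    return host(url).count(".") > 1


def guest_post_prospects(results: list[Result], min_dr: int = 30, max_dr: int = 60) -> list[str]:
    seen: set[str] = set()
    sites = []
    for r in results:
        if not (min_dr <= r.dr <= max_dr):
            continue
        if is_homepage(r.url) or is_subdomain(r.url):
            continue
        domain = host(r.url)
        if domain in seen:  # keep only one page per domain
            continue
        seen.add(domain)
        sites.append(domain)
    return sites


results = [
    Result("https://www.alpha.example/blog/content-tips", 45),
    Result("https://alpha.example/another-post", 45),
    Result("https://beta.example/", 50),
    Result("https://blog.gamma.example/post", 40),
    Result("https://delta.example/guide", 75),
    Result("https://epsilon.example/how-to", 32),
]
print(guest_post_prospects(results))
```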

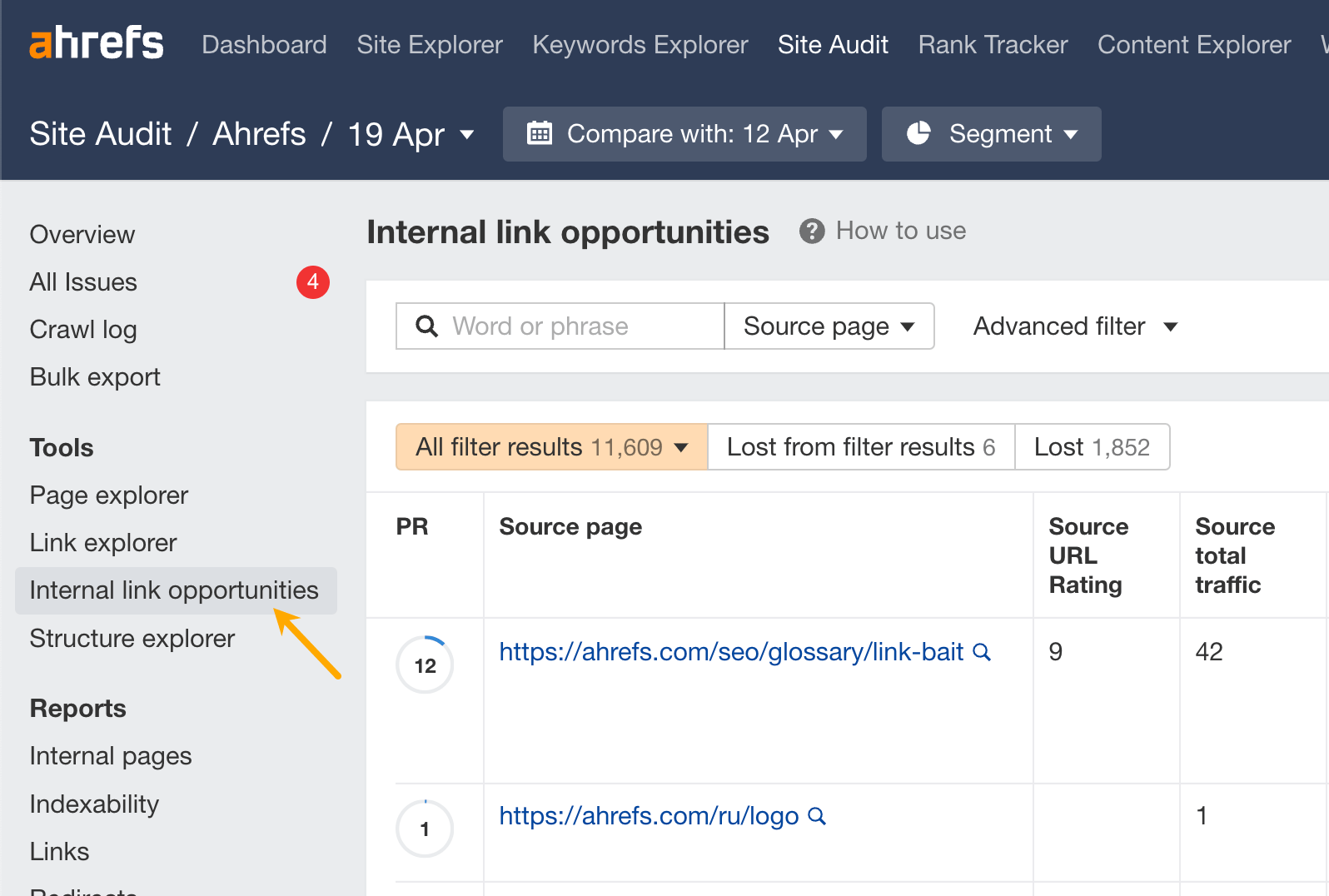

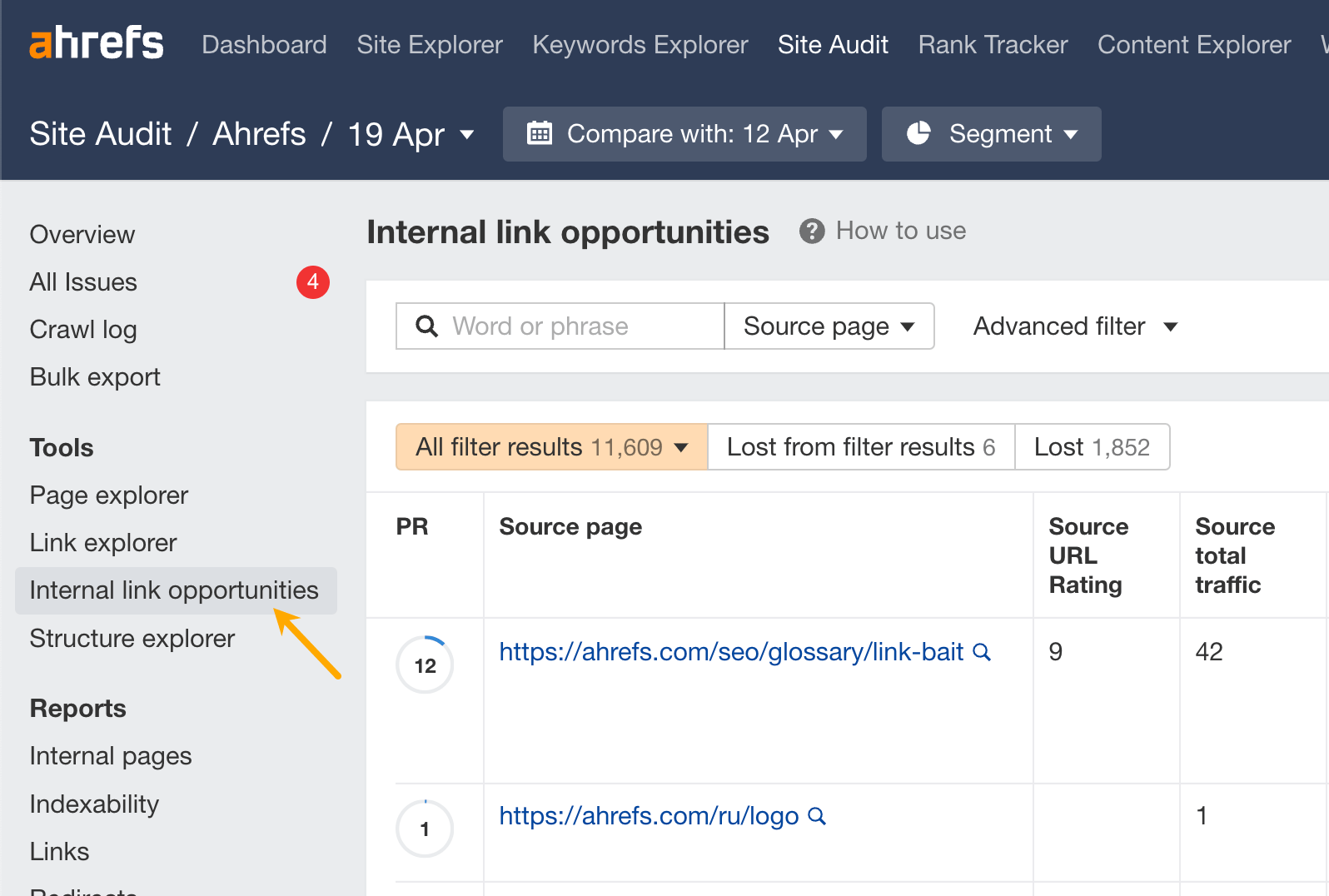

2. Use keywords to find internal link opportunities

Internal links take visitors from one page to another on your website. Their main purpose is to help visitors easily navigate your website, but they can also help boost SEO by aiding the flow of link equity.

Finding new internal link opportunities is also a time-consuming process if done manually, but you can identify them in bulk using Ahrefs’ Site Audit. The tool takes the top 10 keywords (by traffic) for each crawled page, then looks for mentions of those on your other crawled pages.

Click on the Internal link opportunities report in Site Audit.

You’ll see a bunch of suggestions on how to improve your internal linking using new links. The tool even suggests exactly where to place the internal link.
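The matching logic described for Site Audit (take each page's top keywords by traffic, then look for mentions on other pages) can be sketched in Python. This is a simplified reading of that description, not Ahrefs' implementation: it assumes you already have each page's text and its keywords with estimated traffic, and the `link_opportunities` function and sample pages are hypothetical.

```python
import re


def link_opportunities(pages: dict, top_n: int = 10) -> list[tuple[str, str, str]]:
    """Return (source_page, keyword, target_page) suggestions.

    pages maps a URL to {"text": str, "keywords": {keyword: traffic}}.
    """
    suggestions = []
    for target, data in pages.items():
        # Top N keywords for the target page, highest traffic first.
        top = sorted(data["keywords"], key=data["keywords"].get, reverse=True)[:top_n]
        for source, other in pages.items():
            if source == target:
                continue  # a page should not link to itself
            for kw in top:
                if re.search(r"\b" + re.escape(kw) + r"\b", other["text"], re.IGNORECASE):
                    suggestions.append((source, kw, target))
    return suggestions


pages = {
    "/keyword-research": {
        "text": "Keyword research starts with seed keywords.",
        "keywords": {"keyword research": 900, "seed keywords": 150},
    },
    "/link-building": {
        "text": "Before link building, do some keyword research.",
        "keywords": {"link building": 1200},
    },
}
for suggestion in link_opportunities(pages):
    print(suggestion)
```

A real tool would also skip pages that already link to the target and report where in the text the mention occurs.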

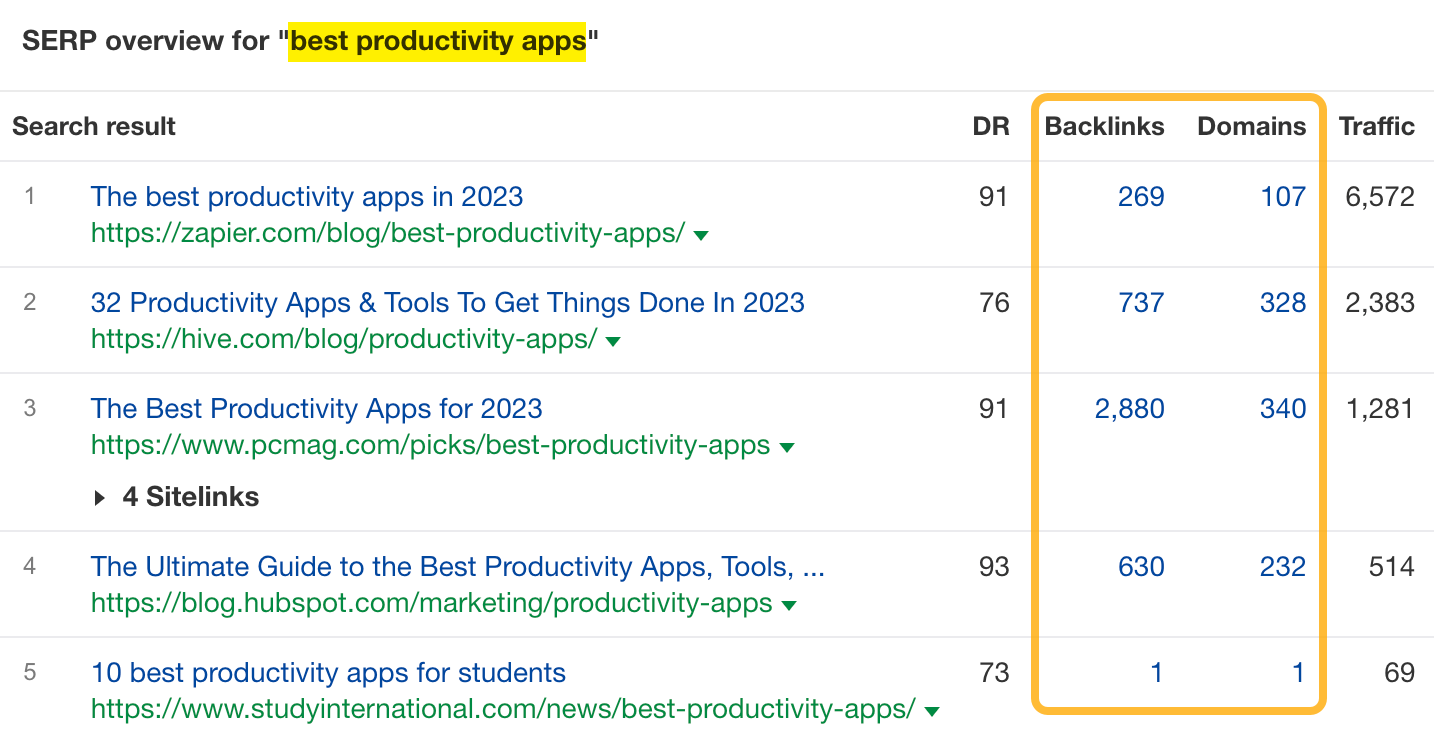

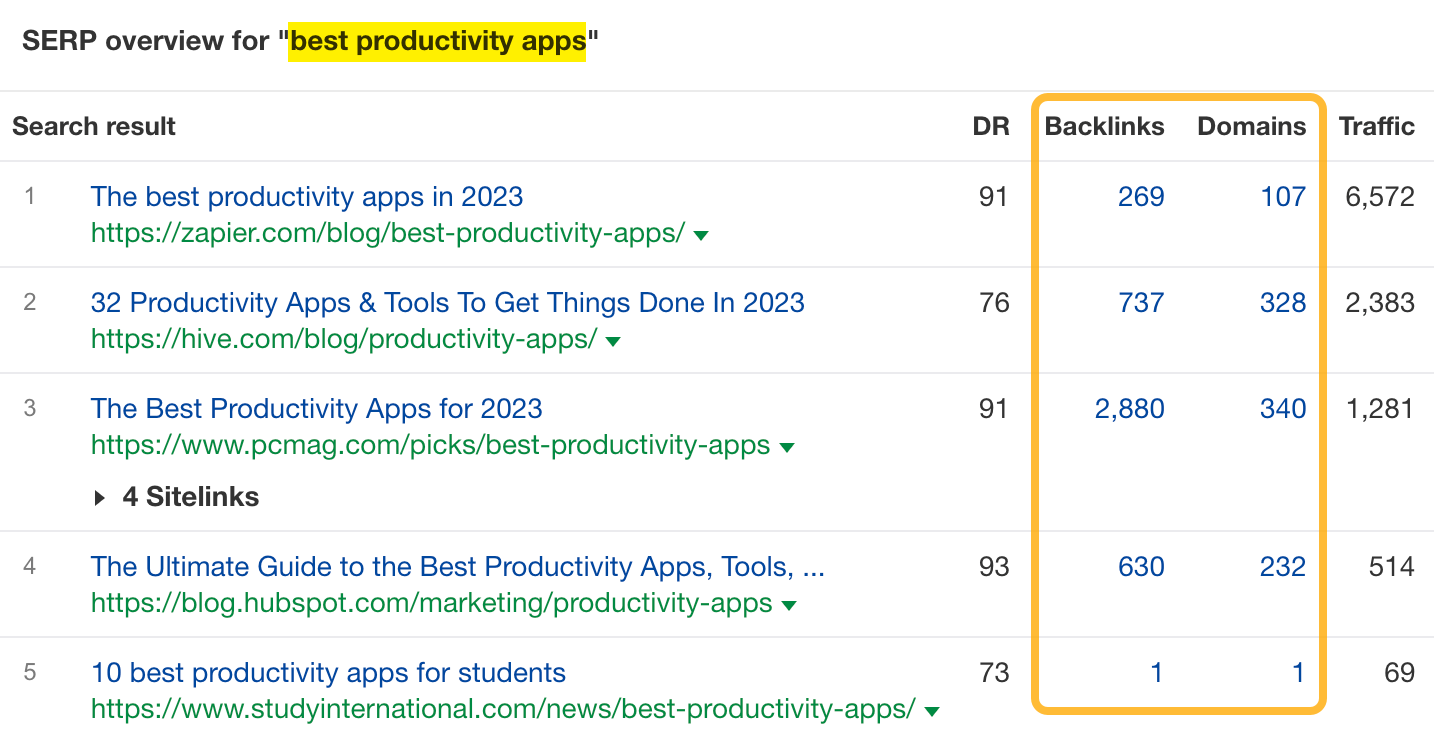

3. Use keywords to find link building opportunities

Link building is the process of getting other websites to link to pages on your website. Its purpose is to boost the “authority” of your pages in the eyes of Google and help your pages rank.

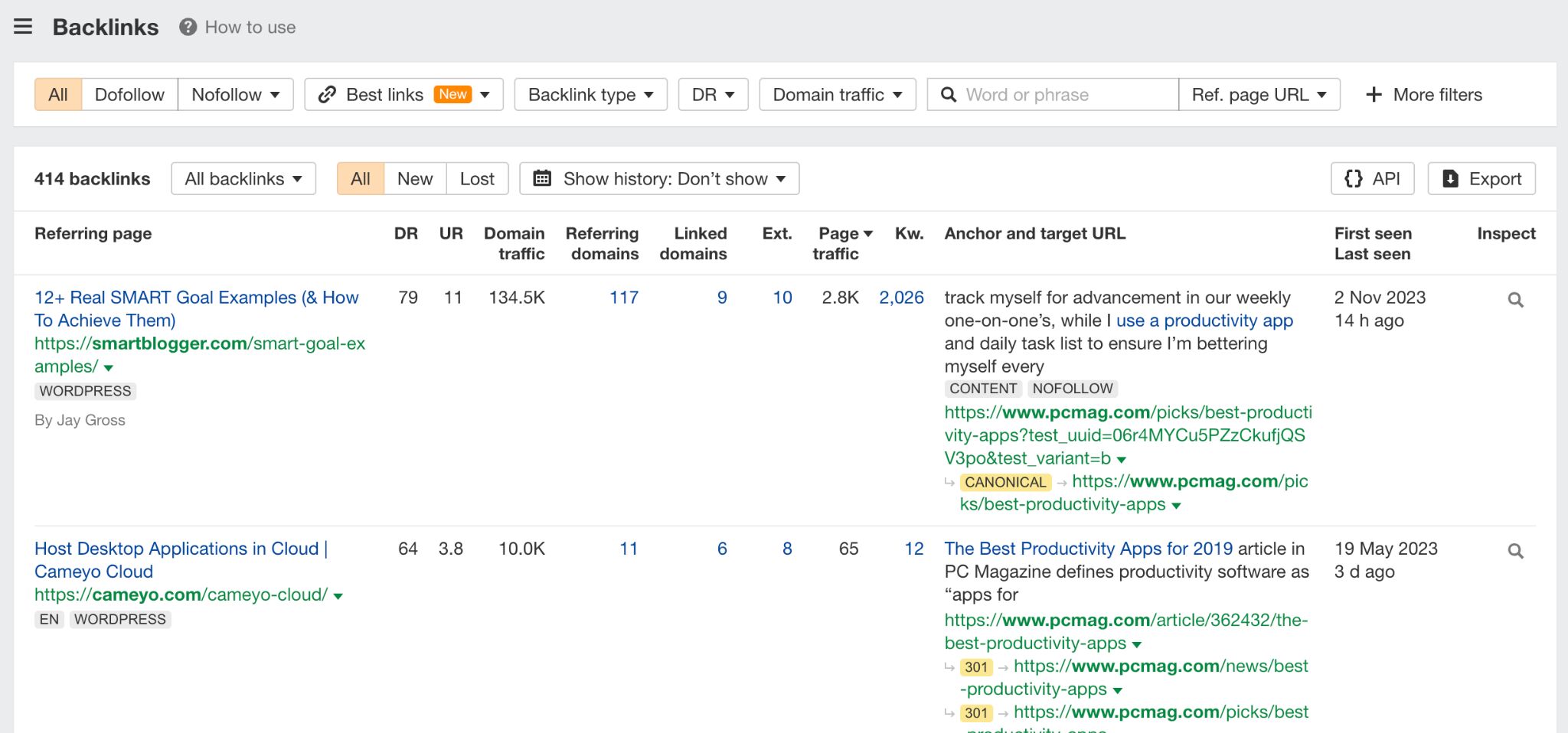

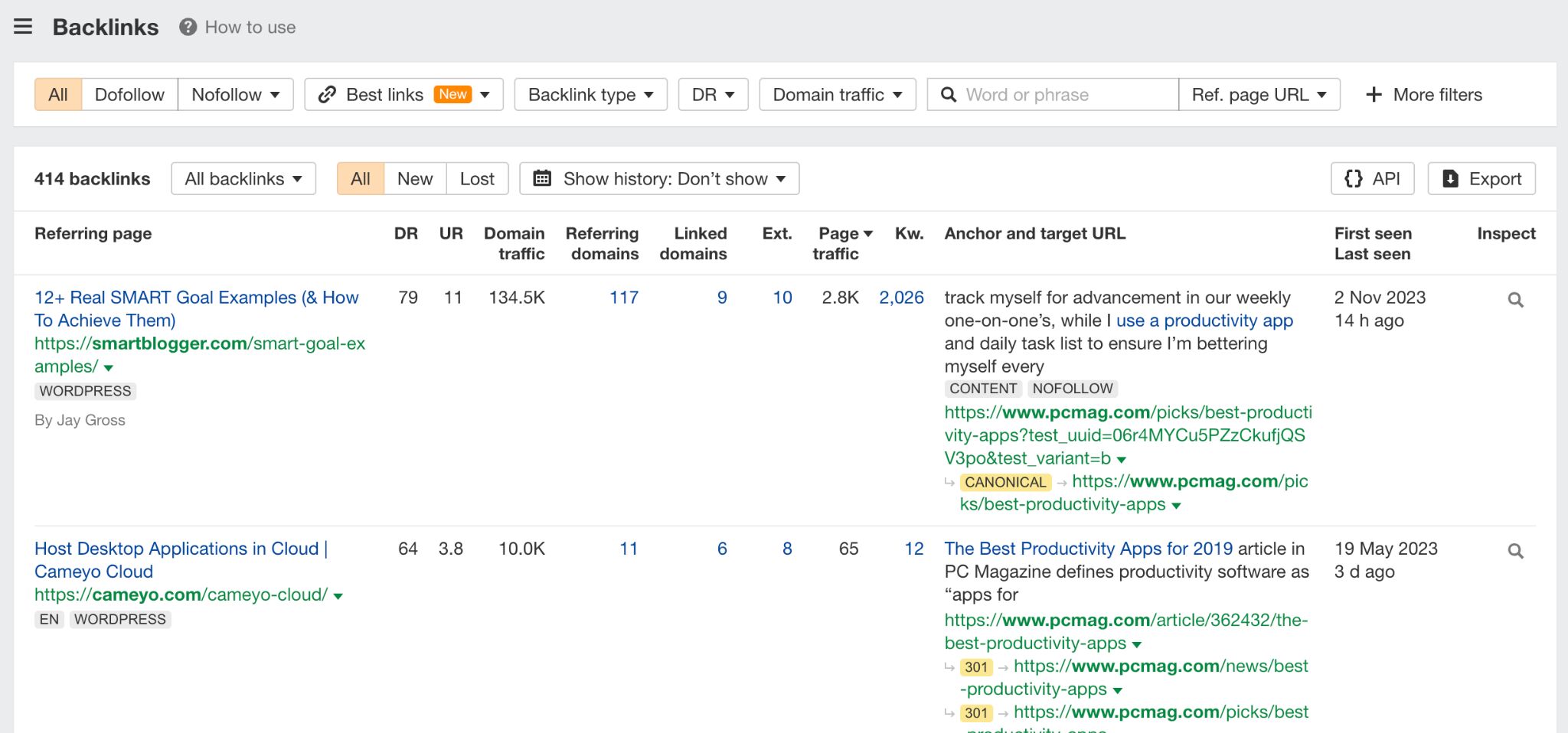

A good place to start is to pull up the top-ranking pages for your target keyword and research where they got their links from.

Put your keyword into Keywords Explorer and scroll down to the SERP overview. You’ll see the top-ranking pages and their number of backlinks (and linking domains).

Once you click on any of the backlink numbers, you’ll be redirected to a list of backlinks of a given page in Site Explorer.

From that point, the typical process involves identifying sites with the highest potential to boost your SEO and contacting their owners if you think they’d be willing to link to your content. We’re covering the details of this process and everything else you need to know to start with link building in this guide.

4. Avoid common keyword pitfalls

Here are four big don’ts of using keywords:

- Don’t use the same keyword excessively on a page. Repeating a keyword too frequently within a single page can lead to keyword stuffing, which is treated as spam by Google.

- Don’t use the same focus keyword across multiple pages. Each page on your website should have a unique focus keyword. Using the same keyword across multiple pages can lead to keyword cannibalization, where pages compete against each other in search results.

- Don’t sacrifice content quality for keyword usage. While keywords are essential for SEO, prioritize high-quality, informative content above all else. Don’t make your content unnatural or overly long by cramming in keywords. This won’t help you rank, and it makes the content worse for readers.

- Don’t use keywords just for the sake of using them. This means two things. First, don’t target keywords unrelated to your website or business; this will only bring you useless traffic. Second, don’t chase a keyword frequency target (often suggested by content optimization tools) by mentioning the keyword without adding any substance. SEO doesn’t work that way anymore.
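The second don't is easy to check mechanically. Assuming you keep a simple mapping of each URL to its chosen focus keyword (the `focus` mapping and `cannibalization_candidates` function are hypothetical), this Python sketch groups pages by normalized keyword and flags any keyword assigned to more than one page as a possible cannibalization risk.

```python
from collections import defaultdict


def cannibalization_candidates(focus: dict[str, str]) -> dict[str, list[str]]:
    """Map each focus keyword used by 2+ pages to the URLs that share it."""
    by_keyword: dict[str, list[str]] = defaultdict(list)
    for url, keyword in focus.items():
        # Normalize case and whitespace so "SEO Tips" and "seo  tips" match.
        by_keyword[" ".join(keyword.lower().split())].append(url)
    return {kw: urls for kw, urls in by_keyword.items() if len(urls) > 1}


focus = {
    "/seo-tips": "SEO tips",
    "/blog/quick-seo-tips": "seo  tips",
    "/keyword-research": "keyword research",
}
print(cannibalization_candidates(focus))
```

This only catches exact matches after normalization; close variants such as "SEO tip" vs. "SEO tips" would need a looser comparison.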

Final thoughts

This article focused on general SEO for text-based content. For using keywords in other types of content and SEO, see these guides:

SEO

Google Launches New ‘Saved Comparisons’ Feature For Analytics

Google announced a new tool for Analytics to streamline data comparisons.

The ‘saved comparisons’ feature allows you to save filtered user data segments for rapid side-by-side analysis.

Google states in an announcement:

“We’re launching saved comparisons to help you save time when comparing the user bases you care about.

Learn how you can do that without recreating the comparison every time!”

— Google Analytics (@googleanalytics) May 8, 2024

Google links to a help page that lists several benefits and use cases:

“Comparisons let you evaluate subsets of your data side by side. For example, you could compare data generated by Android devices to data generated by iOS devices.”

“In Google Analytics 4, comparisons take the place of segments in Universal Analytics.”

Saved Comparisons: How They Work

The new comparisons tool allows you to create customized filtered views of Google Analytics data based on dimensions like platform, country, traffic source, and custom audiences.

Comparisons can combine multiple conditions on these dimensions using logical operators.

For example, you could generate a comparison separating “Android OR iOS” traffic from web traffic. Or you could combine location data like “Country = Argentina OR Japan” with platform filters.
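To illustrate how such conditions combine, here is a minimal Python sketch that models a comparison as a set of dimension conditions, where the values within one condition are ORed and separate conditions are ANDed. That combination rule is an assumption made for illustration. This is plain Python over made-up rows, not the Google Analytics interface or the GA4 Data API, and the `matches` and `users_in` helpers are hypothetical.

```python
# A comparison maps a dimension to its allowed values (values are ORed);
# separate dimensions are ANDed together (assumption for this sketch).
Comparison = dict[str, set[str]]


def matches(row: dict[str, object], comparison: Comparison) -> bool:
    return all(row.get(dim) in allowed for dim, allowed in comparison.items())


def users_in(rows: list[dict[str, object]], comparison: Comparison) -> int:
    return sum(r["users"] for r in rows if matches(r, comparison))


rows = [
    {"platform": "Android", "country": "Japan", "users": 120},
    {"platform": "iOS", "country": "Argentina", "users": 80},
    {"platform": "iOS", "country": "Germany", "users": 50},
    {"platform": "web", "country": "Japan", "users": 200},
]

app_users = {"platform": {"Android", "iOS"}}  # "Android OR iOS"
web_users = {"platform": {"web"}}
app_in_ar_jp = {"platform": {"Android", "iOS"}, "country": {"Argentina", "Japan"}}

for name, comp in [("Android OR iOS", app_users), ("web", web_users),
                   ("app AND (Argentina OR Japan)", app_in_ar_jp)]:
    print(name, users_in(rows, comp))
```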

These customized comparison views can then be saved at the property level in Analytics.

Users with access can quickly apply saved comparisons to any report for efficient analysis without rebuilding filters.

Google’s documentation states:

“As an administrator or editor…you can save comparisons to your Google Analytics 4 property. Saved comparisons enable you and others with access to compare the user bases you care about without needing to recreate the comparisons each time.”

Rollout & Limitations

The saved comparisons feature is rolling out gradually. There’s a limit of 200 saved comparisons per property.

For more advanced filtering needs, such as sequences of user events, Google recommends creating a custom audience first and saving a comparison based on that audience definition.

Some reports may be incompatible if they don’t include the filtered dimensions used in a saved comparison. In that case, the documentation suggests choosing different dimensions or conditions for that report type.

Why SEJ Cares

The ability to create and apply saved comparisons addresses a time-consuming aspect of analytics work.

Analysts must view data through different lenses, segmenting by device, location, traffic source, and so on. Manually recreating these filtered comparisons for each report slows analysis down.

Any innovation streamlining common tasks is welcome in an arena where data teams are strapped for time.

How This Can Help You

Saved comparisons mean less time getting bogged down in filter recreation and more time for impactful analysis.

Here are a few key ways this could benefit your work:

- Save time by avoiding constant recreation of filters for common comparisons (e.g. mobile vs desktop, traffic sources, geo locations).

- Share saved comparisons with colleagues for consistent analysis views.

- Switch between comprehensive views and isolated comparisons with a single click.

- Break down conversions, engagement, audience origins, and more by your saved user segments.

- Use thoughtfully combined conditions to surface targeted segments (e.g. paid traffic for a certain product/location).

The new saved comparisons in Google Analytics may seem like an incremental change. However, simplifying workflows and reducing time spent on mundane tasks can boost productivity in a big way.

Featured Image: wan wei/Shutterstock
